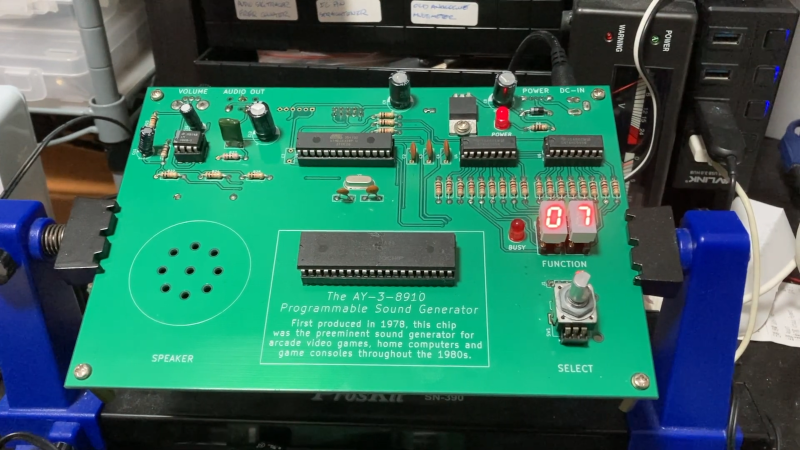

Talking computers are nothing special these days. But in the old days, a computer that could speak was quite the novelty. Many computers from the 1970s and 1980s used an AY-3-8910 chip and [InazumaDenki] has been playing with one of these venerable chips. You can see (and hear) the results in the video below.

The chip uses PCM, and there are different ways to store and play sounds. The video shows how different they are and even looks at the output on the oscilloscope. The chip has three voices and was produced by General Instrument, the company that initially made PIC microcontrollers. It found its way into many classic arcade games, game consoles like the Intellivision and Vectrex, and home computers like the MSX and ZX Spectrum. Sound cards for the TRS-80 Color Computer and the Apple II used these chips. The Atari ST used a variant from Yamaha, the YM2149F.

There’s some code for an ATmega, and the video says it is part one, so we expect to see more videos on this chip soon.

General Instrument also made dedicated speech chips, and some of them are still around in emulated form. In fact, you can emulate the AY-3-8910 with little more than a Raspberry Pi.
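Emulating the AY-3-8910's tone channels is less daunting than it sounds. As a minimal sketch, assuming the commonly cited relation f = clock / (16 × TP), where TP is a 12-bit tone period split across a fine register (low 8 bits, R0 for channel A) and a coarse register (high 4 bits, R1), the Python below computes register values for a target pitch and naively renders one square-wave channel. The function names are hypothetical, and a real emulator would also model the noise generator, envelope, mixer, and logarithmic volume, and would band-limit its output to avoid aliasing.

```python
def tone_registers(freq_hz: float, clock_hz: float) -> tuple[int, int]:
    """Return (fine, coarse) values for one tone channel, e.g. R0/R1 for channel A."""
    period = round(clock_hz / (16 * freq_hz))
    period = max(1, min(period, 0xFFF))  # the tone period is 12 bits wide
    return period & 0xFF, (period >> 8) & 0x0F


def render_tone(period: int, clock_hz: float, sample_rate: int, n: int) -> list[int]:
    """Naive (aliasing) 0/1 square wave for one channel at the given tone period."""
    freq = clock_hz / (16 * period)
    samples = []
    for i in range(n):
        # Position within the current cycle, 0.0 <= phase < 1.0
        phase = (i * freq / sample_rate) % 1.0
        samples.append(1 if phase < 0.5 else 0)
    return samples
```

For example, with a hypothetical 1 MHz clock, an A4 of 440 Hz needs a period of 142, which comes out at about 440.1 Hz. Because the period is an integer, the relative pitch error grows as notes get higher and periods get smaller.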

Always thought it was amazing how much money it took to produce a single word of synth speech in Berzerk back in 1980:

https://en.wikipedia.org/wiki/Talk:Berzerk_(video_game)#Features_section:_Synthesis_&_Compression?

…I’m guessing that this is cheaper and easier nowadays, even if you’re using period hardware for the final output.

Reading between the lines: that would have been how much the speech synthesis manufacturer charged them to process each word into phonemes. There’s no way they spent that much per machine on hardware; e.g., the similar-tech Speak and Spell sold for $50 and had 200-odd words.

Oh yes, it was the development cost, not the hardware cost: hiring some guy to mess around with a keyboard until he manually modulated some notes to kinda sound like words. Not the correct way to do it anymore ;)

That really is remarkable. Looking at the specific algorithm, it seems you should be able to do the encoding yourself today, even in, say, Python or something on your PC. It’s funky: definitely not LPC as used in the “Speak & Spell”, or the digital filter used in the “SP0256”.

Here is some discussion of the gory details of the chip (the SSi TSI S14001A, which was apparently first used in a talking calculator for the disabled), if you’re curious:

http://www.vintagecalculators.com/html/development_of_the_tsi_speech-.html

(further) and apparently:

“The speech technology was licensed (I believe with a 3 year exclusive deal) from Forrest S. Mozer, a professor of atomic physics (speech was a spare time thing for him) at Berkeley. Forrest Mozer would encode the speech in his basement laboratory using his novel form of speech encoding (the encoding process apparently involved several minicomputers running FFTs and a spectrum analyzer), and then General Instruments would make the resulting speech data into a mask ROM to be used with the TSI chip.”

ref: https://archive.org/stream/pdfy-QPCSwTWiFz1u9WU_/david_djvu.txt

Oh wow I didn’t know that angle, thanks! I kinda assumed it was crafted manually, but it sounds like a much more formal process than I expected.

Really good stuff :-)

Thanks for this! I designed an S-100 Sounding Board about that time using these chips.

“Would you like to play a game of chess?”

“It found its way into many … home computers … like … ZX Spectrum”

AFAIK the ZX Spectrum does not contain an AY-3-8910.

The originals did not. The later models I think got improved sound, so maybe them?

I think I saw a Currah μSpeech (en.wikipedia.org/wiki/Currah#Currah_%CE%BCSpeech_for_the_ZX_Spectrum) demonstrated, but from that article, it uses a different chip.

The 128K models used an AY-3-8912 — newer version, I think.

ST Speech is quite famous for doing speech synthesis on an AY-3-8910 derivative (YM2149)

http://notebook.zoeblade.com/ST_Speech.html

Had a TI-99 with a voice synth module. It was pretty nifty.

Oh yes!

I experimented with the TMS5220 chip in those days, ripping the text-to-speech from the terminal emulator module. It was a lot of fun…

And while I worked at Texas Instruments, I created portable equipment for generating the linear predictive coding (LPC) data that those synthesis chips used. It was some of the most fun engineering I did in my entire career.
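For readers curious what generating LPC data involves, the core step is fitting an all-pole predictor to each short frame of speech. Below is a minimal sketch of the textbook autocorrelation method with the Levinson-Durbin recursion, which yields both predictor coefficients and reflection coefficients. As we understand it, chips like the TMS5220 drive a lattice filter from quantized reflection coefficients, but their sign conventions, frame timing, pitch and energy parameters, and quantization tables are not modeled here; the function names are hypothetical.

```python
def autocorrelation(x: list[float], max_lag: int) -> list[float]:
    """Biased autocorrelation r[0..max_lag] of one (already windowed) frame."""
    n = len(x)
    return [sum(x[i] * x[i + k] for i in range(n - k)) for k in range(max_lag + 1)]


def levinson_durbin(r: list[float], order: int) -> tuple[list[float], list[float], float]:
    """Solve the LPC normal equations by Levinson-Durbin recursion.

    Returns (a, k, err): x[n] is predicted as sum(a[j-1] * x[n-j] for j in 1..order),
    k holds the reflection coefficients, and err is the final prediction error energy.
    """
    if r[0] <= 0:
        raise ValueError("frame has no energy")
    a = [0.0] * (order + 1)  # a[0] unused; a[1..i] are the current predictor taps
    k = [0.0] * (order + 1)
    err = r[0]
    for i in range(1, order + 1):
        # Part of r[i] not already explained by the order-(i-1) predictor
        acc = r[i] - sum(a[j] * r[i - j] for j in range(1, i))
        k[i] = acc / err
        prev = a[:]
        a[i] = k[i]
        for j in range(1, i):
            a[j] = prev[j] - k[i] * prev[i - j]
        err *= 1.0 - k[i] * k[i]  # error energy shrinks with each added pole
    return a[1:], k[1:], err
```

A real encoder would split speech into short frames (tens of milliseconds), window each frame, run something like this per frame (order 10 for a 10-pole filter), then estimate pitch, voicing, and energy and quantize everything into the chip's frame format.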

“Shall we play a game?”

Somewhere in the dark dungeon of my basement is a Hearsay 1000 for the Commodore 64. I found it to be a novelty at best, so getting it was a bit anticlimactic, but for handicapped users, I could see all kinds of cool uses for it.

“The chip uses PCM” – no, it does not; it’s a fairly simple PSG (programmable sound generator). What [InazumaDenki] has done is use it for PCM, comparable to how the PC speaker could be used to play samples.
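To illustrate the trick described here: on a PSG like the AY-3-8910, one common approach is to switch off a channel's tone and noise in the mixer (R7) so that the channel's amplitude register (R8 for channel A) acts as a crude 4-bit DAC, then rewrite that register at the sample rate. The sketch below converts unsigned 8-bit PCM into the nearest 4-bit level. It assumes an idealized logarithmic volume curve of 3 dB per step; the real chip's curve differs somewhat, so treat the table as a placeholder. It is not necessarily how [InazumaDenki]'s ATmega code does it, and the function names are hypothetical.

```python
MIXER_REG = 7         # R7: tone/noise disable bits (1 = off) plus I/O direction bits
AMPLITUDE_A_REG = 8   # R8: channel A level in bits 0-3; bit 4 would select the envelope
MIXER_ALL_OFF = 0x3F  # tones and noise off on A, B and C; I/O bits 6-7 left as inputs


def ay_level_table(step_db: float = 3.0) -> list[float]:
    """Relative output amplitude of levels 0..15, assuming a uniform dB step.

    Level 0 is treated as silence; level 15 is full scale (1.0).
    """
    return [0.0] + [10 ** (-(15 - level) * step_db / 20) for level in range(1, 16)]


def pcm_to_ay_levels(samples: bytes, step_db: float = 3.0) -> list[int]:
    """Map unsigned 8-bit PCM (0..255) to the nearest 4-bit AY amplitude level."""
    table = ay_level_table(step_db)
    levels = []
    for s in samples:
        target = s / 255.0
        # Nearest match in linear amplitude; ties go to the lower level.
        levels.append(min(range(16), key=lambda lvl: abs(table[lvl] - target)))
    return levels


def playback_register_writes(levels: list[int]) -> list[tuple[int, int]]:
    """Register writes for channel-A playback: one mixer setup, then one level per sample."""
    return [(MIXER_REG, MIXER_ALL_OFF)] + [(AMPLITUDE_A_REG, lvl & 0x0F) for lvl in levels]
```

At, say, an 8 kHz sample rate the host would have to issue one amplitude write every 125 µs, so the timing burden falls entirely on the microcontroller. On a real machine, a player would also preserve whatever the host needs in the mixer's I/O bits rather than overwriting them.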

Back in the early 70’s, I proposed in EET class using 10 analog synth words to “speak”: I wanted just numbers to be spoken from a digital voltmeter so I didn’t have to look away from an easy-to-slide-off ball-tip test probe. Instead, I sharpened hardened steel to make a probe that won’t slip or bridge traces. Radio Shack had one years ago, but they are an oddity.

One HAD article from ’14 comes up, Arduino-powered of course. I need a multimeter, not just volts. It’s not a blind accessibility thing; it’s about positioning a meter so I can see it without it falling, leads attached, whilst poking around in a furnace, under a car’s dash, something overhead, dark spaces lit only by one flashlight, just about any place other than a flat workbench. Also, having to bob my head repeatedly between a row of pins and the meter can be tiring.

The only thing better would be AR glasses display.
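The talking-meter idea above maps neatly onto a small fixed vocabulary: ten digit words plus a handful of extras. Here is a minimal sketch, assuming the meter exposes its reading as the display string and that each word is a pre-recorded (or pre-encoded) clip; the clip names and function name are hypothetical. Working from the display string rather than a float avoids floating-point formatting surprises.

```python
DIGIT_WORDS = ["zero", "one", "two", "three", "four",
               "five", "six", "seven", "eight", "nine"]
EXTRA_WORDS = {".": "point", "-": "minus"}


def reading_to_clips(display: str, unit: str) -> list[str]:
    """Turn a meter display string like "-12.07" into clip names to play in order."""
    clips = []
    for ch in display.strip():
        if ch in "0123456789":
            clips.append(DIGIT_WORDS[int(ch)])
        elif ch in EXTRA_WORDS:
            clips.append(EXTRA_WORDS[ch])
        else:
            # e.g. an "OL" overload indication would need its own clip
            raise ValueError(f"no clip for character {ch!r}")
    clips.append(unit)
    return clips
```

A multimeter version would add unit clips such as "ohms" and "amps" and a special case for overload displays.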

There are recordings on YouTube of an IBM 7094 mainframe “singing” the song Daisy, Daisy. As I understand it, this wasn’t digital speech synthesis, but analog, using a rubber/synthetic membrane of some sort. It starts with a slightly ropey-sounding monophonic melody, which had me thinking “huh, primitive, but I suppose good for 1961…”, before adding percussion and polyphonic harmonies (“wow, better audio than my Sinclair 48K had 20+ years later…”) and finally the synthesized speech, which is frankly amazing for the technology and time period.

https://youtu.be/41U78QP8nBk

1970s synth speech reminds me of Kraftwerk.

That’s cool!

A few years ago I recreated the “talk back” board for the ZX81 using a General Instrument SP0256.

https://www.sinclairzxworld.com/viewtopic.php?t=3781

Not nearly as much work as this project.

But fun to get my ZX81 to say “I am not a robot”

I wrote some text-to-speech software for fun back in VB6 times. It was very easy because in Italian you just need to pronounce the letters as they are written, apart from a few well-defined cases of letter groups. I recorded the sounds one by one and I was done. It didn’t sound natural, as it didn’t know where to place the stress in long words, but it worked.
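The rule-based approach described above can be sketched as a greedy longest match over letter groups: try the longest special group first, fall back to a single letter pronounced as written, then look up a recorded clip for each resulting sound. The table below is a hypothetical, simplified subset of Italian spelling rules. It ignores stress, as the comment notes, and exceptions such as the pronounced i in words like farmacia or the silent i after “gli” in famiglia. The phoneme labels are IPA-style strings chosen for illustration, and the function names are hypothetical.

```python
# Hypothetical, simplified letter-group table: spelling -> sequence of sound labels
GROUPS = {
    "gli": ("ʎ", "i"), "gn": ("ɲ",),
    "sci": ("ʃ", "i"), "sce": ("ʃ", "e"),
    "chi": ("k", "i"), "che": ("k", "e"),
    "ghi": ("g", "i"), "ghe": ("g", "e"),
    "cia": ("tʃ", "a"), "cio": ("tʃ", "o"), "ciu": ("tʃ", "u"),
    "gia": ("dʒ", "a"), "gio": ("dʒ", "o"), "giu": ("dʒ", "u"),
    "ci": ("tʃ", "i"), "ce": ("tʃ", "e"),
    "gi": ("dʒ", "i"), "ge": ("dʒ", "e"),
    "c": ("k",), "q": ("k",), "h": (),  # h is silent
}
MAX_GROUP = max(len(g) for g in GROUPS)


def to_phonemes(word: str) -> list[str]:
    """Greedy longest-match conversion of one Italian word into sound labels."""
    word = word.lower()
    out: list[str] = []
    i = 0
    while i < len(word):
        for size in range(min(MAX_GROUP, len(word) - i), 0, -1):
            chunk = word[i:i + size]
            if chunk in GROUPS:
                out.extend(GROUPS[chunk])
                i += size
                break
        else:
            # Any other letter is assumed to be pronounced as written.
            out.append(word[i])
            i += 1
    return out


def concatenate(phonemes: list[str], recordings: dict[str, bytes]) -> bytes:
    """Join raw recorded clips (assumed to share one audio format) into one buffer."""
    return b"".join(recordings[p] for p in phonemes)
```

For example, to_phonemes("gnocchi") gives ["ɲ", "o", "k", "k", "i"], where the doubled k stands in for the geminate consonant.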