Brain-to-speech interfaces have promised for years to help paralyzed individuals communicate. Unfortunately, many systems have suffered from significant latency, which has left them lacking somewhat in the practicality stakes.

A team of researchers from UC Berkeley and UC San Francisco has been working on the problem and has made significant strides in capability. The team's new system offers near-real-time speech, capturing brain signals and synthesizing intelligible audio with far less delay than earlier approaches.

New Capability

The aim of the work was to create more naturalistic speech using a brain implant and a voice synthesizer. While this technology has been pursued before, it has faced serious latency issues, with delays of around eight seconds to decode signals and produce an audible sentence. New techniques were needed to slash the delay between a user trying to “speak” and the hardware outputting the synthesized voice.

The researchers' implant samples data from the speech sensorimotor cortex of the brain, the area that controls the mechanical hardware of speech: the face, the vocal cords, and all the other body parts that help us vocalize. It captures signals via a high-density electrode array surgically placed on the surface of the brain. The captured data is then passed to an AI model that works out how to turn those signals into the right audio output to create speech. “We are essentially intercepting signals where the thought is translated into articulation and in the middle of that motor control,” said Cheol Jun Cho, a Ph.D. student at UC Berkeley. “So what we’re decoding is after a thought has happened, after we’ve decided what to say, after we’ve decided what words to use, and how to move our vocal-tract muscles.”

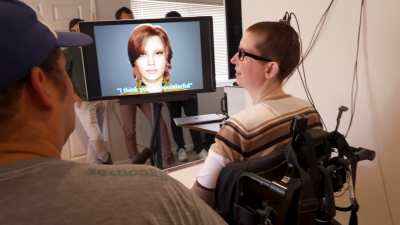

The AI model had to be trained to perform this role. This was achieved by having the subject, Ann, look at prompts and attempt to “speak” the phrases. A stroke left Ann paralyzed and unable to speak. However, when she attempts to speak, the relevant regions of her brain still light up with activity, and sampling this activity enabled the AI to correlate certain brain signals with intended speech. Because Ann can no longer vocalize, though, there was no target audio for the AI to match the brain data against. Instead, the researchers used a text-to-speech system to generate simulated target audio for training. “We also used Ann’s pre-injury voice, so when we decode the output, it sounds more like her,” explains Cho. A recording of Ann speaking at her wedding provided source material to personalize the synthesized speech so that it sounds more like her original voice.
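The article doesn't say how the TTS target audio was lined up in time with Ann's neural recordings, which will rarely share the same timing. Here is a minimal sketch of the general idea. It assumes the simplest possible approach, which is not anything the study reports: uniformly stretch a one-dimensional target feature track to the length of the neural recording, then pair the frames. The function names `stretch_to_length` and `make_training_pairs` are hypothetical.

```python
from typing import List, Sequence, Tuple, TypeVar

T = TypeVar("T")


def stretch_to_length(frames: Sequence[float], target_len: int) -> List[float]:
    """Linearly resample a 1-D feature track to target_len frames.

    Hypothetical simplification: uniform time-stretching, not the study's method.
    """
    if not frames:
        raise ValueError("frames must be non-empty")
    if target_len <= 1 or len(frames) == 1:
        return [frames[0]] * target_len
    scale = (len(frames) - 1) / (target_len - 1)
    out = []
    for i in range(target_len):
        pos = i * scale  # fractional position in the source track
        lo = int(pos)
        hi = min(lo + 1, len(frames) - 1)
        frac = pos - lo
        out.append(frames[lo] * (1 - frac) + frames[hi] * frac)
    return out


def make_training_pairs(
    neural_frames: Sequence[T], tts_frames: Sequence[float]
) -> List[Tuple[T, float]]:
    """Pair each neural frame with a target value from the stretched TTS features."""
    targets = stretch_to_length(tts_frames, len(neural_frames))
    return list(zip(neural_frames, targets))


pairs = make_training_pairs(["n0", "n1", "n2"], [0.0, 1.0])
print(pairs)  # [('n0', 0.0), ('n1', 0.5), ('n2', 1.0)]
```

Real acoustic features are multi-dimensional. Uniform stretching also ignores changes in speaking rate within a sentence, so a practical system would likely need a more flexible alignment.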

To measure the performance of the new system, the team compared the time at which the system began generating speech with the first indications of speech intent in Ann’s brain signals. “We can see relative to that intent signal, within one second, we are getting the first sound out,” said Gopala Anumanchipalli, one of the researchers involved in the study. “And the device can continuously decode speech, so Ann can keep speaking without interruption.” Crucially, this speedier method didn’t compromise accuracy: it decoded just as well as previous, slower systems.
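Here is a hypothetical sketch that makes the intent-to-sound comparison concrete. It detects both onsets by simple thresholding and subtracts the two times. The thresholds, sample rates, and function names are illustrative assumptions, not details from the study.

```python
from typing import Optional, Sequence


def first_onset(signal: Sequence[float], threshold: float, rate_hz: float) -> Optional[float]:
    """Time in seconds of the first sample whose magnitude exceeds threshold, or None."""
    for i, value in enumerate(signal):
        if abs(value) > threshold:
            return i / rate_hz
    return None


def intent_to_sound_latency(
    neural: Sequence[float], neural_rate_hz: float,
    audio: Sequence[float], audio_rate_hz: float,
    neural_threshold: float = 1.0, audio_threshold: float = 0.05,
) -> Optional[float]:
    """Seconds from detected speech-intent onset to first audible output.

    Assumes both recordings start at t = 0 on a shared clock (hypothetical setup).
    """
    intent_t = first_onset(neural, neural_threshold, neural_rate_hz)
    sound_t = first_onset(audio, audio_threshold, audio_rate_hz)
    if intent_t is None or sound_t is None:
        return None
    return sound_t - intent_t


# Toy data: intent appears at 0.5 s (sample 100 at an assumed 200 Hz),
# first sound at 1.25 s (sample 20000 at 16 kHz).
neural = [0.0] * 100 + [2.0] * 50
audio = [0.0] * 20000 + [0.3] * 1000
print(intent_to_sound_latency(neural, 200, audio, 16000))  # 0.75
```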

The decoding system works continuously. Rather than waiting for a whole sentence, it processes the signal in small 80-millisecond chunks and synthesizes audio on the fly. The algorithms used to decode the signals are similar to those used by smart assistants like Siri and Alexa, Anumanchipalli explains. “Using a similar type of algorithm, we found that we could decode neural data and, for the first time, enable near-synchronous voice streaming,” he says. “The result is more naturalistic, fluent speech synthesis.”
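The chunked approach can be sketched as a generator. It buffers incoming neural samples and passes each complete 80 ms window to a decoder. This is a minimal illustration only. `decode_chunk` is a hypothetical stand-in for the trained model, and the 200 Hz feature rate in the usage example is an assumption.

```python
from typing import Callable, Iterable, Iterator, List

CHUNK_MS = 80  # chunk length reported in the article


def stream_decode(
    samples: Iterable[float],
    rate_hz: int,
    decode_chunk: Callable[[List[float]], List[float]],
) -> Iterator[List[float]]:
    """Yield decoded audio for each complete 80 ms window of neural samples.

    decode_chunk is a hypothetical stand-in for the trained model.
    """
    chunk_len = rate_hz * CHUNK_MS // 1000  # samples per 80 ms window
    if chunk_len < 1:
        raise ValueError("rate_hz too low for an 80 ms chunk")
    buffer: List[float] = []
    for sample in samples:
        buffer.append(sample)
        if len(buffer) == chunk_len:
            yield decode_chunk(buffer)  # emit audio as soon as the chunk is full
            buffer = []
    # A trailing partial chunk is dropped in this sketch; a real system might pad it.


def toy_decoder(chunk: List[float]) -> List[float]:
    """Placeholder 'model': returns one value per chunk."""
    return [sum(chunk)]


# Assumed 200 Hz feature rate -> 16 samples per 80 ms chunk.
for out in stream_decode(range(48), 200, toy_decoder):
    print(out)
# [120]
# [376]
# [632]
```

Each chunk is decoded as soon as it fills. The first audio can therefore be produced after roughly 80 ms of signal plus the model's processing time, rather than after the whole sentence has been recorded.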

It was also key to determine whether the AI model was genuinely communicating what Ann was trying to say. To investigate this, Ann was asked to try to vocalize words outside the original training data set, such as the NATO phonetic alphabet. “We wanted to see if we could generalize to the unseen words and really decode Ann’s patterns of speaking,” said Anumanchipalli. “We found that our model does this well, which shows that it is indeed learning the building blocks of sound or voice.”
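One way to picture the “building blocks” result: a word never seen in training can still be decodable if every sound unit it contains was seen. The toy sketch below checks that property for a held-out word list. The tiny lexicons and their ARPAbet-style transcriptions (stress marks omitted) are illustrative assumptions. The name `unseen_word_coverage` is hypothetical, and this is not the study's evaluation procedure.

```python
from typing import Dict, List

Lexicon = Dict[str, List[str]]


def unseen_word_coverage(train: Lexicon, test: Lexicon) -> Dict[str, bool]:
    """For each test word absent from training, report whether all its sound units were seen."""
    train_units = {unit for units in train.values() for unit in units}
    return {
        word: all(unit in train_units for unit in units)
        for word, units in test.items()
        if word not in train  # only truly unseen words test generalization
    }


# Toy ARPAbet-style transcriptions (stress marks omitted), for illustration only.
train = {
    "hello": ["HH", "AH", "L", "OW"],
    "coffee": ["K", "AO", "F", "IY"],
    "bed": ["B", "EH", "D"],
}
test = {
    "hello": ["HH", "AH", "L", "OW"],  # seen in training, so skipped
    "echo": ["EH", "K", "OW"],         # unseen word built from seen units
    "zulu": ["Z", "UW", "L", "UW"],    # contains units never seen in training
}
print(unseen_word_coverage(train, test))  # {'echo': True, 'zulu': False}
```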

For now, this is still groundbreaking research at the cutting edge of machine learning and brain-computer interfaces. Indeed, it’s the former that seems to be making a huge difference to the latter, with neural networks proving well suited to decoding the minute details of what’s happening in our brains. Still, it shows us just what could be possible down the line as the distance between us and our computers continues to shrink.

Featured image: A researcher connects the brain implant to the supporting hardware of the voice synthesis system. Credit: UC Berkeley

Neuralink is making good headway with this technology; 3 people have been outfitted with Neuralink implants. https://www.foxnews.com/health/paralyzed-man-als-third-receive-neuralink-implant-can-type-brain

Great article, thanks.

Perhaps, but this study is based on surface recordings – not Neuralink, which is penetrating. And the original work on speech prosthetics was done with the Utah Array (another system of penetrating microelectrodes), invented over 35 years ago. Musk is way behind the curve.

The article and the title of the embedded video both contain the word “implant”.

I’m no neuroscientist:

“Here we used high-density surface recordings of the speech sensorimotor cortex in a clinical trial participant with severe paralysis and anarthria to drive a continuously streaming naturalistic speech synthesizer.” https://www.nature.com/articles/s41593-025-01905-6

Not sure if this is the surface of the brain, skull, skin, or hair. You tell me, but the actual paper says “surface”.

I’m no neuroscientist either, but I do read the blurb, the attached articles, and their links before entering into a discussion… It’s a brain implant being used.

Chang leads a clinical trial at UCSF that aims to develop speech neuroprosthesis technology using high-density electrode arrays that record neural activity DIRECTLY FROM THE BRAIN SURFACE. “It is exciting that the latest AI advances are greatly accelerating BCIs for practical real-world use in the near future,” he said.

And more detail from the linked previous study involving the same subject:

“To do this, the team implanted a paper-thin rectangle of 253 electrodes onto the surface of her brain over areas they previously discovered were critical for speech. The electrodes intercepted the brain signals that, if not for the stroke, would have gone to muscles in Ann’s lips, tongue, jaw and larynx, as well as her face. A cable, plugged into a port fixed to Ann’s head, connected the electrodes to a bank of computers.”

It’s an implant that reads from the brain surface, whereas Neuralink requires an implant that penetrates the brain itself, which is why it’s killing animals in trials. It really is literally 30 years out of date.

Very cool, but not remotely realtime

A two-second delay is near real time for a conversation, especially considering other technologies are 100x slower. Stop thinking about it as a process and think about it as a human-to-human interface.

That is truly incredible! What an amazing time we live in.

Username checks out.

Too bad it’s happening in an entirely capitalistic land, though.

Patients like her are lab rats and will be abandoned as soon as the experiment is over or when the company folds.

Then there’s no social institution that continues to maintain the implant in the absence of the original company.

And that will continue to be the case as long as the narrative that businesses do research better is believed, when it’s really just about getting taxpayer funding into private companies rather than educational institutions.

Am I the only person wondering about the amount of damage some of these implants could do in instances of high “jerk” (rate of change of acceleration)? It could be caused by an elevator stopping fast or a car fender bender. I’m picturing metal cutting through squishy flesh.

I also wonder what the long-term effects of spikes or strands of metal inside the body are. Could there be electrolytic corrosion, or eventual biological rejection?

In time there will likely be fully implanted devices relying on wireless charging and communication. In the meantime, I would assume that the transcutaneous interface “port” is designed so that any significant force detaches the external portion at a much lower threshold than would cause biological damage. Bone anchoring screws can take quite a bit of force, even when they surround a transcranial access.

As for electrolytic corrosion or biological rejection, that’s as simple as appropriate material choices. Gold, platinum, titanium, and many of its alloys perform very well in these regards. Ultimately, there are always potential issues and unknowns lurking as biotech advances; that’s one of many reasons progress favors a snail’s pace.

I’m happy to see this. I’ve thought for a while that we needed more blending in our brain-computer interfaces.

The system that I’ve been envisioning is something like this:

Grow neurons onto an array so that they can interface with the brain and relay the signals.

Take those signals and feed them directly into an AI neural network of some form that feeds into the computer as a whole.

Basically:

Brain -> Processing Neurons -> Simulated neurons -> Computer

This is already possible to do in a small lab. Look around YouTube for a couple of groups demonstrating both training of biological neurons and direct interfaces with them.

Most of this isn’t done directly with humans or animals yet for obvious reasons, and the control interfaces that do exist are for specific cases like prosthetics. The reason you don’t see more of it? Cuts to needed funding, and private enterprise being as expensive and proprietary as possible.

What a crappy-sounding voice. Couldn’t they get a better synthesized voice than Stephen Hawking’s, in 2025?