The Intel 8051 series of 8-bit microcontrollers has long been discontinued by its original manufacturer, but it lives on as a core included in all manner of more recent chips. It’s easy to understand and program, so it remains a fixture despite the arrival of much faster replacements.

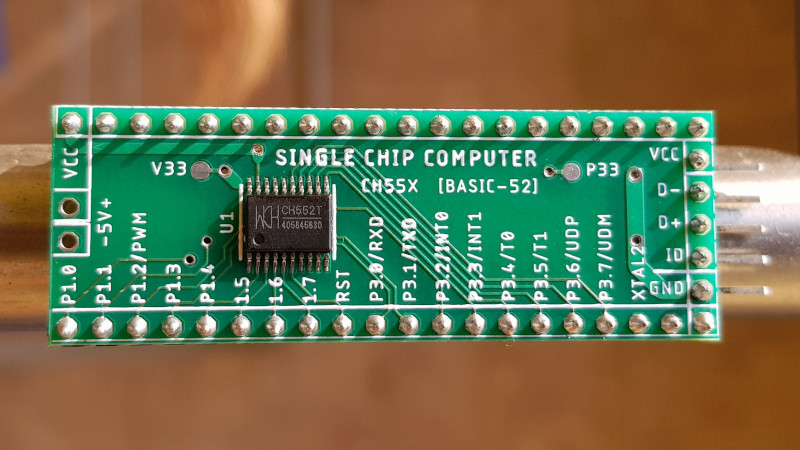

If you can’t find an original 40-pin DIP, don’t worry, because [mit41301] has produced a board in a compatible 40-pin format. It’s called the single chip computer not because such a thing is a novelty in 2025, but because it doesn’t need the support chips the original would have required.

The modern 8051 clone in use is a CH558 or CH559, both of which have far more onboard than the original. The pins are brought out on only one side of the board, because on the original the other side would have interfaced with an external RAM chip. It speaks serial, and can be used through either a USB-to-serial or a Bluetooth-to-serial chip. There’s MCS-BASIC for it, so programming should be straightforward.

We can see the attraction of this board, even though by choice we reach for much more accomplished modern CPUs. Several decades ago the original 8051 on Intel dev boards was our university teaching microcontroller, so there remains a soft spot for it here. We do see other 8051 designs, such as this Arduino clone.

Starting out with a controller that features definite CPU-cycle and memory-resource constraints will teach you a great deal about how to code efficiently. That’s something completely lost to the ‘programmers’ of today. Hence, we typically now see bloated, inefficient software running on what would otherwise be very, very performant hardware.

Consider starting out by learning (speed of light) C on a tiny processor. The skills you learn by doing so may put you ahead of the curve later in your career. You’ll also develop a very fond appreciation for more advanced hardware, where agonising over every cycle and memory byte is (sometimes) less of a requirement.

Nobody should have to stick their hand in a jar of nettles as an initiation rite just because some arrogant person, decades ago, chose to do so.

I always advocate for learning C/C++ first, because learning the ins and outs of manual memory management tends to teach a healthy level of fear and respect for the garbage collector in Java/C#, or at the very least the fundamental realization that it isn’t magic, and nothing is free when it comes to CPU cycles. This is something which is pragmatically beneficial – it makes these people better programmers.

I’ve been working in software development for over two decades, and quite frankly, I believe the field could do with fewer people trying to ward off newbies, with ostensibly “good” intentions (to whom?), by spelling doom and gloom about “these kids these days” not knowing CPU-cycle or memory-resource constraints.

With the massively microcoded CPUs that we have these days, with microcode that can change at the drop of a hat due to a firmware update, the best possible standpoint is one of gratitude that silicon engineers are still managing to wring incremental IPC improvements across generations.

“[We] now see bloated, inefficient software running on what would otherwise be very, very performant hardware”? Sure! Totally! It would be an absolute panacea if a modern x86-UEFI system were treated identically to the Commodore 64 we grew up with. It would also come at the cost of more or less every modern convenience that we enjoy, unless you’re dead-set on living your life off the grid with technology that hasn’t moved past roughly 1990. That is ideologically beneficial – it makes you feel good, but has no practical benefit within the overall scope of software development. The people who care and who excel at refining down the number of cycles taken by a snippet of code will always exist. But that’s not a barrier to entry for coding.

Whether you like it or not, software development stands on some level of shoulders, and these shoulders increase in breadth with hardware complexity. Not everyone can pull a Terry A. Davis and make their own personal TempleOS. Some people just want to get things done.

Get real, get with the program, and – most importantly – get over yourself.

“I always advocate for learning C/C++ first.” You should advocate learning assembler first. There is no better way to learn the ins and outs.

To be fair… you’re both right… The problem isn’t the language, it’s the constraints.

If you want to be an efficient coder, use fewer external libraries, watch your arrays and other variables, use pointers, pass and reference your variables and objects properly, and try to keep your code clean and efficient.

Doesn’t really matter what language you learn…

“There is no better way to learn the INs and OUTs”

I see what you did there, and I’m here for it.

Two words: punch cards.

Oh don’t be silly! It’s paper tape. Ideally written by a Teletype 33. God help you if you’re carrying a stack of cards and you drop them. You’ll be suggesting Fortran 77 next!

Talk to me after another two and you’ll think differently. You’re still in the “this is great!” stage with a bunch of energy. Wait until the bloat exceeds your internal thresholds and you’ll start talking just .. like .. us.

I don’t like how people have become so aggressive all the time.

What happened to polite interaction? 🙁

Why is human development not at the height of technology?

Why was that person arrogant decades ago for doing it like that? I wasn’t aware they had options back then. They were limited by the hardware and they had to do things in the most efficient way. Calling it arrogant is quite the stretch.

Why would you encourage newbies to be careless by not thinking too much, especially in the present day, when AI slop is taking over?

The arrogance came later and reeks off the attitude of an unfortunate number of fellow oldbie coders, a level of bitterness because “kids these days” don’t have to stick their own hands in the nettle jar, and bah gawd back in mah day hurmph glurmph durph.

You’re correct that people were limited by the hardware they had back then. We’re not now. There’s no inherent value in living what amounts to a monastic life, hardware- or software-wise, unless you choose to. I love doing low-level programming because I choose to, but at my day job I do UI/UX coding because I choose to do that, too. I once had the CTO at my job ask why I was twiddling my thumbs working on menus rather than doing engine or architecture coding. It was intended as a compliment, but it also highlighted an unfortunate attitude that’s so prevalent in software development: the assumption that just because someone can, and wants to, do a perceptually simple task A (which UI/UX is not; people unfamiliar with it just think it is), they must be incapable of doing complex task B.

Speaking of, you’re also presenting a false dichotomy. Newbies shouldn’t overthink things, so therefore they shouldn’t think at all? That’s not what I said. I didn’t stutter. In any decent organization there are people like me filling senior-level ranks who enjoy drilling down into the nitty-gritty with a profiler and figuring out precisely where all the performance is going in some innocuous-looking routine. But that is not something that junior- or intermediate-level programmers should have to bother themselves with. If they want to, that’s great, it puts them on the fast track for a promotion, but I’m not going to expect the world out of people who are just barely getting their feet wet.

Also, who brought up AI? You did. But on that point, who really cares if someone leans too heavily on LLM-generated garbage for writing code? At the end of the day they’ll either use it as a crutch until their metaphorical foot heals and their skill-set catches up, or they’ll run into the brick wall where skill and LLM-generated code diverge. If you have programmers in your organization who are leaning too heavily on generative trash, that speaks just as ill of the policies where you work as it does of the fact that someone like that would even be hired.

I’m reminded of the downright religious worship that people have for “Uncle” Bob Martin’s “Clean Code”: a tome with a set of pretty good ideas, to be applied at the appropriate times, but which so many coders choose instead to treat as dogma. It’s religion for the areligious, and much like so many other holy texts, it has unfortunately resulted in what amounts to holy wars. Functions should fit within an 80×25 monospaced window? Sure, ideally, yeah, but we’re not hammering away at a DEC VT-100 anymore. We have 4K monitors, portrait-orientable, and employers willing to pay for such hardware, so why not use it? The principle of functions being isolated, scrutable, and reading as a linear set of imperative statements is a good one, but the difference between a good coder and a great coder is the ability to know the rules, and also to know when to waive them.

[deL] was completely right in their second paragraph, but the first one exhibits an attitude that I would very much like to see less of. “Programmers of today” meet the hardware, capabilities, and expectations of today. It’s not that deep. If you want to code with one hand tied behind your back, great, but other people are free not to, and they’re not lesser programmers for choosing not to.

I’ve been in the development business in a wide range of roles (and product purposes) for closing in on 40 years now. Stuff including server software, real-time data acquisition, machine and guidance control systems, business communications software, language interpretation, even a few games… and these types of arguments will never stop cracking me up.

I had a fun one just a couple of weeks ago, when I was talking to a fellow ham radio operator about a web application for contact logging I was whipping up for my own personal use. He’s a retired microcontroller programmer, and wanted to know what language I was using. Of course, he scoffed when I told him Node. “You should write that in assembly.” … I knew it was coming, and I still split my sides laughing. Yeah, that’s exactly the best approach to a rapidly developed, database-driven web app. “You do that. Let me know how it goes.”

Everything has its place. The way “you” have always done a thing isn’t always THE best way (or even applicable!), and part of the allure of the art and science of development is that there’s always more than one way to skin the metaphorical cat.

There are business needs to getting things done, too. And if you get paid to deliver on time, you’re going to sacrifice what could be your best work to keep the mortgage paid. …or, heck, pick a hundred other scenarios where the business demand trumps a thing you know you should be doing.

That said, the CONCEPTS of good memory management, efficient code, minimizing clock cycles… All of those should be taught, understood, and practiced, but you know what? Sometimes it really doesn’t matter all that much, and if someone being a little sloppy means the door gets opened for them to learn and grow, and creates a path for the great developers of the future? I’ll grumble about the poor code now, but welcome the end result. There are definitely more important things to get worked up about.

I just don’t want to see people use Electron to program a simple application. I don’t know why we need a JavaScript interpreter in a desktop application, where JavaScript’s sandboxing and security features aren’t really required.

I understand that we stand on the shoulders of giants, and that there are situations where an interpreted language should be used over a compiled one, but I feel like Electron is really just the lazy developer’s way to create a desktop application from a hacked-up version of the website they made.

What I didn’t say, and what some appear to have assumed I don’t believe, is that there’s a case for both ‘bottom’ and ‘top’ programmers. One employer wants instant results for a one-off problem, so the use of Python libraries might be appropriate. Another employer wants to save on silicon for a million-off production run, so low-level, super efficient code is the answer.

Position yourself as a dime-a-dozen ‘top’ programmer, however, and you’ll be infrequently and poorly compensated, ever dependent on others, and clueless when things go wrong. Take the longer, all-encompassing route to becoming a ‘bottom’ programmer, and you might work your way up to coding for GPU clusters that crunch radio-astronomical data. The choice is yours.

On the matter of civility, and personal (or in this case anonymous) reputations: …

I’m outa here.

…the moderation wasn’t ever really up to par here, anyway.

With the 8051, IMO the best option is to use assembly. The bit-level I/O instructions are a joy to use and allow one to write very efficient code, usually obviating the need for external memory and thus freeing up those pins for I/O. Back in the day I wrote thousands of lines of ASM-51, but today, if you’re going to use an external memory chip, just use some more modern hardware and a high-level language.

The BASIC-8052 revamp looks really good. I could not see what is on the underbelly of the board (presumably the USB-TTL converter), and I can’t spot the decoupling caps. Having a BASIC interpreter is really useful, especially for learning to code.

{Strange that somebody’s comment about doing this old-school, 1980s style to save a few bytes got nuked… those days are thankfully gone; we have lots of memory and clock cycles now.}

You can see some of the underbelly here: https://cdn.hackaday.io/images/8343561711385550585.jpg

Just not real clear.

BASCOM-8051 is not an interpreter; it’s a compiler. Performance is close to ASM.

“The Intel 8051 series of 8-bit microcontrollers is long-discontinued by its original manufacturer, but lives on as a core included in all manner of more recent chips.”

Proprietary video editing board, long relegated to history. Information was hard to find, but deep in the system diagram was an Intel core.

There might be a soft spot for it, but with so many better options out there (from my point of view), why go backward, except for nostalgia purposes, I suppose? I had to go look up the CH558 and CH559, as I had never heard of these chips before this article. Again, you learn something every day.

As for BASIC, well, I admit I recently wrote a BASIC interpreter for fun, to play some of the old text games on a Pico, but I prefer C/assembly/Python for all current programming needs.

At 40¢ each they may be the right hammer/nail 🤷🏼‍♂️

The article was a little (/s) coy. I googled CH552T (the chip in the picture), and Adafruit has this to say:

16MHz and 3.3V logic, built-in 16K program FLASH memory, 256-byte internal RAM plus 1K-byte internal xRAM (xRAM supports DMA).

4 built-in ADC channels, capacitive touch support, 3 timers / PWM channels, hardware UART, SPI, and a full-speed USB device controller.

Does this mean USB on your retro computer? I don’t know, but the bit about the RAM not being connected makes me think you could plug this in to quickly check old computers for RAM/socket/PCB problems. If it works you can start troubleshooting from there instead of looking at power and display.

You may have a point, with an RP2040 going for, what, about $1.

Maybe it’s nostalgia for you but I have a front panel for a mid-2010s DVR in my spare parts bin that is driven by a modern 8051 replacement. They’re very much still in use.

I seem to recall many keyboards that used the 8051 chip.

If you mean PC-type keyboards, your memory is misleading you; they tended to use 8048/8049 chips.

Anyone used Intel’s PL/M-51 to write software for the 8051, or remember programming 8751s and using a UV lamp to erase them so you could try again?

Yeah, the 8748/8751 was my mom’s daily workhorse in those old days. I remember those evenings: her, sitting in her office with a PC-XT, amber monitor, development board, EPROM programmer, and a UV eraser with a couple of 87C51s baking in it. I even found the programmer in some box a year or two ago. Rest in peace, mamele.

There’s no problem getting a modern 8051 clone, or even an original i8051, in any package you want.

The situation is far worse when it comes to replacing the i8048, which could often be found in 1980s-era electronics, from car ECUs to home appliances and computer peripherals. There are simply no modern pin- and instruction-compatible devices. It is possible to read out the code from an 8048 if you have another working device around from which the 8048 can be desoldered and put into a programmer. But then you have to disassemble the code and rewrite it for the MCU of your choice, which is not always an easy task. And of course you will have to make a PCB to mimic the 8048 pinout.

I’ve done 8051 development (in C), and I’ve played with the 8052, which was an 8051 chip with additional built-in ROM with a BASIC interpreter. (I would not refer to any sort of BASIC programming as anything other than “play.”)

So it looks like this board is a substitute for the 8052 and not the 8051.

“ANY” sort of BASIC programming is “play”?

As a former Access/Excel/Word/VBA Database Applications Developer, I beg to differ

I’ve heard (more than once) that Basic-51 was developed by one of the members of the band Looking Glass, famous for the song “Brandy (You’re a Fine Girl)”.

Am I nuts?

The original Basic-52 had a hidden programmer’s ego message saying “John Katausky”. Later that was removed to make room for bug fixes.

John Katausky was one of my managers when I worked at Intel — hell of a nice guy.

Back in high school, John played in a garage band with Elliot Lurie, who would later found Looking Glass. Elliot had a girlfriend named Mandy, and John wrote a song of the same name. Despite the name, the song wasn’t biographical — just made-up lyrics.

John didn’t pursue music as a career. Instead, he went to college, earning a B.S., M.S., and was working on his Ph.D. in Electrical Engineering at UC Davis when Intel made him an offer he couldn’t refuse.

Meanwhile, Elliot continued with music and founded Looking Glass. He wanted to use “Mandy,” and bought the rights from John, who was then a broke student. It’s been years since John told me the story, but he said he sold the song for somewhere around $1,400–$1,800. He gave up all rights — which is why he’s never listed as a songwriter anywhere… except here. 🙂

Elliot changed the title from Mandy to Brandy — after all, who wants to sing about an ex?

In 2006–07, Intel laid off about 15,000 employees, including most of John’s team and myself. John chose to retire.

We kept in touch for a while. After retirement, a former colleague from many years prior asked if he’d consult on some decades-old chip still used in tugboat safety systems. Not really interested, he jokingly said he’d do it for $300/hour (about $450/hour in 2025 dollars), and they accepted! Pretty good money for a short-term gig.

John Katausky passed away in 2023. He’s missed by many.

You’re not totally nuts, haha. He wasn’t a part of the band, but knew the singer through his mother and sold the original version of the song to her at a party for $1,000. OH3MVV is also correct, and his name was John Katausky.

You also have one fewer content contributor for your ‘authors’ to plagiarize.

MCS-BASIC is quite fun on the tiny CH55x chips. It will run on the CH552, but leaves about 600 bytes for your program. It would be nice if there were a way of saving the program (there’s no external flash/EEPROM option), and nicer still if the serial communications used the CH55x USB ACM device instead of an external UART, but fun doesn’t have to mean practical…