In the early days of the World Wide Web – when the Year 2000 and the threat of a global collapse of society were still years away – the crafting of a website on the WWW was both special and increasingly common. Courtesy of free hosting services popping up left and right in a landscape still mercifully devoid of today’s ‘social media’, the WWW’s democratizing influence allowed anyone to try their hand at web design, with varying results, as those of us who ventured into the Geocities wilds can attest.

Back then we naturally had web standards, courtesy of the W3C, though Microsoft, Netscape, etc. tried to upstage each other with varying implementation levels (e.g. no iframes in Netscape 4.7) and various proprietary HTML tags and CSS properties. Most people were on dial-up or equivalently anemic internet connections, so designing a website could be a painful lesson in optimization and targeting the lowest common denominator.

This was also the era of graceful degradation, when we web designers had it hammered into our skulls that using and navigating a website should be possible even in text-only browsers like Lynx and w3m, or in antique browsers like IE 3.x. Fast-forward a few decades and today the inverse is true: it is your responsibility as a website visitor to have the latest browser and fastest internet connection, or you may even be denied access.

What exactly happened to flip everything upside-down, and is this truly the WWW that we want?

User Vs Shinies

Back in the late 90s and early 2000s, a miserable WWW experience for the average user involved graphics-heavy websites that took literal minutes to load on a 56k dial-up connection. Add to this the occasional website owner who figured that using Flash or Java applets for part of a website, or all of it, was a brilliant idea, and had you sit through ten minutes (or more) of a loading sequence before you could view anything.
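To put ‘literal minutes’ into numbers, here is a back-of-the-envelope sketch in Python. The page sizes are made-up illustrative values, and the calculation assumes the full nominal 56 kbit/s with no latency or protocol overhead, so real-world load times would usually have been worse.

```python
def load_time_seconds(page_bytes: int, link_bits_per_second: int = 56_000) -> float:
    """Best-case transfer time: ignores latency, protocol overhead and line noise."""
    return page_bytes * 8 / link_bits_per_second


# Hypothetical page weights, chosen only for illustration.
for label, size in [("text-only page", 20_000), ("graphics-heavy page", 1_000_000)]:
    print(f"{label}: {load_time_seconds(size):.0f} s")
```

Even under these generous assumptions, a single megabyte of graphics works out to well over two minutes of waiting, while the text-only page arrives in a few seconds.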

Another contentious issue was that of the back- and forward buttons in the browser as the standard way to navigate. Using Flash or Java broke this, as did HTML framesets (and iframes), which not only made navigating websites a pain, but also made sharing links to a specific resource on a website impossible without serious hacks like offering special deep links and reloading that page within the frameset.

As much as web designers and developers felt the lure of New Shiny Tech to make a website pop, ultimately accessibility had to be key. Accessibility, through graceful degradation, meant that you could design a very shiny website using the latest CSS layout tricks (ditching table-based layouts for better or worse), but if a stylesheet or some JavaScript or VBScript didn’t load, the user would still be able to read and navigate, at worst in an HTML 1.x-like fashion. When you consider that HTML is literally just a document markup language, this makes a lot of sense.

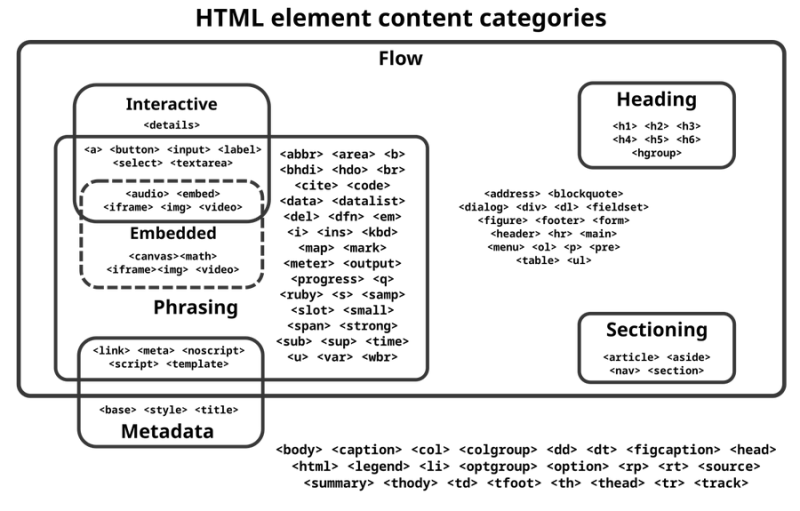

More succinctly put, you distinguish between the core functionality (text, images, navigation) and the cosmetics. When you think of a website from the perspective of a text-only browser or assistive technology like screen readers, the difference should be quite obvious. The HTML tags mark up the content of the document, letting the document viewer know whether something is a heading, a paragraph, and where an image or other content should be referenced (or embedded).

If the viewer does not support stylesheets, or only an older version (e.g. CSS 2.1 and not 3.x), this should not affect being able to read text, view images and do things like listen to embedded audio clips on the page. Of course, this basic concept is what is effectively broken now.
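The split between core content and cosmetics can be sketched with Python’s standard `html.parser` module. This is a rough approximation of what a text-only viewer might show, not how Lynx or any screen reader actually works; the `TextOnlyRenderer` class, the `render_text` helper and the sample page are all invented for this illustration.

```python
from html.parser import HTMLParser


class TextOnlyRenderer(HTMLParser):
    """Keeps headings, paragraphs and image alt text; ignores styling and scripts."""

    SKIPPED = {"script", "style"}           # cosmetics and behaviour, not content
    BLOCKS = {"h1", "h2", "h3", "p", "li"}  # elements that start a new line

    def __init__(self):
        super().__init__()
        self.lines = []
        self._skip_depth = 0

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIPPED:
            self._skip_depth += 1
        elif tag in self.BLOCKS:
            self.lines.append("")
        elif tag == "img":
            # An image degrades to its alt text on its own line.
            alt = dict(attrs).get("alt") or "no description"
            self.lines.append(f"[image: {alt}]")

    def handle_endtag(self, tag):
        if tag in self.SKIPPED and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if self._skip_depth or not data.strip():
            return
        if not self.lines:
            self.lines.append("")
        # Collapse whitespace and append to the current line.
        text = " ".join(data.split())
        self.lines[-1] = (self.lines[-1] + " " + text).strip()


def render_text(html: str) -> str:
    renderer = TextOnlyRenderer()
    renderer.feed(html)
    renderer.close()
    return "\n".join(line for line in renderer.lines if line)


SAMPLE = """
<html><head><style>body { color: hotpink; }</style></head>
<body><h1>Opening hours</h1>
<p>Open <b>daily</b> from 9 to 5.</p>
<img src="shop.png" alt="Photo of the shop front">
<script>document.body.className = "shiny";</script>
</body></html>
"""

print(render_text(SAMPLE))
```

The stylesheet and the script vanish without taking the heading, the paragraph or the image description with them, which is the whole point of marking up content rather than appearance.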

It’s An App Now

Somewhere along the way, the idea of a website being an (interactive) document seems to have been dropped in favor of the website instead being a ‘web application’, or web app for short. This is reflected in the countless JavaScript, ColdFusion, PHP, Ruby, Java and other frameworks for server and client side functionality. Rather than a document, a ‘web page’ is now the UI of the application, not unlike a graphical terminal. Even the WordPress editor in which this article was written is in effect just a web app that is in constant communication with the remote WordPress server.

This in itself is not a problem, as being able to do partial page refreshes rather than full on page reloads can save a lot of bandwidth and copious amounts of sanity with preserving page position and lack of flickering. What is however a problem is how there’s no real graceful degradation amidst all of this any more, mostly due to hard requirements for often bleeding edge features by these frameworks, especially in terms of JavaScript and CSS.

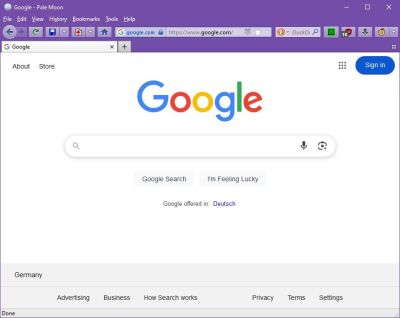

Sometimes these requirements are apparently merely a way to not do any testing on older or alternative browsers, with ‘forum’ software Discourse (not to be confused with Disqus) being a shining example here. It insists that you must have the ‘latest, stable release’ of either Microsoft Edge, Google Chrome, Mozilla Firefox or Apple Safari. Purportedly this is so that the client-side JavaScript (Ember.js) framework is happy, but as e.g. Pale Moon users have found out, the problem is with a piece of JS that merely detects the browser, not the features. Blocking the browser-detect-* script in e.g. an adblocker restores full functionality to Discourse-afflicted pages.
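The difference between detecting the browser and detecting its features can be sketched as follows. This is not Discourse’s actual code: the `Client` type, the allow-list and the required feature names are all hypothetical, and on a real page the feature check would run client-side in JavaScript rather than in Python.

```python
from dataclasses import dataclass

# Hypothetical allow-list and feature requirements, for illustration only.
SUPPORTED_NAMES = {"Edge", "Chrome", "Firefox", "Safari"}
REQUIRED_FEATURES = frozenset({"fetch", "css-grid"})


@dataclass(frozen=True)
class Client:
    name: str            # what the browser calls itself
    features: frozenset  # what the browser can actually do


def allowed_by_name(client: Client) -> bool:
    """Browser detection: reject anything not on the list, whatever it supports."""
    return client.name in SUPPORTED_NAMES


def allowed_by_features(client: Client) -> bool:
    """Feature detection: accept any client that has what the page needs."""
    return REQUIRED_FEATURES <= client.features


mainstream = Client("Chrome", frozenset({"fetch", "css-grid", "webgpu"}))
alternative = Client("AltBrowser", frozenset({"fetch", "css-grid"}))
antique = Client("OldBrowser", frozenset({"tables"}))

for client in (mainstream, alternative, antique):
    print(client.name, allowed_by_name(client), allowed_by_features(client))
```

The made-up `AltBrowser` has everything the page needs, yet the name check turns it away. The feature check lets it in and still rejects the client that genuinely cannot cope.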

Wrong Focus

It’s quite the understatement to say that over the past decades, websites have changed. For us greybeards who were around to admire the nascent WWW, things seemed to move at a more gradual pace back then. Multimedia wasn’t everywhere yet, and there was no Google et al. pushing its own agenda along with Digital Restrictions Management (DRM) onto us internet users via the W3C, which resulted in the EFF resigning in protest.

Google et al. ostensibly professed to have only our best interests at heart when features were added to Chrome, when the very capable plugin system from Netscape and Internet Explorer was taken out back, and when WebExtensions Manifest V3 was introduced (with the EFF absolutely venomous about the latter). Yet privacy concerns are mounting, along with worries that corporations now control the WWW, with even new HTML, CSS and JS features being pushed by Google solely for its use in Chrome.

For those of us who still use traditional browsers like Pale Moon (forked from Firefox in 2009), it is especially the dizzying pace of new ‘features’ that effectively discourages the use of non-Chromium-based browsers, with websites all too often having only been tested in Chrome. Functionality in Safari, Pale Moon, etc. is often more a matter of luck, as today’s crop of web devs assumes that everyone uses the latest and greatest Chrome browser version. This ensures that using non-Chromium browsers is fraught with functionally defective websites, as the ‘Web Compatibility Support’ section of the Pale Moon forum illustrates.

Question is whether this is the web which we, the users, want to see.

Low-Fidelity Feature

Another unpleasant side-effect of web apps is that they force an increasing amount of JS code to be downloaded, compiled and run. This contrasts with plain HTML and CSS pages that tend to be mere kilobytes in size in addition to any images. Back in The Olden Days™ browsers gave you the option to disable JavaScript, as the assumption was that JS wasn’t used for anything critical. These days if you try to browse with e.g. a JS blocking extension like NoScript, you’ll rapidly find that there’s zero consideration for this, and many sites will display just a white page because they rely on a JS-based stub to do the actual rendering of the page rather than the browser.

In this and earlier described scenarios the consequence is the same: you must be using the latest Chromium-based browser to use many sites, you will be using a lot of RAM and CPU for even basic pages, and forget about using retro- or alternative systems that do not support the latest encryption standards and certificates.

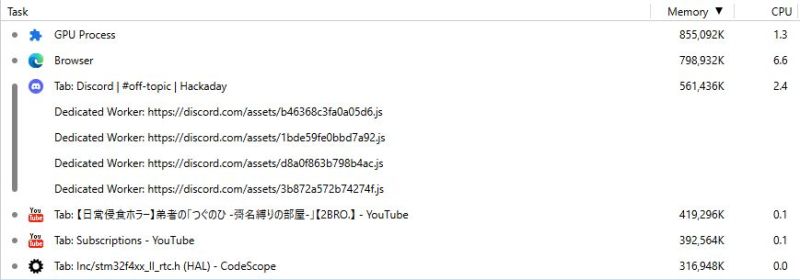

The latter is due to the removal of non-encrypted HTTP from many browsers, because for some reason downloading public information from HTTP and FTP sites without encrypting said public data is a massive security threat now, and the former is due to the frankly absurd amounts of JS, with the Task Manager feature in many browsers showing the resource usage per tab, e.g.:

For these tabs there is no way to reduce their resource usage, no ‘graceful degradation’ or low-fidelity mode, so older systems as well as the average smartphone or tablet will struggle to keep up with the demands of the modern WWW, or simply keel over, with even a basic page using more RAM than the average PC had installed by the late 90s.

Meanwhile the problems that we web devs were moaning about around 2000, such as the lack of an easy way to center content with CSS, got ignored, while some enterprising developers have done the hard work of solving the graceful degradation problem themselves. A good example of this is the FrogFind! search engine, which strips down DuckDuckGo search results even further, before passing any URLs you click through a PHP port of Mozilla’s Readability. This strips out anything but the main content, allowing modern website content to be viewed on systems with browsers that were current in the very early 1990s.

In short, graceful degradation is mostly an issue of wanting to, rather than it being some kind of insurmountable obstacle. It requires learning the same lessons as the folk back in the Flash and Java applet days had to: namely that your visitors don’t care how shiny your website is, or how much you love the convoluted architecture and technologies behind it. At the end of the day your visitors Just Want Things to Work™, even if that means missing out on the latest variation of a Flash-based spinning widget or something similarly useless that isn’t content.

Tl;dr: content is for your visitors, the eyecandy is for you and your shareholders.

In my book the S of JS does not stand for “Script”.

I hate pharmacy websites in my country. All of them reskinned copies of each other, and have so many scripts that I have to open in an ublocked tab to see them properly.

we’ll get to the point soon where a website is just a link to install the mandatory proprietary app from some walled garden app store

Welcome to the Chinese “www”

Actually, the Chinese Internet is very modern.

It used IPv6 from very start and didn’t bother with legacy tech.

If that’s good or bad is another story, though.

App will just frame a locked down broken browser.

And that app will refuse to open unless you grant it literally every permission your operating system has short of Device Admin (looking at you, Meta family of apps), and use it to exfiltrate literally all of your personal data, because a mere webpage in a “standard” browser doesn’t have that kind of access.

If a user grants these permissions, the user is part of the problem.

We are already there. Last year Cinemark wouldn’t let me browse desktop mode, it blocked me completely. This year Lowe’s does the same. Heaven forbid I want 500+ DPI on my 2,000+ wide pixel screen 🤦🏼♂️

My favourite is using the Air Canada app, where for 90% of what you want to do it boots you back out to the browser, which then suggests that you try their app.

Where everything started from :D

Everyone said I was daft to build a site on a JS swamp, but I built in all the same, just to show them. It sank into the swamp. So I built a second one. That sank into the swamp. So I built a third. That burned down, fell over, then sank into the swamp. But the fourth one stayed up. And that’s what you’re going to get, Lad, the strongest website in all of England.

TFA author is going easy on ‘them’.

Some JS on the browser WAS just unavoidable.

Those fools run JS on the server…spit.

Best prospective hire question for company:

‘Do you now or have you ever run JS on the backend?’

You’d never want to work for a company that makes that kind of decision.

90%+ of staff are at their level of incompetence, the rest are looking to get out ASAP.

Likely storing critical data on mySQL.

Resume stain, burn bridge before crossing.

Amen. Literally just had a coworker complaining about this as I was in the middle of reading.

Appropriate tools for the job.

Some sites should be static html, but that isn’t applicable to all sites.

It’s kinda hard to pull content updates from a static page, so you add a refresh meta tag, but then you have an issue with browsers that support JavaScript but not the refresh meta tag. So you add some JavaScript for those cases. Then you notice a layout issue that exists because multiple browser vendors disagree on what the standard says. You can fix it, but it’s probably another smidge of JavaScript. Then you do that, but stumble across another issue where you need to configure conditional comments. It’s ugly, but the only browser that gives a shit about them is the one browser that fails to meet anything called a standard. Now that you’ve got that covered, someone’s reached out to you and asked you to update the phone number for the bash web’s that’s on the site. It’s not hard to fix, but there’s 200 pages, and while most of them could be search/replaced, it turns out there’s a couple dozen that won’t work for.

Now you realize if you just generate the pages the problem will be eliminated so you start converting to a scripting language like php…

I agree in principle with your statement that “some sites should be static html,” but HTML isn’t really standardized anymore, so I don’t know how to make good static HTML today. In a century, will it still be readable as it is today?

“HTML isn’t really standardized anymore” – is HTML less standardised now than it was in the bad old days? What’s non-standard now that a static page would struggle with?

Yes, HTML is less standardized now than it was in the bad old days.

In the bad old days, there were versioned standards that web page authors could refer to. These days, there’s a “living standard” (i.e., not a stable standard) that basically waves its hands at whatever WebKit is doing this week.

Not WebKit for a long time; the Chrome team forked Blink from it in 2013, due in part to issues with Apple’s OS-specific changes. This means that Safari also breaks on a lot of things now, and that is going to get worse.

In a venture-funded company right now, Safari won’t even be tested unless the CEO uses it. In some cases even Firefox won’t be.

As for your second question, I don’t know what page elements could at some point get deprecated and removed, and that itself is a problem. Since there’s no stable standard that I can point to and say, “this page needs these features,” it’s not possible to write a static HTML page and expect it to be readable in a century.

“Living standard” is an oxymoron, yet WHATWG acts like it’s somehow a real thing.

Any HTML will still be readable in a century because the source is legible. It’s not like it’s being compiled from some bizarre moon language, it’s just markup scattered through plain text. No meaning will be lost. People can still read classical Latin hundreds of years later, English with a few tags in will be trivial.

I’m curious as to what sort of sites you’re building that are still going to be online in a hundred years and receive no maintenance in the intervening time.

At some level it’s all undecipherable “1”‘s and “0”‘s without some kind of translator between it and me.

Plain old html with simple tags like body, title, a, p, img, b, i, em, ul, ol, li, br, h1, h2, h3, sub, sup can probably be expected to work for a long time. And for most static non interactive sites, I think that’s pretty much all you need.

Absolutely right. Plain old html tags. I am all for it as information is what we normally are looking for. Not glitz. Text and tables, images. Perfect for most things.

I agree! That is why I still nowadays create new websites always in plain text. For my static content I do not even use mariaDb/mySql as it is static anyway. I am fluent with modern html js and css so that is all no problem. The sites look very modern, because the (static minimalistic) css and js and html ís modern (but less than 1 kb).

I do use some small default css to reset the layout, and some other trivial css.

I also use a tiny bit of js and php to make sure the robots.txt and such are automatically taken care of, and web forms are protected from spam.

The result is that the sites are blazingly fast loading for the user (average page is 300kb including all .avif images and .svg icons in css) and run bizarrely fast on the cheapest cheapest shared hosting too.

Excellent, I would love to see some examples of your work!

“I also use a tiny bit of js and php to make sure the robots.txt and such are automatically taken care of,”

I’m curious/baffled about what you mean by this? You run JS and PHP on every refresh/reload to make sure of what?

Nah, hard disagree. With modern web sites this isn’t even necessary. It is completely possible to build an interactive page with “live” elements that also gracefully displays only static content with less or no JavaScript.

And doing this is a GOOD THING. Being able to directly link to content is good, being able to save a web page snapshot is good, and none of this is hard.

The problem is that companies don’t want this. They want an opaque proprietary application you can only view through a particular lens and cannot view offline in any meaningful way. This isn’t speculation, it’s a literal directive in some places (though often using much more bs in the language).

This of course has consequences. Once they are building an application they don’t want to support standards, they want to support a specific “platform”. That was IE, remember ActiveX in web pages? Now it’s Chrome. Not that Mozilla is helping with their schizophrenic management practices.

One of the problems is wanting the website to look exactly the same in each and every web browser.

Who cares if a box is a bit bigger or smaller, or if the round corners don’t have the same shadow?

I came to have the god damned opening hours! I don’t need to load 2Mb of javascript and activate cookies for that…

But, yeah, for multiple bad and worse reasons, most companies want it that way.

On the other hand, nothing prevents you from building your personal website “the correct way” = )

imho

Yes! This seems to be a constant tug of war between the idea of intent based markup (which aims to be device independent enough for screen readers, screens of different size and shape, touch screen vs. mouse, etc.) and the idea of tightly controlling appearance (as if it was a print layout) and interaction (as if it was a real local application).

This dovetails with the economic imperative to squeeze out as much user interaction data and tracking as possible for advertisers and analytics, which would be much harder if we went back to single static pages where every single request had a cause the user could identify and content they could verify and control. Using a chatty web design paradigm (especially one chatty over an inner encrypted web socket) makes it a daunting task for the user to audit the content and purpose of all communication to gauge what may be exfiltrated.

Remember web bugs and browser cache mining? Those were easy to identify and sidestep by comparison. It seems the whole mess is very entangled these days…

You mentioned W3C and web standards at the beginning of this article. But those days are dead and gone, thanks to the sabotage of the WHATWG.

To be honest, my biggest concern about the web is that we no longer even have real standards. As an author, I can no longer write a (halfway) modern HTML page to some stable specification and know that it will look the same on a browser that implements that particular specification. The web is not archival. I’m worried that our modern web will entirely be lost, at least in its proper presentation, in a few hundred years’ time.

Don’t believe me? Try the Acid3 test in a modern browser. Every one I’ve tried scores 97/100 these days, where they used to pass perfectly around a decade ago. Then try the Acid2 test and be even more shocked.

You are being a little too optimistic. There are far too many sites out there right now with JS and CSS scripts that don’t work correctly in current browsers. The problem is already here.

I don’t think anything I wrote was intended to sound optimistic. I know the problem is here and I said so, please don’t twist my words.

Viewing compatibility and interoperability with contempt isn’t isolated to web development.

There is a big push to move everything over to the latest and greatest framework and language. Even if that involves stripping out all support for alternative architectures and operating systems.

This is nothing new.

In the 2000s half of websites were built around Flash. The other half were tumorous masses of tables. And don’t get me started on the little badges in the corner saying “this site is made for version-whatever of Internet Explorer”

The first wave of destruction came when Netscape started showing images as part of the webpage, not just as links to a file that would open separately. That brought in the graphic designers who didn’t know what HTML was supposed to do, and didn’t care. Their mindset was “screen equal paper; pretty thing I make go right there.”

The second wave came when the executives who’d turned TV into 50% advertising and 50% formulaic mass-market pap saw a new medium and started doing everything in their power to turn John Perry Barlow’s “Death from Above” into a business plan. The idea that you can’t force people to download advertising in a pull-based network is still an affront to their souls, and they’ve never stopped trying to make that go away.

Concurrent to both was the internet’s threat to Microsoft. Having a computer’s value tied to remote servers undercut Microsoft’s efforts to own the desktop, and piping that value through network connections 1000x slower than the CPU undercut the Wintel treadmill of buying a new machine every two years.

Microsoft’s customers have always been the OEMs who consider the OS a component, and the industry groups who want technology standards that keep them in power. As such, Microsoft has an unbroken track record of building whatever technology the industry groups want, especially when the industry groups are willing to accept an undocumented proprietary standard belonging to Microsoft.

The interests of those three groups intersected in walled gardens where the corporate overlords could decide what the masses would be allowed to see, hear, think, and talk about, just like TV and print publication.

https://imgs.xkcd.com/comics/infrastructures.png

“In the 2000s half of websites were built around Flash. ”

They were pretty and playful, at least. The CPU went somewhere, at least.

Modern websites are full of scripts while looking like a blank HTML site.

Also, Macromedia Flash/Shockwave were separate and consistent, at least.

ActionScript was almost same as JavaScript, too.

“The first wave of destruction came when Netscape started showing images as part of the webpage, not just as links to a file that would open separately.”

And? As if CompuServe+WinCIM or AOL or Prodigy or Minitel/BTX was any different.

Before the www, we had various online services with their graphical clients.

And these did try to load graphics by default, if the feature wasn’t disabled manually in the settings.

Netscape did at least support displaying graphics while loading the site.

It supported interlaced GIFs and progressive JPGs, I think.

Info: https://www.desy.de/www/faq/pjpeg.htm

“The other half were tumorous masses of tables.”

Ah yes, the good old days! 🥲

We learned doing that at school! Microsoft FrontPage was our power tool!

“And don’t get me started on the little badges in the corner saying “this site is made for version-whatever of Internet Explorer””

You forgot the resolution note (“best viewed in 1024×768 pixel resolution”).. 🙂

If NoScript breaks a webpage then I just never visit that site again. They obviously didn’t want me there anyway, right?

LIAR! Here you are posting at HaD which requires JS to comment.

Well, on my computer commenting simply doesn’t work (it did for a pretty short time some months ago, though, but I have no idea why). The moment my employer stops tolerating the use of his computers for that I won’t be able to comment any more. That is also the main reason I don’t have a hackaday.io account.

i think i have the same preferences as OP, but i’m surprised to say that in every detail i think i feel the exact opposite!

things used to change so quickly! i watched flash, javascript, and css spread across the world in front of my eyes. when those things were new, you really had to have the absolute newest browser. and it wasn’t just because of badly-designed websites…you really wanted the new features.

even into the late aughts, i struggled to view media reliably on linux. all of the standards were new, there was no mature standard where the core functionality hadn’t changed in years, and where all of the major browsers had caught up to that core functionality. there were pages that needed the absolute newest browser, and also pages that required an old and obsolete one! it was awful.

today, the standards are stronger than they’ve ever been. and love-or-hate the chrome monopoly, but it has given us a de facto standard in addition to the formal ones. it’s a good thing, not a bad thing, that users are all auto-upgrading their browsers every 2 weeks. but old browsers are good too! in 1995, there were no old browsers, but today you can use an old browser if you’re crazy enough to want to, and you will still have an enormous set of modern features.

so as someone who still sometimes has to put together a website, i have never been in a better position. for example, i was frustrated by an oversight in the design of css. and i discovered w3c had finally invented a good feature for my specific needs: vw/vh – viewport width/height units. and i was discouraged because i grew up in the 1990s and i know every good answer to a problem is too bleeding edge to actually use. “how many years will i have to wait until 90% of end users have access to this newfangled feature?” i wondered.

my brother in christ, vw/vh was invented in 2012!! ever since 2012, css has been good enough! in the 90s, CSS didn’t exist. in the aughts, CSS was not good enough. in the teens, good enough CSS was still “new”. but this is 2025 and good-enough CSS is more than a decade old!!!

so yeah there’s a huge problem with a certain class of website. but the underlying infrastructure for new content / service developers has never been better. and i think the greatest thing is that if you want to live in some sort of isolated ivory tower, but sometimes you are forced to use a modern browser…that’s never been easier either. buy a $30 android phone at your grocery store and you’ll have the most bog-standard least common denominator browser. show me that hack in the 1990s! we used to dual boot into windows so we could run MSIE because we couldn’t afford a second PC!

This. ^

“In 1995, there were no old browsers”

Sure there were! Please don’t get me started!

There were World Wide Web browsers which used HTTP 0.9 (1991) or a draft of HTTP 1.0 (1992-1996), for example. Final HTTP 1.0 was specified in 1996.

https://http.dev/0.9

https://en.wikipedia.org/wiki/HTTP#History

Minuet internet suite in 1994 did partially support HTML 2.0 (pre-HTML 2) and was the defacto standard for DOS users or IBM PC users.

https://en.wikipedia.org/wiki/Minnesota_Internet_Users_Essential_Tool

The original MOSAIC world wide web browser..

It was basically made obsolete the day Netscape Navigator came out.

https://en.wikipedia.org/wiki/NCSA_Mosaic

Cello, from 1993, first Windows 3.1 web browser.

https://en.wikipedia.org/wiki/Cello_(web_browser)

IBM WebExplorer on OS/2.

Shortlived, promising, but with very limited capabilities just a year later. Outdated by 1995.

https://en.wikipedia.org/wiki/IBM_WebExplorer

Erwise for Unix/X11. From 1992.

https://en.wikipedia.org/wiki/Erwise

Last but not least, the various Amiga web browsers.

They were pretty much all being outdated all the time! 😂

More information about old World Wide Web browsers:

https://en.wikipedia.org/wiki/List_of_web_browsers#Historical

https://en.wikipedia.org/wiki/History_of_the_web_browser

Never mind the scripts. Try simply blocking tracking cookies and watch how many sites turn into a blank page. A few here and there will toss up an error message about disabling your security. Cloudflare loves to toss up the “I’m not a robot” check box bull@*# for cookie blocking.

And let’s not forget Firefox disabling any add-ons running in an older version of its browser.

HTML is more-or-less based on the printing industry concepts, canvas, offset, font, etc etc. From the beginning it wasn’t GUI, it was document formatting, which is different. Hence, CSS plugged the gaping hole within, but that’s where the line was crossed – since documents HAVE to be dynamic, merely formatting, however fancy, wasn’t good enough. Right, with any GUI you’ll have some kind of need for variables, and W3C was asked to add variables to handle all kinds of internal stuff, which they finally added to the CSS. Again, neither HTML nor CSS was meant to be GUI, so it couldn’t support scripting, however nice it might have been. Adobe saw that and added scripting to PDF, which opened a can of worms, “can you run Doom in PDF” started right away (along with script kiddies exploring all kinds of security holes that scripting usually opens – indirectly).

I share the pain with JS, I’ve used it on and off (and some JS projects I wrote pretty much created entire GUI frameworks, data validation and all – within the browser), but it was always not the best tool for the job for many reasons – afterall, it BECAME the language of choice, but originally it was just a simple glue-on scripting. It was not good at things that would require good computing so to speak. It is the interpreted language, and meant to be only used within the rendering engine. It never was good at what it was used for, building entire frameworks of business logic.

Browsers are only as good as their rendering engines, which was always the case except for the M$ attempt at making it part of the OS (by baking in the engine into the DLLs so that it cannot be removed without destroying the OS). That might have sped up the scripting (M$ was pushing hard at the time to make VBA the first scripting choice, again, baked into the DLLs), but it really didn’t – I had projects where we were unsure which one we’d be using, so we wrote two versions, one – in VBA, another one – in JS. Both sucked equally badly, just in two different ways.

Point being? Neither HTML nor JS nor anything invented since (that I’ve seen so far) invented much new since umm … I’d say mid-2000s. HTML did NOT become the GUI standard, though there was a notable push to make it a uniform GUI that will work similarly (if not the same) on all wares. We ended up with TWO GUIs, one – OS’s native, another one – in the browsers. Neither one won over the other. There were attempts at making HTML/CSS the GUI – actually, M$ Outlook lets one generate/render HTML/CSS and edit it as needed, but it is an afterthought, too, kludgy, etc.

It is a mess of gargantuan proportions, much worse than the incompatible hardware/software of the 1980s and 1990s. I don’t see any real breakthroughs happening any time soon, only a deepening of the already entrenched niches (Node.js … erm … who thought this was a brilliant idea, no, it wasn’t, yet, it won over similar projects due to simplicity, but it is not exactly grand to start with … it had to do with generations of JS programmers loving the thing, so they demanded it become a client-server deal … weird, but true; though, ANY language now can be client-server, plenty of glue-on libraries to make it happen).

Back to the topic at hand, HTML/CSS is reasonably good enough for ITS MAIN PURPOSE – document formatting so that it can be rendered by a browser engine. Of course it will work 100% of the time, and of course it will be darn fast doing what it is supposed to do. Details vary a bit, but since we are down to what, three winning render engines, the differences are not that grand any more, more like annoyances. Again, document formatting, and NOT GUI, i.e., active controls that react to your actions, and that’s where the confusion (IMHO) starts – making documents actively react to your actions was never the point of document rendering. The two serve two different purposes, but this is where it all started – GUI is kinda sorta a document that’s infinitely flexible and easily outshines the menu at the top of the browser (translation – the menu in the top bar WAS the original GUI for the rendered document … that’s why it was at first NOT embedded within the document, because aside from the hyperlinks that WERE the sole reason for the WWW to be invented, WHY would it be there?)

Just outlining the framework and a bit of a history.

As a side note, the WORST part is web pages that are now portals amalgamating a gazillion JS libraries from a bajillion sources, say, the MSNBC news page. Some are well-known libraries, some are written in-house, some are pulled from elsewhere, and those, by themselves, repeat the thing, too, loading their own framework libraries, some well-known, some custom-written, etc. There are also hidden libraries that run on the backend and generate the JS libraries that load other libraries that point to other libraries. Pointer to a pointer that points to a pointer. Arrays of pointers that point to arrays of pointers that point to arrays of pointers.

You get the idea.

The ironic thing about PDF is that it was originally intended as a simplified, cut-down, version of PostScript, which was judged to be getting too big and complex.

PDF 1.3 was fine, I think. :)

I had not expected to see the day when a PDF was lightweight. But recently one tab had a 1000 page book with pictures in “only” 17meg, while another had not much more than a page of text, but took 760meg of memory because frameworks

Yep, I’ve programmed PDFs. Literally. Had to use the horrendously expensive Adobe PDF Editor (years back when it was still standalone, and NOT per-use licensed, umbrella licensing for entire organization). File size was quite large, and I even tracked the reason – parts of it were pixelated/raster images. PDF, the jack of all trades, can do all three, plain vanilla ASCII text, vectors, and horrible raster-scans embedded within. I was wondering why they went with the raster imaging – more on that later.

My project back then? You guessed it, create a GUI that users can fill in. Script it so that the data will be submitted to a web service. Horrible. Never again. Total of 24+ forms. Took me almost a month, testing, etc.

The story continues – fast-forward a few years and I get a different request: “remember those forms? users are complaining they are not working well and there is no proper error checking”. Aha. Out comes trusty ASP.NET and creates a web page through which they will do their data entry, error checking and all, and AFTER THAT the web service will fill THOSE SAME PDFs with the data they just entered. iText was used, a wonderful library for doing server-side PDFing, and speaking Java when needed. Oh, whilst doing that I figured out how to replace the raster images with their plain vanilla ASCII equivalent. I work for a shop that’s very, very adamant about things like the font size used, so I had to make sure it was exactly the same, except with smaller files. I don’t remember the stats, but I slimmed them all down to like 1/10th each. I also tracked down the dude who scanned rasters into PDFs and asked why oh why he did that. His literal answer: “I’ve been told by the management”. Same reason. A particular font HAD to be used.

Which brings me back to my own reply – PRINTING INDUSTRY had all those growing pains decades ago, and they had figured it all out. Mid-1970s to be exact, maybe 1960s even. Actually newspaper publishing was where all the magic was happening, document handling, standards, lowest rez to print the photos and how (dots that are either tiny or wide – PWM = pulse-width-modulation equivalent of the modern days’ uber-technologies so to speak). Newspapers also had decidedly limited real estate (only few pages – compared with books or magazines that can always add extras), so they’ve worked it all out. Look at any web page now and find the same challenges, which content goes where, for what reason, etc etc. Newspaper editors of the past, we need them NOW.

Other than that, SVG may pick up the slack from PDFs, since it pretty much can do the same AND it is not proprietary. SVG can handle all three, vectors, raster images, plain vanilla ASCII, and has its own scripting (in addition to whichever scripting the browser favors, JS or VBA, depending on the rendering engine). Compressed/zipped SVG may become an emergent standard. May. PDFs are more popular still.

PostScript was actually not bad, relatively easy to learn and master (when needed). My shop STILL has some PostScript stuff, which I avoid, but for a different reason (I have more fascinating/shiny projects to pursue). I personally found LaTeX better, but that’s me, nobody mentions it any more, at least not where I work. LaTeX separates text from presentation, and compressed LaTeX files are quite light.

Shoutout for LaTeX: I use it for all my documents including letters, and I remember it being popular at the university. I wish it was more common, but I fear, it then would be destroyed from within the same way all Nice Things are destroyed nowadays, if they become popular.

I can only imagine that things have become more challenging for users with vision impairments to navigate. There are built in readers for many platforms, but without the document structures… 🥺

A friend told me downloading pr0n was specially galling with all those pop ups and slow speeds.

Yes and no…

Accessing stuff without https is a security risk unless you trust all the networks. Unless you’ve got a completely safe OS/browser without any exploits, in which case welcome to the Security Friday column. And it’s a privacy risk.

Forms pretty much mandate JS to prevent spam, which is unfortunate but better than no forms or spam filled sites.

But there’s no excuse for sites not using basic HTML markup. Those drag-drop builders leave a horrific mess of nested divs and stuff. And often rely on the latest features.

Though given how unsafe any not-latest browser is, only supporting the latest isn’t as big a deal as it used to be. If your 6-month old version of chrome doesn’t work for a website, upgrade it already before it gets exploited.

“Accessing stuff without https is a security risk unless you trust all the networks.

Unless you’ve got a completely safe OS/browser without any exploits, in which case welcome to the Security Friday column.

And it’s a privacy risk.”

Arachne on DOS? Or MicroWeb 2? 🙂 It works surprisingly well.

If there only wasn’t HTTPS, which is such a power-hungry behemoth.

Not even the power of a whole dozen C64s is capable of handling HTTPS.

Even NeoCities now requires HTTPS, what a shame!

Because the only workaround is using an external proxy for accessing HTTPS servers, which might not be trustworthy.

The forced HTTPS thus causes issues on platforms that had no issue before, when HTTP was still widely supported as a fallback.

HTTPS is as much of a cure as IPv6 is, I’d say. ;)

“Accessing stuff without https is a security risk unless you trust all the networks.”

Alright! But why do I need encryption when I’m visiting fan sites of Harry Potter, Star Wars or something along these lines?

These are harmless things and can be tracked no problem, I don’t mind a profile of me being made.

Fans of such things used to have their personal interests being listed on their personal homepage and everyone got to know.

Nowadays, they do it on anti-social networks.

No HTTPS can help here.

Back in the day, there was a book called a “telephone book” with all sorts of personal Information, such as names and addresses.

I do understand that things like on-line banking do have a need for HTTPS, but even this used to be a non-issue.

Way back in the 80s, 90s and early 2000s people used online services for things like that,

which couldn’t be hacked in the same way as the internet.

AOL and CompuServe had features for booking a flight, home banking etc.

Here where I live, T-Online Classic was a thing. It allowed for a non-internet connection to the bank computer.

The main problem is bad guys hijacking a machine in the middle and replacing data. You think that the obiwankenobi.jpg is perfectly sane but without encryption it can be replaced with a version that has a little package attached that your imageloader.dll/.so will accidentally execute.

The modern web is a place full of back-behind openings and not the nice place it was in the 90s anymore.

But then that’s a problem of bad browser/OS architecture, rather, isn’t it? 🤨

There are MIME types that are supposed to tell the browser what the type of file it is.

The file extension itself should be meaningless,

the image processing code should check for header and footer/end-of-file section, too.
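A minimal sketch of that header and end-of-file check, assuming only JPEG and PNG matter. The function names are made up for illustration, and a real decoder would have to validate far more than these markers; this only shows the idea of trusting the bytes rather than the file name.

```python
from typing import Optional

# Well-known start and end markers for two common image formats.
PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"
PNG_TRAILER = b"\x00\x00\x00\x00IEND\xae\x42\x60\x82"  # empty IEND chunk plus its CRC
JPEG_START = b"\xff\xd8"  # SOI (start of image) marker
JPEG_END = b"\xff\xd9"    # EOI (end of image) marker


def sniff_image(data: bytes) -> Optional[str]:
    """Return a MIME type based on the content alone, ignoring any file name."""
    if data.startswith(PNG_SIGNATURE) and data.endswith(PNG_TRAILER):
        return "image/png"
    if data.startswith(JPEG_START) and data.endswith(JPEG_END):
        return "image/jpeg"
    return None  # unknown, truncated, or with something appended


def matches_declared_type(data: bytes, declared_mime: str) -> bool:
    """True only if the declared Content-Type agrees with what the bytes say."""
    return sniff_image(data) == declared_mime
```

With this, an "obiwankenobi.jpg" that has extra bytes tacked on after the end marker is rejected before any decoder sees it. It does nothing against a payload hidden inside an otherwise well-formed file.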

Buffer overflow exploits are so 2000, also.

Things like NX-Bit/DEP should help to separate data from active program code.

Things like WMF (Windows Meta File), too.

Heck, even Windows 3.1x had protection based on segmentation (in Standard-Mode, at least).

And the internet never really was a safe place, I think. 🙁

My generation always knew that, at least.

The internet was like a long, dark alley or a lone highway. 😣

I just think that all the encryption has a reverse effect, it encourages hacking.

Just like copy-protection schemes in the 80s on C64/Amiga took the interest of young crackers.

They saw it as a challenge to crack games and applications.

Without the copy-protection, the level of piracy might have been much lower for a given application/game.

Sometimes it’s just funny. Does anyone remember upsidedownternet?

No. Use a browser just out of support. The old security holes are fixed, and the new security holes are in the parts not implemented yet in your version.

This is why I like the Japanese speaking side of the internet. Everything is plain HTML+CSS. Everything is in columns. JavaScript is indeed used, but not to add visual clutter.

All information is available on the page at all times; your eyes and attention are the only limiting factor.

It’s simple, and lovely.

+1

It’s comparable to how the western web used to be in 2005.

Before the iPhone had introduced the dumbed-down mobile web.

In Japan, the flip-phones also didn’t die out so easily, I think.

Things like IrDA remained important to exchange digital business cards.

To this day, I wonder why our western web had to end like this.

For mobile web, we already had WAP and i-mode technologies.

Why was it needed to strip real information (text!) from normal, desktop-oriented web and turn it into this mess?

because the web can be used to do things now, not just read about doing them.

“can be used to do things now”

Woah! That’s so deep, man! 🤯

Dunno about you, but we had forums in the 90s and they worked just fine. Even web chat that had a no JS fallback was there for everyone to enjoy.

My first real experience with the web was at Karstadt Essen when they opened their Cyber Cafe somewhere in 1995 and they had a chat system that worked without anything fancy in the browser.

And IoT? Still no JS needed, a link that tells some CGI script running on your Pi to turn on a lamp will work just fine.
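A rough sketch of the lamp idea, with assumptions spelled out: it uses Python's built-in http.server as a stand-in for a real CGI setup, the /lamp/on and /lamp/off paths and port 8000 are invented, and the "lamp" is just a dictionary where a real Pi would drive a GPIO pin.

```python
from http.server import BaseHTTPRequestHandler, HTTPServer

# Stand-in for a real GPIO pin; on a Pi this would switch a relay instead.
lamp = {"on": False}


def handle_path(path: str) -> str:
    """Apply the action named in the URL and return a plain HTML page."""
    if path == "/lamp/on":
        lamp["on"] = True
    elif path == "/lamp/off":
        lamp["on"] = False
    state = "on" if lamp["on"] else "off"
    return (
        "<html><body>"
        f"<p>Lamp is {state}.</p>"
        '<p><a href="/lamp/on">Turn on</a> | <a href="/lamp/off">Turn off</a></p>'
        "</body></html>"
    )


class LampHandler(BaseHTTPRequestHandler):
    def do_GET(self) -> None:
        # Every click on a link is an ordinary GET; no script runs in the browser.
        body = handle_path(self.path).encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "text/html; charset=utf-8")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


def serve(port: int = 8000) -> None:
    """Block forever, serving the lamp page on the given port."""
    HTTPServer(("", port), LampHandler).serve_forever()
```

Calling serve() and opening the page in Lynx or any other browser gives two links that toggle the lamp. Changing state on a plain GET is the old-school shortcut the comment describes; a form with a POST would be the tidier variant.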

IMHO, forums/BBSs and chats don’t REALLY need fancy-schmancy frameworks to run. Extended ASCII (or Unicode) communications are still doable. Literally, one can forum/chat through the command line just fine (well, with some initial learning curve that’s not exactly user-friendly).

I am kinda puzzled why glorified forums (Facebook, etc.) don’t have plain text equivalents that can work through Curve, since their mobile versions are not much better (relatively speaking) than their PC versions. I’d imagine one can probably fake a PC and extract ASCII out of the bitstream, but that’s a bit of reengineering, and I suspect they’ll just shut down what they don’t like.

IoT picked up the slack quite nicely – sometimes one just DOESN’T NEED all the uber-extra to slosh around, say, weather data (or local/regional news, or market data, anything that can be plain/extended ASCII). Meshtastic got the right idea – I’ll even go as far as call it “TRUE wireless low-speed internet”, since it kinda sorta follows the same paradigm (packets forwarded through multiple independent gateways – yes, Vint Cerf and Co, who, btw, also invented what they called “Galactic Internet” to talk to rovers on Mars). I’d also say we are indirectly into re-inventing regional/local CB channels, because the technology is there and Meshtastic is kinda sorta the modern equivalent of the CB channels of the past.

So, in short, if it is not banking or private, there is no reason why the data has to be drowned in add-ons making it hefty to push through. As mentioned, weather feeds, local news, rumors and market data, public chat, etc – all that can easily fly through Meshtastic and relieve them internets from all the extras that usually get attached to it.

What’s missing now is hundreds of public-use, low-orbit, tax-funded gateways that would extend Meshtastic to the municipal/state level, but I am an optimist, it may just happen. 6G, so to speak, and not only for the profit-makers (for-profit comm providers). I am also looking forward to when my quantum link 7G (I hope) connects to any spot on Earth without expensive satellites, which may happen at about the same time, too : – ]

Breaking changes are breaking changes fam. Yes backwards compatibility is nice, but it was far more important when browser updates were slow and took hours to download over 56k. Consider this: how many hours of labor has the decision to make ethernet RJ45 wiring backwards compatible cost? 25 years of the whole planet untwisting that silly green pair and trying to get it to lay on either side of the blue….might have been necessary to drive adoption in the 80s, but nobody has used that particular “feature” since.

Dude, I was there back then, in 1996.

The websites and software were much smaller, in the KB (Kilobyte) range.

Things didn’t take hours on average, but just a couple of minutes, even on dial-up.

Except for video files or MP3, Real Audio etc.

Your typical web storage was 2MB in total. A bit more than a 1.44 MB floppy.

Yes, that’s right. Private homepages did fit on a single floppy at the time!

Late 90s programs such as MS FrontPage even showed an estimate of how long a website would take to load at different speeds.

You could check how long it loads with 14k4, 28k8, 33k6 and 56k modems.
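The kind of estimate meant here is simple arithmetic. The sketch below is not FrontPage's actual formula (which I don't know), just the ideal case: page size in bits divided by the nominal modem speed, ignoring latency, protocol overhead and line noise. The function names are made up.

```python
# Nominal line speeds in bits per second for the modems listed above.
MODEM_SPEEDS_BPS = {"14k4": 14_400, "28k8": 28_800, "33k6": 33_600, "56k": 56_000}


def load_time_seconds(size_bytes: int, bits_per_second: int) -> float:
    """Ideal transfer time; real dial-up connections were slower than this."""
    return size_bytes * 8 / bits_per_second


def estimate(size_bytes: int) -> dict:
    """Best-case load time in seconds, rounded to 0.1 s, for each modem speed."""
    return {
        name: round(load_time_seconds(size_bytes, bps), 1)
        for name, bps in MODEM_SPEEDS_BPS.items()
    }


# A 50 KB page (51,200 bytes), images included:
# estimate(51_200) -> {'14k4': 28.4, '28k8': 14.2, '33k6': 12.2, '56k': 7.3}
```

So a whole page in the tens-of-kilobytes range sits well under a minute even on the slowest modem in the list, which is where the "couple of minutes, not hours" figure comes from.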

Let’s remember: People back then weren’t stupid, they knew what they were doing and saw the limitations.

They couldn’t know, however, that the dystopian world of Idiocracy would eventually be coming.

Because the film wasn’t even made back then.

PS: There were also T1 lines and ISDN in the developed world.

ISDN had full 64KBit/s or 128KBit/s (two phone lines combined). In the 90s.

“how many hours of labor has the decision to make ethernet RJ45 wiring backwards compatible cost? ”

None. Because the transceivers in the network cards are smart.

They can figure out if it’s a normal LAN cable (straight) or a crossover-cable (null-modem).

Also, all wires are being useful. Higher bandwidths need more wires (and better shielding).

So the “obsolete” wires of the early 90s, of an earlier standard, aren’t really obsolete. It’s just that more pairs are being used now.

https://en.wikipedia.org/wiki/Ethernet_over_twisted_pair#Cabling

What’s bad though: modern network cards no longer have an AUI port.

It was useful to “convert” an existing network device so it can be used in a different topology.

https://en.wikipedia.org/wiki/Attachment_Unit_Interface

There are various perspectives on the topic of graceful degradation, but here is my viewpoint:

Initially, web layouts relied on table-based structures, which many would agree were not an ideal solution. These were largely replaced with the advent of Cascading Style Sheets (CSS) and HTML 4, allowing for more flexible and semantically meaningful page design. The introduction of <div> elements enabled developers to segment content into distinct areas such as navigation and main content. This semantic structure improved accessibility, allowing screen readers and other assistive technologies to efficiently navigate to and interpret the important parts of a webpage, thereby enhancing the user experience.

However, in recent years, the proliferation of JavaScript frameworks has complicated accessibility support, sometimes hindering assistive technologies’ ability to accurately interpret and present content to users. I believe accessibility should be a top priority in web development. While innovative and engaging features are valuable, they should not come at the expense of accessibility and usability for all users.

What kills me is the use of JavaScript to submit basic forms such as checking out. This code then fails because I am blocking tracking by Facebook, etc. It is a common practice it seems to report when you buy something to a bunch of 3rd parties, and if you block that, well we won’t sell to you.

In 1999, in the middle of a push to make all state websites accessible, mandated by the current governor, a lead programmer snarkily said to me, the ISO and webmaster, “I don’t care if blind people use my program!” Unfortunately for him, I was in a position to enforce said rules, as agency heads were bugged about the deadline every week. I showed the coder reluctantly assigned to the task the very minor changes needed and she completed them and we tested them for accessibility in one morning. That was the entire program interface and subsite. It annoyed him to no end, as he had apparently planned on blaming “Accessibility” for his delay in implementation. The coder is now the department head. As my brother would say, “Heh heh”

His attitude, however, has spread into businesses who forget that studies show that the users of accessibility have cash to spend, and are highly loyal.

No profit in doing the right thing.

I’ve come to the conclusion that no matter how fast we make CPUs or how far we raise physical RAM limits, we will always continue to bloat everything to the point that we are always just a hair behind the resource wall. “Wow! PCs are standard with 128 GB of RAM now! I’m going to put all kinds of bling and flashy useless stuff in my programs now!”. That puts you right back where you started. One step forward. One step back. Never gaining any real advantage. Imagine how fast our systems would be if we created software modeled after 1990s limitations with multi-core CPUs and gig after gig of RAM. We used to develop programs to take advantage of 4 MB (megabytes) of RAM and CPUs under 100 MHz. We were careful about how much bloat we put into our programs. But as I look back, it seems that every time the resource limits were raised, we fattened up our programs so that any advantage we gained by the increase in CPU and RAM was once again degraded. It’s a losing battle sometimes. Imagine how fast and responsive Windows 98SE would be on modern hardware. Not saying we should go back to Win98, but I think as developers we should start thinking more about performance and usability over flash and bling.

That’s ‘Gates’s Law’.

The reciprocal of ‘Moore’s Law’.

Moore’s law is dead and gone.

The first 1 gig processor was 2000.

We should be rocking 4.5 THz.

(Yes, I know, that’s not what Moore’s law actually says.)
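Purely as arithmetic, and taking the tongue-in-cheek premise at face value: the sketch below assumes clock speed doubles every two years starting from 1 GHz in 2000. The two-year period is my assumption, not something stated above, and as already conceded, Moore's law is about transistor counts, not clock speed.

```python
import math


def years_to_reach(start_hz: float, target_hz: float, doubling_years: float) -> float:
    """Years needed to grow from start_hz to target_hz at a fixed doubling period."""
    doublings = math.log2(target_hz / start_hz)
    return doublings * doubling_years


# From 1 GHz to 4.5 THz is a factor of 4500, a little over 12 doublings.
years = years_to_reach(1e9, 4.5e12, doubling_years=2.0)
print(f"{years:.1f} years after 2000")
```

That comes out at roughly 24 years, so the 4.5 THz figure is about what a naive two-year doubling would predict for the mid-2020s.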

Anyhow, ‘Gates’s Law’ will not die.

The only thing keeping our system from ‘running like it’s 1975’ is the sub Moore’s law rate of ongoing performance gain.

Just wait until the new OS written in JS becomes standard…it will be great!

An ever-increasing amount of software is built as web apps instead of native code, which obviously saves money during development; multi-platform development is never easy.

However, we have already reached a point where seemingly “harmless” software from reckless enough, mostly large companies can take up a half or rather nearly a gigabyte of real memory. The new basic unit… Examples include not only the Electron-based monstrosities but “simple” instant messaging apps and text editors as well. Anything.

I don’t know how else they could be developed to remain affordable and without lacking functionality, but if this trend continues, you’ll need to purchase additional RAM before installing any software.

Some frameworks claim to use so little memory that they are no worse than normal applications. I tried a couple of them, but, of course, this was only true for “Hello world”-level applications.

I think users want functionality. You cannot do a chat in pure HTML. Web is developing.

And encryption, it’s good to have even for downloading public data, because site hijacking is a thing.

I also bang my head because of wasting bandwidth, but it’s mostly because of poor website design, not necessarily because we have scripts on sites.

(E.g. you download the scripts one time, and after that less data is transferred, because the site does not download the whole page again, just what you send/receive in the page.)
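Whether that trade pays off depends on how heavy the scripts are compared with the page. A back-of-the-envelope sketch, with entirely hypothetical sizes, of how many page views it takes before the scripted site has transferred less data in total than one that reloads the full page every time:

```python
from typing import Optional


def break_even_views(script_bytes: int, full_page_bytes: int, update_bytes: int) -> Optional[int]:
    """Smallest number of views n for which
    script_bytes + n * update_bytes < n * full_page_bytes.
    Returns None if the scripted updates are never smaller than a full reload.
    """
    saving_per_view = full_page_bytes - update_bytes
    if saving_per_view <= 0:
        return None
    # n must be strictly greater than script_bytes / saving_per_view.
    return script_bytes // saving_per_view + 1


# Hypothetical numbers: 500 kB of scripts, a 200 kB full page, 5 kB per update.
print(break_even_views(500_000, 200_000, 5_000))  # prints 3
```

With these made-up numbers the scripts pay for themselves on the third view. Bump the script bundle to several megabytes and the break-even point moves out accordingly, which is the "poor website design" case.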

//You cannot do a chat in pure HTML.

Says who? Static HTML chat with zero Javascript was very much available as a fallback in the 90s.

Tell us you weren’t using the internet in the 1990s without saying you weren’t using the internet in the 1990s.
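For anyone who missed that era, a minimal sketch of one common way to do it: the server renders the message log as plain HTML and a meta refresh tag makes the browser re-fetch it every few seconds. This is not a reconstruction of any particular 90s chat; the /say endpoint, the field names and the five-second interval are all made up, and the HTTP plumbing is left out.

```python
from html import escape

# In-memory message log as (nick, text) pairs; a real service would persist this.
messages = []


def post_message(nick: str, text: str) -> None:
    """What the server would do when the form below is submitted."""
    messages.append((nick.strip() or "anon", text.strip()))


def render_log(refresh_seconds: int = 5) -> str:
    """Message page: the browser reloads it on its own, no script involved."""
    rows = "\n".join(
        f"<li><b>{escape(nick)}</b>: {escape(text)}</li>"
        for nick, text in messages[-50:]  # only the most recent messages
    )
    return (
        "<!DOCTYPE html>\n<html><head>"
        f'<meta http-equiv="refresh" content="{refresh_seconds}">'
        "<title>Chat</title></head>\n"
        f"<body><ul>\n{rows}\n</ul></body></html>"
    )


def render_form() -> str:
    """Input page: an ordinary form posting to a hypothetical /say endpoint."""
    return (
        "<!DOCTYPE html>\n<html><body>"
        '<form method="post" action="/say">'
        '<input name="nick" size="10"> <input name="text" size="60"> '
        '<input type="submit" value="Say">'
        "</form></body></html>"
    )
```

The two pages would sit in separate frames, so that the refreshing log does not wipe whatever is being typed in the form.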