From the very dawn of the personal computing era, the PC and Apple platforms have gone in very different directions. IBM compatibles surged in popularity, while Apple was able to guard the Macintosh more closely from imitators wanting to duplicate its hardware and run its software.

Things changed when Apple announced in 2005 that it would hop aboard the x86 bandwagon. Soon enough, the Hackintosh was born. It was difficult, yet possible, to run Apple's operating system on your own computer, built with whatever PC parts your heart desired.

Now, though, the Hackintosh era is coming to an end. With the transition to Apple Silicon all but complete, macOS will leave the Intel world behind once more.

End Of An Era

The 36th Worldwide Developers Conference took place in June 2025, and with it came the announcement of macOS Tahoe. The latest version of Apple’s full-fat operating system will offer more interface customization, improved search features, and an attractive new ‘Liquid Glass’ design language. More critically, however, it will also be the last version of macOS to support Apple’s now-aging line of x86-based computers.

The latest OS will support Apple Silicon machines as well as a short list of older Intel Macs. If you’ve got anything with an M1 or newer, you’re on board. If your Mac is Intel-based, though, you might be out of luck. Tahoe will run on the 16-inch MacBook Pro from 2019 and the 13-inch MacBook Pro from 2020, but only the model with four Thunderbolt 3 ports. It will also support iMacs and Mac minis from 2020 or later. Mac Pro owners will need a 2019 model or later, and Mac Studio owners a 2022 model or later.

In other words, after Tahoe, Apple will stop releasing versions of its operating system for x86 systems. Going forward, it will only build macOS for ARM-based Apple Silicon machines.

How It Was Done

Of course, it’s worth remembering that Apple never wanted random PC builders to run macOS in the first place. Yes, it will eventually stop making an x86 version of its operating system, but it had already gone to great lengths to stop macOS from running on unauthorized hardware. The dream of a Hackintosh was to build a powerful computer on the cheap, without having to pay Apple’s exorbitant prices for things like hard drive, CPU, and memory upgrades. However, you always had to jump through hoops, using hacks to fool macOS into running on a computer that Apple never built.

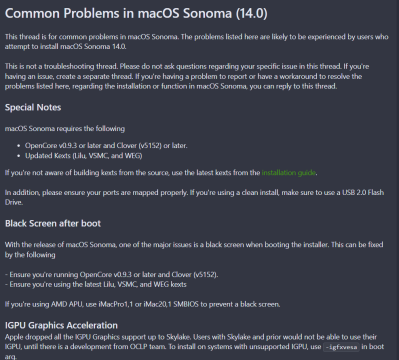

Installing macOS on a PC takes some doing.

Getting a Hackintosh running generally involved pulling down special patches crafted by a dedicated community of hackers. Soon after Apple started building x86 machines, hackers rushed to circumvent the security features in what was then called Mac OS X, allowing it to run on machines Apple had not approved. The first patches landed just over a month after the first x86 Macs. Each subsequent Apple update to OS X locked things down further, only for the community to release new patches unlocking the operating system in quick succession. Sometimes this involved emulating the EFI firmware that contemporary Macs used in place of a traditional PC’s BIOS. Sometimes it involved tweaking the kernel to stick to older SSE2 instructions when Apple’s use of SSE3 instructions stopped the operating system from running on older hardware. Depending on the precise machine you were building, and the version of OS X or macOS you hoped to run, you’d use different patches or hacks to get your machine booting, installing, and running the operating system.

Running a Hackintosh often involved dealing with limitations. Apple’s operating system was never intended to run on just any hardware, after all. Typical hurdles included having to use specific GPUs or WiFi cards, for example, since broad support for the wide range of PC parts just wasn’t there. Similarly, sometimes certain motherboards wouldn’t work, or would require specific workarounds to make Apple’s operating system happy in a particularly unfamiliar environment.

Of course, you can still build a Hackintosh today. Instructions exist for installing and running macOS Sequoia (macOS 15), macOS Sonoma (macOS 14), as well as a whole host of earlier versions all the way back to when it was still called Mac OS X. When macOS Tahoe drops later this year, the community will likely work to make the x86 version run on any old PC hardware. Beyond that, though, the story will end, as Apple continues to walk farther into its ARM-powered future.

Ultimately, what the Hackintosh offered was choice. It wasn’t convenient, but if you were in love with macOS, it let you do what Apple said was verboten. You didn’t have to pay for expensive first-party parts, and you could build your machine in the manner to which you were accustomed. You could have your cake and eat it too, which is to say that you could run the Mac version of Photoshop, because that apparently mattered to some people. Now all that’s over, so if you love weird modifier keys on your keyboard and a sleek, glassy operating system, you’ll have to pay the big bucks for Apple hardware again. The Hackintosh is dead. Long live Apple Silicon, or so it goes.

Add that to the high prices of Apple hardware and the result is a shrinking macOS user base. Redmond and Antarctica welcome the new users.

Apple Silicon has led to much cheaper Macs. I have the M4 mini ($600) and a MacBook Air 13 ($850) and they both work very well. The latter is my work machine and gets subjected to the rigors of professional software development.

Any Wintel PC you could find below those price points is probably garbage not worth owning. As someone who ran Windows for decades – you get what you pay for on that platform as well.

Lol “cheaper macs” is correct. My almost 3-year-old 7950x is still 30% faster than the M4 Max 16 core. With the full system built for $1400, it beats the pants off the Mac Studio. The M3 Ultra is about 10% faster…for nearly 3x the price.

if you’re willing to shell out for a ryzen 9 7950x then the cost of mac hardware doesn’t seem exorbitant at all :)

from my perspective, mac hardware is expensive but my “super computer” is only a ryzen 7 3700x

wow! i had no idea apple prices had actually come down. i last bought an ibook in 2004, when $1000 for a laptop was almost the cheapest laptop, and the specs couldn’t be beat. but i’ve been using sub-$300 laptops for more than a decade now, so apple has been going in the wrong direction imo.

$600 still seems like a lot to spend on a nuc, but man the m4 is one fast chip if you need the performance!! if cpubenchmark.net is to be believed, it’s 10x as powerful as my ancient celeron “pi killer” nuc!

Other than the fact you really need to be an SMD rework master and be able to source suitable chips to actually get RAM and storage at a sane price…

The base model price does look good, but it really doesn’t once you get into the sort of specs many folks will actually require…

16GB RAM in the base model isn’t enough for you?

16GB ought to be enough for anybody.

16GB is minimum for current macOS versions, I think.

The short-lived 8GB models suffered from worn SSDs, I read (through swapping).

32GB makes sense if Windows is run via Parallels Desktop (8GB is the default value of allocated RAM for Windows VM).

Of course it’s not.

Not just the RAM, which from my understanding is very much the bare minimum to actually handle their OS, it’s also the storage – 256GB sounds like a lot to some folks, but it really isn’t adequate for so many modern file sizes. One of the core things folks seem to love Apple for is audio/visual production, and all that RAW audio and footage at today’s pretty standard resolutions will fill it in no time…

And the cost of upgrading to more reasonable sizes is bonkers – there’s at least an 8x markup on the parts needed to turn that base system into an actually useful system for many users.

Also no, 16GB of RAM wouldn’t be enough for me now, especially if it’s ‘unified’, as I frequently do things like run VMs that will eat a whole GB or two each (so much better than running many, many systems), and the graphics memory is most likely going to eat 3-4GB of that system RAM at a minimum (and with how potent the GPU elements are, I’d not be shocked if you need to give ’em 8GB+ to really make good use of them). Also I’m a bit of a tab fiend, as it’s nice to have your references all set up in familiar places to come back to, and that does come with a bit of memory footprint. All meaning the system is going to have very little left for itself…

This isn’t 2010.

If you use media production applications, it isn’t, and there are many other cases to consider for professionals using these machines.

– Video production should be in ram as much as possible on a system with non-replaceable storage. Modern video compression benefits hugely from GPU-accelerated compression too.

– If you do anything related to production 3D you’ll need at least half that ram for scene display and rendering, and 8GB isn’t much for asset heavy work.

– Anyone doing mundane Windows work will need a VM with a few GB of ram and at least some vram allocation, requirements go up a lot for a number of use cases.

– Developers will likely run multiple VMs with system ram allocations dependent on the task, etc.

Funny enough, you can stress these systems readily in the same ways with just web browsing due to the mess sites have become readily consuming 8gb of ram and handling background ads and hd video streams on the GPU. I’ve managed to accidentally crash OSX with only Safari or Chrome running, and no more than 20-30 tabs.

The only way around this is to sacrifice your irreplaceable (without surgery) SSD. It’s no wonder people are working hard to make aftermarket mods viable, it’s not like apple will ever repair your machine if real damage occurs anyway.

Apple silicon had NOTHING to do with the price drop. They simply decided to reduce the gouging they’d been indulging in as Macs are not quite the status symbol they had been.

Bahahaha.

They haven’t; Apple Silicon is cheap to produce compared to buying processors from Intel.

At least initially it wasn’t; there were plenty of indications the M1 was sold under cost. Not like we will ever get accurate numbers, so whatever I guess.

Note: that unified memory is not cheap die space.

Source?

I agree Apple’s ARM silicon is light years ahead of Intel. Then you add windows 11 with all its bloat and features that nobody asked for or wants. These days Redmond ain’t welcoming new users. They are losing market share and laying off workers en masse. Intel is currently laying off 50 percent of their entire work force. That tells you something. Microsoft, in a desperate attempt to catch up, is shoving Snapdragons into PCs with dismal results. Compatibility issues galore. Intel missed the ARM boat many years ago and they will never catch up. They have lost tons of market share and I doubt they will ever recover. If they are serious about regaining market share they will need to reverse course on their terrible windows 11 OS. For many, including myself, windows 10 is the end of the road.

I remember similar sentiments when Microsoft migrated from Win XP. So, is the present situation the apocalypse? Stay tuned.

Yea I saw the Steam hardware survey today, definite downward trend in OSX

Apple’s products were reasonably price-competitive. Apple didn’t compete at the low end. They never made cheap garbage. That’s why they’ve always been perceived as expensive.

But they often made expensive garbage, lol!

That’s marketing, they have made both cheap and expensive garbage. Often customer reports are buried in their forums, but entire hardware generations are notorious for various hardware flaws, and I say that as someone who generally liked most of their hardware implementations.

The “high” prices bothered the user base the least, I think.

Generally speaking, the user base rather wants something that doesn’t cause headaches, just works.

It was more the interest in the ecosystem, the software etc that mattered.

In the 80s, some Mac power users owned an Amiga/Atari ST and ran shrink-wrapped Mac software with the help of Aladin, Magic Sac, Spectre 128/GCR etc.

In the 90s, PowerPC-based Mac clones were a thing.

But not necessarily because they were “cheap”, but because of expandability, standardized chassis and high performance.

When the Intel Hackintoshes appeared, the ability to run existing PowerPC-based software mattered the most (with the help of Rosetta).

There’s a reason that Mac OS X 10.4 “Tiger” and Mac OS X 10.6 “Snow Leopard” (aka Snowy) had been so popular for so long.

Tiger on PowerPC-based Macs supported the “Classic Environment” (a seamless Mac OS 9.x VM), while Snow Leopard was the last to have Rosetta.

Carbon applications with PowerPC instructions could run on Mac OS 8/9 and X (up to Snow Leopard).

Carbon API itself was supported for a few more years.

Hackintosh users were never an important part of the macOS userbase. So small as to be indistinguishable from zero. It will have no effect at all.

Apple’s “Big Brother”-smashing ad of 1984 seems a bit dusty now that they have become the walled-garden monster that they putatively battled.

There have been and will always be people willing to spend money to avoid the complexities of the technology they use, but that number can wither overnight in the face of broad adoption of actual customer service, good user experience, and intuitive and reliable operation. Unfortunately the big three OS vendors for consumer end-use (Apple, MS, & Google*) seem to have taken the path of Doctorow’s enshittification in pursuit of monetizing those same consumers, so Apple will prevail for the moment.

*I’m excluding Linux here because despite acolytes’ claims, it’s not particularly uniform, reliable, nor user-friendly for someone who isn’t fascinated by working within the operating system rather than applications. This can change as well, but there doesn’t seem to be a profit center driving it.

I’m willing to pay extra for good hardware that works, but I’m not willing to pay a subscription for it. I only want to buy it once. Thus, at least currently, I’m relegated to old hardware, old operating systems, and piracy.

“old” hardware might be a 7840u 8 core with an iGPU that trades blows with discrete GPUs these days. Not a terrible place to be, especially if it suits your needs and is affordable.

I’m finding I do a lot of work on an 11th-gen Intel i5 X1 Carbon though, even though I have an AMD T14 6850u and T16 7840u (also watch out for the 8840u, it’s just a renamed 7840u).

So Lenovo T, P or X class is high quality. E class, L class, ‘Ideapad’ and Legion are cheesy.

Exactly…have a multi boot system for the OS’s!!

Linux is user friendly and ‘very’ reliable. ‘Not uniform’ DE isn’t a ‘negative’ because ‘everyone’ has their own style of workflow. Mine works well with KDE/Cinnamon style. Others with Gnome or something else. Win Win. Even my computer illiterate dad is comfortable with it (KUbuntu LTS). And it runs every application that I want/need to use. And I don’t ‘work’ with the operating system in general. I just use the apps to get the job done. Of course as a programmer, I do some low level stuff with Linux, but that isn’t a necessity to use the system (as well as Windoze or Apple).

Don’t see the draw to Apple. And since they’ve gone back to a ‘closed’ system, even more reason to not buy their systems.

The draw for me was having a UNIX-like OS with proprietary software support. I’ve been a Linux user since 2007, and Hackintoshing quickly became a hobby of mine. OS X really seemed like the best of both worlds, and it’s why I inevitably bought a MBP in 2015. That said, after El Capitan, Apple stopped respecting users as users, and started putting up guardrails (i.e., SIP) that made it more difficult to do low-level system administration. I ended up going back to Linux in 2018 when the buzz around Proton started up, and I don’t see myself leaving any time soon. Things have come a long way since 2007, and while I may not have the support I want, I do now have the knowledge to work around that.

Let’s not paint roses here. The only time linux is reliable is if you have the exact same hardware that the developers used in their testing and you use the OS for the same thing as the developers. Venturing into other avenues than intended, you can kiss both friendliness and reliability goodbye. Again, like apple, linux is a niche environment, windows is the standard.

Linux hardware support is almost universally good, and frequently much, much better than Windoze… Not always, as some device manufacturers are much better at keeping their stuff supported and/or very, very anti-linux, so it’s community reverse engineering to get any function at all, which can be slow. But as a rule, any hardware combination and any hardware you want to throw at it will just work these days (at least in x86 land – ARM platforms can require more manual config work).

Also, the problems of disparate hardware between users and programmers, and weird edge cases, happen on M$’s side about as often, if not more darn frequently! The difference there is the Linux community will often have a fix in hours once they notice and can track the source. But they are not very likely to notice on their own – which means you personally probably have to make some noise. Whereas with such a huge user base, Windoze or the software will eventually be patched to work even if you do nothing in the end – somebody else complained for you.

This is exactly what Apple does with their computers.

The mistake is thinking that Linux should work perfectly on all the crap out there. Often, people complain about Linux on their 200eur Acer Win10 family plastic blob and, when fed up, don’t mind spending 6x more on an entry-level Mac and praise Apple because yes, they control the stack!

do you get it, now?

I think it’s getting repetitive that Linux must constantly be mentioned.

It makes me think of the old comments of toxic Amiga users who verbally attacked the PC platform/PC users out of sheer frustration.

I remember the reader’s letters in 90s computer magazines here.

They apparently couldn’t handle the situation that their beloved platform wasn’t leading on the desktop (anymore).

And then there’s OS/2. ;-)

Yup. But it didn’t “rival” DOS or Windows, but rather unified them.

It was more of an upgrade to DOS/Windows users. Like Windows XP was to Windows 98 users.

OS/2 Warp had the ability to run a real copy of Windows 3.1x, to boot a floppy in a window, and to run Win32 applications via Win32s or ODIN.

In early 90s, Amiga users even considered switching to OS/2, because the Microsoft alternatives didn’t suit them.

Nowadays, OS/2 can run ported *nix applications, too. There’s GCC and other GNU tools.

The OS is working silently behind the scenes, basically.

OS/2 users these days don’t brag about OS/2. Not that I know of, at least.

Another cool “OS” (graphical environment, rather) used to be PC GEOS:

GeoWorks, Breadbox Ensemble, New Deal Office etc.

Runs on DOS, but is very lightweight. Was popular among users of low-end PCs in the 90s, also because it shipped with a complete office suite.

A Turbo XT with a V20/V30, EMS and VGA basically ran it about as well as a Pentium 1 ran Windows 95.

Now It’s Open Sausage, has a small community, has Freeware.

It can even play sound and use networking.

PC GEOS? You should have seen the original on a C64.

It was doing a Mac-style GUI and office suite in a place I never expected to see one

I really liked OS/2 and touted it at my company at the time… but my company was ‘stuck’ on Windows and that is where it stayed. In hindsight, of course, staying with Windows was the better choice for selling our software solutions… Later I wanted them to move to Linux for clients, but that didn’t work out either. Too much inertia on using Windows… But I did get them on a Linux server, which saved us a lot of money and maintenance costs.

Hi, my apologies (seriously). I can feel your disappointment.

Where I live, Microsoft used to dominate software industry and very few made the switch.

OS/2 was an elegant workaround for so many reasons.

Could multitask existing DOS applications very well (timer emulation, VGA emulation/pass-through, memory options etc).

Could multitask Windows applications preemptively via separate Windows 3.1x instances (with direct-hardware access support, *.DRV drivers worked)

Had a built-in HDD cache

Supported the HPFS filesystem (comparable to NTFS)

Unfortunately, many users used to DOS/Windows 3.x didn’t realize this.

Because, after all, if you’re used to working with one application at a time, then nothing seems different.

Except that OS/2 appears to be big, sluggish and ugly to you.

But once you’ve been dealing with background tasks, such as fax software sending out a batch of files or a file transfer running in the background, the difference becomes clear.

That’s why power users or Amiga users had used OS/2.

If you have your raytracing applications running or a MOD player running, then Windows 3.1x was pretty much locked for the time being.

(The fine MOD4WIN player even had resorted to use a helper VXD to make multitasking/background playing possible on Windows 3.1x.)

And that’s why I, a Windows 3.1 user at the time, really liked the alternative OSes.

OS/2, NT or Unix+Wabi allowed running Windows 3.1x applications in a much smoother environment than real Windows 3.1x did.

And that was great, because Windows 3.x had excellent integrated development tools such as Visual FoxPro, Visual Basic, Delphi, CA dBFast.

Just to name a couple.

These RAD tools (Rapid Application Development) had fueled the shareware scene of early 90s.

You had one-man companies that tried their luck, hobby programmers etc.

Prototyping was a joy at the time, it was painless to create a semi-working “dummy application” in no time.

The days of Windows 3.x were a fascinating time, thanks to Visual Basic 3 or Delphi and Borland Pascal 7 (Windows compiler).

Only, Windows 3.x itself wasn’t exactly the best there was.

And OS/2 had changed this for a few of us, it made using Windows applications less painful.

Only, that 1984 ad wasn’t about the IBM PC being a walled garden (it was, and is, the opposite). They were saying the PC platform was drab and conformist because it was all generic parts, with no overarching vision, and the Mac was a single vertically-integrated gesamtkunstwerk.

Microsoft and Dell run grocery stores; Apple is in the restaurant business. I think a lot of home cooking enthusiasts on the internet could be much calmer if they sat with this thought.

‘Cause I feel like what a lot of people really hate is that there aren’t other Apples to choose from. Like Palm, I mean – but the problem is, I can’t think of a single other example this century

Bobtato, I think your restaurant comparison is very good.

About PC platform vs Mac..

I personally think they were more like uneven siblings, sometimes.

PCs were seen as “tools” or workhorses, or comparable to tractors.

Not most elegant in their design most of time (there are exceptions!), but strong and fixable in a quick&dirty fashion.

On DOS, about anything could be done at the time.

Macs, by comparison, were more on the artistic side and were meant to be easy to use. Like a Porsche 911, if you will.

However, this also had a positive side in the business world. Mac Paint wasn’t everything.

Doing DTP or word-processing in a graphical way was less stressful.

Macs also supported easy networking, so sharing a single printer became a reality early on. Important in an office.

Interestingly, the GEM environment had counterparts to the Mac applications.

GEM Paint, GEM Draw and GEM Write were similar to Mac Paint, Mac Draw and Mac Write.

Big applications like Ventura Publisher or Aldus PageMaker were available on both Mac and PC (GEM, Windows).

So PC and Mac weren’t that contradictory all the time.

There had been software such as Executor to run Mac applications on PC, even.

Or the abandoned Star Trek project (System 7 on a 486 running Novell DOS).

In reverse, emulating a PC on the Macintosh wasn’t uncommon, either.

In early 90s, solutions such as SoftPC, SoftAT, SoftWindows etc. appeared.

Apple itself released the Macintosh Performa 630 DOS Compatible, for example.

It had a PC daughtercard with a 486 processor, SB16 and VGA, if memory serves.

In the 80s, various Apple II/PC hybrids had existed, too.

They had both an 8088 and a 6502 processor and could boot into Apple DOS or PC-DOS/MS-DOS.

So yeah, there was/is something for everyone. I don’t understand the hard rivalry all the time.

It’s like with Sega vs Nintendo in the 90s. Others argued, I liked both in their own way.

There is no rivalry. Apple is irrelevant to mainstream users.

And Apple tastes good, too!!!

The 1984 ad was saying that the IBM PC was totalitarian and it’s drab, because it’s totalitarian. It still is.

it’s funny that the 1984 ad portrayed PCs as a drab corporate overlord needing to be toppled, and Apple as a tool for combating conformity and asserting originality.

Now Apple is a walled garden controlling and restricting hardware and software options (more so with the iOS App Store than macOS’s Gatekeeper), while PCs offer a wide and diverse market of both hardware and software options.

“while PCs offer a wide and diverse market of both hardware and software options.”

did

Times are changing.

TPM chip requirement, secure boot requirement, removal of CSM (BIOS) and DOS support,

removal of A20 gate (could be worked around; by using V86 memory managers etc),

impending loss of VGA/VBE BIOS on graphics cards,

impending removal of 8086 Real-Mode instructions (AX, BX, CX registers etc)..

The IBM PC platform used to be future proof, open and expandable.

Unrestricted backwards compatibility and natural evolution (with cool hacks) over long time period was the essence of the PC platform.

But intel is now doing more harm than good.

X86S was just the latest abomination, there’s still more to come.

The end of x86 PC platform is inevitable.

“its funny that the 1984 ad related to PCs as a a drab corporate overlord needing to be toppled, and Apple as a tool for combating conformity and asserting originality.”

The 1984 Apple advertisement was a reference to George Orwell’s novel Nineteen Eighty-Four.

At the time, IBM was called “Big Blue” and was a symbol of a behemoth of an old company.

Apple by contrast represented the young start-up company, with young, dynamic and fresh minds that question status quo.

The guys who stand up against “the system” that suppresses everything.

The Macintosh was a symbol for free expression, creativity and so on.

While IBM PC was a machine; something cold, dead, without heart.

Something like that. It must be seen in the context of its day, I think.

Tempest in a teapot. I’ve been using Macs since a 128k running Lotus Jazz as an engineering intern. I’ve heard decades of the “I’ll get one when there’s a clone”, “the OS runs faster on my ST”, and the like. Today, I run Mini vMac on a Linux box, but only to experiment with software configuration before I waste time loading it onto my vintage Mac SE. Obviously, this is a hobby. Real work happens on a last gen Intel Mini.

I’m sure all that’s really at stake here is the hobby. If more than a handful of people are using a Hackintosh for paid work, I’ll slam a door on my da kine.

I can’t speak for everyone, but here in Germany in the late 80s/early 90s, the Atari ST basically played the role of the Macintosh.

The 640×400 mono monitor (SM124) used to be very popular, along with productivity software.

Calamus, GFA Basic, Signum, Degas, Cubase etc.

So since the “Jackintosh” (Atari ST) was so popular, it makes sense that Macintosh applications were run on the Atari ST, too.

There had been more Ataris than Macs, likely.

By the early 90s, Atari STs running the Mac System software through emulation maybe even outnumbered real Macs over here.

The higher-end models ran at 16 MHz, had a Blitter chip, or were upgraded with a 68010 to 68030, so the performance of Ataris perhaps was indeed better than that of a Mac 128k, 512k or Plus.

The resolution of 640×400 was higher than the Mac’s, too, providing more workspace.

The vMac emulator has a 640×480 “hack”, too, to allow a higher resolution.

how is your memory so good? I can’t remember these numbered CPUs or software titles to save my life

Hi, you mean the 680xx upgrade?

There was the PAK68 project, which was published in German c’t magazine in 1987 first time.

It upgrades existing 68000 systems, such as Amiga, Atari or Mac.

Here’s a review for the 68020 version.

It was published in a 1988 issue of ST Computer magazine.

https://www.stcarchiv.de/stc1988/11/pak-68

Other Atari models were faster still: the Mega STE ran a 68000 at 16 MHz, I think, which was still twice as fast as a 7 MHz b/w Macintosh from the 80s, and the Atari TT used a 68030 at 32 MHz.

Last Gen Intel Mini is 14th gen. That’s crazy, 11th and 12th are still plenty fast for most things. I’ve got a NUC8 with 7th (8th?) gen Iris which is still pretty quick. Still bummed the M.2 port didn’t work with a GPU adapter.

This is defeatism because automated binary translation is still an option. What about the kernel, right? Simple, it’s still open source and thus can be replaced with a fresh build. I’m not saying I would bother to build the kernel or translate the applications to x86_64 but I’m saying it’s still entirely possible which is what the “Hackintosh” is all about.

I’m saddened. I recently took advantage of the planned obsolescence of the x86 Mini Mac to pick one up for less than $300 (plus another $300 for the things I added to it).

When it’s no longer eligible for security patches, I’ll be able to convert it to Linux, and then run its original MacOS software inside a Docker sandbox; that way, I’ll be able to isolate it from the wi(l)der world in order to keep it safe.

The thing is, I’m coming to hate e-waste – something both Apple and Microsoft seem to have no problem creating in abundance. Yes, it costs money to continue to support old operating systems with security patches; but without such patches it’s not safe to run such operating systems – which means that the machines that were designed to run such systems either have to be repurposed or end up on the scrap heap.

And those scrap heaps aren’t getting any smaller.

I don’t worship any particular operating system; I’m down to four Windows machines, and one of those is currently migrating to Linux. The other three are a game machine, a travel notebook, and a server to make it easier for me to lock the others down. Other than the Intel Mini Mac, the rest of the machines in my home lab run either Linux or ChromeOS – and the Chrome machines will probably run FydeOS when THEY fall out of support (that, or keep running ChromeOS via Brunch).

Say what you want about ease of use, but Linux at least gives you the means to repurpose old hardware and extend its lifespan another 10-15 years past the point where the commercial OS vendors are willing to continue paying to have that hardware supported. From my perspective, that makes it possible for me to put off the day that I’m going to have to chuck a computer onto the midden heap until it finally drops dead from hardware failure.

It’s too bad we throw away perfectly good machines before their time. Such a waste.

“Say what you want about ease of use, but Linux at least gives you the means to repurpose old hardware and extend its lifespan another 10-15 years past the point where the commercial OS vendors are willing to continue paying to have that hardware supported. ”

The problem with “paying” is that it’s all relative, though. There are different “currencies”, if you will.

You can pay with money (obviously), your personal data or with your time/nerves/stress level.

Getting an old Mac/PC to run longer through Linux seems like a good deal, unless you’re realizing the price is to.. have to deal with Linux. ;)

If you stay away from systemd+Linux and stick to GNU+Linux, things just work.

“…unless you’re realizing the price is to.. have to deal with Linux.” That’s the easy part. Linux is easy to use and reliable. Download the ISO, install, done. Off to being productive, on SBCs, servers, desktops, workstations, and laptops. Simple as 1, 2, 3. Only the ‘user’ makes it hard, as we are creatures of habit… ;)

Uh, depends.. :) macOS is taking users by the hand, while Linux is, err, a bit picky about its user. :)

macOS applications exist as ready-to-use binary packages, often distributed in DMG images.

They can be dragged and dropped into the Applications folder.

It’s not necessary to use a package manager, but possible.

MacPorts and Homebrew allow *nix-style installation of applications via the command line.

PS: I’ve used a Raspberry Pi for over ten years, even as a daily driver for a while.

It was useful for tinkering, I do admit.

Especially for ham radio or retro computing things, with homebrew circuits on a veroboard.

Linux isn’t all rainbows and sunshine, however.

Upgrading a distribution still doesn’t work flawlessly.

Many distributions end up half-broken after upgrading to the next major release.

Not so much on macOS, because the applications are pretty portable/disconnected.

Many can be run from USB pen drive, like in the Windows 3.x/95 days.

They mainly store configuration files in the macOS directories.

Using Linux is not mandatory. One can install ReactOS, FreeBSD or even FreeDOS if the machine is repurposed to run retrogames.

Recalbox can get you from downloading the image to playing in half an hour, and just works. I was really impressed how easy it was when I installed it on my arcade.

How hard is it, really, to run “sudo apt update && sudo apt upgrade -y” once a year? Oh no, Linux so hard. Give me a break.

Why do you think that wasn’t done?

I’ve been using terminals for ages, I was there when Linux distros started in the 90s.

Just because I see and mention the quirks, I must be somewhat unfamiliar with Linux? Right?

Because it’s never Linux that has issues, but the users? Jesus. 😮💨

I’m saddened. I recently took advantage of the planned obsolescence of the x86 Mac Mini to pick one up for less than $300 (plus another $300 for the things I added to it).

When it’s no longer eligible for security patches, I’ll be able to convert it to Linux, and then run its original MacOS software inside a Docker sandbox; that way, I’ll be able to isolate it from the wi(l)der world in order to keep it safe.


I triple-booted OS X, XP and Ubuntu all back in 2009. Intel Macs were a strange but wondrous thing.

I knew a Linux user who didn’t like Windows, but his girlfriend needed it on her Macintosh for work.

So they installed Windows XP via Boot Camp Assistant and were amazed at how painlessly Windows worked afterwards.

The experience was better than on an average real PC.

I tried to triple boot, but when I installed Ubuntu it corrupted the disks. I still have an old (2015) MacBook Air that I dual boot with Windows XP, and it runs like a champ.

“the PC and Apple platforms have gone very different ways”

No, they haven’t. Apple only sells PCs; not mainframes or minicomputers.

When you mean Windows, say Windows.

The use of ‘PC’ to mean an x86 desktop, probably running Windows, became widespread in the early 90s.

In 1981, IBM released an Intel 8088-based computer named the IBM Personal Computer.

In 1983, it released its successor, the IBM Personal Computer XT, running the same processor.

In 1984, it released the IBM PC/AT with the Intel 80286 processor, which could later run Windows 1.0 (released in 1985).

Being the first model both to officially abbreviate Personal Computer to PC AND to run Windows, it cemented “x86 running Windows” as the meaning of PC.

Within a year of the first IBM Personal Computer, Columbia Data Products released the first PC clone, the MPC 1600. The following year, Compaq released the Compaq Portable, widely referred to in the media as a PC clone.

After a few Apples, in 1983 Apple released the Apple Lisa at $9,995, their first computer with a GUI OS.

The next year, 1984, the same year IBM released the PC/AT, Apple released the Macintosh 128K at $2,495.

This pretty much solidified the home computer users choices between owning a PC or a MAC.

The alternative of Linux didn’t arrive until 1991. If we ignore phones, servers, supercomputers and embedded devices and focus only on the global desktop operating system market, Linux holds only a 4.27% share.

Hi! I think there’s some truth in that, I do agree.

In the 80s and 90s, we also said things like “IBM PC” and “(IBM) PC compatible”.

Then there’s the term “WinTel” from the mid-90s, which I think was slang.

Like “WinDOS”, “Windoze” and so on.

By the late 90s, the term “Windows PC” became more commonplace, too.

Which, of course, wasn’t so readily accepted by Linux fans. ;)

Also, it makes a difference whether it’s a Personal Computer™ or a personal computer (lower case).

The former is a marketing term, the latter a description for a certain computer type.

The C64 had been described as a personal computer, too, despite being the most famous 8-Bit home computer in Western history.

(The British used the terms “micro” and “micros” when referring to 8-Bit microcomputers, aka home computers.)

My Sharp MZ 7xx computer of 1983 has “Personal Computer” written on the original box, too.

But here the words are in capitals for stylistic reasons, I think.

Box art of home computers with “personal computer” on it:

https://vintagecomputer.com/commodore-64.html

https://www.nightfallcrew.com/18/05/2012/sharp-mz-721-mz-700-series-boxed/

“This pretty much solidified the home computer users choices between owning a PC or a MAC.”

That explains Commodore’s enormous sales figures, I guess? Sort of, or not at all?

Commodore indeed was popular over here in good old Europe.

The PETs were their business class machines, originally. Their “PCs”, if we will.

Commodore even named itself “CBM” in a reference to IBM.

Then there was the Commodore 8-Bit line of home computers (VIC20, many C64s, C128 etc), the Amigas and the Commodore PC compatibles.

The PC10 was a low-priced IBM PC clone that was popular at Deutsche Bahn, I read.

It sold very well and gave birth to other models.

Atari was also popular over here.

The 8-Bit 400/800 series, the Atari ST/TT line, their PC clones etc.

Technically, both Amiga and Atari ST series were very IBM PC-like.

But users rarely called them PCs; they called them by their names.

Much as Macintosh users still do.

In principle, the C128D was a desktop PC, too.

It looked pretty much like an Amiga A1000 and there was GEOS 128 (GUI).

It also could boot into CP/M Plus, use a mouse etc. Very PC-like.

But hardly anyone I know called it a PC or personal computer.

Compared to a $2,495 Mac or a $4,000 to $6,000 PC/AT, Commodore’s enormous sales figures were simple to explain: at US$595, it was a relatively affordable gift you could buy your kid. The VIC-20 might have sold 2.5 million, the C64 might have sold 12.5 to 17 million, and the C128 might have sold up to 4.5 million, but 24 million together pales in comparison to the 100 million Apple computer users or the 1.5 billion Windows PCs, NOT SOLD but rather IN USE TODAY. Even Linux, which holds only a 4.27% share of the desktop market, has an estimated 33.57 to 39.17 million PCs worldwide today, exceeding the three main COMMODORE models (yeah yeah, “AMIGA COULD HAVE RULED THE WORLD!!!”, I know).

While it enjoyed a little more than a decade of success, Commodore is a footnote in the history of computing. It was a great starter computer that many of us love(d). But I was speaking about the reality of the association of the acronym “PC” with the x86 computer running Windows.

Since 1985 Apple has always adopted proprietary technology and impeded modification. A friend bought one of the first Apple Macs and wanted to add memory. To open the case you needed a special screwdriver and the screws were hidden recessed at bottom of the handle. Never touched Apple since.

The good old days: Apple memory from certified Apple suppliers cost twice the price of the same memory on the Windows side. Makes sense, since MacOS at the time was business-oriented.

My first home computer was an Apple //e I bought in 1983. I loved it. I used it for word processing (AppleWorks) and my home programming projects. The first Macintosh was released the next year and all the cool kids in the local Apple user group migrated to Macs. I had just sunk a ton of money into my //e setup and couldn’t justify the switch. By the time I was ready to upgrade in the late 80s I just decided to buy an IBM PC clone. That’s what we were using at work, and at home they were much cheaper than a Mac. Never bought another Apple machine since. I wonder how different my computing life would have been if I had waited a year to buy my first computer. Not that I regret it, but I can see clearly how circumstance steered me in a particular direction.

Hi! I must say, though, that the Apple II and the Macintosh represented two different sides of Apple Computer, personality-wise.

The Apple II and IIgs were brainchildren of Woz, while the Lisa and Mac were more those of Jobs.

The Apple II was open architecture and meant for hobbyists, the Mac was not, really.

So it’s no wonder that hobbyists/tinkerers switched from Apple II not to Macintosh, but something else.

Such as IBM PC, which was derived from Apple II (same edge connector slot concept, open design, off-the-shelf parts).

It’s also a different type of memory, maybe.

For example, memory with parity was rather niche (servers etc).

So there might be a reason the RAM used in Macs was more expensive from the manufacturer side.

I mean, Macintosh HDDs used to be more expensive, too, because they were SCSI drives rather than cheap AT-Bus (IDE) drives.

The technology that Apple had chosen back then simply was less mainstream and more sophisticated, so it made sense.

Or let’s take SSDs (not Apple related here).

You can have hundreds of GBs of storage by choosing cheap MLC/TLC/QLC SSDs.

But once you want a real, proper SLC SSD of the same capacity, the price goes up tenfold.

I find it beyond irritating that I can’t run my 1TB TLC drive as a 300GB SLC drive if I want to. Maybe open hardware/firmware will be a thing someday. Even choosing the ratio of SLC to TLC would be nice.
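The 1TB-to-roughly-300GB figure comes from bits per cell: TLC stores three bits in each NAND cell and SLC stores one, so the same cells hold about a third as much data in SLC mode. A minimal Python sketch of that arithmetic, assuming capacity scales linearly with bits per cell and ignoring over-provisioning, spare blocks and firmware limits; `capacity_in_mode` and `BITS_PER_CELL` are hypothetical names for illustration only:

```python
# Bits stored per NAND flash cell in each common mode.
BITS_PER_CELL = {"SLC": 1, "MLC": 2, "TLC": 3, "QLC": 4}


def capacity_in_mode(raw_gb: float, native: str, target: str) -> float:
    """Idealized capacity of the same NAND cells when run in `target` mode.

    Assumption: capacity scales linearly with bits per cell. Over-provisioning,
    spare blocks, ECC overhead and whether the drive's firmware allows such a
    switch at all are deliberately ignored.
    """
    one_bit_gb = raw_gb / BITS_PER_CELL[native]  # what the cells would hold at 1 bit each
    return one_bit_gb * BITS_PER_CELL[target]


if __name__ == "__main__":
    for mode in ("TLC", "MLC", "SLC"):
        size = capacity_in_mode(1000, "TLC", mode)
        print(f"1TB TLC drive run as {mode}: ~{size:.0f} GB")
```

For a 1TB TLC drive this gives about 333GB in SLC mode, in the same ballpark as the 300GB mentioned in the comment; real drives reserve extra space, so the practical figure would be lower.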

The better consumer drives strike a good enough balance. It’s a shame Optane never took off, given its latency advantage. Only some laptops got the hybrid Optane/NVMe drives, and they needed x2/x2 bifurcation because they were technically two drives in one.

“Since 1985 Apple has always adopted proprietary technology and impeded modification.”

Compaq in the PC world wasn’t that much different, though.

It had quite a few proprietary things of its own (80s, early 90s).

Such as RAM, drive bays, BIOS Setup programs stored on hidden HDD partitions, proprietary “business audio” on-board sound.

But was that so bad? How can you innovate or stand out from the crowd if you’re never original? 🙂

“To open the case you needed a special screwdriver and the screws were hidden recessed at bottom of the handle. Never touched Apple since.”

I think that’s good, actually.

Better than the horrible thing that US Americans refer to as a “Phillips screwdriver” (that cross-headed screwdriver).

It’s one of the lowest-quality types, I think.

Also, Nintendo had used tri-wing screws for the NES/SNES and GB game packs (game cassettes).

Here, they’re acting as security screws, which I think is reasonable.

This practice seems annoying at first, until you have worked with better screw types.

The Torx type is much more reliable than the cross-headed type, it doesn’t wear out so easily! 🙂

Phillips head screws are specifically designed to “torque out” to prevent over-tightening. They aren’t low quality; people just don’t understand their purpose. The main problem is that they became almost ubiquitous, so accessibility ended up trumping appropriate use cases.

However, most computer screws are cross-head, not Phillips, and even if they were Phillips, they shouldn’t need tightening anywhere near the point of camming out.

Torx etc. may be more appropriate for computer hardware, but until fairly recently it was a standard that required buying a new driver, rather than using the one nearly every household already owned.

Torx and hex are common enough now to be a better option. And while tri-wing and other standards may have had manufacturing advantages, using an unusual standard is also a handy extra barrier against modification for companies that don’t want users tinkering with (or fixing) their products.

I’m impressed with your ability to post from 2007.

is macOS self-hosting?

AI Overview.

Yes, macOS can be used for self-hosting, but it’s not inherently a “self-hosting” operating system in the same way that some Linux distributions are. macOS has built-in features and tools that allow users to host various services, but it also requires additional setup and potentially third-party tools for more complex setups.

Here’s a breakdown:

In essence, macOS provides the foundation and tools for self-hosting, but users may need to leverage additional tools and configurations to achieve specific self-hosting goals.

does Rust generate itself from source?

AI Overview.

Yes, the Rust compiler (rustc) is written in Rust and is self-hosted. This means that the compiler is able to compile its own source code.

Here’s a breakdown of the process:

Self-hosting: Rust’s compiler was initially written in OCaml. It was then rewritten in Rust, a process called “self-hosting”.

Bootstrapping: The initial Rust compiler (written in OCaml) was used to compile the first version of the Rust compiler written in Rust. This process is known as bootstrapping.

Verification: To ensure correctness, the self-compiled Rust compiler is verified by comparing its output with the output of the previous compiler (the one written in OCaml).

Modern Usage: Today, the Rust compiler is written entirely in Rust and uses LLVM for code generation.

LLVM: While the front-end of the compiler is written in Rust, it relies on LLVM (Low Level Virtual Machine) for code generation and optimization.

Therefore, the Rust compiler can indeed be built from its own source code, making it a self-hosted language.

By the way, I read that macOS Tahoe drops support for FireWire (aka i.Link, IEEE 1394).

This is a serious problem for audio professionals who have FireWire-based audio equipment.

Via adapters (for Thunderbolt, etc.), FireWire had still been available on the Mac platform.

Historically, Macs supported both USB Audio and FireWire Audio but the latter had better specs (low latency, daisy chaining etc).

So, especially on Hackintoshes, FireWire Audio was the next best thing to on-board audio.

That’s because there are no PCI/PCIe sound cards with the usual Realtek ALCxxx chips, which are supported by macOS out of the box.

External FireWire sound cards such as the classic M-Audio FireWire 410 were more compatible with certain applications that expected on-board audio and had issues with generic USB Audio.

https://simple.wikipedia.org/wiki/IEEE_1394

https://9to5mac.com/2025/07/07/macos-tahoe-reports-of-firewires-death-are-not-greatly-exaggerated/

This, and licensing costs, are why a fair number of composers are still using whatever their copy of Sibelius/etc. runs on, in conjunction with their 20-30 year old hardware. Apple’s attempts to throw incredibly expensive workhorses at these people rarely get a bite unless they’ve made it big enough that someone else sets everything up for them. And the current generation has no reason to upgrade at all, likely ever.

Apple dropping x86 support doesn’t have to mean the end of Hackintosh. ARM-based computers are every day more common and maybe those can become the new Hackintosh.

Some basically can already; people are working on kernel patches to adapt to the M1’s quirks as we read this. Consider that Linux on M-series Macs is actually usable despite being unfinished.

Gaming is what makes the world go around. Any OS that doesn’t support Nvidia’s game-tailoring software will never become mainstream. Then again, Apple never was, nor will it ever be, more than a niche player.

I’ve been doing this the other way around – wait for Apple to end support for a machine, get given it for free by the person who now has to upgrade, then nuke it and install Linux Mint on it to enjoy many more years of use from pretty nice hardware.

The screens especially are fantastic quality, my partner is rocking a 27″ retina iMac that cost nothing and it’s very lovely to look at photos on that monitor.

Yeah, this can work. Sometimes you get lemon hardware though. The upside is many older ones can be overhauled and upgraded a fair bit.

I wouldn’t be surprised to see Hackintoshes running on Qualcomm-based laptops.