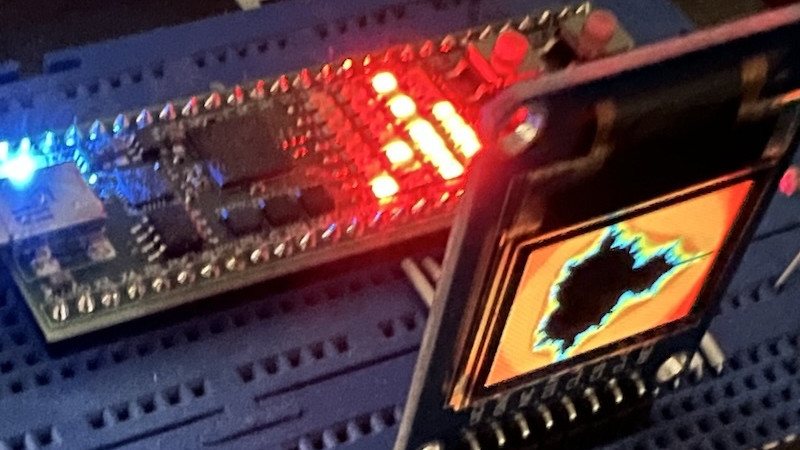

In the beginning was the MOS6502, an 8-bit microprocessor that found its way into many famous machines. Some of you will know that a CMOS 6502 was created by the Western Design Center, and in turn, WDC produced the 65C816, a 16-bit version that was used in the Apple IIgs as well as the Super Nintendo. It was news to us that they had a 32-bit version in their sights, but after producing a datasheet, they never brought it to market. Last October, [Mike Kohn] produced a Verilog version of this W65C832 processor, so it can be experienced via an FPGA.

The description dives into the differences between the 32, 16, and 8-bit variants of the 6502, and we can see some of the same hurdles that must have faced designers of other chips in that era as they moved their architectures with the times while maintaining backwards compatibility. From our (admittedly basic) understanding, it appears to retain that 6502 simplicity in a way that Intel architectures did not, so it’s tempting to imagine what might have happened had this chip made it to market. We’re guessing that you would still be reading this through an Intel or ARM, but perhaps we might have seen a different path taken by 1990s game consoles.

If you’d like to dive deeper into 6502 history, the chip recently turned 50.

Thanks [Liam Proven] for the tip.

That’s cool. Hopefully it finds its way into real hardware eventually (say, an ASIC).

It would be nice for making a more modern SuperCPU for the C64, for example.

https://en.wikipedia.org/wiki/SuperCPU

I am imagining what a C64 386 equivalent would look like.

With C64’s BASIC and GEOS adapted to run on this W65C832 as a start.

More likely we’d have a Commodore 256, similar to the 128 but with 256K RAM and a 16-bit CPU, and also backward compatible with existing 64 and 128 programs.

With an NEC V20 instead of a Z80, that would be cool.

Let’s imagine being able to access C64/C128 hardware from Minix or ELKS. :)

Or an 8 MHz NEC V30 – pin compatible with the 8086 but faster than an 8 MHz 80286 for some operations.

My history is getting a bit gray, but if I recall, NEC was successfully sued by Intel for the V20 infringing on the 8088, but the V30 contained more novel content. History seems to have largely forgotten the V30.

I had a Xerox 6064 which was the same as the AT&T PC6300, both of which were the Olivetti M24 rebranded. That was an 8 MHz 8086 based machine and for its time it was pretty impressive when a V30 was swapped in. The M24 had a 16 bit bus, but the byte order on the card slots was the opposite (50/50 chance) of the IBM PC/AT which came a few years later. I only found two EGA cards which were able to work. I believe it was an Everex and an Orchid.

Hi. I would go with C128 BASIC, it was much better in about every way.

Or at least C64 BASIC+SIMONS BASIC as standard.

GEOS would be really nice to have, too!

My parents had a C128 and, if I recall, had GEOS or something which looked like GEOS on it. I remember seeing it set up in their office. I believe they also found a port of Smalltalk for it. It is too bad that Commodore ran out of gas. They had a number of really cool variants of the C64, including a Kaypro-like lunchbox version. The , though they sold well, were not enough to save the bacon. I learned a lot writing for-fun projects in Metacomco C on the original Amiga A1000. One of the most laughable things was the PC Transformer, which was an 8088 emulator for the 68K CPU. It did boot MS-DOS from the 5.25 inch drive, but it was beyond painfully slow. If I recall, it took five minutes to load Lotus 1-2-3. It was kind of interesting because you got to see how everything was rendered in slow motion.

That should say above: Amigas, though they sold well,…

I don’t know why you couldn’t use it. The last version I used wrote to 3.5″ floppies and emulated a 4 MHz 8088 perfectly. I used it daily for my CS class, as I used an Amiga 500 and the professor preferred Intel machines. I ran DOS, the compiler and an editor. It was fine for what it was and did.

A computer without software has not earned its right to live. It’s a nice idea to have such a CPU. But why? There is no software at all, because the CPU never existed.

Of course you could see it as an accelerator for an old 6502 system… But come on, you don’t need a 32 bit CPU for that. All you need is a hugely fast 8-bit 6502. And they exist already.

Much easier, cheaper and more feasible would be a Raspberry PI with a 6502 emulator. Like a PiStorm is for 68000, surely someone could make a PiStorm for 6502, right?

TBH, I did a quick search, but there doesn’t seem to be a PiStorm for 6502.

The Apple IIGS could use it. An Atari 8-bit with a VBXE upgrade would benefit also.

I liked the Apple IIgs, though I never owned one. It had the Ensoniq synthesizer chip, so you could get pretty decent quality music. Macintoshes overshadowed the IIgs within the Apple line and the IIgs was too expensive to compete with Commodore, Atari and game consoles.

Hi, that’s an interesting statement. I wonder if it’s the same as with the DOS platform:

Would we have needed a 32-bit 386/486-based PC back in 1990 if we had instead had access to a Super Turbo XT that ran a (fictional) 8-bit 8088 at 100 MHz?

I’ll leave this question unanswered. I think it’s an interesting analogy, though.

The 6502 fans may feel similar about the situation, though. Not sure.

Yes. We would have needed a 32-bit 6502 as well if it didn’t die a sudden death in the 16-bit era.

Really bad idea: Hook it to an Atari TIA, run a game with 2600-esque graphics in 3D.

I could see it in a C65, or an Apple IIGS Plus. The issue is that every major user of the 6502 had a future plan away from it. Atari had the ST, Commodore the Amiga, and Apple the Mac. Apple could have offered an Apple IIGS Plus for schools and homes, with the Mac for universities and business.

Nintendo could have used it for a SuperNES 32. But they went with MIPS for the N64, for good reason. As to why now: why not? Really, if people want to make a super C64, Apple II, or Atari, why the heck not?

To be useful, you’d need a simple OS to load programs, and a compiler. The OS need not be more complex than CP/M: no big deal. Once you have a compiler for something like a C subset, you’re on the road to just about anywhere.

The two combined need not be more than a man-year of effort.

Hi, there’s a 6502 port (re-make) of CP/M.

It even can run CP/M-80 programs using an i8080 emulator.

Zork runs, for example.

https://hackaday.com/2023/04/04/cp-m-6502-style/

https://www.youtube.com/watch?v=oHrtUj0fEk0

I doubt an ASIC is in the works. The NRE is expensive. If it happens, it would definitely be a passion project with almost no financial payback.

NXP have some 16-bit variants of the 6502, in their automotive HCS12 series.

But even they resort to ARM Cortex cores, once you go 32-bit.

It’s an interesting concept. Kudos to anyone who gets an FPGA project to work. With open source tools, no less.

Actually, the HCS12 is an evolution of the 6800/6805 from Motorola, not the MOS 6502.

https://www.nxp.com/docs/en/supporting-information/WCSHCS12.pdf

There is a 6502 32-bit variant already, we just call it an ARM-Cortex. Please look at the history of ARM. The original ARM was a 16-bit machine designed, developed and sold by Acorn Risc Machines (ARM) of Cambridge in England, UK. This was on the back of their great success with the 2MHz 6502-powered BBC Microcomputer. ARMs are largely licensed to other vendors as part of SoC designs but the design work is still done in Cambridge.

IIRC ARM was formed in 1990 some time after Furber and Wilson visited Western Design and were very unimpressed by the 65816. ARM was formed by Acorn, Apple, and VLSI. I was not aware of any 16 bit architectures other than the WDC parts. I had heard the origin came from deciding they could do a better job than WDC on the flight back to England. The first ARM hardware (from VLSI before ARM Ltd) was in 1985. Apple had a project to make an Apple II using ARM. It worked too well and there was fear it would kill the Mac growth.

I’m sure that the speeds of devices at the time kept WDC from doing what is obvious today: making a “32 bit 6502” with more registers and without all those modes and contortions, and emulating the 6502/65816 in software. Today Apple’s M4 systems can emulate x86 and anything else rather briskly. I have a VLSI motherboard with their ARM7500 chip with all the features of a PC system of the time: 1994 and 40 MHz. I think emulating a 65C02 is close to a one-to-one instruction rate if 65C02 timing and cycle count are not needed. I put a very efficient FORTH on it back then.
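To make the "emulating the 6502 in software" idea concrete, here is a minimal sketch of a fetch-decode-execute loop in Python. It is not taken from any project mentioned here: the function name `run`, the load address `0x0200`, and the tiny five-opcode subset are all assumptions chosen for illustration. The opcode values themselves (`A9` LDA immediate, `AA` TAX, `E8` INX, `85` STA zero page, `00` BRK) are the standard 6502 encodings. Timing and cycle counts are ignored, as the comment suggests.

```python
def run(program, load_addr=0x0200, max_steps=1000):
    """Interpret a tiny subset of 6502 opcodes (illustrative only, no cycle timing)."""
    mem = bytearray(65536)                      # flat 64 KiB address space
    mem[load_addr:load_addr + len(program)] = program
    a = x = 0                                   # accumulator and X index register
    flags = {"N": False, "Z": False}            # only the negative and zero flags are modelled
    pc = load_addr

    def set_nz(value):
        # N mirrors bit 7 of the result, Z is set when the result is zero
        flags["N"] = bool(value & 0x80)
        flags["Z"] = value == 0

    for _ in range(max_steps):
        opcode = mem[pc]
        pc += 1
        if opcode == 0xA9:                      # LDA #imm
            a = mem[pc]
            pc += 1
            set_nz(a)
        elif opcode == 0xAA:                    # TAX
            x = a
            set_nz(x)
        elif opcode == 0xE8:                    # INX (wraps at 8 bits)
            x = (x + 1) & 0xFF
            set_nz(x)
        elif opcode == 0x85:                    # STA zero page (flags unaffected)
            mem[mem[pc]] = a
            pc += 1
        elif opcode == 0x00:                    # BRK: treated here as "stop"
            break
        else:
            raise ValueError(f"opcode {opcode:#04x} not handled in this sketch")

    return {"a": a, "x": x, "N": flags["N"], "Z": flags["Z"], "mem": mem}


# LDA #$FF ; TAX ; INX ; STA $10 ; BRK
state = run(bytes([0xA9, 0xFF, 0xAA, 0xE8, 0x85, 0x10, 0x00]))
print(state["a"], state["x"], state["Z"], state["mem"][0x10])
```

On a host with spare registers, each branch of that dispatch maps to only a handful of native instructions, which is roughly the intuition behind the near one-to-one claim; a real emulator would also need the remaining opcodes, addressing modes, and the other status flags.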

ARM was formed solely by Acorn (Acorn RISC Machines), and was never 16-bit.

The company you know as ARM Ltd was Apple, Acorn, and VLSI. Acorn did not have the resources to get serious, and VLSI in San Jose, a bicycle ride from Apple, was producing their first silicon before the ARM company was formed.

Not sure there is any link between the 6502 and the ARM processors – Acorn made the BBC Micro machines using an off-the-shelf 6502 CPU, and then created ARM RISC themselves, but one is not an evolution of the other….

There’s a very interesting old video about the Acorn Archimedes, the ARM chip and a comparison to the older computers.

https://www.youtube.com/watch?v=VK5AZrg3ZD8

Here’s another one that ties an Apple M4 in as being a direct family line, since Apple used the 6502 also.

Just because Acorn used the 6502 and later created ARM, there is nothing else to connect the two.

I mean, I can compare a Mach-E and a Model T and say “see, see, they’re based on each other!”

I don’t understand. Did I say anything wrong? 🤷♂️

ARM has the same condition codes as 6502 in the same bit positions. 6502 is a one-address arch – what you kids today who never studied the Old Masters call a “load-store” architecture. As if that describes it better? ARM is one-address. 6502 has a stack pointer register. ARM can have any register be a stack pointer and is especially efficient when the top of the stack is also in a register. The big advantage on ARM is you can have a bunch of fast stacks. Compilers love stacks for parsing and other things. I used a data stack (parameter stack), floating point stack, return stack, a rapid text search stack, and some others.

I suspect the influence is obvious to anyone who did much assembly coding of both systems.

(Old Masters = Hoare, Dijkstra, Knuth, Wirth. Read their books!)

Hi there. I’m the Liam Proven namechecked in the article — I shared this original post, which Jenny wrote up.

Your comment is very badly wrong.

No it wasn’t.

No it wasn’t.

That’s the only bit that’s right. :-)

ARM was originally 32-bit. There was never a 16-bit version.

ARM was not designed by Arm Ltd. ARM was designed at Acorn Computers, by Sophie Wilson and Steve Furber.

I have met and talked to both of them.

The design of the ARM was inspired by 6502 as others have commented here. Source: me, personally, directly from the mouths of Wilson and Furber.

No, the Cortex series isn’t related directly here.

The history goes:

ARM1 — the first standalone 32-bit RISC CPU. Sold as a co-processor for the BBC Micro, circa 1985.

https://acorn.huininga.nl/pub/software/BeebEm/BeebEm-4.14.68000-20160619/Help/armcopro.html

Launched 1985.

ARM2 — launched in the Acorn Archimedes range, 1987.

ARM3 — a faster 2nd-gen ARM CPU with 4kB cache, circa 1989.

Arm Ltd is the CPU part of Acorn, spun off when the company stopped making computers.

And just to extend on this, Acorn also used the 65C816, in the Acorn Communicator computer. It was very much Wilson’s and Furber’s desire to avoid the Nat Semi 32016 and the Intel 80286, both of which had been tried by Acorn, that led to the idea of a green field 32-bit processor using the small instruction set/fast execution model that the 6502 had used, while mixing in the RISC ideas they had seen.

If there had been a 65C832, and Acorn had used it, then perhaps the whole Arm processor history would never have happened.

The National Semi processors were interesting. IIRC they have a pointer to the registers which are in RAM, which means instant context switching or multi-tasking. My recollection is very fuzzy but I think National was making a line of DEC compatible cards for 11/70 and VAX 11/780, etc. and one of them was a CPU using the NS32032 and they ran into legal trouble with DEC and that took the momentum out of the design.

One day at that time I walked into the Fry’s component store in Sunnyvale and there was a big stack of NS32 dev kits that had the CPU, FPU, MMU, and ROMs and a load of data books for $100. I bought one and still have it. My intention was to wire-wrap it, and I will if I live another decade or two. The CPU is in a package like a big CPLD and I got a socket for it on a breakout board. The rest are all DIP. Wait… this page: top is the VLSI ARM7500 and bottom is the NS32000. http://regnirps.com/Apple6502stuff/arm.htm

Aha, yes. You mention the Apple ARM prototype.

It was called Möbius, and I summarised all the info about it I could find in this article here:

https://www.theregister.com/2024/09/04/where_computing_went_wrong_feature_part_3/

You may enjoy parts 1 and 2 as well.

Apple took early ARM chips and built a testbed that ran 6502 code under emulation. It was much much faster than a real 6502, or a 65c816. The problem is they also tried running 68000 (Lisa/Mac) code on it under emulation and it was faster than a real Motorola chip, too. In other words, there was a risk (risk/RISC, haha) that this successor Apple II could be faster than a Mac. That would be disastrous for the Mac line.

So, Apple killed it. But the chip ended up in the handheld Newton instead.

If Apple in 1985 had thought of building a RISC-based Mac that could run native apps as well as classic ones under emulation, it could have launched a RISC Mac nearly a decade earlier than the first Power Macintosh, which launched in 1994.

And since what Apple in fact did was:

68000 -> PowerPC

PowerPC -> Intel

Intel -> Arm

It could have bypassed two entire platform migrations and started the Arm migration 35 years earlier.

If Bil Herd decides to make a Commodore 256, I hope he remembers the bodge wire :-p

This is why I love HaD.

I’m confused. Why is a 32-bit 6502 implemented on an FPGA not a HW chip? An appropriate pinout and form factor would allow it to be anything. With a standard mezzanine, one could adapt it to any device design.

I’m a big 6502 fan. I like the architecture. FWIW I’ve got Patterson & Hennessy 1-4, though only read a portion of 4. Plus such horrors as the i860 manuals.

Hm? Because of my comment I wrote earlier?

I meant to say that I hope it will eventually be available as a dedicated, hardcoded chip. As an ASIC, which is sort of related to FPGA.

Or maybe some flavor of PLD (PAL, GAL), maybe as CPLD..

It would also make the chip more affordable, likely.

Technology using the equivalent to diode matrices or ROM would also be very close to hardware level (see CPLD).

Anything not FPGA/software-configurable would be.

Because most FPGAs contain a microcontroller and RAM, unlike older CPLDs and PAL/GAL chips.

The older technologies are thus more technologically pure. More down to earth.

FPGA is hardware level emulation, as some would put it.

Very nice for simulating mapper chips in game cartridges, for example.

It has its place, too. It just shouldn’t be seen as final answer to everything, I think.

Yup. Just like Ditto from Pokémon. It can take any shape, too. Even that of a Pikachu.

But it still remains a Ditto, not a Pikachu.

Just like an FPGA remains an FPGA and is not native hardware.

Then there are at least two kinds of FPGA cores, I think.

One that basically translates C++ to VHDL (relationship to software emulators)

and the other one that does recreate hw logic at the gate level (more accurate).

In simple words. I’m just a layman, after all.

i wonder if this might not actually have performance advantages if it saw the same kind of investment as ‘real’ chips do (ARM, x86, etc).

there have been some rather counter-intuitive results in the evolution of performance computing. i’m sure i won’t do a good job of summarizing them but for example, x86 keeps up even though its instruction set is awful, because the vast number of transistors necessary to decode its instructions and feed them into a superscalar execution engine doesn’t turn out to have much of a performance penalty. another, which Bjarne Stroustrup likes to bring up, is that for small n, O(n^3) is often better than O(n*lg(n)), because if the problem fits within L1 cache then execution might as well be instantaneous — and the n where this is true is “larger than you think”. conditional branches are more expensive than — sometimes — hundreds of straight line instructions. register-register moves are ‘free’ because they’re implemented by the superscalar scheduler without moving anything (“register renaming”). in fact, i think most chips these days have a zillion registers that the execution engine maps dynamically to the few ISA regs — effectively an L0 cache.

suffice it to say, a lot of the things that compiler and runtime coders have obsessed over for decades simply don’t matter the way they used to.

so i’m not convinced a modern chip couldn’t achieve efficient execution with “only” 3 registers. and i’m not sure it wouldn’t actually win compared to more complicated ISAs, because:

the biggest single lesson in modernity is that cache matters more than anything else. in the innermost levels of cache, computers are inconceivably fast. but each outer level is slower by 100x or 1000x or more. network is much slower than spinning rust is much slower than flash is much slower than RAM is much slower than L2.

so code density is worth something all on its own. instructions get pushed out of cache just like data does. intuitively, a lot of instruction sets are designed around having a lot of registers so that you can keep your working values in registers throughout the whole computation. but that often leads to bloated instruction encodings, where each instruction has to address 2, 3, or even 4 registers out of a bank of 16. we’re talking 16 bits per instruction just indicating which register to use.

i wonder if a radically simpler instruction set won’t have a marginal advantage in the future. of course in practice, minor simplifications like ARM Thumb probably already pushed this idea far enough
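The register-field arithmetic in the comment above (up to 4 register operands out of a bank of 16 costing 16 bits) can be checked with a few lines of Python. This is only a sketch of the counting argument: the helper name `register_field_bits` is made up for illustration, and it assumes each operand is a plain binary register index with no compression or implied operands.

```python
def register_field_bits(num_registers, operands):
    """Bits an instruction spends just naming its register operands.

    Assumes each operand is a plain binary index into a bank of
    `num_registers` registers (hypothetical encoding, for illustration).
    """
    if num_registers < 1 or operands < 0:
        raise ValueError("need at least one register and a non-negative operand count")
    # Smallest bit width that can index every register: 16 registers need 4 bits
    bits_per_operand = (num_registers - 1).bit_length()
    return bits_per_operand * operands


for operands in (2, 3, 4):
    print(operands, "operands of 16 registers:", register_field_bits(16, operands), "bits")
```

For a bank of 16 this gives 8, 12, and 16 bits for 2, 3, and 4 operands, matching the figure quoted. An accumulator-style encoding with implied registers spends close to nothing on this field, which is the code-density point being made.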

Instead of a 32bit, just go 64bit, so have a multicore 4-64 core 65C864 (64bit) in a new C64, heh.

I don’t know, but has FPGA stagnated? They are so costly and are limited compared to ASIC.

Thanx Jenny. After much thinking about what to put into a reply in the comments, I decided I would like to see a W65C864 to keep it modern.

32 bit 6502? It’s called an ARM chip.