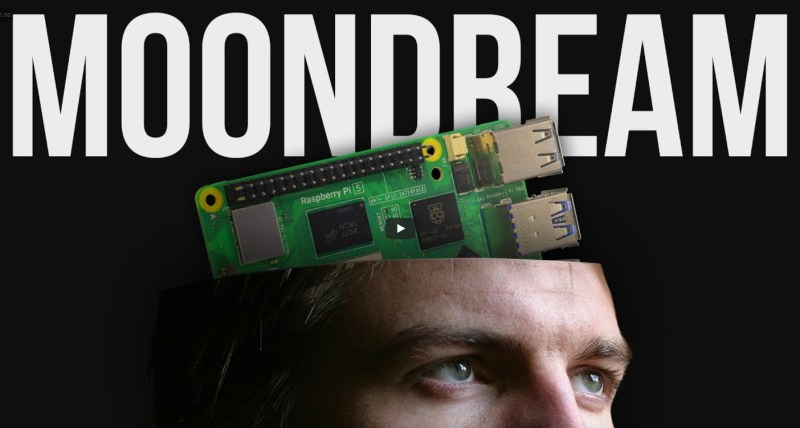

[Jaryd] from Core Electronics shows us human-like computer vision with Moondream on the Pi 5.

Using the Moondream visual language model, which runs directly on your Raspberry Pi, and not in the cloud, you can answer questions such as “are the clothes on the line?”, “is there a package on the porch?”, “did I leave the fridge open?”, or “is the dog on the bed?” [Jaryd] compares Moondream to an alternative visual AI system, You Only Look Once (YOLO).

Processing a question with Moondream on your Pi can take anywhere from just a few moments to 90 seconds, depending on the model used and the nature of the question. Moondream comes in two sizes: one with two billion parameters and one with five hundred million. The larger model is more capable and more accurate, but it takes longer to process, with the fastest possible response coming in at about 22 to 25 seconds. The smaller model is faster, at about 8 to 10 seconds, but as you might expect its results are not as good. Indeed, [Jaryd] says the answers can be infuriatingly bad.

In the write-up, [Jaryd] runs you through how to use Moondream on your Pi 5, and the video (embedded below) shows it in action. Fair warning though: Moondream is quite RAM intensive, so you will need at least 8 GB of memory in your Pi if you want to play along.

If you’re interested in machine vision you might also like to check out Machine Vision Automates Trainspotting With Unique Full-Length Portraits.

This doesn’t seem to be a particularly useful setup for anything needing real-time recognition. However, I could see this being part of a larger inventory system.

I wish there was a way to specify the output datatype. Prompting it to “answer yes or no” is not enough of a guarantee for me. Imagine that you could specify a list of parameters to be filled, enforced by the model programmatically rather than defined by natural language.

Something like prompting:

(bool_dog, int_count) = query(“Are there dogs on my bed? [BOOL] How many dogs are on my bed? [UINT8]”)

In the end, that doesn’t actually change anything or make it more reliable. It may ensure that the output is grammatically correct if you force it to generate text that is valid JSON, but the model is generating JSON in exactly the same probabilistic way as it generates normal text. To the model, such structured queries are just a funny sort of English grammar.
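What the caller can do is check the shape of the reply after the fact. The sketch below is a minimal illustration of that idea, assuming the model was asked to reply with a JSON object; the function name and the field names `dog_on_bed` and `dog_count` are hypothetical and not part of Moondream. It rejects anything that is not a boolean plus an integer in the 0 to 255 range.

```python
import json


def parse_dog_answer(raw: str) -> tuple[bool, int]:
    """Validate a model reply against the shape (bool, uint8).

    Hypothetical helper: the field names are made up for illustration.
    Raises ValueError if the reply is not JSON or has the wrong types.
    """
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        raise ValueError(f"reply is not valid JSON: {raw!r}") from exc
    if not isinstance(data, dict):
        raise ValueError("reply must be a JSON object")

    dog = data.get("dog_on_bed")
    count = data.get("dog_count")

    if not isinstance(dog, bool):
        raise ValueError("dog_on_bed must be true or false")
    # bool is a subclass of int in Python, so exclude it explicitly.
    if isinstance(count, bool) or not isinstance(count, int):
        raise ValueError("dog_count must be an integer")
    if not 0 <= count <= 255:
        raise ValueError("dog_count must fit in 0..255")
    return dog, count
```

A reply such as `{"dog_on_bed": false, "dog_count": 3}` passes this check even though it contradicts itself, which is the point made above: validation enforces the type, not the truth of the answer.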

It’s a good proof of concept that could be pretty responsive on a faster computer.

Looks like Apple Metal might be the most efficient way to speed this up. As far as I can see the Pi doesn’t offer any acceleration and can take up to a minute and a half to respond. Potentially 15-20 seconds on average for easier tasks.

Apple Silicon Macs are fairly power efficient and small, and you could possibly even get a laptop with a broken screen or something. The speed-up might be worth the extra money and power. It also comes in a chassis with a power supply and storage, so the price point seems reasonable at $200-300.

If you just want to play with it on the cheap a PC would be a good fit too.

I want to like the Pi. I pre-ordered it (from the Kickstarter?), and I’ve had the 2, 3, 4, Zero, Zero 2, and Compute Module 3(?), but the fact is that when you try to do big stuff like this it needs dedicated acceleration. Otherwise you can get used compute equipment that can run rings around it and is usually expandable or usable for other things.

Cool project, but telling people to run it on a Pi sounds like a school project, fine in and of itself. Yes I am spoiled with $50 server compute CUDA cards and used GTX1080. Also Apple Silicon seems fairly performant and available. Pi would be great running off solar?

Run on the camera itself.

They are called LLaVA (or Large Language and Vision Assistant) models. They have a translation layer which lets LLMs see images. I’m not very knowledgeable on their inner workings.

About a year ago I wrote a script which used a locally hosted LLaVA model on my desktop to go through my 20K family photos and generate descriptions and search keywords for them in an SQLite database, so I could search images just from their keywords.
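The commenter’s script is not shown, so the following is only a rough sketch of how such a keyword index could look, using Python’s standard `sqlite3` module. The table layout and the `describe` callable, which stands in for the call to the vision model, are assumptions made for illustration.

```python
import sqlite3
from typing import Callable, Iterable

# Hypothetical signature for the vision model call: it takes an image path
# and returns (description, keywords). Plug in a real model call here.
Describe = Callable[[str], tuple[str, list[str]]]


def build_index(conn: sqlite3.Connection, paths: Iterable[str], describe: Describe) -> None:
    """Store a description and lower-cased keywords for each image path."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS photos (path TEXT PRIMARY KEY, description TEXT)"
    )
    conn.execute("CREATE TABLE IF NOT EXISTS keywords (path TEXT, keyword TEXT)")
    for path in paths:
        description, keywords = describe(path)
        conn.execute(
            "INSERT OR REPLACE INTO photos VALUES (?, ?)", (path, description)
        )
        # Drop stale keywords so re-indexing a photo does not duplicate rows.
        conn.execute("DELETE FROM keywords WHERE path = ?", (path,))
        conn.executemany(
            "INSERT INTO keywords VALUES (?, ?)",
            [(path, kw.lower()) for kw in keywords],
        )
    conn.commit()


def search(conn: sqlite3.Connection, keyword: str) -> list[str]:
    """Return the paths of all photos tagged with the given keyword."""
    rows = conn.execute(
        "SELECT DISTINCT path FROM keywords WHERE keyword = ? ORDER BY path",
        (keyword.lower(),),
    )
    return [row[0] for row in rows]
```

Because the slow model call happens once per photo at indexing time, later searches are plain database lookups and do not touch the model at all.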

TBH, if I wanted to just recognize objects I’d skip the language part and use a simpler and faster image recognition model like You Only Look Once (YOLO), or something else trained on the COCO dataset. You’ll get much faster performance and a LOT fewer hallucinations. Images go in, probabilities for each of the recognized classes of object come out. Many of them can even highlight the areas in the image where each object is. At this point, these models are nearly a decade old.
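To make that concrete, here is a small sketch of how a question like “is the dog on the bed?” could be answered from detector output alone. It assumes the detector has already produced labelled boxes with confidences (`dog` and `bed` are both COCO classes); the `Detection` type, the thresholds, and the idea of treating 2D box overlap as “on” are all illustrative assumptions, not part of any particular YOLO library.

```python
from dataclasses import dataclass

Box = tuple[float, float, float, float]  # (x1, y1, x2, y2) in pixels


@dataclass(frozen=True)
class Detection:
    """One detector result; a hypothetical container for this sketch."""
    label: str
    confidence: float
    box: Box


def overlap_fraction(inner: Box, outer: Box) -> float:
    """Fraction of inner's area that lies inside outer."""
    x1, y1 = max(inner[0], outer[0]), max(inner[1], outer[1])
    x2, y2 = min(inner[2], outer[2]), min(inner[3], outer[3])
    intersection = max(0.0, x2 - x1) * max(0.0, y2 - y1)
    area = (inner[2] - inner[0]) * (inner[3] - inner[1])
    return intersection / area if area > 0 else 0.0


def is_on(detections: list[Detection], thing: str, surface: str,
          min_conf: float = 0.5, min_overlap: float = 0.6) -> bool:
    """True if any confident `thing` box mostly overlaps a `surface` box."""
    things = [d for d in detections if d.label == thing and d.confidence >= min_conf]
    surfaces = [d for d in detections if d.label == surface and d.confidence >= min_conf]
    return any(
        overlap_fraction(t.box, s.box) >= min_overlap
        for t in things
        for s in surfaces
    )
```

Box overlap is a crude proxy: a dog standing in front of the bed can overlap it in the image just as well as one lying on it. That kind of spatial judgment is where a visual language model may still earn its longer processing time.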

I’ve had good results with an ESP32 sending a picture to the ChatGPT API and having the prompt ask questions about the picture, although this is a good setup for running locally.