During the AI research boom of the 1970s, the LISP language (from LISt Processor) saw a major surge in use and development, spawning many dialects. One of these dialects was Scheme, developed by [Guy L. Steele] and [Gerald Jay Sussman], who wrote a number of articles that the Massachusetts Institute of Technology (MIT) AI Lab published in its AI Memo series. This subset, known as the Lambda Papers, covers both men’s ideas about lambda calculus and its application to LISP, and ultimately the 1980 paper on the design of a LISP-based microprocessor.

Scheme is notable here because it influenced the development of what would be standardized in 1994 as Common Lisp, which can be called ‘modern Lisp’. The idea of building dedicated LISP machines, driven by the processing requirements of AI systems, was not a new one. The mismatch between LISP’s S-expressions, which are nested lists built from pointer-linked cells, and the way assembly code typically drove the CPUs of the era led to the development of CPUs with dedicated hardware support for LISP.
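To make that mismatch a little more concrete, here is a minimal Python sketch of how the S-expression `(+ 1 (* 2 3))` becomes a chain of cons cells. The toy heap of parallel `CAR`/`CDR` arrays and the helpers `cons`, `from_python` and `to_python` are hypothetical and don't reflect how any particular LISP machine laid out memory. The point is that reading a list means chasing one pointer per element, exactly the kind of operation a LISP-oriented CPU tries to make cheap.

```python
from typing import Any, List


class Nil:
    """Marker for the empty list (the NIL that terminates every proper list)."""

    def __repr__(self) -> str:
        return "NIL"


NIL = Nil()

# Hypothetical toy heap: cons cell i lives at CAR[i] and CDR[i].
CAR: List[Any] = []
CDR: List[Any] = []


class Ptr:
    """A pointer to a cons cell in the toy heap."""

    def __init__(self, index: int) -> None:
        self.index = index


def cons(car: Any, cdr: Any) -> Ptr:
    """Allocate a new cell holding car and cdr, returning a pointer to it."""
    CAR.append(car)
    CDR.append(cdr)
    return Ptr(len(CAR) - 1)


def from_python(obj: Any) -> Any:
    """Build cons cells from nested Python lists; atoms are stored as-is."""
    if isinstance(obj, list):
        result: Any = NIL
        for item in reversed(obj):  # build the chain back to front
            result = cons(from_python(item), result)
        return result
    return obj


def to_python(value: Any) -> Any:
    """Follow CDR pointers one cell at a time to rebuild nested lists."""
    if value is NIL:
        return []
    if not isinstance(value, Ptr):
        return value  # an atom
    items = []
    while value is not NIL:  # one pointer dereference per list element
        items.append(to_python(CAR[value.index]))
        value = CDR[value.index]
    return items
```

Building `(+ 1 (* 2 3))` this way allocates six cells: three for the outer list and three for the nested one.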

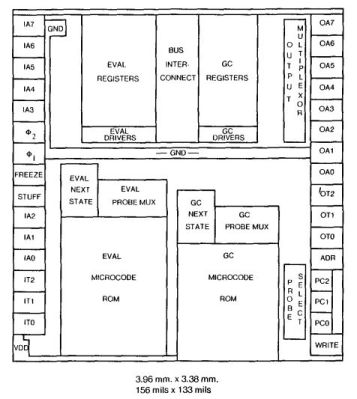

The design that [Steele] and [Sussman] described in their 1980 paper, published in the Communications of the ACM, has an instruction set architecture (ISA) that matches the LISP language more closely than a conventional ISA does. As described, it is effectively a hardware LISP interpreter implemented on a VLSI chip, called the SCHEME-78. Moving as much of the work as possible into hardware substantially improves performance. This is somewhat like how today’s AI boom relies on dedicated vector processors that excel at inference, rather than on generic CPUs.
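A hardware interpreter still has to perform the same evaluate-and-apply cycle that a software LISP interpreter does. The Python sketch below shows that cycle for a tiny, hypothetical subset (numbers, symbols, `quote`, `if`, `lambda` and procedure application), with S-expressions written as nested Python lists and symbols as strings. It is not the chip’s actual design; it only illustrates the kind of loop that such a chip moves from software into hardware.

```python
import operator
from typing import Any, Optional


class Env:
    """A variable scope that falls back to its enclosing scope."""

    def __init__(self, bindings: dict, parent: Optional["Env"] = None) -> None:
        self.bindings = bindings
        self.parent = parent

    def lookup(self, name: str) -> Any:
        env: Optional[Env] = self
        while env is not None:
            if name in env.bindings:
                return env.bindings[name]
            env = env.parent
        raise NameError(f"unbound variable: {name}")


class Closure:
    """A user-defined procedure: parameters, body and defining scope."""

    def __init__(self, params: list, body: Any, env: Env) -> None:
        self.params, self.body, self.env = params, body, env


def evaluate(expr: Any, env: Env) -> Any:
    if isinstance(expr, str):           # symbol: look up its value
        return env.lookup(expr)
    if not isinstance(expr, list):      # number: evaluates to itself
        return expr
    head = expr[0]
    if head == "quote":                 # (quote x) returns x unevaluated
        return expr[1]
    if head == "if":                    # (if test then else)
        _, test, then, alt = expr
        return evaluate(then if evaluate(test, env) is not False else alt, env)
    if head == "lambda":                # (lambda (params...) body)
        return Closure(expr[1], expr[2], env)
    proc = evaluate(head, env)          # application: evaluate the operator...
    args = [evaluate(arg, env) for arg in expr[1:]]  # ...and the operands
    return apply_proc(proc, args)


def apply_proc(proc: Any, args: list) -> Any:
    if isinstance(proc, Closure):       # bind parameters in a fresh scope
        return evaluate(proc.body, Env(dict(zip(proc.params, args)), proc.env))
    return proc(*args)                  # primitive supplied by the host


GLOBAL = Env({"+": operator.add, "-": operator.sub,
              "*": operator.mul, "<": operator.lt})
```

Evaluating `((lambda (x) (* x x)) 5)` this way yields 25: the operator expression is itself evaluated into a closure, which is then applied to the evaluated argument in a fresh scope.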

During the 1980s LISP machines began to integrate more and more hardware features, with the Symbolics and LMI systems featuring heavily. Later these systems also began to be marketed towards non-AI uses like 3D modelling and computer graphics. As however funding for AI research dried up and commodity hardware began to outpace specialized processors, so too did these systems vanish.

Top image: Symbolics 3620 and LMI Lambda Lisp machines (Credit: Jason Riedy)

Miss my Symbolics 3645. Too bad I couldn’t hold on to it.

Mine is still in the cabinet in the garage! Saw it this weekend. Haven’t turned it on in years. I have a Genera VM that I can play with.

Interesting OS. There’s an OpenGenera image floating out there somewhere.

Obligatory: Knights of the Lambda Calculus

Obligatory xkcd: Lisp and Lisp Cycles

Reminds me of:

https://en.wikipedia.org/wiki/Jazelle

Which apparently evolved into ThumbEE, and then devolved into the history books too?

TI built their own Lisp processor out of a sea of GALs to make the Explorer workstation. It was a truly weird piece of hardware, from the backplane that looks like VME but is actually NuBus, to the fiber-optic-connected, gas-cylinder-supported, music-playing monitor.

They followed it up with the Explorer II which condensed most of the CPU down to a single VLSI chip called the Mega. The last Explorer was a Mac Lisp accelerator that put most of an Explorer II on a single NuBus card.

TI was kind enough to document nearly everything about their hardware, and those docs survive thanks to Bitsavers: https://bitsavers.org/pdf/ti/explorer/

The first time I saw LISP was on an Apple ][+ with UCSD. It impressed me so much that I decided never to look at this language again. :)

Hmm, same effect at CSUDH, but we had a LISP-oriented department dean. Failed that class. Oh well.

Microsoft: The future of work is here, thanks to Windows 11 and AI.

These specialized processors can achieve amazing things in terms of reducing complexity. For example, the P21/F21 Forth microprocessors offered an impressive amount of capability while drawing almost no current. But in terms of improving performance, it’s really hard to get too excited about them. Regular, existing processors are very fast at running Scheme code, and it’s easy to write a code generator targeting any of the modern architectures.
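As a rough illustration of how little machinery a basic code generator needs, here is a Python sketch that compiles arithmetic S-expressions (nested lists of `+`, `-`, `*` and small integers) into x86-64 assembly in GNU assembler (AT&T) syntax. The function names are hypothetical, and the output assumes an x86-64 target where integer results are returned in `%rax` and symbol names need no leading underscore (as on Linux). A real Scheme compiler would also have to handle closures, tail calls and garbage collection, none of which is attempted here.

```python
from typing import Any, List

# Map each supported operator to the x86-64 instruction that applies it.
OPS = {"+": "addq", "-": "subq", "*": "imulq"}


def compile_expr(expr: Any, out: List[str]) -> List[str]:
    """Append instructions that leave the value of expr in %rax."""
    if isinstance(expr, int):
        # Small integers only: movq takes a sign-extended 32-bit immediate.
        out.append(f"movq ${expr}, %rax")
        return out
    op, left, right = expr
    compile_expr(right, out)              # right operand -> %rax
    out.append("pushq %rax")              # park it on the machine stack
    compile_expr(left, out)               # left operand -> %rax
    out.append("popq %rcx")               # right operand -> %rcx
    out.append(f"{OPS[op]} %rcx, %rax")   # %rax = %rax <op> %rcx
    return out


def compile_function(name: str, expr: Any) -> str:
    """Wrap the compiled expression as a function that returns %rax."""
    body = [f"    {line}" for line in compile_expr(expr, [])]
    return "\n".join([f"    .globl {name}", f"{name}:", *body, "    ret"]) + "\n"


print(compile_function("answer", ["+", 1, ["*", 2, 3]]))
```

Compiling the right operand first and the left one second leaves the left value in `%rax`, so non-commutative operators like `-` come out in the correct order.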

I feel like I’m basically riffing on Guy L. Steele’s paper “Debunking the ‘Expensive Procedure Call’ Myth.” Procedure calls, the fundamental operation in LISP, Scheme and Forth, simply aren’t that expensive on any platform. When Steele wrote that, people meditated on that overhead to such an extent that it made them incapable of writing good software. A Scheme microprocessor represents meditating on that overhead to such an extent that you give up the advantages of mass-market hardware designs. I don’t think either is worth it. The overhead just isn’t significant: modern CPUs are plenty good at Scheme.
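Steele’s argument rests on the observation that a procedure call in tail position can be compiled as a jump that passes arguments, so it need not grow the stack. Python does not perform that optimization, so the sketch below emulates it with a trampoline: each tail call is returned as a hypothetical `TailCall` object, and a driver loop performs the “jump”. A Scheme compiler targeting a conventional CPU would simply emit a jump instruction instead.

```python
from typing import Any, Callable


class TailCall:
    """A pending call: 'jump to fn with these args' instead of pushing a frame."""

    def __init__(self, fn: Callable[..., Any], *args: Any) -> None:
        self.fn, self.args = fn, args


def trampoline(result: Any) -> Any:
    """Driver loop: keep 'jumping' until a real value comes back."""
    while isinstance(result, TailCall):
        result = result.fn(*result.args)
    return result


def is_even(n: int) -> Any:
    # In Scheme, calling is_odd here would be a tail call; we hand it back instead.
    return True if n == 0 else TailCall(is_odd, n - 1)


def is_odd(n: int) -> Any:
    return False if n == 0 else TailCall(is_even, n - 1)


print(trampoline(is_even(100000)))
```

A version of `is_even`/`is_odd` that called each other directly would exceed CPython’s default recursion limit of 1000 frames at `n = 100000`; through the trampoline, the stack depth stays constant.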

There is one stubborn bit of overhead I’m curious about overcoming: memory management. From the programmer’s perspective, I really like implicit memory management, but it tends to carry performance penalties that can be hard to accept. In that vein, here’s an article on hardware reference counting:

https://help.luddy.indiana.edu/techreports/TRNNN.cgi?trnum=TR163
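For readers who haven’t looked at reference counting closely, here is a plain software sketch in Python of the bookkeeping involved. It is not the design from the linked report, which concerns doing this work in hardware, and the `Cell`, `incref`, `decref` and `cons` names are hypothetical. Every stored pointer costs an increment, every dropped pointer a decrement, and reaching zero triggers a cascade of frees; that per-pointer overhead is what hardware support would aim to absorb.

```python
from typing import Any, List


class Cell:
    """A cons cell carrying its own reference count."""

    def __init__(self, car: Any, cdr: Any) -> None:
        self.car, self.cdr = car, cdr
        self.refcount = 0


freed: List[Cell] = []  # record of reclaimed cells, for demonstration only


def incref(obj: Any) -> None:
    """Record one more reference to obj (atoms are not counted)."""
    if isinstance(obj, Cell):
        obj.refcount += 1


def decref(obj: Any) -> None:
    """Drop one reference; reclaim cells whose count reaches zero."""
    pending = [obj]  # explicit worklist, so long lists don't recurse deeply
    while pending:
        cell = pending.pop()
        if not isinstance(cell, Cell):
            continue
        cell.refcount -= 1
        if cell.refcount == 0:
            freed.append(cell)
            pending.extend([cell.car, cell.cdr])  # children lose a reference


def cons(car: Any, cdr: Any) -> Cell:
    """Allocate a cell; it now holds one reference each to car and cdr."""
    cell = Cell(car, cdr)
    incref(car)
    incref(cdr)
    return cell


lst = cons(1, cons(2, cons(3, None)))
incref(lst)   # a variable now holds the list
decref(lst)   # the variable goes away, so the whole chain is reclaimed
print(len(freed))
```

Shared tails are only reclaimed when their last referrer goes away. A well-known weakness of pure reference counting, which this sketch shares, is that cyclic structures never reach a count of zero, so they are never freed without some additional mechanism.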

Using a Lisp Machine has truly made a difference in my life regarding my faith in Jesus Christ. It allows me to dive deep into biblical texts, helping me explore themes related to Jesus, almost like having a personal biblical study assistant. I’ve also developed practical tools like a virtual prayer journal and an app for memorizing scripture. These keep me connected to my faith every day.

Would you be willing to share any of the tools you’ve developed? My user name is a valid Gmail address, if you’d like to get in touch.

This comment captivated my attention so much that I lost awareness of my index finger hovering over the phone screen; I’ve reported it by mistake. I’m so sorry! It would be good to include an “undo” option for such moments.

“…As however funding for AI research dried up and commodity hardware began to outpace specialized processors, so too did these systems vanish.”

Not soon enough.

Asianometry did a nice video on Lisp machines: https://www.youtube.com/watch?v=sV7C6Ezl35A

Given the mention of AI it would be interesting to read some of the AI hype from back then and see how much of today’s AI hype is an echo of the ancients.

I’m too young for that era but I do remember the “fuzzy logic will save us all” bandwagon briefly rolling through town.

Haha, that picture is great; I was just standing in front of that machine about two weeks ago. I wish the CHM had more on Lisp and the AI winter, but at least they had something.