The reason why large language models are called ‘large’ is not because of how smart they are, but as a factor of their sheer size in bytes. With billions of parameters at four bytes each, they pose a serious challenge not just in their size on disk, but also in RAM, specifically the RAM of your video card (VRAM). Reducing this immense size, as is done routinely for the smaller pretrained models which one can download for local use, involves quantization. This process is explained and demonstrated by [Codeically], who takes it to its logical extreme: reducing what could be a GB-sized model down to a mere 63 MB by reducing the bits per parameter.
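To put numbers on that size problem, here is a minimal sketch of the arithmetic. The 7-billion-parameter count is a hypothetical example and not a figure from the video; the function name `model_size_bytes` is likewise made up for illustration.

```python
def model_size_bytes(n_params: int, bits_per_param: int) -> int:
    """Raw weight storage in bytes, ignoring metadata and per-block scale factors."""
    return n_params * bits_per_param // 8

# Hypothetical 7-billion-parameter model at several precisions.
N_PARAMS = 7_000_000_000
for bits in (32, 16, 8, 4):
    size_gb = model_size_bytes(N_PARAMS, bits) / 1e9
    print(f"{bits:>2} bits per parameter: {size_gb:.1f} GB")
```

Real model files come out slightly larger than this, since quantized formats also store scale factors and other bookkeeping.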

While you can offload a model, i.e. keep only part of it in VRAM and the rest in system RAM, this massively impacts performance. An alternative is to use fewer bits per weight in the model, a form of compression called quantization, which typically involves reducing 16-bit floating point to 8-bit, halving memory usage (a reduction of about 75% when starting from 32-bit weights). Going lower than this is generally deemed inadvisable.
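As a rough illustration of what dropping to 8 bits per weight means, the sketch below applies symmetric per-tensor quantization to a handful of made-up weights. This is one common scheme, assumed here for illustration; it is not necessarily the method used in the video, and the names `quantize_int8` and `dequantize` are hypothetical.

```python
def quantize_int8(weights):
    """Map floats to signed 8-bit integers using a single shared scale factor."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Round to the nearest integer step and clamp to the int8 range used here.
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale

def dequantize(q, scale):
    """Recover approximate float weights from the integers and the scale."""
    return [v * scale for v in q]

weights = [0.12, -0.5, 0.33, 0.0, 0.49]  # made-up example values
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(q, scale, max_error)
```

Each weight lands within half a quantization step of its original value, which is why fewer bits means coarser steps and a noisier model.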

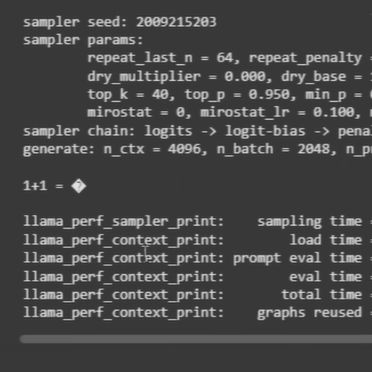

Using GPT-2 as the base, [Codeically] trained the model on a pile of internet quotes, producing parameters with a very anemic 4-bit integer size. A first attempt, in which the weights were manually zeroed, left the output too garbled; the second attempt, without the zeroing, did produce somewhat usable output before flying off the rails. Yet it did this with a 63 MB model at 78 tokens a second on just the CPU, demonstrating that you can create a pocket-sized chatbot to spout nonsense even without splurging on expensive hardware.
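Storing 4-bit parameters usually means packing two of them into each byte. The sketch below shows one way to do that for signed values in the range -8 to 7; the nibble order and the helper names `pack_int4` and `unpack_int4` are assumptions for illustration, not details taken from the video.

```python
def pack_int4(values):
    """Pack signed 4-bit integers (-8..7) two per byte, low nibble first."""
    if len(values) % 2:
        values = values + [0]  # pad to an even count without mutating the input
    out = bytearray()
    for lo, hi in zip(values[0::2], values[1::2]):
        out.append((lo & 0xF) | ((hi & 0xF) << 4))
    return bytes(out)

def unpack_int4(data, count):
    """Reverse pack_int4, restoring the sign of each nibble."""
    values = []
    for byte in data:
        for nibble in (byte & 0xF, byte >> 4):
            values.append(nibble - 16 if nibble >= 8 else nibble)
    return values[:count]

quantized = [-8, 7, 0, -1, 3]  # made-up 4-bit values
packed = pack_int4(quantized)
print(len(packed), unpack_int4(packed, len(quantized)))
```

At half a byte per parameter, a model with roughly 124 million parameters (the size of the smallest GPT-2 variant, assumed here since the write-up does not say which variant was used) needs about 62 MB for its weights, which lines up with the 63 MB figure.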

Espressif has really knocked this out of the park for microcontrollers. You do have to reduce the instruction set and limit it, but I have an LLM capable of voice-only interaction that can answer local questions and uses a websocket to talk to a bigger LLM when necessary, running on an ESP32S3. Check out the XiaoZhi project on GitHub.

That sounds really cool! I assume this is the GitHub repo:

https://github.com/Mo7d748/xiaozhi-esp32

I really hope you post your project somewhere!

Now we know what corporate will use to replace human workers – it pretty much matches some of the customer service contacts I’ve had over the years.

Humans replaced by…math. Oh the ingloriousness of it all. Now aren’t you all glad you studied in school?

That header picture is so eerie/disturbing and yet fascinating, something about mixing flesh and metal… meh.

It’s from The Terminator.

I suppose, perhaps a bit closer to a Cyberpunk 2077 character who went too far with modifications.

I like your website a lot :). Perhaps consider adding https so it would not throw insecure access warnings in modern browsers, it has interesting projects.

After Solenoid’s glowing review, I had to go to your page. Is the microwave PC still around? Did you ever make use of the VFD? This recent post should help: https://hackaday.com/2025/10/22/esp32-invades-old-tv-box-forecast-more-than-just-channels/

I have an old microwave chassis, too. I think I’ll try something similar. I wonder if I could mount a small LCD panel in the door…

What a useless video. Didn’t show anything at all of what he’s speaking about. Spent maybe a couple of weeks working on that, another one making the video, and I lost 5 minutes of my time to watch something that could’ve been read in 20 seconds, or a whole week reading the details that are nowhere to be found.

Interesting!

The parallels between how this was trained and how m4g4 are “educated” are striking!

Maybe combine with dictionary compression after quantization?

Isn’t that what tokenisation is? I’ve probably misunderstood.

“The reason why large language models are called ‘large’ is not because of how smart they are, but as a factor of their sheer size in bytes.”

… Has anyone ever confused the concept of size with intelligence? Stating the obvious is quite a peculiar way to start the article.

I used a script from Predator for one of my first self-built chatbots for its learn model well over 20 years ago lol. It was a foul-mouthed beast but had partial awareness by the end of our journey.

Not quite close to the smallest, go check out the llama4micro repo. It literally runs a Llama 2 on an MCU.