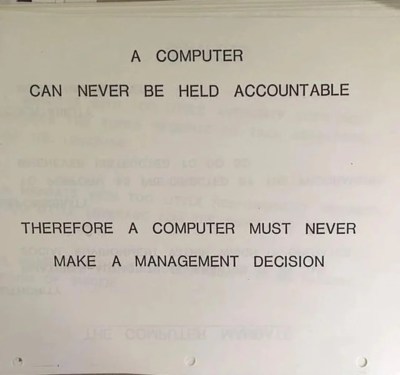

There’s been a lot of virtual ink spilled about LLMs and their coding ability. Some people swear by the vibes, while others, like the FreeBSD devs, have sworn them off completely. What we don’t often think about is the bigger picture: What does AI do to our civilization? That’s the thrust of a recent paper from the Boston University School of Law, “How AI Destroys Institutions”. Yes, Betteridge strikes again.

We’ve talked before about LLMs and coding productivity, but [Hartzog] and [Silbey] from the school of law take a different approach. They don’t care how well Claude or Gemini can code; they care what having them around is doing to the sinews of civilization. As you can guess from the title, it’s nothing good.

The paper is a bit of a slog, but worth reading in full, even if the language is slightly lawyer-y. To summarize in brief, the authors try to identify the key things that make our institutions work, and then show one by one how each of these pillars is subtly corroded by use of LLMs. The argument isn’t that your local government clerk using ChatGPT is going to immediately result in anarchy; rather, it will facilitate a slow transformation of the democratic structures we in the West take for granted. There’s also a jeremiad about LLMs ruining higher education buried in there, a problem we’ve talked about before.

If you agree with the paper, you may find yourself wishing we could launch the clankers into orbit… and turn off the downlink. If not, you’ll probably let us know in the comments. Please keep the flaming limited to below gas mark 2.

Not a hack…

And similarly a politician can never be held accountable…yet he makes management decisions.

If an entity consistently makes better decisions than, for instance, the leader of a very large country, I know which one I would prefer. There are a lot of advantages when choices are made without selfish human desires. Power corrupts, and accountability doesn’t reverse the mishaps that have already been done.

Yet can just replace the current deep state with AI. What can go wrong there?

Going to have to read this paper more fully, but it certainly seems to start strong, making some decent points in the first few pages. However, I really can’t imagine a world in which the LLM is worse than some of the current ‘human statesmen’ and their equally ‘human’ enablers…

But I also can’t imagine they will actually be remotely useful for a long, long time (if ever) in that sort of role – can’t say I think much of vibe coding, but at least that produces something that either works or it doesn’t and will turn into a game of debug for the person trying to get the result they want – so hopefully they learn something about real coding in that language in the process. With a definitive and limited goal in the user’s mind you could argue that vibe coding is more like training wheels on a bicycle: at some point they will have learned enough that they don’t actually need it. But governing involves a more nuanced and complex web of interactions that needs some real consideration so you don’t make things worse, especially the slow build-up of a really devastating problem that will be much harder to fix by then. About the only way an LLM might be useful there is allowing for a better ‘vibe check’ from the users/voters who have ‘written to their statesman’ etc, allowing the actually rational minds to find patterns in the reports.

The LLM has already in many ways ruined the internet at large to a much larger extent than I’d realised till very recently – even dry, rather niche academic web searches, when you don’t have your trusted repository of knowledge on the topic, now seem rather likely to be poisoned, but in ways that are hard to detect immediately. I’ve actually come to the conclusion it’s time to get a new university ID and master the art of searching for and taking notes from books.

For instance, for a reason I can’t remember, I was trying to look up medieval shoe construction (think it was something about a particular style that came up as a side curiosity). Other than 1 or 2 companies that sell custom/cosplay shoes, everything on the first 3 pages of that websearch proved, as you read into it, to be AI slop, with almost all of them eventually making the same obvious mistake and claiming these shoes from a few hundred years before faux leathers existed were created out of some variety of fake leather/plastic! There were other obvious enough tells once you actually read the article knowing anything at all, making the whole darn thing suspect.

I’m sure if that question had been important enough I’d have been able to find the right cluster of serious history students or cobblers and their forum etc eventually, and add them to my growing list of quality resources on various topics. But this is the first time I’d encountered no genuinely correct answers at all from a well enough constructed general websearch. The search worked perfectly, turning up articles that by their wording should be exactly what I wanted, or at least that generic overview and closely related content. It turns out all the pages found are just good-enough-looking junk, and I really don’t know how you could structure a websearch to exclude them, other than only searching for pages old enough that the LLM couldn’t have generated them!

Oh waiting for the bubble to pop!