There’s been a lot of virtual ink spilled about LLMs and their coding ability. Some people swear by the vibes, while others, like the FreeBSD devs, have sworn them off completely. What we don’t often think about is the bigger picture: What does AI do to our civilization? That’s the thrust of a recent paper from the Boston University School of Law, “How AI Destroys Institutions”. Yes, Betteridge strikes again.

We’ve talked before about LLMs and coding productivity, but [Hartzog] and [Silbey] from the school of law take a different approach. They don’t care how well Claude or Gemini can code; they care what having them around is doing to the sinews of civilization. As you can guess from the title, it’s nothing good.

The paper is a bit of a slog, but worth reading in full, even if the language is slightly lawyer-y. To summarize in brief, the authors try to identify the key things that make our institutions work, and then show one by one how each of these pillars is subtly corroded by the use of LLMs. The argument isn’t that your local government clerk using ChatGPT is going to immediately result in anarchy; rather, it will facilitate a slow transformation of the democratic structures we in the West take for granted. There’s also a jeremiad about LLMs ruining higher education buried in there, a problem we’ve talked about before.

If you agree with the paper, you may find yourself wishing we could launch the clankers into orbit… and turn off the downlink. If not, you’ll probably let us know in the comments. Please keep the flaming limited to below gas mark 2.

Not a hack…

This comment on every post that you don’t deem worthy of being a hack is becoming a bit boring and monotonous…

It is very interesting to most of the hacker-minded people in general.

Wordcel lawyer types are paranoid about AI replacing them, I hope it does, but the rest of us shape rotators are safe for now.

And similarly a politician can never be held accountable…yet he makes management decisions.

That is not entirely true, given there are numerous examples of politicians being held accountable.

One might instead wonder why so few seem to be held accountable for their actions today.

They are held accountable at the next election. The problem is that malfeasance is forgiven by the people who regard them as on their ‘side’.

To be fair a lot of people in even lower management positions aren’t ever held accountable. That said, this is also a large component as to why a lot of companies crumble and produce less and less actual value to their customers over time.

The tldr makes a lot of sense to me. Maybe I’ll read the paper sometime.

If an entity consistently makes better decisions than, for instance, the leader of a very large country, I know which one I would prefer. There are a lot of advantages when choices are made without selfish human desires: power corrupts, and accountability doesn’t reverse the mishaps that have already been done.

Yeah, just replace the current deep state with AI. What could go wrong there?

An AI has no desires or emotion. The collected works of Isaac Asimov explore this. Start with “Franchise” and The Complete Robot. Maybe try the Foundation series.

AI by definition will create anarchy, not to be preferred.

Note I am not by any means supporting politics, although I remain a law abiding citizen in line with the principle in Mark 12:17.

Going to have to read this paper more fully, but it certainly seems to start strong, making some decent points in the first few pages. However, I really can’t imagine a world in which the LLM is worse than some of the current ‘human statesmen’ and their equally ‘human’ enablers…

But I also can’t imagine they will actually be remotely useful for a long, long time (if ever) in that sort of role. I can’t say I think much of vibe coding, but at least that produces something that either works or doesn’t, and turns into a game of debug for the person trying to get the result they want, so hopefully they learn something about real coding in that language in the process. With a definite and limited goal in the user’s mind, you could argue vibe coding is more like training wheels on a bicycle: at some point they will have learned enough that they don’t actually need it. Governing, though, involves a more nuanced and complex web of interactions that needs real consideration so you don’t make things worse, especially through the slow build-up of a really devastating problem that will be much harder to fix by then. About the only way an LLM might be useful there is in allowing for a better ‘vibe check’ of the users/voters who have ‘written to their statesman’ etc., letting the actually rational minds find patterns in the reports.

The LLM has already in many ways ruined the internet at large, to a much larger extent than I’d realised till very recently. Even dry, rather niche academic web searches, when you don’t have your trusted repository of knowledge on the topic, now seem rather likely to be poisoned, but in ways that are hard to detect immediately. I’ve actually come to the conclusion it’s time to get a new university ID and master the art of searching for and taking notes from books.

For instance, for a reason I can’t remember, I was trying to look up medieval shoe construction (I think it was something about a particular style that came up as a side curiosity). Other than 1 or 2 companies that sell custom/cosplay shoes, everything on the first 3 pages of that websearch proved, as you read into it, to be AI slop, with almost all of them eventually making the same obvious mistake: claiming these shoes, from a few hundred years before faux leathers existed, were made out of some variety of fake leather/plastic! There were other obvious enough tells once you actually read the article knowing anything at all, making the whole darn thing suspect.

I’m sure if that question had been important enough I’d have been able to find the right cluster of serious history students or cobblers and their forum etc. eventually, and add them to my growing list of quality resources on various topics. But this is the first time I’d encountered no genuinely correct answers at all from a well enough constructed general websearch. The search worked perfectly, turning up articles that by their wording should be exactly what I wanted, or at least a generic overview and closely related content. It turns out all the pages found are just good-enough-looking junk, and I really don’t know how you could structure a websearch to exclude them, other than only searching for pages old enough that the LLM couldn’t have generated them!

Oh waiting for the bubble to pop!

“Oh waiting for the bubble to pop!”

I agree, but mostly because I want the current price of DDR5 to return to normal. Regarding AI itself, the cat is out of the box…

The search engine war was lost almost 20 years ago when the advertisers targeted the search algorithm to feed us ads. Many sites I used to enjoy are lost to history, living only in my memory.

The difference AI brings is to make the job of those stealing the search results easier. All they need to do is get you to click a crap result and serve ads on the page. They get paid.

I never understood the point of ads anyway. I personally do want to purchase products, not many that I see advertised but that’s a different story, however ads have gotten so inaccurate and dumb that I feel stupider for having seen them.

Nissan car ads are some of the dumbest, near as I can tell all they convey is “car; has wheels, vroom” with suitable shots of a city runabout doing u-turns on a dirt road.

Microsoft’s AI ads I can’t begin to understand. They have one where AI tells a person they need an e-bike. Why he needed AI to tell him that is beyond me. Presumably AI will also tell him where to get a usurious loan or how to commit petty larceny to pay for it?

Not really – the advert and sponsored links stuff was a mild annoyance decades ago, and advertisers generally would pay to put their adverts on relevant quality content, or put titties in adverts everywhere… Not ideal for making the web sane enough to allow your children on, but the content was decent stuff and porn (whatever your opinion on that)… The sponsored links and shopping links straight from the search engine, and Google dropping the ‘don’t be evil’ pretence, haven’t been good, but they weren’t making it impossible to find those folks really dedicated to their craft…

A politician thinks about the consequences for the next election; a statesman thinks about the consequences for the next generation.

“Authoritarian leaders and technology oligarchs are deploing AI systems to hollow out public institutions with an astonishing alacrity.”

If only there could have been some system which would have prevented the paper’s authors from making such a glaring typographical error as writing “deploing” instead of “deploying” within the first proper paragraph of their entire paper.

An AI hallucination can sometimes produce spelling mistakes. Just shows they used an LLM to make the paper sound more lawyerly. “Gemini ….. re-format the paper uploaded to make it understandable to the layman (again)”

Or they are dyslexic and/or not good at proofreading. Some folks find it practically impossible to spot missing or reversed letters, punctuation, etc., especially if you wrote it and so already know what it is meant to say. But I tend to skate right by those errors without noticing even when I don’t already know: the meaning was so clear that the missing letter just didn’t register at all.

AI has two aspects which are superficially separate but deeply entwined in today’s reality. The first is the Kurzweil-esque singularity…technology is changing at an ever-faster pace and how will we build a society around technology that changes faster than society does? The other is the financial aspect. For at least a couple of decades it has been obvious to me that there are financiers who are able to make wagers with a sum of money that is much larger than physical capital or anticipated production. It’s a reaction to the tendency of the rate of profit to fall as commodity production matures. The financier demands an ever-increasing profit, but mundane reality has few options for them. So physically intangible things without an intrinsic limit on their profitability have become very popular, e.g., bitcoin and chatgpt. Obvious bubbles become the only success story in our economy.

That’s obviously a disaster because the bubble will pop. But it’s also a disaster because now anything real and useful that isn’t as profitable as the bubble is being abandoned or turned over to the whims of people who have unreal sums of money they got from riding the bubble. And it’s a disaster because much of our labor force is still selling productive labor but an ever-growing segment is instead focused on reaping the bubble. We are losing the productivity of the bubble-focused people at the same time as we are deepening the class divides between them and real workers.

The detail is, these facets are actually the same thing. Classical liberalism, finance capital, uneven development / colonial exploitation, collar-identified labor, these are all social structures built around changing technology.

The ‘bubble will pop’ is not a boolean. Please revise your comment with a probability. I’m giving it 32% pop myself, + or – 10%.

If it does not pop, it isn’t a bubble, now is it, Drone Enthusiast?

No, it certainly is going to pop eventually. AI concepts themselves are not going anywhere, as much as I don’t currently think much of them. But this cyclic money farming, with Nvidia lending money to its customers to buy more of its hardware, making the numbers look good for investors and rapidly increasing the ‘value’ of all the companies involved, is 100% a bubble that 100% will pop at some point. The only question is how long it takes and how much work will be put into kicking that can down the road, hoping for a miracle solution.

If no effort is made to find a softer landing and control the fallout, this could be 2008 all over again (but likely worse, as the product is ‘useful’ and getting everywhere, so when the providers start collapsing so will the customers that have become reliant on them, alongside all the usual financial market crap of folks finding the shares they hold tanking in value, with knock-on fiscal effects on pension pots etc.).

Not a bad comment, I feared the worst, but the point made is fairly accurate it seems.

And of course nobody is going to do anything about it and all we can do is hope it is like an unchecked forest fire and just runs out of fuel eventually and goes out on its own.

These concepts are not foreign to anyone who has read ‘Franchise’ by Isaac Asimov. Published in 1955, it envisions a 2008 ‘election’ chosen by a ‘computer’. The twist (spoilers below)

Asimov wrote extensively about a “positronic brain”, even concluding that eventually humans would no longer construct them due to the complexity, merely allowing each successive generation to design the next. While it seems AMD is allowing machine code to pack transistors to achieve higher density (for a speed trade-off; look up Phoenix 2 if interested), it could certainly apply to programming and LLM coding.

**The twist is that the computer chooses a ‘voter’ to scapegoat. The computer interviews one human to verify that the data it collected is an accurate representation of the population. Whoever is chosen has to skulk home, avoiding angry people.

The person chosen in “Franchise” is not a scapegoat.

The results of the election are extrapolated from that one person’s responses.

The computer is not using the person to take the blame. It is using the person as a data source representative of the entire population, from which it can calculate the results you would get if everyone voted.

That the rest of the population gripes and complains about the selected person is a human problem.

I have a friend who drives a Tesla with the latest version of “autopilot.” It works amazingly well 99.99 percent of the time, which is probably better than most human drivers most of the time. But you still have to be in the driver’s seat with your eyes pointed at the road (enforced by cameras looking at your pupils). This is because a human ultimately has to be accountable for the car. Certainly Tesla doesn’t want the lawsuits. Human accountability is a huge part of why our society functions at all, and disembodied intelligences that can be spun up in an instant just cannot have the same incentive system.

So basically, if I understand it all, the Tesla “autopilot” takes all the fun out of driving yourself, with the added chore of babysitting the machine so that if all hell breaks loose you have front-row seats to watch all the drama unfold… and no matter how it goes, you are to blame. So in short, why would you want “autopilot” on your car?

PS: I have had a Commodore 128, a model that claims nearly 100% compatibility with the original C64. One of the first games I tried on it didn’t even get past the cracking intro. This instantly made me doubt the compatibility claim. Now how do you (or does Tesla) justify that claim of 99.99%? Does it drive down the same road 10,000 times, and when it crashed violently they stopped the test? Seriously, how meaningful are such claims, and under what conditions?

Hopefully they will keep digging. The key insight is not LLMs vs no LLMs – it’s democracy itself, and the incentives around it. Read Edmund Burke, de Tocqueville, John Adams – pure democracy is a menace, but some democracy is required. LLMs are just another tool.

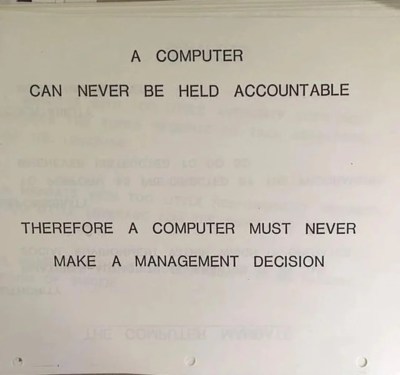

A COMPUTER CAN NEVER BE HELD ACCOUNTABLE

BUT

A PRINTER CAN!!!

https://youtu.be/N9wsjroVlu8?si=2oRrh7l2wnntm5D6