It’s a counterintuitive result that you might need to add noise to an input signal to get the full benefits from oversampling in analog to digital conversion. [Paul Allen] steps us through a simple demonstration (dead link, try Internet Archive) of why this works on his blog. If you’re curious about oversampling, it’s a good read.

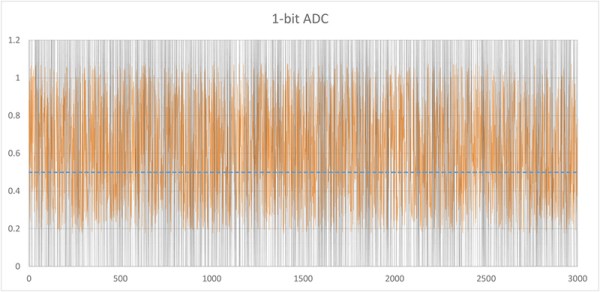

Oversampling helps to reduce quantization noise, which is the sampling equivalent of rounding error. In [Paul’s] one-bit ADC example, the two available output values are zero volts and one volt. Any analog signal between these two values is rounded off to either zero or one, and the resulting difference is the quantization error.

In oversampling, instead of taking the bare minimum number of samples you need you take extra samples and average them together. But as [Paul] demonstrates, this only works if you’ve got enough noise in the system already. If you don’t, you can actually make your output more accurate by adding noise on the input. That’s the counterintuitive bit.

We like the way he’s reduced the example to the absolute minimum. Instead of demonstrating how 16x oversampling can add two bits of resolution to your 10-bit ADC, it’s a lot clearer with the one-bit example.

[Paul’s] demo is great because it makes a strange idea obvious. But it got us just far enough to ask ourselves how much noise is required in the system for oversampling to help in reducing quantization noise. And just how much oversampling is necessary to improve the result by a given number of bits? (The answers are: at least one bit’s worth of noise and 22B, respectively, but we’d love to see this covered intuitively.) We’re waiting for the next installment, or maybe you can try your luck in the comment section.