Tired of that unsettling feeling you get from looking for paywalled papers on that one site that shall not be named? Yeah, us too. But now there’s an alternative that should feel a little less illegal: this new index of the world’s research papers over on the Internet Archive.

It’s an index of words and short phrases (up to five words) culled from approximately 107 million research papers. The point is to make it easier for scientists to gain insights from papers that they might not otherwise have access to. The Index will also make computerized analysis of the world’s research easier. Call it a gist machine.
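To get a feel for what "words and short phrases (up to five words)" means in practice, here is a minimal Python sketch that counts every run of one to five consecutive words in a piece of text. This is only an illustration of the general n-gram idea, not how the Index was actually built: the `ngrams` function, its `max_n` parameter, and the crude regex tokenizer are all our own assumptions.

```python
import re
from collections import Counter


def ngrams(text, max_n=5):
    """Count every run of 1..max_n consecutive words in text.

    Hypothetical helper for illustration only; the tokenizer is a
    crude regex and not the one used to build the real index.
    """
    # Lowercase the text and keep runs of letters, digits, and apostrophes.
    words = re.findall(r"[a-z0-9']+", text.lower())
    counts = Counter()
    for n in range(1, max_n + 1):
        # Slide a window of n words across the text.
        for i in range(len(words) - n + 1):
            counts[" ".join(words[i:i + n])] += 1
    return counts


counts = ngrams("The index does not contain the full text of any paper")
for phrase, count in counts.most_common(3):
    print(count, phrase)
```

Because only short fragments and their counts are kept, the original sentence order is lost, which is roughly why an index like this can be useful for text mining without being a copy of the paper.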

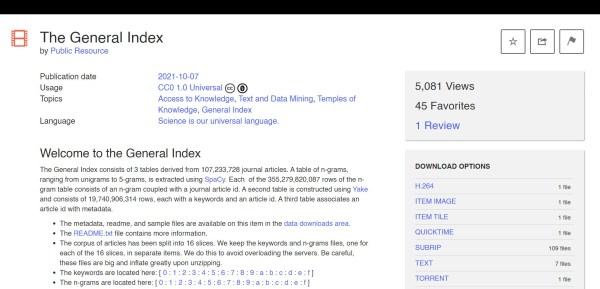

Technologist Carl Malamud created this index, which doesn’t contain the full text of any paper. Some of the researchers with early access to the Index said that it is quite helpful for text mining. The only real barrier to entry is that there is no web search portal for it: you have to download 5TB of compressed files and roll your own program. In addition to sentence fragments, the files contain 20 billion keywords and tables with the papers’ titles, authors, and DOI numbers, which will help users locate the full paper if necessary.

Nature’s write-up makes a salient point: how could Malamud have made this index without access to all of those papers, paywalled and otherwise? Malamud admits that he had to get copies of all 107 million articles in order to build the thing, and that they are safe inside an undisclosed location somewhere in the US. And he released the files under Public Resource, a non-profit he founded in Sebastopol, CA. But we have to wonder how different this really is from, say, the Google Books N-Gram Viewer, or Google Scholar. Is the difference simply that Google is big enough to get away with it?

If this whole thing reminds you of another defender of free information, remember that you can (and should) remove the DRM from his e-book of collected writings.

Via r/technology