[John] has been working on a video-based eye tracking solution using OpenCV, and we’re loving the progress. [John]’s pupil tracking software can tell anyone exactly where you’re looking and allows for free head movement.

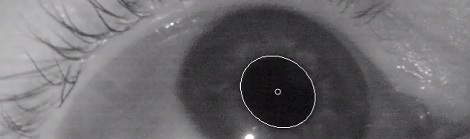

The basic idea behind this build is simple: when the eye looks straight at the camera, the pupil appears as a circle. When the eye looks off to one side, the pupil looks more and more like an ellipse to a screen-mounted video camera. By measuring the dimensions of this ellipse, [John]’s software can make a very good guess at where the eye is looking. If you want the extremely technical breakdown, here’s an ACM paper going over the technique.
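For the curious, here is a minimal sketch of that geometry. It assumes a weak-perspective camera and ignores corneal refraction, so it is not necessarily the model the linked paper or [John]’s code uses. Under that assumption, a circular pupil tilted by an angle θ away from the camera shows up as an ellipse whose minor/major axis ratio is cos θ. The function name `pupil_tilt_from_ellipse` is hypothetical; the axis lengths and orientation would come from whatever ellipse fitter you use, and you should check that fitter's angle convention.

```python
import math


def pupil_tilt_from_ellipse(major_axis, minor_axis, angle_deg):
    """Estimate how far the pupil is tilted away from the camera axis.

    Assumes weak-perspective projection: a circular pupil tilted by theta
    appears as an ellipse whose minor/major axis ratio is cos(theta).
    angle_deg is the orientation of the `major_axis` argument in degrees,
    in whatever convention the ellipse fitter uses.

    Returns (tilt_deg, minor_axis_dir_deg). The gaze has swung along the
    minor-axis direction; which way along it (e.g. left vs right) cannot
    be told from a single ellipse.
    """
    if major_axis <= 0 or minor_axis <= 0:
        raise ValueError("axis lengths must be positive")
    if minor_axis > major_axis:
        # Fitters do not always report the longer axis first; swap so the
        # ratio stays <= 1. The longer axis is then 90 degrees further round.
        major_axis, minor_axis = minor_axis, major_axis
        angle_deg += 90.0
    tilt_deg = math.degrees(math.acos(minor_axis / major_axis))
    minor_axis_dir_deg = (angle_deg + 90.0) % 180.0
    return tilt_deg, minor_axis_dir_deg


# Usage: a pupil measured as 40 px by 20 px, longer axis horizontal.
tilt, direction = pupil_tilt_from_ellipse(40.0, 20.0, 0.0)
print(f"tilt ~ {tilt:.1f} deg along the {direction:.0f} deg axis")
```

The single-ellipse sign ambiguity is one reason practical trackers combine the pupil shape with other cues, such as the LED reflections mentioned below.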

Like the EyeWriter project it was based on, [John]’s build uses IR LEDs around the edge of a monitor to increase the contrast between the pupil and the iris.

After the break are two videos showing the eye tracker in action. Watching [John]’s project at work is a little creepy, but the good news is that a proper eye tracking setup doesn’t require users to stare at a video of their own eye.

[youtube=http://www.youtube.com/watch?v=-B5JolF2N6s&w=470]

[youtube=http://www.youtube.com/watch?v=8edm_LGQWDA&w=470]

It’s pretty cool, and I’ve thought about making something similar. There are quite a few posts on Stack Overflow that discuss tracking the pupil with OpenCV.

I was thinking about making an app that moves the mouse pointer based on where you’re looking, but it seemed like a lot of work for something that probably wouldn’t be that useful.

Actually, there is fairly big potential in this. For instance, imagine auto-scrolling while reading articles, simulating a real field of view in games, and hands-free control of tools, motorized security cameras, and devices for disabled people (e.g. an on-screen keyboard as a fast way to write without physically touching anything). It sounds pretty useful to me!

> simulating real field of view in games

Please see the YouTube link in my comment below/above this!

Combine this with games like ArmA2, where the point of aim is not fixed to the screen center…

I assure you that having the cursor follow where you are looking can be VERY useful. Like all things, it boils down to preference. However, it reduces many operations from (move mouse + click) to (click) when an item of interest is identified.

Also, this is cool to me: http://www.youtube.com/watch?v=HdW1v9TPNYw

Actually, it’d be pretty cool to see a smartphone doing this for an interface, ideally with some sort of “clicking” mechanism so that scanning a page doesn’t automatically go to any links there.

I wonder if this is similar to the eye-tracking technology that is currently being used in military helicopters.

Whether it is or is something completely different, well done!

Military helicopters use head tracking in the helmet, not eye tracking.

Head tracking in the helmet? Um, your head is inside the helmet; it does eye tracking in the helmet’s HUD. My friend’s a heli pilot for the Canadian military.

Wrong.

It tracks where your head is pointing. That’s particularly useful for locking caged heat seekers prior to uncage/launch. It’s been that way up to at least the F-35. Don’t take this the wrong way, but I doubt Canada’s military is up to or beyond that point…

BadHaddy is (incomplete but) correct. For Apaches at least, the HUD monocle does track eye movement. The helmet also tracks head movement.

Source: a former Apache driver.

The massive hope is that someone finds a way to make inexpensive eye tracking. I’d then expect to see huge benefits for disabled people (see Tobii, and Tobii’s prices especially), and just as much for the likes of Kinect 2, augmenting a number of other sensors. Good luck!!

This software can be (and is) used with the exact same hardware setup as the EyeWriter; it’s also the hardware it was developed on. The only extra thing is four IR LEDs.

If you can afford a PlayStation 3 camera and 5 LEDs (one for the eye!), then you can afford eye tracking. It still requires DIY, but then again, who can complain about <$100 eye tracking?

The problem is not the technology (this is pretty much what the Tobii devices are doing already) but the market. Tobii charge high prices because the market is very small and they have no competitors. It’s one thing to make a prototype out of webcams and duct tape. It is another thing to make a mass-market version.

Very cool. I’ve had trouble with OpenCV’s calculation of moments. Sadly it only works on binary images, which is fine in this case, but I found the 2nd- and 3rd-order moment calculations to be problematic (and I think those are being used in this project). Well, I am glad that someone smarter than I sorted it out.
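For reference, fitting an ellipse from moments only needs orders up to two. The sketch below does it in plain Python so the arithmetic is visible; OpenCV's `cv2.moments` returns the same `m00`, `m10`, `m01`, `mu20`, `mu11`, `mu02` quantities. It assumes the pupil is the darkest blob in a grayscale frame, so a single threshold isolates it. The helper names are hypothetical, and nothing here confirms whether the project uses moments or a contour-based fit such as `cv2.fitEllipse`.

```python
import math


def threshold_dark(gray, level):
    """Hypothetical helper: pixels darker than `level` become foreground (True)."""
    return [[p < level for p in row] for row in gray]


def ellipse_from_moments(mask):
    """Fit an ellipse to the foreground (truthy) pixels of a 2-D binary mask.

    Raw moments m00, m10, m01 give the centroid; central second-order
    moments mu20, mu02, mu11 give the shape. For a uniformly filled ellipse
    the variance along a principal axis equals semi_axis**2 / 4, so each
    semi-axis is 2 * sqrt(eigenvalue of the normalised covariance matrix).
    Returns ((cx, cy), (semi_major, semi_minor), theta_radians).
    """
    m00 = m10 = m01 = 0
    for y, row in enumerate(mask):
        for x, v in enumerate(row):
            if v:
                m00 += 1
                m10 += x
                m01 += y
    if m00 == 0:
        raise ValueError("mask has no foreground pixels")
    cx, cy = m10 / m00, m01 / m00

    mu20 = mu02 = mu11 = 0.0
    for y, row in enumerate(mask):
        for x, v in enumerate(row):
            if v:
                dx, dy = x - cx, y - cy
                mu20 += dx * dx
                mu02 += dy * dy
                mu11 += dx * dy

    # Normalised covariance matrix [[a, b], [b, c]] and its eigenvalues.
    a, b, c = mu20 / m00, mu11 / m00, mu02 / m00
    spread = math.sqrt(((a - c) / 2) ** 2 + b * b)
    lam_major = (a + c) / 2 + spread
    lam_minor = max((a + c) / 2 - spread, 0.0)

    semi_major = 2 * math.sqrt(lam_major)
    semi_minor = 2 * math.sqrt(lam_minor)
    # Major-axis angle from +x; image y points down, so positive is clockwise on screen.
    theta = 0.5 * math.atan2(2 * b, a - c)
    return (cx, cy), (semi_major, semi_minor), theta
```

On a real frame, the threshold level and any clean-up of eyelashes or LED glints inside the blob matter far more than the moment arithmetic itself.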

– Robot

Oh, I see; two cameras are being used to extract pupil pose. Interesting and smart. I wonder why the horizontal resolution is “noisy”?

Would a third camera resolve that?

I can see how two cameras on the same horizontal would nail down side-to-side movement, but their vertical tracking wouldn’t be as precise. A third camera could correct for that, provided it’s as far away vertically as possible (e.g. cameras at top left, top right, and bottom center).

Finally… now I can finish my shoulder-mounted cannon.

How does the system allow for free head movement when the camera seems to be head-mounted? To get screen coordinates, you need head direction too.

This is a good start, but the videos are taken under ideal conditions. I have been involved in eye tracker development, and getting good results for a guy wearing glasses, leaning back in his chair in a sunlit room, is still much trickier than this, unfortunately. Then hair gets in the way, and the camera changes position as he scratches himself…

With IR LEDs attached to the monitor, you can track their reflections on the eye, allowing for (relatively) free head movement. If you’re looking at an LED, its reflection appears closer to the center of your pupil. Take four points, one at each corner of the screen, and you’re good to go. If you watch the first video, you will see that there are color-coded points: the ones overlaid on the eye correspond to the circles on the screen.
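A minimal sketch of the four-corner idea, under loudly stated assumptions: the four glints have already been detected and ordered to match the top-left, top-right, bottom-right and bottom-left LEDs, and mapping the pupil center through the glint-to-screen perspective transform is accurate enough. This is the general "four glints plus homography" approach, not a confirmed copy of [John]’s code. In OpenCV the homography steps would be `cv2.getPerspectiveTransform` and `cv2.perspectiveTransform`; here they are written out in plain Python, and all function names are hypothetical.

```python
def solve_linear(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(A)
    M = [row[:] + [rhs] for row, rhs in zip(A, b)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        if abs(M[pivot][col]) < 1e-12:
            raise ValueError("degenerate point configuration")
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for k in range(col, n + 1):
                M[r][k] -= f * M[col][k]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back-substitution
        s = M[r][n] - sum(M[r][k] * x[k] for k in range(r + 1, n))
        x[r] = s / M[r][r]
    return x


def homography_from_points(src, dst):
    """3x3 homography H (H[2][2] = 1) mapping four src points onto four dst points.

    Each correspondence (x, y) -> (u, v) gives two linear equations from
    u = (h0 x + h1 y + h2) / (h6 x + h7 y + 1), and likewise for v.
    """
    A, b = [], []
    for (x, y), (u, v) in zip(src, dst):
        A.append([x, y, 1, 0, 0, 0, -u * x, -u * y])
        b.append(u)
        A.append([0, 0, 0, x, y, 1, -v * x, -v * y])
        b.append(v)
    h = solve_linear(A, b)
    return [h[0:3], h[3:6], h[6:8] + [1.0]]


def apply_homography(H, pt):
    """Map a 2-D point through H, including the perspective divide."""
    x, y = pt
    w = H[2][0] * x + H[2][1] * y + H[2][2]
    return ((H[0][0] * x + H[0][1] * y + H[0][2]) / w,
            (H[1][0] * x + H[1][1] * y + H[1][2]) / w)


def gaze_on_screen(glints, pupil_center, screen_w, screen_h):
    """Map the pupil center to screen pixels; glints ordered TL, TR, BR, BL."""
    corners = [(0.0, 0.0), (screen_w, 0.0), (screen_w, screen_h), (0.0, screen_h)]
    H = homography_from_points(glints, corners)
    return apply_homography(H, pupil_center)


# Usage: four glints in camera pixels and a pupil center sitting between them.
glints = [(100.0, 100.0), (120.0, 100.0), (120.0, 115.0), (100.0, 115.0)]
print(gaze_on_screen(glints, (110.0, 107.5), 1920, 1080))
```

A pupil center that lands exactly on one glint maps to that LED's screen corner, which matches the intuition in the comment above. Because the homography is recomputed from the glints in every frame, small head movements shift the glints and the pupil together.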

Wouldn’t having a bunch of IR LEDs beaming into your eyes all day cause some form of fatigue? I realize you don’t perceive it, but that emitted energy is going to be absorbed to some extent.

Very good concern to have, but nope.

http://hackaday.com/2012/05/30/opencv-knows-where-youre-looking-with-eye-tracking/#comment-664457

Good observation about tracking the LED reflections. You have a good eye.

Not trying to be negative or anything, but as much as I’m fascinated by this and other methods, I really wonder what shining bright but invisible IR at the eyes for extended periods of time is going to do…

Please think about it this way: what happens when you go outside? A torrent of infrared light from the sun bombards your entire body and eyes. Damage from infrared wavelengths typically comes in the form of heat damage. These LEDs are driven using PWM, meaning that the eye gets many short breaks to “cool down”. The amount of IR light reaching the eye in this case is about an order of magnitude less than the limit established for extended workplace exposure.

On top of all of this, our bodies are MUCH more transparent to infrared radiation than visible light. I hope this soothes your worries. Going outside during the day is many many many times more dangerous than using this.

Is this something that could be used with a Kinect?

Yes! I just got the ASUS version of the Kinect, and 3D depth sensing seems like it could be really useful for cool interfaces.

I want stuff like this! http://www.youtube.com/watch?v=HdW1v9TPNYw

I was able to do this as a class project last year without the fancy setup, using only a webcam.

Check it out: http://www.youtube.com/watch?v=XcEsDJA0CWE

The method we used worked in real time and is actually very simple.

Nice! That sounds much more impressive/complicated than this. I’m just doing thresholding, ellipse fitting, and perspective projections, which are just a few easy calls to OpenCV.

Jon, is there any way you could forward me a link to eyetrax, or send me your information about it? I have some applications I am working on to help my cousins cheaply instead of having to buy high-end equipment.

Hey John! Great, precise work!

My question is: did you use a screen-mounted or a head-mounted camera?

Thank you in advance!

Hello, if you could please answer me, I would be so thankful: is there a way I can build an EyeWriter with a USB webcam, using only OpenCV?

It is for my final-year college project. Pleassssss…

Hi,

This work is pretty interesting to me. However, the link to the paper no longer works, and so far I have not been able to find it myself. Could someone provide a working link, a copy, the correct title, or a BibTeX file?

Thanks in advance!

Sebastian

Hi there. During the current COVID crisis, I am looking for help analysing eye movements/pupil tracking in pediatric patients who are not able to attend the hospital. I would ask the parent to take a video of the child and send it in to us. Any help or direction would be great.