We were initially skeptical of this article by [Aleksey Statsenko] as it read a bit conspiratorially. However, he backed up his claims by citing his sources, and we could easily check them for ourselves and reach our own conclusions. There were fatal crashes in Toyota cars attributed to sudden, unexpected acceleration. The court thought that the code might be to blame; two engineers spent a long time reviewing it and found that it did not meet common industry standards. Beyond that, there’s no definitive public conclusion.

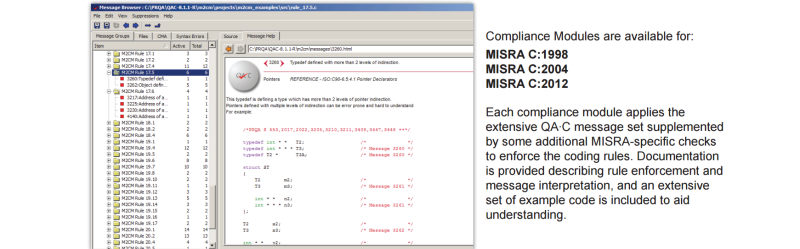

[Aleksey] has a tendency to imply that normal legal proceedings and recalls for design defects are signs of a sinister, coordinated undercurrent in the world. However, this article does shine a light on an actual dark undercurrent: more things rely on software than ever before. For safety-critical code in particular, there are standards. NASA has one, and in the pertinent case of cars there is the Motor Industry Software Reliability Association C standard (MISRA C). Are these standards any good? Are they realistic? And if they are, can they even be met?

When two engineers sat down, rather dramatically, in a secret hotel room and looked through Toyota’s code, they found that it didn’t even come close to meeting these standards. Toyota insisted that the code met its internal standards and, further, that the incidents were to be blamed on user error, not the car.

So questions remain. If the code didn’t meet the standard, why didn’t Toyota get VW’d out of the market? Adherence to the MISRA C standard is entirely voluntary, but should common rules to ensure code quality be made mandatory? Is this a sign that people still don’t take software seriously? What does the future look like? Either way, browsing through [Aleksey]’s article and sources puts a fresh and very real perspective on the problem. When it’s NASA’s bajillion-dollar firework blowing up a satellite, that’s one thing; when it’s a car any of us can own, it becomes very real.

You already correctly spelled “MISRA” when you correctly expanded the acronym.

Grammar Nazis are the lowest form of trolling

Logical fallacy detected.

“Adherence to the MIRSA C standard entirely voluntary…”

Thanks, fixed.

There’s a second one. Hint: Find Replace All.

Adherence to the MIRSA C

Moscow’s International Radiation Specialist Associate

Get your radiation training

Adherence to the MISRA C

You all (except for someone who mentioned Simulink) seem to be missing an important point: MISRA is a standard for human-written code, designed to catch human errors. The Toyota code was machine-generated from a Simulink-like environment where numerical function-blocks are chained together to achieve a numerical result. It’s basically a z-domain signal-processing function-graph, and the whole graph is executed once per sample-period.

One of the major complaints about the code is that it had thousands of shared, static variables. In human-written software, that would be a *disaster*, but actually, those “shared variables” are all the input- and output-ports of the functional blocks that the system was programmed with. Yes, if a human got hold of that code, they could erroneously write to a shared value that shouldn’t be written to, but no human-written C touches it. The graphical code-generation engine ensures that output ports are written to only by their generating block, and allows the values to be read elsewhere.
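To make the “shared statics are really block ports” point concrete, here is a hand-written sketch of what a code generator might emit for a two-block diagram (a gain feeding a unit delay). Every block output is a file-scope static written only by its own block, and the whole graph is evaluated once per sample period by a step function. The names `model_step`, `Gain1_out` and so on, and the gain value, are hypothetical; real generator output is laid out differently.

```c
#include <assert.h>

/* Hypothetical generated code for the diagram
 *     pedal_in -> [Gain1] -> [UnitDelay1] -> throttle_out
 * Every port is a file-scope static. Each is written by exactly one
 * block (its generating block) and may be read by downstream blocks. */

static double pedal_in;          /* model input: written only by model_set_pedal */
static double Gain1_out;         /* written only by the Gain1 block */
static double UnitDelay1_state;  /* state owned only by UnitDelay1 */
static double throttle_out;      /* written only by the UnitDelay1 block */

#define GAIN1_K 0.8              /* hypothetical gain parameter */

void model_initialize(void)
{
    pedal_in = 0.0;
    Gain1_out = 0.0;
    UnitDelay1_state = 0.0;
    throttle_out = 0.0;
}

/* Evaluate the whole graph once; called once per sample period. */
void model_step(void)
{
    /* Gain1 block */
    Gain1_out = GAIN1_K * pedal_in;

    /* UnitDelay1 block: output last sample's input, then store this one */
    throttle_out = UnitDelay1_state;
    UnitDelay1_state = Gain1_out;
}

/* Boundary accessors used by the surrounding (hand-written) harness. */
void model_set_pedal(double value)
{
    assert(value >= 0.0 && value <= 1.0);  /* normalised pedal position */
    pedal_in = value;
}

double model_get_throttle(void)
{
    return throttle_out;
}
```

The assumed execution model is one call to `model_initialize()` followed by `model_step()` from a periodic timer task; because of the unit delay, the throttle output lags the pedal input by one sample.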

There were other issues, such as watchdogs that were insufficiently independent and unreliable, but that’s separate from all the C and MISRA whingeing going on here. Sure, it’s not MISRA-compliant. MISRA is not relevant to machine-generated code, and would not have detected or prevented the bugs that actually existed in the system and (probably) caused unwanted acceleration: stack overflows and faulty watchdogs.
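Since stack overflow is named as one of the actual defects, here is a sketch of a common embedded countermeasure, stack painting: fill a task’s stack with a known pattern at start-up, then periodically check how much of the pattern survives (a high-water mark). An ordinary array stands in for the task stack; the pattern value, the sizes, the downward growth direction and all function names are assumptions. On real hardware the region comes from the linker script or RTOS, and the check only detects deep usage after the fact, so it complements a worst-case stack analysis rather than replacing it.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define STACK_WORDS 64u
#define STACK_PAINT 0xDEADBEEFu  /* arbitrary, recognisable fill pattern */

/* Stand-in for a task stack; on a target this would be the region
 * reserved by the linker script or the RTOS. */
static uint32_t task_stack[STACK_WORDS];

/* Fill the whole stack with the pattern before the task starts. */
void stack_paint(void)
{
    for (size_t i = 0u; i < STACK_WORDS; i++) {
        task_stack[i] = STACK_PAINT;
    }
}

/* Assuming the stack grows downward (from the highest index towards 0),
 * count how many words at the low end have never been overwritten. */
size_t stack_unused_words(void)
{
    size_t n = 0u;
    while (n < STACK_WORDS && task_stack[n] == STACK_PAINT) {
        n++;
    }
    return n;
}

/* Nonzero if usage has eaten into the safety margin; a real system would
 * record a fault and force a safe state rather than keep running. */
int stack_margin_violated(size_t margin_words)
{
    assert(margin_words < STACK_WORDS);
    return stack_unused_words() < margin_words;
}

/* Test helper: pretend the task used 'depth' words from the top. */
void stack_simulate_use(size_t depth)
{
    assert(depth <= STACK_WORDS);
    for (size_t i = 0u; i < depth; i++) {
        task_stack[STACK_WORDS - 1u - i] = (uint32_t)i;
    }
}
```

A periodic monitor task would call `stack_margin_violated()` for each task stack; the result is a high-water mark, since a value that happens to equal the pattern would be miscounted as unused.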

(my post is not to defend Toyota – clearly there are serious defects – but to point out that all of the coding-style analysis and “8500000 MISRA violations!!!eleventy!” hysteria is, well, uninformed hysteria)

Here are some better articles:

http://embeddedgurus.com/state-space/2014/02/are-we-shooting-ourselves-in-the-foot-with-stack-overflow/

https://users.ece.cmu.edu/~koopman/pubs/koopman14_toyota_ua_slides.pdf (this one also misses the point about machine-generated code and shared variables but that’s like 3 slides buried in over 50 of otherwise excellent analysis)

http://www.safetyresearch.net/blog/articles/toyota-unintended-acceleration-and-big-bowl-%E2%80%9Cspaghetti%E2%80%9D-code

http://www.i-programmer.info/news/91-hardware/6995-toyota-code-could-be-lethal-.html

This argument seems correct to me. First, there is also a MISRA AC AGC standard in the context of automatic code generation.

Second, you can document deviations (or deviation permits) in your project when you can show that they will not compromise safety, and still be MISRA-compliant. See the MISRA Compliance:2016 document (MISRA-Compliance-2016.pdf).

Of all the things that could be said about the article…you choose to point out a minor typo. Damn.

How about pointing out that companies setting their own internal standards has led to a lot of major issues? Fiascoes such as this and the Samsung battery issue could be avoided by holding companies to standards from outside their four walls. When it comes to high-velocity, extremely large-caliber projectiles (i.e., cars) with massive potential for death and destruction, a company should by all means be held to outside safety standards, and should even try to exceed them.

Read article -> mention spelling -> read linked blog article -> go down the rabbit hole -> forget followup to initial HaD comment -> continue life.

Comment threads are a thing you know.

I say we let the free market decide. If cars are deathtraps, consumers will figure it out through trial and error. /s

No they won’t…

If asbestos wasn’t banned in new installations, you can bet your ass there’d be a lot of people willingly using it instead of safer, but more expensive alternatives.

Same goes for catalytic converters and pretty much any pollutant-limiting tech, if it weren’t mandatory, very few people would pay the extra cost.

Or leaded fuel.

Or CFCs in everything.

Or PCBs.

Or DDT… the list goes on. The regular Billy Bod just doesn’t give a shit and ignores the warnings.

And in the case of the US, when it finally catches up to him, he goes to court and wins.

the /s is for “sarcasm”

Professional writing in English has fallen to an all-time low. Someone has to point it out. You can’t find an article that is even spelled right, much less one that uses the right words and references.

I think Hanlon’s razor applies here.

Writing good, secure, reliable C code is hard. Really hard. And it takes a lot of painstaking, methodical effort. That’s the sort of effort that people who are racing to get a whizzy new feature to market before other people get there first are not likely to put in. Then they demo the new feature to senior management, who say, “Great, we’ll start marketing it tomorrow.”

We are getting to the point where C is not fit for purpose for a lot of the purposes for which it is used. Humans can only deal with so much complexity; when a system’s high-level complexity increases, you need to compensate by having the tools you use to build that system hide some of the low-level complexity for you. Sure, you *can* build very complex systems from very low-level components, but doing so takes *much* more time and money. For this reason, very few people write operating systems in assembler, or write 3D engines in C, or build cars from individual atoms, and so on; though it’s certainly possible, and was once commonplace (well, the software examples), those things have got much too complex to do in low-level languages, and are much easier in high-level languages.

We need system-level tools that provide better facilities than C. I’m not sure what that looks like. Rust is an attempt at it, particularly regarding memory safety, but from what little I’ve seen, it looks like a rather clunky attempt that isn’t really gaining a lot of traction. D is another attempt at it, but not one that’s suited to real-time systems (it’s not possible to use it without a stop-the-world garbage collector). Ada was another attempt at a safe high-level language, but its defence background led to it not keeping up with innovations that might have improved it. It’s possible to write system-level code in C++, but the opportunities to break it are enormous.

Possibly what’s needed is innovation in silicon rather than in software. Perhaps many of the problems that lead to fragility are due to high-level languages that don’t map well onto low-level hardware. Would C++ be a better systems-level language if exceptions mapped directly to processor features, for instance? And the biggest mis-match of all is that modern languages give you the facade of essentially-infinite available memory when the reality is… different (I know, I know, pointers are fixed size and virtual memory lets the OS deal with where to store it – but a 64-bit pointer lets you address tens of thousands of petabytes; who has that kind of disk space available?) Even in MIRSA C, one of the first things you’re told is, “Don’t use malloc; it might fail.”

D’oh. MISRA C, of course.
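The “don’t use malloc” advice quoted above usually translates into static allocation: every buffer is sized at compile time, so running out of space becomes an explicit, testable error path instead of a heap failure at run time. A minimal sketch, assuming a hypothetical fixed capacity `MAX_SAMPLES` and a hypothetical `sample_buf` type; it shows the general idea, not the wording of any particular MISRA rule.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

/* Hypothetical compile-time capacity, chosen from the worst case the
 * system must handle. */
#define MAX_SAMPLES 16u

typedef struct {
    int    data[MAX_SAMPLES];  /* storage reserved statically, no heap */
    size_t count;              /* number of valid entries in data[] */
} sample_buf;

void sample_buf_init(sample_buf *b)
{
    assert(b != NULL);
    b->count = 0u;
}

/* Returns false (and leaves the buffer unchanged) when it is full, so the
 * caller has to decide explicitly what "out of space" means. */
bool sample_buf_push(sample_buf *b, int value)
{
    assert(b != NULL);
    if (b->count >= MAX_SAMPLES) {
        return false;
    }
    b->data[b->count] = value;
    b->count++;
    return true;
}
```

The trade-off is that memory is reserved for the worst case even when it isn’t used, which is usually acceptable in an ECU where the worst case has to fit anyway.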

That’s just lipstick on a pig. You still have a pig underneath. C itself is the problem; it just wasn’t designed for complex safety-critical code.

I think the solution may be to revert back to state machines as they are easier to simulate and conclusively test.

Programs are implementing state machines…

“Programs are implementing state machines”

Only in the sense that every computer is a Turing machine in the end. It’s a little like saying, “Human beings are organic.” True, but it has no useful content.

You don’t get state machine BEHAVIOR without deliberately writing the code to implement it.
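For readers who haven’t seen the style being argued for, “deliberately writing the code to implement it” usually means an explicit state enum and a pure transition function, so that every state/event pair can be listed and tested. The states and events below (a simplified cruise-control mode) are invented for illustration and are not taken from any real ECU.

```c
#include <assert.h>

typedef enum { CC_OFF, CC_STANDBY, CC_ACTIVE } cc_state;
typedef enum { EV_POWER, EV_SET, EV_BRAKE, EV_CANCEL } cc_event;

/* Pure transition function: the next state depends only on the current
 * state and the event, so all 3 x 4 combinations can be tested. */
cc_state cc_next(cc_state s, cc_event e)
{
    switch (s) {
    case CC_OFF:
        return (e == EV_POWER) ? CC_STANDBY : CC_OFF;
    case CC_STANDBY:
        if (e == EV_POWER) { return CC_OFF; }
        if (e == EV_SET)   { return CC_ACTIVE; }
        return CC_STANDBY;
    case CC_ACTIVE:
        if (e == EV_POWER) { return CC_OFF; }
        /* Braking or cancelling always drops out of active control. */
        if (e == EV_BRAKE || e == EV_CANCEL) { return CC_STANDBY; }
        return CC_ACTIVE;
    default:
        /* Corrupted or unknown state value: fall back to the safe state. */
        return CC_OFF;
    }
}
```

Because `cc_next` has no side effects, the full transition table can be checked exhaustively, which is the “easier to simulate and conclusively test” property mentioned above.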

C++ as a safety enhancement over C? This has to be kidding:

– C++ inserts code on its own (constructors, destructors, etc.) by best guess practices.

– C++ is more complex than a single engineer can grok, which means code written by one engineer can’t be read and understood by every other engineer of a similar skill level.

– Techniques like operator overloading or templates easily lead to unexpected behavior.

One of the few advantages of C++ is syntax to ease code organization in classes. Can be done without too many efforts in plain C, too.
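For reference, “code organization in classes” in plain C usually means a struct holding the state plus functions that take a pointer to it as their first argument, with the fields kept private by convention or by hiding the struct definition in a .c file. A minimal sketch, using a hypothetical switch debouncer as the “class”; the names and the debounce rule are illustrative only.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* "Class" state. A header could expose only
 *     typedef struct debounce debounce;
 * and keep these fields private to debounce.c. */
typedef struct debounce {
    uint8_t threshold;  /* consecutive differing samples needed to switch */
    uint8_t run;        /* length of the current run of differing samples */
    int     stable;     /* last accepted (debounced) level */
} debounce;

/* "Constructor". */
void debounce_init(debounce *self, uint8_t threshold, int initial)
{
    assert(self != NULL && threshold > 0u);
    self->threshold = threshold;
    self->run = 0u;
    self->stable = initial;
}

/* "Method": feed one raw sample, get the debounced level back. */
int debounce_update(debounce *self, int raw)
{
    assert(self != NULL);
    if (raw == self->stable) {
        self->run = 0u;                /* input agrees: restart the count */
    } else if (++self->run >= self->threshold) {
        self->stable = raw;            /* change held long enough: accept */
        self->run = 0u;
    }
    return self->stable;
}
```

What C doesn’t give you here is automatic construction and destruction; the caller must remember to call `debounce_init`, which is the trade-off the following replies argue about.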

C is horrendous when it comes to safety; C++ at least allows you to avoid some of the more tedious micromanagement that is the source of the majority of bugs.

If you seriously believe C is “safer” (in any meaning that includes “less crashing”) than C++ then you need to seriously consider learning how to write software.

And what if this automatic micromanaging doesn’t do what’s expected? Then you have well hidden bugs, while in C one can at least see what’s going on.

As C++ is pretty much a superset of C, each bug opportunity in C is one in C++, too. Plus many additional ones in C++ on top.

Just a minute ago I fixed a bug in such a high-level language (Python) that a C compiler would have caught at compile time: https://github.com/Traumflug/Teacup_Firmware/issues/246 Because the code was written in a high-level language, the bug went unnoticed for months.

I am dumbfounded that you think this. You seriously have it backwards when you said “you need to seriously consider learning how to write software”

“If you seriously believe C is “safer” (in any meaning that includes “less crashing”) than C++ then you need to seriously consider learning how to write software.”

We already know. I have worked for multi-million-$-US companies that staked their reputations and bottom line on the FACT that C is safer in embedded systems than C++.

All your objections are fixed by having coding standards and not using features that aren’t worth the trouble.

Good point! Accordingly, the solution isn’t to have even more complex languages (C -> C++ -> C#, etc.), but to implement and enforce coding standards. Enforcing coding standards essentially means to reduce language complexity (by removing the non-standard features).

Unfortunately, that is only viable where safety is absolutely paramount and resources are not an issue; abstraction is what made coding practical, after all…

Well, resources are always an issue. It’s just a case of where you allocate them – extra developer time to work with safety constraints, or legal fees on endless lawsuits. Also, abstraction makes coding practical, but working with limited abstractions, as in the NASA standard, is not the same thing as working with none at all. It’s finding the sweet spot between easy and simple that makes the product reliable.

@Traumflug

Every professional C++ team I’ve encountered basically decides on a sane subset of C++ and cordons off everything else. I have a hard time imagining working on a team where you’re allowed to use literally any and all features of the language.

I guess they could always bring back HAL/S, which was used to write the Shuttle’s flight software, often cited as some of the most bug-free embedded control software ever written, in contrast to Toyota’s bug-ridden code.

Just a reminder: the first Columbia launch (STS-1) was delayed two days because of an error in the negotiations between the quad-redundant flight control computers and the Backup Flight System (a computer identical to the other four but running separate software.)

Sorry, you’re going to come in for a kicking as a representative of all those here saying the same things.

If you are writing “Hello, world,” then yes, C is probably safer than C++.

But most of your comment is ignorant hor*****t.

“inserts code on it’s own by best guess practices”? WTF? By “best guess practices,” you mean, “in accordance with the ISO standard,” right? You do *know* there’s an ISO standard for this, don’t you? If you’re having to guess about what’s happening, that’s your ignorance, not a problem with the language.

A competent software engineer can grasp all of C++. A competent mechanical engineer probably can’t, which is why we give complex control software to software engineers, not mechanical engineers. Conversely, well-designed complex C++ software is *much* easier to understand than the same level of complexity written in C. Remember those “constructors, destructors, etc” that are put in by “best guess practices”? Those are the things that mean you don’t have to figure out *every* way your code can *possibly* exit and manually clean up in every possible scenario. I’m sure someone will wheel their wheelchair out of the dark corner over there to say, “every function should only have a single return.” This is a bone-head-stupid rule that only ever existed *precisely because C makes you find every possible exit path and clean up after yourself*. And I’m sure we’ve all seen the horribly convoluted if-else structures and use of flag variables that the rule inspires. When a rule forces you to write hard-to-read, unmaintainable code, it’s a bad rule, inspired by a bad language.
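For context on the “single return” argument: the usual C idiom for cleaning up on every exit path without deeply nested if-else blocks is one cleanup label reached with `goto`, which is exactly the bookkeeping that C++ destructors automate. A sketch, assuming a hypothetical function that reads the start of a file; note that MISRA C discourages `goto`, which is part of why this debate exists in the first place.

```c
#include <assert.h>
#include <stdio.h>
#include <stdlib.h>

/* Read up to 'max' bytes of a file into a newly allocated buffer.
 * Returns the number of bytes read, or -1 on any error. Every failure
 * path jumps to the single cleanup block, so each resource is released
 * exactly once no matter where the function bails out. */
long read_file_prefix(const char *path, size_t max, char **out)
{
    long result = -1;
    FILE *f = NULL;
    char *buf = NULL;
    size_t n;

    assert(path != NULL && out != NULL && max > 0u);
    *out = NULL;

    f = fopen(path, "rb");
    if (f == NULL) {
        goto cleanup;
    }
    buf = malloc(max);
    if (buf == NULL) {
        goto cleanup;
    }
    n = fread(buf, 1u, max, f);
    if (ferror(f)) {
        goto cleanup;
    }

    *out = buf;          /* success: the caller now owns the buffer */
    buf = NULL;          /* so the cleanup block must not free it */
    result = (long)n;

cleanup:
    free(buf);           /* free(NULL) is a no-op */
    if (f != NULL) {
        fclose(f);
    }
    return result;
}
```

In C++ the `FILE*` and the buffer would be owned by objects whose destructors run on every return path, so the function could simply return early.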

“operator overloading or templates easily lead to unexpected behavior” – yes, but hammers also easily lead to broken fingers. We don’t ban hammers. We use them safely.

Your attitude to software is exactly equivalent to a mechanical engineer banning everything from the factory floor except vices, files and hand-cranked drills. After all, lathes, mills, drills, and welders are all dangerous bits of kit. We don’t trust welding – it’s too easy to make a bad joint. And who needs a lathe or a mill anyway? You can just drill a hole and shape it with a file “without too many efforts.” If you can’t hold a hand drill square to a surface, you shouldn’t be in the machine shop!

As the complexity of a control system increases, the potential for unsafe mistakes increases at different rates in different languages, and that rate is faster in C than in C++, because the same complexity of functionality is more complex to express in C.

Consider an example of what is probably the most common cause of errors in C. You need to find the average of a list of measured values, and the length of that list is dependent on some operator-configurable parameter. For whatever reason, the length of that list *cannot* be arbitrarily limited. You need to dynamically allocate some memory on startup. In C, you’re stuck with malloc and friends. You’ll have to keep track of the length of the array and you’ll have to iterate over it with a for loop. You will almost certainly include at least one memory access bug, be it off-by-one, read-past-end, write-past-end, failure to deallocate or whatever. The bug will probably be difficult to trigger (because if it happened every time then you’d fix it). In C++, you’d use a vector allocated at construction. All those errors are still possible, but you have to go out of your way to make them. Write best-practices C++ and you avoid all of them without having to think about it.
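Here is what the careful C version of that example might look like: the length travels alongside the pointer, the allocation is checked, the loop bound comes from the stored length, and the memory is released once. It is a sketch under the comment’s own assumptions (length known only at startup); the `sample_array` type, the function names and the use of `double` are choices made for illustration.

```c
#include <assert.h>
#include <stddef.h>
#include <stdlib.h>

/* Pointer and length kept together so they cannot drift apart. */
typedef struct {
    double *values;
    size_t  len;
} sample_array;

/* Allocate once at startup; returns 0 on success, -1 on failure. */
int make_sample_array(sample_array *a, size_t len)
{
    assert(a != NULL);
    a->values = NULL;
    a->len = 0u;
    if (len == 0u) {
        return -1;                 /* an average of nothing is undefined */
    }
    a->values = calloc(len, sizeof *a->values);
    if (a->values == NULL) {
        return -1;                 /* allocation failure must be handled */
    }
    a->len = len;
    return 0;
}

/* Mean of all elements; the index runs over [0, len), avoiding the
 * classic off-by-one read past the end. */
double average(const sample_array *a)
{
    assert(a != NULL && a->values != NULL && a->len > 0u);
    double sum = 0.0;
    for (size_t i = 0u; i < a->len; i++) {
        sum += a->values[i];
    }
    return sum / (double)a->len;
}

void free_sample_array(sample_array *a)
{
    assert(a != NULL);
    free(a->values);
    a->values = NULL;              /* a second call now frees NULL, harmlessly */
    a->len = 0u;
}
```

Every line of that bookkeeping is something a C++ `std::vector<double>` sized at construction would handle for you, which is the comment’s point.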

C is a tool.

Ada is a better tool.

A fool with a tool is still just a fool.

Tool is a band.

A band-aid is a medical device

Kool-aid is a sugar drink.

I’m not sure whether I’ve read much about graphical programming languages on Hackaday, but this is what we use for engine ECU control code and test cell control code (Simulink + Stateflow and LabVIEW respectively). I believe graphical programming languages are pretty widely used in the automotive industry. Simulink essentially allows you to ‘wire up’ your data flow paths between logic blocks, and Stateflow allows you to create a state machine as simply as any flow chart tool. It then autocodes this into C code and compiles it to a binary that runs in real time on your hardware of choice.

I’m not sure what the hard-core *insert text-based language here* coders would think of this, perhaps specifically embedded C coders, but personally I think it’s fantastic. I’ve coded a lot in PHP, and a little in C/C++/Java etc. Simulink is far less prone to typo-style silly mistakes, weird logic written by a wizard-in-the-zone, addressing the wrong data, and state/data mismatches. It is also much clearer at a glance what the logic is doing; the ‘code’ could be pasted directly into a management presentation to illustrate what some logic does. Debugging (which is needed less often) is much faster, because the problem is easier to isolate when the code is easier to understand. The fact that you mostly want all of your code to fit on one screen also strongly encourages modularity, which in turn encourages unit testing and code reuse. The biggest benefit of all is development speed, though I suspect this is partly due to the good, comprehensive library that comes with Simulink. Even so, it is still faster to develop new logic than in C-based languages, and it mostly works on the second try!

To me it seems that graphical languages work very well for embedded applications, perhaps because they appear analogous to analog electronics, which, when well designed, can be neater and simpler than a digital electronics solution.

There are of course some cases where a graphical language doesn’t make as much sense. For example, I really wish I hadn’t used LabVIEW to try to process large sets of engine test cell data and generate reports; even MySQL and PHP would have been easier for this. Simulink also isn’t so good for code that needs to be written in a specific way for speed (since it’s autocoded); however, I suspect it can still achieve better code optimisation than the average coder, and certainly better than my C code!

I’d be very interested in hearing about others’ experience with graphical versus text-based languages, and primarily whether they have had specific issues with Simulink for embedded applications. We currently maintain two different engine control architectures in Simulink using the OpenECU platform, and while we are still in the relatively early stages of product development, I just couldn’t imagine doing it any other way, and this will become even more true as our systems are taken to a higher level of refinement and into high-volume production.

I would also be interested to know of any other (open source/free) graphical programming languages, as the biggest disadvantage for my personal projects would be the cost of a Simulink/LabVIEW buildchain.

Drag-and-drop programming is limited, and can have optimisation problems and other limitations.

An ECU relies on semi-custom CPUs with dedicated hardware; search for eTPUs (http://www.nxp.com/products/microcontrollers-and-processors/power-architecture-processors/mpc5xxx-5xxx-32-bit-mcus/mpc56xx-mcus/enhanced-time-processor-unit:eTPU). It’s kind of a timer on steroids, and you can have 20 or 30 of those puppies running along, all reading and writing data to the ECU and actuating things in the engine (be it sensors, solenoids, injectors, ignition, ABS, etc.). Add to that high-level monitoring and a bazillion things hooked onto the CAN bus of a semi-recent car, and you have a pretty nice fluster cuck of concurrency.

You can code that in ASM or in node.js; it will be a chore either way, and you will need to rely on almost unique parts in each ECU. Don’t expect compilers for every language in the world; expect mostly crappy GCC adaptations.

I’m a huge fan of Simulink, and I know that it’s being used for many mission-critical applications like spacecraft, satellites, and cars. Mathworks has tools that automatically generate C code from the graphical language.

_BUT_: Several years ago both the auto industry and NASA (not sure about DoD) approved what they called simulation-based testing. What that means is, after developing, say, an engine-controller design in Simulink, you also TEST it in Simulink.

Back when this Toyota story first broke, I mentioned this on the Internet, and expressed my concern that people might be putting too much faith in simulations. I got a surprising number of replies from people that said that modern coding standards were so good, it was impossible to generate code that misbehaved.

Need I say that I don’t buy this attitude for a moment?

Think about it. In the real world of embedded systems, multiple events can happen simultaneously. In a simulation, that’s by definition impossible, because the computer that the simulation is running on can only do one thing at a time. Events only seem simultaneous because the computer has a very high clock rate.
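The kind of bug being described is timing-dependent. A classic embedded example: a 32-bit tick counter incremented by an interrupt but read by the main loop as two 16-bit halves, as happens on 8- and 16-bit MCUs. The read is only wrong if the interrupt lands in the few instructions between the two halves. The sketch below replays that interleaving deterministically so the failure is visible; the counter, the split read and the `interrupt_between_halves` hook are hypothetical stand-ins for real hardware behaviour.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Counter that a timer interrupt increments. On a 16-bit MCU a read
 * compiles to two separate 16-bit loads; that split is made explicit. */
static volatile uint32_t tick_count;

/* Stands in for an interrupt firing between the two halves of a read.
 * NULL means "no interrupt this time". */
static void (*interrupt_between_halves)(void);

static void timer_isr(void) { tick_count++; }

/* Non-atomic read: low half first, then high half. */
uint32_t read_ticks_torn(void)
{
    uint16_t lo = (uint16_t)(tick_count & 0xFFFFu);
    if (interrupt_between_halves != NULL) {
        interrupt_between_halves();
    }
    uint16_t hi = (uint16_t)(tick_count >> 16);
    return ((uint32_t)hi << 16) | lo;
}

/* Common fix that avoids disabling interrupts: read high, low, high
 * again, and retry until the high half did not change in between. */
uint32_t read_ticks_consistent(void)
{
    uint16_t hi1;
    uint16_t hi2;
    uint16_t lo;
    do {
        hi1 = (uint16_t)(tick_count >> 16);
        lo  = (uint16_t)(tick_count & 0xFFFFu);
        if (interrupt_between_halves != NULL) {
            void (*hook)(void) = interrupt_between_halves;
            interrupt_between_halves = NULL;  /* simulated IRQ fires once */
            hook();
        }
        hi2 = (uint16_t)(tick_count >> 16);
    } while (hi1 != hi2);
    return ((uint32_t)hi1 << 16) | lo;
}
```

With the counter at 0x0000FFFF and the interrupt landing mid-read, the torn version returns 0x0001FFFF, about 65,536 ticks in the future, yet this can only happen once every 65,536 ticks and only inside a window of a few instructions. That is exactly the sort of case a sample-by-sample simulation can easily miss.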

My own personal opinion is, there is no freakin’ WAY a simulation is going to catch every possible combination of events that can send the software off the rails.

I’d like to see a lot more field testing, but truly, I fear that the chances of catching software errors that way are even lower.

IMNSHO there’s only one way to verify that complicated software is sound, and that’s to validate it, by inspecting the code to the point where you can absolutely guarantee that a sequence of multiple events can’t break it.

Static code analyzers help a lot (when they work at all), but I still think it would take a team of experts to totally validate the software.

Back in the day, I’ve done validation many times. The folks who assigned the task to me had in mind that I’d run a few simple tests (ask it to add 2 & 2) and write a report. They were shocked when I actually dug into the code, and verified that every single code snippet couldn’t fail.

I think that’s the only way code gets truly validated.

Jack

I came across this some time ago: There are two ways of constructing a software design: One way is to make it so simple that there are obviously no deficiencies, and the other way is to make it so complicated that there are no obvious deficiencies. The first method is far more difficult. — C.A.R. Hoare

I like this sentiment. On the whole, I only allow a more complex solution when a simpler one would become too complex to understand.

Graphical languages are a subset of what are called Domain Specific Languages. I think DSLs are a very underappreciated concept. Microsoft Research Labs have done a lot of work in this area, but I haven’t seen them take off in my industry yet. And a lot of their demos have been built on top of the .Net framework, which doesn’t help in the RTOS environment of an engine control unit, or in any embedded system.

But, DSLs are a great way of keeping application developers working within a safe zone without exposing them to the vagaries of an unpredictable garbage collector, resource leaks, buffer overflows, most type safety problems, etc. The code they emit works.

Of course, these limits aren’t without a price. They rely on an extensively tested code generator to emit code that doesn’t have any of those faults. They constrain developers from expanding beyond the boundaries defined by the DSL. And they have a few hidden constraints that some people don’t recognize: the DSL is the source code, and the generated code must always be considered an intermediate product, and treated like an .OBJ file; it must not be modified. The output code may be highly optimized and not be the most friendly to read, understand, or interface with. That also means the true cost (CPU, RAM, storage) of a particular design choice might not be well understood by the app developer. And tool versioning is critical: you can’t rely on DSL 2.0 to emit the same code as DSL 2.0.1.

And nothing about a DSL automatically solves all of the security problems that still plague the software industry. They have to be equally careful about doing things right, like validating input, preventing deliberate buffer overflow attacks, preventing injection attacks, etc. And every problem they’re coded to solve adds complexity and rules that may impact the performance of the generated code in undesirable ways, or in ways that will increase the cost of the final product.

And just because you’re using a DSL doesn’t mean it’s a *good* DSL. I left my last position because they forced a switch to a graphical DSL that was abominably bad; it was supposed to be “multithreaded” but it was impossible to do even simple tasks without massive deadlock and corruption issues – it turned out their engineers didn’t understand designing for concurrency at *all*.

I’ve done a fair amount of control system programming with packages developed internally for Simulink. I think the graphical approach is really nice. In my opinion, it’s just displaying programming functions graphically; that’s about it. But for humans, it can be immensely easier to understand this way, which is no small thing.

I would point out that it doesn’t help a bit in understanding the subtleties of control systems, only the big picture. For example, it’s easy to see if a feedback input is not hooked up. But it’s harder to appreciate stability concerns that stem from digital controls issues, if the blocks are designed to simulate continuous paradigms. This doesn’t make visual programming bad. I just point out that visual programming doesn’t solve all issues.
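A small illustration of the digital-controls point: the first-order lag tau * dy/dt = u - y is stable in continuous time for any tau > 0, but if a block implements it with forward-Euler updates, y[k+1] = y[k] + (T/tau)(u - y[k]), the discrete pole sits at 1 - T/tau and the loop is only stable for 0 < T < 2 * tau. The function names and numbers below are illustrative; block libraries generally let you choose the discretization method, and this is just one (deliberately naive) choice.

```c
#include <assert.h>

/* Forward-Euler discretisation of the continuous first-order lag
 *     tau * dy/dt = u - y
 * giving y[k+1] = y[k] + (T / tau) * (u - y[k]).
 * The discrete pole is 1 - T/tau, so this is stable only for 0 < T < 2*tau. */
double lag_step(double y, double u, double T, double tau)
{
    assert(tau > 0.0 && T > 0.0);
    return y + (T / tau) * (u - y);
}

/* Apply a unit step input from y = 0 for 'steps' samples and return the
 * absolute tracking error at the end. */
double lag_final_error(double T, double tau, int steps)
{
    double y = 0.0;
    for (int k = 0; k < steps; k++) {
        y = lag_step(y, 1.0, T, tau);
    }
    double e = 1.0 - y;
    return (e < 0.0) ? -e : e;
}
```

With T = 0.1 * tau the error decays like 0.9^k; with T = 2.5 * tau the pole is -1.5 and the output oscillates with growing amplitude, even though the diagram still shows a perfectly ordinary “lag” block with its feedback correctly wired.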

I wouldn’t go back to programming in C if I had a choice for applications like control systems.

TI has been leveling up the hardware for a while now, see: http://www.ti.com/lsds/ti/processors/dsp/automotive_processors/tdax_adas_socs/overview.page

Those are for in-car entertainment, radar and front/back cameras, and all the driving aids that are getting more and more common for the morons. Can’t park?

The car will try.

Can’t brake when the car in front brakes?

The car will try.

And so on…

Not for ECU usage.

This has gotta be a troll, right? Blaming C language for failure to adhere to a software standard. Well played.

Not quite. A guy accidentally stepped off of a high scaffold and fell to his death. The fact that there was no railing around the scaffold is just a coincidence.

A language is a tool. A saw is a tool also, and you can use a saw effectively, even if it does not have any safety features. However, I would prefer my saw to also have covers for the sharp spinning bits. It would also be nice if there were a language that somehow made it easier to write correct code. I do not know what such a language would look like, being a hardware guy. However, languages do differ wildly in how they are used. Prolog, for example, makes some problems easy that would be hard in C (or at least a lot more work).

This is not a matter of languages. If you look at the rules that were applied to Toyota’s source code – both the MISRA and NASA standards – these are rules that, if followed, result in clearer code with far fewer traps that programmers tend to fall into. This is a matter of programmer discipline, which has nothing to do with the complexity of the problem to be solved OR of the language being used (although these standards were clearly written with C/C++ in mind). But it doesn’t matter what language you use, if you don’t check return values or for cases of buffer overrun or underrun, you’re going to have trouble. The goal of standards like these is to coerce programmers into better habits, such as restating the problem in simpler terms rather than (as in Toyota’s example) writing functions 700 lines long.
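As a small example of the habits those rules push towards: the length is checked before copying, nothing is written on failure, and the outcome is reported through a return value the caller is expected to act on. The function name `copy_message` and the status codes are hypothetical; `memcpy` is the only library call used.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

typedef enum { COPY_OK = 0, COPY_BAD_ARG, COPY_TOO_LONG } copy_status;

/* Copy a message of 'len' bytes into a fixed-size destination buffer.
 * Nothing is written unless the whole message fits, so a failed call
 * never leaves a half-copied buffer behind. */
copy_status copy_message(unsigned char *dst, size_t dst_size,
                         const unsigned char *src, size_t len)
{
    if (dst == NULL || src == NULL) {
        return COPY_BAD_ARG;
    }
    if (len > dst_size) {
        return COPY_TOO_LONG;  /* would overrun dst: refuse, don't truncate */
    }
    memcpy(dst, src, len);
    return COPY_OK;
}
```

MISRA C also expects the returned status of a non-void function to be used, e.g. `if (copy_message(buf, sizeof buf, msg, n) != COPY_OK) { /* handle it */ }`, rather than silently discarded.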

I like your thoughts on this. I also had some thoughts about this issue, below is a post I placed on Facebook. My thoughts weren’t specifically about this instance, but what it means for self-driving cars. I may have a simple solution that is here for open criticism:

Statistically speaking, self driving cars will reduce traffic fatalities because they will easily outperform the average driver. That effect will reduce fatalities even more dramatically when the percentage of human drivers is either reduced by public policy or reduced substantially by consumers making that choice for themselves. Unfortunately, those of us who deliberately or incidentally employ driving strategies that mitigate our chance of being involved in a crash will now be subject to the same statistical outcomes as everyone else. Although the number of deaths and injuries will be reduced dramatically by this technology, people who are the most careful will be incurring a different risk. Above average drivers will have to accept the risk of technological complexity with a disproportionate reduction in the risk of traffic incidents when compared to the average driver. This is because the only likely scenario for a collision in a world of exclusively self-driving cars is a failure of some part of that autonomous system or the mechanical vehicle itself.

I submit this (linked article below) as an example of such a system failure.

What are the solutions for this problem of technical complexity and application of liability to such systems?

I suggest we require automobile and control-systems manufacturers to publicly release their software source-code and their electronic architectures no less than 180 days prior to use on any public roadway. In this way, the consumers can verify the safety and validity of the control systems and control strategies employed. This also will foster an even playing field among the competing control strategies so they can work effectively with each other on the roadway. This solution still allows each manufacturer to develop in secret and patent certain technologies without them being “black box” technologies when utilized in production vehicles.

Standards just give paper pushers a job.

Car software should be open source and there is no reason on earth why all cars cannot have the same software where control of the vehicle is concerned. If a manufacturer wants to add bits by calling standard open source routines to make a cruise control or make the windows open in an accident then provided they release the code it is OK. Electronics in cars gives us the only time in history where an end user cannot work out how something works after something has gone wrong no matter how good they are.

+1

They can add all the bits and bobs they want to non-critical systems like AC, stereo, etc.

But engine, safety and stability systems should be both separate from everything else and open source.

but of course it is not going to happen

I’d have to disagree here. There is a lot of innovation in software, and with the level of competition, giving it away could really kill a car maker. Cars are also incredibly dangerous, and giving people free access to modify their own car’s programming spells disaster. I *wish* it could be that easy. Perhaps there could be a layer on top of the hard-coded safety standards that lets people experiment with certain things. Locking out direct access to the vehicle controls, and requiring requests for certain actions to go through a sort of Java wrapper, could solve the problem. If the lower level disagrees, it returns an error code explaining which safety constraint your code would conflict with.
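A rough sketch of that layered idea, written in C rather than Java since C is the language the MISRA discussion in this thread is about. Everything here is hypothetical: the function `request_throttle`, the `SAFETY_ERR_*` codes and the numeric limits are made up for illustration and are not taken from any real vehicle platform. User code can only ask; the locked-down lower layer either applies the request or returns a code saying which safety rule it would break.

```c
#include <stdbool.h>

/* Hypothetical error codes returned by the locked-down lower layer. */
typedef enum {
    SAFETY_OK = 0,
    SAFETY_ERR_OUT_OF_RANGE,   /* request outside the allowed range */
    SAFETY_ERR_BRAKE_CONFLICT, /* throttle requested while braking */
    SAFETY_ERR_RATE_LIMIT      /* change too abrupt for one control step */
} safety_status;

/* Illustrative limits only; real values would come from the manufacturer. */
#define MAX_THROTTLE_PCT 100
#define MAX_STEP_PCT     10

typedef struct {
    int  throttle_pct;   /* current throttle command, 0..100 */
    bool brake_pressed;  /* brake pedal switch */
} vehicle_state;

/* User-level code may only *ask*; the lower layer decides.
   On rejection the state is left unchanged and the reason is returned. */
safety_status request_throttle(vehicle_state *vs, int requested_pct)
{
    if (requested_pct < 0 || requested_pct > MAX_THROTTLE_PCT) {
        return SAFETY_ERR_OUT_OF_RANGE;
    }
    if (vs->brake_pressed && requested_pct > 0) {
        return SAFETY_ERR_BRAKE_CONFLICT;
    }
    int delta = requested_pct - vs->throttle_pct;
    if (delta > MAX_STEP_PCT || delta < -MAX_STEP_PCT) {
        return SAFETY_ERR_RATE_LIMIT;
    }
    vs->throttle_pct = requested_pct;
    return SAFETY_OK;
}
```

The design choice is that the user layer never touches `throttle_pct` directly; every change goes through one function that can refuse it.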

Open source would still work. It would, however, require something our modern society seems determined to forget: personal responsibility. If you modified your car so that it posed a danger, you should be held accountable.

So insurers would require the unmodified software to be running, and could check this live along with the GPS position, speed and other metrics, which should be enough to satisfy them.

And it would still be possible to hack the source code on a race track, or to simulate it. Car manufacturers keep their job: designing and building cars. They also get a standard framework to validate compliance with safety and pollution norms.

It would allow a whole car software ecosystem and market to coexist and develop.

It would also make it possible to compare car performance more accurately and to check safety compliance easily.

It would also make it possible to assign responsibility between the manufacturer’s embedded code/hardware and the norm-defined standard driving model when an accident happens, which insurers will ask for.

It would also allow bugs in this model or in the manufacturer’s code to be corrected, and allow local zones to be defined where the government would enforce this or that version (and possibly its own backdoor).

Nonetheless, the code and tools could still be open source, but could only be hacked on a car with a government-approved, insurer-validated signed certificate.

It would not only boost competition in car manufacturing, but also seriously improve innovation by removing barriers.

Also (as many turbo-diesel hot rodders have discovered), the manufacturer would be within its rights not to honor any warranty claims on the vehicle’s power/drivetrain systems. If you change the factory calibrations (software) on what was a thoroughly tested system, it’s no longer the same system, and therefore the initial specifications under which the vehicle was warrantied no longer exist.

You play, you pay. I’m now going ahead and using HP Tuners to adjust my new C7 Z06 to get a bit more rear-wheel horsepower and cut my quarter-mile times. I am fully aware that GM can scan the ECM and determine that new “tune” calibrations are in place, and that they could deny any engine or power/drivetrain warranty claims.

This “open source” garbage is the geek world equivalent of the ‘tree hugger’ movement.

Allowing the end user to screw around with factory software opens up nothing but liability issues (emissions, safety and, as mentioned above, warranty coverage).

@oodain

Personal responsibility won’t bring back my family after they’re run over by some dumbass doing the automotive equivalent of disabling his page file to try to improve performance.

No, but your family also won’t be brought back after a car from brand X fails due to production errors or similar. My point was that in either case you won’t see anyone doing any better without incentives; the fact that not hurting others isn’t incentive enough is also a big part of this problem.

“Cars are also incredibly dangerous, and giving people free access to modify their own car’s programming spells disaster.”

People already have access to modify their own cars. There is a billion-dollar industry built on the back of modifying cars. You can turn a shopping trolley into a nitrous-enhanced rocket sled. Open source is not giving people tools to do anything they can’t already do. It does, however, allow more eyes to see what has been done, and to correct and enhance the code. Closed source allows flaws to go unchallenged.

Well, it sounds good, right? Open source -> more eyes -> fewer problems -> safer stuff. Then came the OpenSSL disasters, and the rest is history. It doesn’t work this way.

I get your point, but I disagree. More eyes reveal flaws. The fact that people can then exploit those flaws doesn’t change the fact that they were flaws in the first place, and just as exploitable in their *undiscovered* state. Opening the code shines the ugly light on everything. It is then incumbent upon the people who wrote it (the manufacturer or whoever is responsible for it) to *fix it*, or at least acknowledge it and say “we won’t fix it”. But at least if it is open source, the possibility that it can be fixed by *somebody* exists. Burying your head in the sand and pretending that exploitable or dangerous flaws in the code don’t exist is simply foolish. IMO, of course.

The OpenSSL thing showed it to be MORE true. It was found, now it is fixed. The alternative hasn’t changed.

Closed source -> fewer eyes -> same problems as open source -> the problem stays exploitable.

As soon as you start modifying anything related to the engine you are very much in a gray area. Manufacturers spend billions complying with emission standards and getting type approvals; if you modify anything related to the engine, it is no longer what was approved and not really legal anymore.

If you modify your engine, just keep a modified ECU on hand. This works with about 90% of cars (and if not, there is usually a workaround), and the manufacturer will have no idea. It may be a slight cost increase, but when you remove the brains you also remove the security guard.

@Evotistical The manufacturers don’t care unless you try to get something fixed under warranty. It is the EPA etc. that cares: if you modify anything related to the engine, you have no proof that it meets emission standards.

@fonz Having a non-modified engine/ECU also doesn’t give you proof that it meets emission standards. See the VW/Audi issues, and other manufacturers who disclaim emissions outside of “normal operating conditions”, which happen to line up with emissions test parameters rather than real-world driving conditions.

@HackSdrA But having a modified ECU means you have no proof that what you are driving will pass the standardized emissions test, which puts you in the same place as VW, which was presented with an $18 billion fine.

That’s like saying all OS/phone software should be open source. The Apple/Toyota users would never allow it, as they would lose their ‘superior’ walled garden.

With phones, Wi-Fi, etc., it is also about preventing modified radio control code from polluting the airwaves, or, with cars, modified engine control code from polluting the environment.

You forgot to use the word “more” twice.

MISRA C can be met easily, but it is just as easy to write crappy code that is compliant. It just prohibits some things that are likely to lead to errors.

Easily? Have you tried? Requiring MISRA-only code dramatically reduces the amount of existing code you can reuse, even if you pay for it. Any project of significant complexity will require a lot more resources to be fully MISRA compliant.

There are common safety standards for road vehicles, namely ISO 26262 and others. What the author of the blog entry investigated is a violation of MISRA C, which is not a safety standard at all. It’s more a coding guideline for the C programming language, meant to avoid errors and ensure maintainability and portability between different compilers.

So what the author wants to tell us is that if Toyota had chosen to write their software in English instead of Japanese, the accidents wouldn’t have happened. This is bullshit.

MISRA is a bunch of rules for people who want to shut down their brain during coding. As can be seen in the picture, there are automatic tools that check if you adhere to the rules.

The standard contains rules like “use Yoda conditions”, “don’t use goto or continue”, and “you must use curly braces for single statement blocks”. Older versions of the standard said “errno shall not be used”. Recent additions to the standard now force the use of errno and also say “set errno=0 before calling any function that might set errno”.
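For readers who have not seen these rules in practice, here is a small C sketch in the style the comment describes: `errno` cleared before an errno-setting call (`strtol`) and checked afterwards, braces on every block, and constant-on-the-left comparisons. It illustrates the pattern only and has not been checked against the full MISRA C rule set; `parse_long` is a hypothetical helper name.

```c
#include <errno.h>
#include <stdbool.h>
#include <stdlib.h>

/* Parse a whole decimal string into a long.
   errno is cleared before the errno-setting call and tested after it,
   every if-body has braces, and comparisons put the constant first. */
bool parse_long(const char *text, long *out)
{
    char *end = NULL;
    long value;

    errno = 0;                        /* clear before calling strtol */
    value = strtol(text, &end, 10);

    if (0 != errno) {                 /* e.g. ERANGE on overflow */
        return false;
    }
    if (end == text) {                /* no digits were consumed */
        return false;
    }
    if ('\0' != *end) {               /* trailing junk after the number */
        return false;
    }
    *out = value;
    return true;
}
```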

Yeah, I wonder how many here have actually written MISRA-compliant code… Doesn’t seem like many have.

MISRA is a good idea, but the execution is _horrible_. It has a bunch of downright stupid things in it to cater to compilers from the 80s, like “avoid enums”, whereas with any modern compiler, using enums instead of defines lets the compiler do type-checking for you.
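A small C illustration of the enum-versus-define point, assuming GCC or Clang with `-Wall`. One caveat: in C an enum is still an integer type, so the compiler will not stop you from assigning an arbitrary `int` to it; the practical gain is mostly diagnostics such as `-Wswitch`, which flags a `switch` over an enum that misses a value. The gear names are invented for the example.

```c
/* The #define version: three unrelated integer constants. */
#define GEAR_PARK_DEF    0
#define GEAR_REVERSE_DEF 1
#define GEAR_DRIVE_DEF   2

/* The enum version: the constants belong to one named type. */
typedef enum {
    GEAR_PARK,
    GEAR_REVERSE,
    GEAR_DRIVE
} gear_t;

/* Because this switch is over an enum and has no default label,
   GCC/Clang with -Wall warn if a new gear_t value is added but not
   handled here. A switch over plain ints from #defines gets no warning. */
const char *gear_name(gear_t g)
{
    switch (g) {
    case GEAR_PARK:
        return "P";
    case GEAR_REVERSE:
        return "R";
    case GEAR_DRIVE:
        return "D";
    }
    return "?";   /* only reached for out-of-range values */
}
```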

Also, goto and continue have their places in code. Just don’t treat C like line-based BASIC. ;)
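The best-known place for `goto` in C is a single cleanup path, where each early failure jumps forward to release whatever has been acquired so far. A minimal sketch; the function `load_prefix` is hypothetical:

```c
#include <stdio.h>
#include <stdlib.h>

/* Read the first n bytes of a file into a newly allocated buffer.
   Returns 0 and hands the buffer to the caller on success, -1 on failure.
   Each failure jumps forward to release only what was acquired so far. */
int load_prefix(const char *path, size_t n, unsigned char **out)
{
    int rc = -1;
    unsigned char *buf = NULL;
    FILE *fp = fopen(path, "rb");

    if (fp == NULL) {
        goto done;
    }
    buf = malloc(n);
    if (buf == NULL) {
        goto close_file;
    }
    if (fread(buf, 1, n, fp) != n) {
        goto free_buf;
    }
    *out = buf;      /* success: caller now owns the buffer */
    buf = NULL;
    rc = 0;

free_buf:
    free(buf);       /* free(NULL) is a no-op on the success path */
close_file:
    fclose(fp);
done:
    return rc;
}
```

Without `goto`, the same function needs either nested `if` blocks or duplicated cleanup code in every error branch, which is exactly where resource leaks tend to creep in.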

The same thing happened to Audi, and their excuse was the same: “American drivers are confused by the pedals”:

http://embeddedgurus.com/barr-code/2014/03/a-look-back-at-the-audi-5000-and-unintended-acceleration/

That article is by Michael Barr, who was one of the engineers that investigated the Toyota code. His articles on the Toyota case are well worth reading:

http://embeddedgurus.com/barr-code/2013/10/an-update-on-toyota-and-unintended-acceleration/

Standards do much more than give paper pushers a job. They give a baseline to aim for or above, and a way to ensure quality work.

I grew up in the days before car software was a thing. A lot of these types of problems could easily be solved by good ol’ KISS: Keep It Simple, Stupid. Mechanical and electrical engineers, take heed: just because you can do a thing does not mean you should.

You don’t need a ton of hardware and software to implement a speed control. In fact, the more complex you make such a system, the more likely it becomes to fail. This holds true for other examples as well, such as the automatic window scenario above. The latest whiz-bang feature usually ends up being an attack vector or a fault waiting to happen. Some parts of a car should not be electronically (note the term: electronically, not electrically) controlled (steering is a prime example). If the engineer DOES decide to control such systems electronically, they should have NO outside connections to any sort of network that could allow malicious people to take control of them. This is not only common sense, but simple good security practice.

The point I aim to make with all this is simple. Any system can fail. The rate of failure generally goes up with every level of complexity. Thus, the simpler you can keep a system, the more robust it generally ends up being. This is not to say never design a complex system, but rather to keep the complexity as low as possible while still achieving your design goals.

Complexity doesn’t equal a higher failure rate; some of the most complex systems on earth seem to be among the ones that fail the least. Removing computers from modern cars is impossible if you want to keep the engine performance of modern cars, which is highly dependent on being able to vary and predict engine conditions several times a second.

A purely reactive analog system would always be worse.

I think you underestimate what is achievable with analogue. While your digital system can be correct 16 million times a second, an analogue system can be right all the time right down to the pico-second and beyond.

And you think you can achieve pico-second accuracy from analogue? You get all sorts of nasty artefacts on those timescales (undershoot, overshoot, ringing etc), plus you suffer from limited accuracy due to your physical components, and drift as they age.

Digital is in general much more repeatable, and the quantisation it introduces is less of an issue than the problems of analogue.
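To put a number on “quantisation”: an ideal N-bit converter spanning 0 to Vref volts has a step (1 LSB) of Vref / 2^N, and rounding to the nearest code keeps the error within half a step. The sketch below assumes an ideal converter with no noise, offset or nonlinearity, which real parts do not achieve; the function names are illustrative.

```c
/* Size of one quantisation step (1 LSB) of an ideal N-bit converter
   spanning 0..vref volts. */
double lsb_volts(double vref, unsigned bits)
{
    return vref / (double)(1UL << bits);
}

/* Quantise a voltage to the nearest code, as an ideal rounding ADC would,
   clamping to the valid code range 0 .. 2^bits - 1. */
unsigned long quantise(double volts, double vref, unsigned bits)
{
    unsigned long max_code = (1UL << bits) - 1UL;
    double code = volts / lsb_volts(vref, bits) + 0.5;  /* round to nearest */

    if (code < 0.0) {
        return 0UL;
    }
    if (code >= (double)max_code) {
        return max_code;
    }
    return (unsigned long)code;
}
```

For a 12-bit converter on a 5 V range that is 5 / 4096, roughly 1.22 mV per step, so about ±0.61 mV of quantisation error inside the range.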

I’ll get closer to pico-second accuracy with analogue than you can get with digital. The laws of physics *are* analogue.

Not necessarily; it depends on what you want. You can have infinitely more data “points” (analog = continuous), but the accuracy of those points can be called into question. So you can have many more, less accurate points, or fewer, more accurate points. Which is closer to the laws of physics?

Sure, one might have a continuous signal, just like a naively seen real world (the Planck length and time suggest the universe is probably granular, so no, nature most likely isn’t analogue in the sense you think of it; as far as we know there are indivisible time units). But that continuous signal would also be inaccurate, and that inaccuracy would only grow as it was manipulated. So is it really “closer to the real world” than a more accurate signal with fewer data points?

I don’t underestimate what is possible with analogue; I think you misunderstand my point. You are right that analogue can in some ways be more reactive. The issue isn’t digital vs. analogue signal types, with their respective pros and cons, but the fact that analogue systems have a limited set of possible operations. There is simply stuff that is practically impossible to do with pure analogue logic.

In the real world of actual solutions this is well known, which is also why you find plenty of platforms that allow for mixed signals, using the pros of both systems to provide services you simply couldn’t offer without either of them.

Which leads directly back to my point that one cannot avoid using digital. With analogue logic one would have a very difficult time doing meaningful real-time prediction, and you would also have the constant noise issue, which in practice means that many analog signals today are digitized as close to the source as possible.

“time right down to the pico-second”

Really!! What is the part number of this fabulous op-amp or instrumentation amplifier that works at that speed?

A steel cable from the accelerator pedal to the carburettor.

@Wilko

I can’t tell if you think cars still have carburetors, or if you think returning to the age of nothing-but-carb would be a good thing. Either way you clearly don’t know anything about cars.

He also doesn’t know anything about Young’s Modulus if he thinks pressing a pedal connected to a long steel cable is going to result in movement on a picosecond timescale.

Speed? Who said anything about speed? You’re thinking about a micro-controller again.

I actually regret my comments about analogue. Not because it is wrong but because it is more true than expected.

I started in the analogue era. We even had analogue computers long before digital existed.

Now when I mention analogue, people think I am talking about signals rather than processing electronics.

Someone even mentioned that the universe is granular. Well, perhaps it is at a subatomic level, but in anything to do with everyday electronics it is analogue, just like the traditional laws of physics (i.e. not quantum mechanics).

So to finish up, here’s a tip:

Your micro-controllers cannot synthesize natural world processes that are of course based on the laws of physics.

An analogue circuit *can* synthesize natural world processes because *get this* analogue circuits are *also* based on the laws of physics.

Röb: When you’re in a hole I suggest you stop digging. Anyone who has ever used an electronics simulation package (like SPICE) knows you can simulate real-world physical processes digitally.

Control systems engineers (who are properly qualified to judge) prefer digital over analogue for anything other than simple systems. Digital is more accurate, more reliable and easier to change, but you get quantisation effects (there’s always a trade off in engineering). Those effects are almost always less of an issue than trying to keep an analogue system working reliably.
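As a tiny example of simulating a physical process digitally, the C sketch below integrates the charging of an RC circuit, dV/dt = (Vin - V) / (RC), with forward Euler steps. Real circuit simulators such as SPICE use more sophisticated integration methods and full device models; this only shows the principle, and `rc_step_response` is a hypothetical name.

```c
/* Voltage across the capacitor of a series RC circuit after a step input
   of vin volts, starting discharged, integrated with forward Euler:
   V[k+1] = V[k] + dt * (vin - V[k]) / (R * C). */
double rc_step_response(double vin, double r_ohms, double c_farads,
                        double t_end, double dt)
{
    double tau = r_ohms * c_farads;            /* time constant, seconds */
    double v = 0.0;                            /* capacitor starts empty */
    long steps = (long)(t_end / dt + 0.5);     /* number of whole steps */

    for (long i = 0; i < steps; i++) {
        v += dt * (vin - v) / tau;             /* one Euler step */
    }
    return v;
}
```

With R = 1 kΩ, C = 1 µF (time constant 1 ms) and 1 µs steps, the result after 1 ms comes out at about 0.632 of the input, matching the textbook 1 - e^(-1) for one time constant.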

I didn’t dig a hole. I discovered that modern engineers understand far less about analogue than even I expected.

There’s no point in talking digital to me, as I understand that as well. I use (V)HDL all the time. I code micros. I started coding in the 1970s.

The reason people are responding with comments about digital capabilities is that they understand that (and only that), and so do I. The point is that they *don’t* understand analogue.

Röb: You’re not getting any better. Anyone who has ever studied control systems engineering knows that it’s about solving the mathematics of physical systems. They will have covered both analogue and digital systems, and will get to choose which works best for the system they are working on, but the first step is always to understand the physical process they are controlling.

There are good reasons that analogue systems aren’t used much any more, and your failure to recognise this shows that you don’t understand the subject properly.

@[Sweeney] My failure ROFL

You mean your failure to read!

I mentioned analogue and some thought I meant just signals, as in analogue signals, without realizing that analogue can also be a process.

My time is wasted with you.

So tell me, do you use VHDL or Verilog?

How far back do you go with electronics, both analogue and digital?

@[Sweeney]

You also need to understand the difference between ‘synthesize’ and ‘simulate’ or ‘emulate’.

@Röb: You have once again conclusively proved you don’t understand control systems. They always start and end in the analogue domain. They always convert analogue quantities into something that can be processed (voltage and/or current for analogue control systems, 1s and 0s for digital), the inputs get processed and the outputs end up as analogue quantities (typically a position or a time).

The important facts here are that it doesn’t matter how the processing is achieved as far as the controlled device is concerned, and that it takes them a finite amount of time to respond to a change from the controller. An engine ECU doesn’t need to compute outputs quicker than about 1000 times per second as the engine can’t respond to them any faster than that (the time it takes one cylinder to fire at max RPM). A digital system can apply sophisticated error detection and correction to the inputs, can handle more of them simultaneously (a modern engine is full of sensors telling the ECU how it is performing and what the environment around it is like), can change its response depending on other factors (has the driver selected Eco or Sport mode for example) and can monitor the position of output servos to check for errors.

Now, in what conceivable circumstance would an engine control system need a continuously varying signal to the control servos that is accurate to the picosecond? How do you propose to keep the system inside its calibration limits, and how are you expecting to handle errors and noise from the engine sensors?
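The arithmetic behind update-rate arguments like this one is straightforward if you assume a four-stroke engine, where each cylinder fires once every two crankshaft revolutions. The engine sizes and speeds used below are example values, not figures from the thread.

```c
/* Four-stroke assumption: every cylinder fires once per two crank revolutions. */

/* Combustion events per second for the whole engine. */
double firings_per_second(double rpm, unsigned cylinders)
{
    double revs_per_second = rpm / 60.0;
    return revs_per_second * (double)cylinders / 2.0;
}

/* Milliseconds between successive firings (of any cylinder). */
double ms_between_firings(double rpm, unsigned cylinders)
{
    return 1000.0 / firings_per_second(rpm, cylinders);
}
```

For example, a V8 at 7500 rpm fires 500 times per second, one combustion event every 2 ms; a four-cylinder at 6000 rpm fires 200 times per second, one every 5 ms.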

@[Sweeney]

I am sick of your ‘straw man argument’. Someone said it wasn’t *possible* to use analogue and I just mentioned that it *is possible*. I didn’t say it was optimum or practical or useful or preferable.

Don’t start jumping down my throat with your crap if you can’t even read.

I would pit my abilities against yours any day – at least I can read!

So what was it, do you use VHDL or Verilog? And when did you start in electronics?

Yes, there’s an obvious reason you didn’t answer these questions.

I have yet to see an analog fuel injection engine (be it electrical or mechanical) with a controlled catalytic converter that matches or exceeds a digital one…

Analog would just add failure points, because you’d have to have compensation for the outside environment on everything, and that would require complicated INDIVIDUAL tuning of every car before it could be released from production. You’d need a bunch of ASICs for everything, and the cost of developing these would be astronomical. Nobody in their right mind would do this.

In the same manner, nobody is making analog vector-control VFDs, even though it should definitely be possible… ever wonder why?

Last but not least, picosecond response is pointless if the fuel injectors react in milliseconds on a good day…

Are you, like [Sweeney], suggesting that analogue be used for engine management?

Not sure whether, e.g., a window opener or steering wheel on the same CAN bus (which is what Taylorian wrote about) really affects the performance of the gas engine positively.

KISS and separation of tasks are essential steps towards more reliability. Not that a window opener separated from engine control makes that window opener more reliable, but one can be very sure it doesn’t affect the engine negatively.

If each device, sensor or actuator has its own bus, it is not a bus anymore. It is the engineer’s job to evaluate the bandwidth requirement of each bus and comply with it.

A faulty CAN driver (or software that drives the transceiver incorrectly) can easily stop all communication on the CAN bus, just as a physical short circuit of the wires can.

The safety critical nodes also demand a much higher quality standard (hw & sw) which makes them more expensive.

So yes, it’s a good idea to make a split between safety critical nodes and the rest of the system.
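One common way to express that split in software is a gateway between a comfort bus and a powertrain bus that forwards only an explicit allow-list of message IDs and drops everything else. The sketch below is hypothetical: the CAN IDs and their meanings are invented, and a filter like this does nothing about the electrical faults mentioned above, which is what physically separate buses are for.

```c
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical CAN identifiers that the comfort bus (windows, infotainment)
   may send towards the powertrain bus. Anything not listed is dropped. */
static const uint32_t ALLOWED_TO_POWERTRAIN[] = {
    0x120u,  /* illustrative: cruise-control switch state */
    0x3A0u   /* illustrative: ambient temperature */
};

/* Default-deny: forward a frame only if its ID is on the allow-list. */
bool gateway_should_forward(uint32_t can_id)
{
    size_t n = sizeof ALLOWED_TO_POWERTRAIN / sizeof ALLOWED_TO_POWERTRAIN[0];

    for (size_t i = 0; i < n; i++) {
        if (ALLOWED_TO_POWERTRAIN[i] == can_id) {
            return true;
        }
    }
    return false;
}
```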

There really is no reason for power windows and infotainment centers to be sharing a bus with the engine control systems.

Key to your statement: “some of”. I would argue, based on experience, that MOST of the most complex systems have a higher failure rate than lower-complexity systems of equivalent use. Of course, this all depends on factors such as quality of workmanship, quality of design, and quality of materials used.

I never said to eliminate the computer control from an engine. I said to keep the complexity of the system to a minimum, within the design goals. If you use two sensors in a feedback system where one should do the trick, all you have done is doubled the chance of failure. You now have two sensors that can fail at any given time. And the performance gain you get with both sensors may easily be outweighed by the performance losses when the system is trying to cope with the missing or incorrect input from the bad sensor.

Also, the performance has nothing to do with the reliability (i.e. failure rate). Remember that there are always modes or levels of failure in a system, from minor glitches to complete failure. Rate of failure does not take that into account, as a failure is a failure at that point.

Complexity need not be tied to the level or type of control equipment. A digital system need not be inherently more complex than an analog one, or vice versa. Complexity is merely a measure of how many steps need to be taken to achieve an outcome.

I disagree. Having seen production lines that make even self-driving cars look like simplified kids’ toys, I can tell you that complexity and failure rate have very little correlation, or the world would look very different. Just think about some of our infrastructure and how complex that is, and compare it to something as simple as a car: if those two systems had the same number of control nodes, the car would probably have more failed nodes over a given time than the large, complex system.

One also has to remember that many of the more complex systems do more, so one could argue that you have to compare which system fails most for the work done.

Oh look – a hair to split!

“If you use two sensors in a feedback system where one should do the trick, all you have done is doubled the chance of failure”

If you have two sensors (of same or different type, presumably for better feedback), and either sensor could be used alone to provide most of the necessary feedback, then you’ve potentially halved the failure rate (due to sensors) because you have a measure of redundancy. Of course this would be offset by the increased code complexity required to fall back to the single-sensor mode if one of the sensors is deemed faulty.
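The two positions above differ in an assumption that is easy to make explicit. If each sensor independently fails with probability p over some period, a design that needs every sensor fails with probability 1 - (1 - p)^n (about 2p for two sensors), while a design where any one sensor suffices fails only if all of them do, p^n. The sketch assumes independent failures and perfect fallback logic, neither of which is guaranteed in practice.

```c
/* Probability that a system loses its sensor input, given n sensors that
   each fail independently with probability p over the same period. */

/* Series: every sensor is required, so any single failure counts. */
double p_fail_all_required(double p, unsigned n)
{
    double p_all_ok = 1.0;
    for (unsigned i = 0; i < n; i++) {
        p_all_ok *= (1.0 - p);
    }
    return 1.0 - p_all_ok;
}

/* Redundant: any one working sensor is enough, so all must fail. */
double p_fail_any_sufficient(double p, unsigned n)
{
    double p_all_fail = 1.0;
    for (unsigned i = 0; i < n; i++) {
        p_all_fail *= p;
    }
    return p_all_fail;
}
```

With p = 0.01, two sensors that are both required give about 0.0199, while two sensors that can each do the job alone give 0.0001.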

“If you use two sensors in a feedback system where one should do the trick, all you have done is doubled the chance of failure.”

Assuming the failure isn’t a drastic one (i.e. 0 V all the time, or something), if you use 3, you can pinpoint which one failed, making maintenance easier. With 2 or 3 (and appropriate failure modes) for critical sensors, the machine can keep working. Say you were in Nevada on some backroad and a sensor fails. If you’ve got redundant sensors, the vehicle carries on. If you’ve got a single sensor, you are looking at a bad situation, possibly (since I picked Nevada) a life-threatening one.

This comes at the slight cost of extra code. That code would already be a point of failure, and adding a comparator (against other sensors or expected values) isn’t going to introduce much more in terms of possible failure. If you think about all the car problems you’ve had, how many have been electronics-related? I can think of two I’ve seen, and that was because the cars got partially flooded. Of those, one was an automatic seatbelt controller. The other dozens of times, it’s been mechanical devices or sensors. I’ve not seen a code error.
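The three-sensor case can be sketched as a simple 2-out-of-3 voter: take the median reading, and flag a sensor as suspect only when the other two agree with each other but not with it. This is an illustrative sketch; `vote3`, the tolerance parameter and the struct layout are assumptions, and a production voter would also need range and rate checks.

```c
#include <stdbool.h>

/* Result of a 2-out-of-3 vote over three redundant sensor readings. */
typedef struct {
    double value;    /* agreed reading (median of the three) */
    int    suspect;  /* index 0..2 of the outlier, or -1 if none */
    bool   valid;    /* false if no two sensors agree within tolerance */
} vote_result;

static double absdiff(double a, double b)
{
    return (a > b) ? (a - b) : (b - a);
}

static double median3(double a, double b, double c)
{
    if ((a <= b && b <= c) || (c <= b && b <= a)) {
        return b;
    }
    if ((b <= a && a <= c) || (c <= a && a <= b)) {
        return a;
    }
    return c;
}

vote_result vote3(const double s[3], double tol)
{
    vote_result r = { 0.0, -1, false };
    bool agree01 = absdiff(s[0], s[1]) <= tol;
    bool agree02 = absdiff(s[0], s[2]) <= tol;
    bool agree12 = absdiff(s[1], s[2]) <= tol;

    if (!agree01 && !agree02 && !agree12) {
        return r;    /* no majority: caller must fall back to a safe mode */
    }
    r.valid = true;
    r.value = median3(s[0], s[1], s[2]);

    /* Flag a sensor only when the other two agree but neither agrees with it. */
    if (agree12 && !agree01 && !agree02) {
        r.suspect = 0;
    } else if (agree02 && !agree01 && !agree12) {
        r.suspect = 1;
    } else if (agree01 && !agree02 && !agree12) {
        r.suspect = 2;
    }
    return r;
}
```

For readings 10.0, 10.1 and 50.0 with a tolerance of 0.5, the voter reports 10.1 and flags sensor 2; for 0.0, 10.0 and 20.0 no two sensors agree, so the result is marked invalid.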

Automation is inevitable. It’s human nature to remove human control. We are in pursuit of machines and devices that do all the work for us. I have assisted steering on my vehicle, and it truthfully can be awkward when my car disagrees with what I want to do, but I realize I can’t be fully attentive to every situation. So we put a small amount of faith into things working. My car also has collision avoidance. It’s happened to me twice now. In both instances I might actually have hit the driver ahead of me, though I probably would have avoided it. What it’s taught me is to be more vigilant. It taught me to stop being careless, because it’s almost insulting to see a computer fix your errors for you. This isn’t spell check. I think this is reshaping human driving behaviour, and it should be required on all vehicles. There are a lot of idiots out there who drive like they are dodging tornados.

But that inevitable automation only invites a lack of vigilance. I have nothing against automation. I just believe it needs to be designed to be as simple as possible while maintaining a certain level of redundancy and built-in safety. How does that old saw go… familiarity breeds contempt? When every car has accident avoidance tech and everybody just expects it, what happens when it fails? A small amount of faith at the beginning can turn into a mountain of disappointment later.

Please define simple in this context. Most people agree that unnecessary complexity shouldn’t be there, but that isn’t what most complexity is; most complexity is a consequence of function.

Simple is defined in this context as doing only those things that need doing. It is defined as keeping the number of components and the interactions between them to the minimum allowable by the intended outcome.

By “keep it simple” I mean don’t use a supercomputer to do what a calculator can accomplish. Do the thing you intend to do in as few steps as possible. Do it in the most straightforward manner possible. Allow for failure and design for graceful failure to a safe mode of operation. Don’t include features for the sake of including features.
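“Design for graceful failure to a safe mode” can be sketched as a small mode selector that steps down as redundant inputs are lost. The mode names, and the rule that the mode never steps back up without a reset, are assumptions made for this illustration, not a description of any particular controller.

```c
/* Hypothetical operating modes, ordered from full function to safe stop. */
typedef enum {
    MODE_NORMAL,     /* all inputs healthy */
    MODE_LIMP_HOME,  /* reduced function, enough to get off the road */
    MODE_SAFE_STOP   /* no trustworthy input: go to the safe state */
} op_mode;

/* Choose the mode from how many redundant inputs are still healthy.
   The result never improves on the current mode (latching until a reset),
   so an intermittent fault cannot make the behaviour flip back and forth. */
op_mode next_mode(op_mode current, unsigned healthy_inputs, unsigned total_inputs)
{
    op_mode wanted;

    if (healthy_inputs == total_inputs) {
        wanted = MODE_NORMAL;
    } else if (healthy_inputs > 0u) {
        wanted = MODE_LIMP_HOME;
    } else {
        wanted = MODE_SAFE_STOP;
    }
    /* Higher enum value means more degraded; keep the worse of the two. */
    return (wanted > current) ? wanted : current;
}
```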

I work in a production facility making components for complex machines. We use complex machines to make those parts. The most reliable machines in our particular facility are always those that are designed with as few moving parts as possible to do the job. They are designed to fail gracefully. When they do fail, it’s usually not a catastrophic failure.

To give an example, I currently run 2 inertial welders. One is older, but has simple control logic. The other is a newer state of the art machine, controlled by a computer system with two industrial robots feeding parts into it.

The old machine requires more human intervention, but makes parts at a faster rate than the new machine.

The new machine can run 24/7 with little intervention. It rarely does. It consists of 5 separate systems: the welder, the control computer, 2 robots, and the outbound conveyor system. A failure in any of these systems brings the whole system to a halt. When it runs great, it runs great. When it doesn’t, people on the far end of the building get to hear the maintenance staff cuss.

The underlying welders are the same. They work in the same way and do the same job. The only difference is in the complexity of the control systems. The more complex of the 2 fails at a much higher rate than the simple one. And it need not be the actual welder that fails. If a robot loses a signal and attempts to run a sequence out of turn… catastrophic failure. A simple proximity sensor goes bad and the machine loses the ability to unload parts. A small ripple in the power system and the computer goes down. And so on and so forth.

All the while, the older welder is still chugging along happily with its simple system.

And how does any of that differ from the point I was making about unnecessary complexity? My point was that stuff is usually complex for a reason. In your welder example there is a critical factor missing: it does more. With conveyor systems and feeding robots, the processes it can be part of are more numerous. I have worked on bottling plants, so it isn’t as if I haven’t seen complex machines at work; most of those use several automated feeders as well.

If you compare those to the older bottling plants, there isn’t even a contest. You would need several miles of old-school, non-fed bottling plant to reach similar performance, and as far as I can gather from the old-timers, the reliability of modern machines is a lot better as well.

That is not only because of fewer errors per bottle made, but also because of fewer machine failures per bottle made; even if there were fewer days between failures, more would have been produced.

On top of that, diagnosing and repairing modern machines can be a lot easier with the help of complex troubleshooting programs and better design and documentation. Stuff evolves.

+1. I was just going to say the same thing. There are trade-offs to everything. As things get more complex, we become less aware of the underlying issues and potential dangers.

The (valid) point you make also doesn’t mention the programming language. For the C vs C++ people, idiots are supremely skilled at writing crap in any language…

You guys are going to think I’ve gone off the rails myself, but as an old dude, my thoughts keep going back to this: back in the ’40s, I bought a clapped-out Model A roadster for $75. It was rusty and rattled, the top was gone completely, and the mechanical brakes were close to no brakes at all. The windshield wipers didn’t work, but that was OK because the windshield was gone also.

_BUT_: That old junker never failed to get me where I was going, no matter what. You get in it, turn the switch, press the starter button (on the floor), the engine starts, and off you go.

That old car just ran and ran. I don’t even recall having to put oil in it.

The thing is, none of the important stuff (even the brakes) ever failed, simply because it didn’t have enough parts _TO_ fail. An engine, trannie, differential, four wheels and steering rods. That’s about it.

Today I drive a ’98 Buick. It’s a great car and the engine & running gear still work fine, just like the Model A’s. But the windshield wipers no longer start when it rains; the cruise control doesn’t work, neither does the fuel gauge, neither does the auto-dimmer on the rear-view mirror. The heater/AC control is doing strange things, and the key-in-lock alarm goes off whenever I open the driver’s side door. Oh, and the computer keeps saying “check tire pressures,” even though the tire pressures are fine.

In short, the stuff that was dead-nuts reliable on the Model A is still dead-nuts on the Buick. Every part that involves bits and bytes is failing, one thing at a time.

There’s a lot to be said for simplicity. KISS

Jack

You make a valid point. The root of these problems is that trying to make the standard ICE compliant with software control is largely an exercise in putting lipstick on a pig, and we’d be far better off moving to a different prime mover altogether that does respect KISS.

And doesn’t the Buick have EFI? Quite a few bits and bytes there…

It’s not the bits and bytes that fail; it’s the purposely crappy PHYSICAL manufacturing that makes them fail :P

I like how the blog focused on all the “potential” code issues, considering that up at the top is PVS-Studio, a static code analyzer for C, C++, and C#.

There was no focus on driver floor mats, mechanical pedal issues, the fact that there are TWO Hall-effect sensors for the pedal, how the throttle works, or what happens when the ECU detects an error.
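To make the two-sensor point concrete, here is a minimal C sketch of a redundant pedal plausibility check. It assumes both Hall-effect channels have already been converted to percent of pedal travel (real pedals often give the two channels deliberately different output curves), and the 5% limit and all names are hypothetical, not taken from Toyota’s code or the report.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdlib.h>

/* Hypothetical limit: maximum allowed disagreement between the two
   channels, in percent of pedal travel. */
#define PEDAL_MISMATCH_LIMIT_PCT 5

/* Cross-check two pedal-sensor channels that are assumed to report the
   same position in percent (0..100). Returns true when the pair is
   plausible; on any fault, *demand_pct is forced to 0 (idle). */
bool read_pedal(int sensor_a_pct, int sensor_b_pct, int *demand_pct)
{
    assert(demand_pct != NULL);
    *demand_pct = 0; /* default: idle */

    /* Out-of-range values suggest a broken wire or a short. */
    if (sensor_a_pct < 0 || sensor_a_pct > 100 ||
        sensor_b_pct < 0 || sensor_b_pct > 100) {
        return false;
    }
    /* The channels disagree by more than the allowed margin. */
    if (abs(sensor_a_pct - sensor_b_pct) > PEDAL_MISMATCH_LIMIT_PCT) {
        return false;
    }
    /* Plausible: use the lower reading as the conservative choice. */
    *demand_pct = sensor_a_pct < sensor_b_pct ? sensor_a_pct : sensor_b_pct;
    return true;
}
```

Falling back to idle on any disagreement is one conservative design choice; the sketch does not try to guess which channel is the correct one.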

Check out the V503 “error” on their examples page: http://www.viva64.com/en/examples/

And for a bit of perspective: http://www.autoblog.com/2016/09/08/ford-expands-door-latch-recall-by-1-5-million-cars/

The issue could be with how the ECU reads the pedal position at start-up. Chapter 4.5 of ETCSi_Report_Sept242012 covers Pedal Position Learning and Related Vehicle Operating Modes.

If the driver starts the vehicle with their hoof on the pedal, the ECU may (or will) treat that as the new idle position of the pedal. The driver now has to press past this point for the engine to come off idle. The other effect is that the pedal becomes more sensitive to movement: 10 mm of travel could be 50% throttle, not 10%.
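A rough C model of the behaviour described above: the ECU stores whatever position it sees at start-up as idle and scales the remaining travel to 0 to 100% demand. The 100 mm full travel, the linear scaling and the function names are assumptions for illustration; Chapter 4.5 of the report may describe a different algorithm.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical pedal calibration, in millimetres of raw travel. */
typedef struct {
    double learned_idle_mm; /* raw position captured at start-up */
    double full_travel_mm;  /* raw position with the pedal on the floor */
} pedal_cal_t;

/* "Learn" idle: whatever the sensor reads at start-up becomes zero demand. */
pedal_cal_t learn_idle(double position_at_startup_mm, double full_travel_mm)
{
    assert(position_at_startup_mm < full_travel_mm);
    pedal_cal_t cal = { position_at_startup_mm, full_travel_mm };
    return cal;
}

/* Map a raw pedal position to percent throttle demand by scaling the
   travel that remains between learned idle and the floor. */
double throttle_demand_pct(const pedal_cal_t *cal, double position_mm)
{
    assert(cal != NULL);
    if (position_mm <= cal->learned_idle_mm) {
        return 0.0; /* at or behind the learned idle point */
    }
    double span = cal->full_travel_mm - cal->learned_idle_mm;
    double pct = 100.0 * (position_mm - cal->learned_idle_mm) / span;
    return pct > 100.0 ? 100.0 : pct;
}
```

With idle learned correctly at 0 mm, 10 mm of travel gives 10% demand. With a foot resting at 80 mm during start-up, the first 80 mm do nothing and the next 10 mm give 50%, which matches the numbers in the comment.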

OK, so I will reply to my own comment. I just skimmed parts of the report I quoted above (from the blog that supplied the story): in Chapter 12, the team could NOT find a fault with the TPS, the ECU source, or the throttle position sensor.

The dead giveaway is that the article (how many of you read it?) is from PVS-Studio, a static code analyzer for C, C++, and C#. I doubt they even got to the summary or read the PDF they quoted.

“There are a lot of idiots out there who drive like they are dodging tornados”

Everyone should also be required to complete a course on the consequences of their actions if they exceed the speed limit. Perhaps a day out with the local paramedics for those caught speeding for the third time.

If you have ever had the misfortune to encounter a fatal road accident, it sharpens up your ideas for the future.

Having a license to throw a ton of scrap metal around at high speed comes with responsibilities which far too few drivers take seriously enough.

The same could be said of engineers who write software. They should understand the consequences of failure to test. Designing safe (and fail safe) systems is a hard, complex and expensive business. Open source allows more eyes to write, test, debug and QA the code.

Setting up Joomla for your auntie’s cake shop does not even begin to qualify you; it takes many man-years of effort and experience.

Another thing is making sure that there are direct consequences that fall hard on those responsible up and down the chain.

I completely agree with you. I think there should be stiffer penalties for crimes here (N. America), while some of the things that are punishable are laughable. Did you know you can be fined and imprisoned for metal detecting relics on state park grounds? These are objects that were left behind and that nobody was willing to go looking for, but if you discover them as a hobbyist, you can be penalized for it. Flying your RC craft on state grounds is punishable. People are really getting into trouble for these things, while nearly killing people on the highway through horrible driving, texting or talking on cell phones goes unpunished.

That’s why all those great empires faded in ancient times, the Middle Ages and the modern age. With such regulations, and with this invitation for us to be careless, history will repeat itself.

@skaarj

Ahahahaha. Which empires were these?

caught speeding? give me a break.

speeding in and of itself is not dangerous. emergency services drive above the speed limit regularly. I would also suggest most people have exceeded the speed limit with no ill effect.

“speed is a factor” is often quoted but it’s just that, a factor.

Driving faster than the conditions and your talent allow is when it becomes a problem. You won’t get a ticket for driving at the limit if it’s raining or foggy, even though your stopping distances are longer.

Sticking to speed limits with no understanding or consideration of how to drive a vehicle to the conditions is just another symptom of poor driving standards.

and so what?

what was the actual problem with the code? where was the bug? was the actual cause of the issue identified?

just because it doesn’t meet some apparently arbitrary standard doesn’t mean it’s bad. a standard that doesn’t allow unions in an embedded system? oh but there are exceptions right?
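For readers who haven’t run into it, this is the kind of code the union complaint is about: reinterpreting a float’s bits, a common embedded trick. The C sketch below assumes a 32-bit IEEE-754 `float`, and the function names are ours. As far as we know, MISRA C:2012 makes its rule against `union` advisory rather than required, which is where the “exceptions” come in; copying with `memcpy` is the usual union-free alternative.

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>

/* Reinterpret a float's bit pattern through a union. Reading a member
   other than the one last written is permitted in C99 and later, but it
   is exactly the kind of construct MISRA C discourages. */
uint32_t float_bits_union(float f)
{
    union {
        float as_float;
        uint32_t as_u32;
    } pun;

    assert(sizeof(float) == sizeof(uint32_t)); /* assumes a 32-bit float */
    pun.as_float = f;
    return pun.as_u32;
}

/* Union-free alternative: copy the object representation byte for byte. */
uint32_t float_bits_memcpy(float f)
{
    uint32_t bits;

    assert(sizeof(float) == sizeof(bits));
    memcpy(&bits, &f, sizeof bits);
    return bits;
}
```

Both functions return 0x3F800000 for `1.0f` on an IEEE-754 machine; the difference is only in which construct a code checker will flag.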

And what is the fix? well some here would suggest adding layers and layers of abstraction! nobody ever wrote bad software in Rust or C++, right? it’s impossible! why not simply run the car on Java!?

of course the car shouldn’t misbehave, but any software developer will tell you that you can’t catch every single bug; it’s impossible. of course they are just lazy, but the real problem is that people don’t know how to drive anymore.

The one guy whose car was accelerating and who called 911 to report it as he was crashing?

how about press the brake, or take the car out of gear, or turn the engine off? I don’t know anyone who was taught that they have to pump a vacuum assisted brake system if the engine isn’t providing the vacuum, nobody has experience of what to expect when power steering is gone. any trained chimp can drive a car on the motorway and apparently get a license but have absolutely no control or idea when it really counts.

This is a good point. If there’s a standard, then people often cleave blindly to it. That’s sort of the point; you want people to always produce things with repeatable quality and safety… but in this Toyota case, not meeting the standard is still far from proving that there was a software defect that caused the acceleration.

Not trying to let Toyota off the hook; the code should meet industry standards, period. I’m just stressing that not meeting a standard is insufficient proof by itself: the failure needs to be duplicated to confirm the fault.

pff, I think I disagree on every single one of your points. It’s the same argument Toyota stuck to: There are no system problems, only stupid drivers.

Yes, there are indeed stupid drivers, and they do stupid things, and by the way they aren’t just driving cars. They’re also driving boats and trains and airplanes and bulldozers and cranes on top of buildings.

But IMO that’s not the issue here at all. What’s the issue is software bugs that kill people.

Time was, stopping a runaway car was simple if you knew how. Even if the throttle stuck wide open, all you had to do was to turn the ignition off. Or simply put the transmission in neutral. Yes, the engine might blow, but you’re not dead.

Back when folks were complaining that the Audis were running away, I thought I had an airtight argument. Modern disk brakes, I argued, will stop any car at any speed. They are _WAY_ more powerful than the engine.

But then someone pointed out to me: drum brakes used to have a lot of servo effect, so you could stop the car with enough pedal pressure even if the power brake system failed.

But disk brakes don’t _HAVE_ a servo effect. It takes a lot of pedal pressure to stop.

They also pointed out: modern power brakes are all vacuum-boosted from the engine vacuum. That surprised me a bit. I thought they’d have a hydraulic pump on the engine or something, like power steering, but they don’t.

So what happens when the throttle is wide open? Vacuum goes to zero.

Stomping the binders might stop an Audi in a parking lot, but a Toyota going 80 on an Interstate? Not gonna happen. First the brakes start to fade, then the vacuum disappears, and you’re jelly on a guard rail.

So still no problem, you say: turn the engine off. Except these days there’s no switch; there’s only a button connected to the computer. Put it in neutral? Again, there’s no mechanical linkage, just a switch connected to the computer.

Digital computers _CAN_ fail, meaning that they can either get into a tight infinite loop, or simply freeze in place. Once that happens, none of those digital sensors and motors and solenoids mean a thing. Nothing works. Once the CPU stops reacting to interrupts, again you’re toast.

Now that I’m on the subject, I’d like to point out that there STILL are ways to stop a runaway car. The easiest is a serpentine movement with the steering wheel: your tires can scrub off a lot of speed by slewing from side to side. Better yet, just spin the car out. You might end up cutting doughnuts down the road, but that’s a whole lot better than flying off an exit ramp at 110 mph, as one family did.

You can also just sideswipe the guard rail. That’ll do it.

For now, that is. Now that some cars have the computer controlling the steering wheel, and laser, radar, and video-controlled collision avoidance, you might even be losing that last-ditch way of stopping the thing.

Better yet, maybe you should buy an old Model A, or at least a 1980 Toyota. ;-)

Jack

Well, if the computer froze or stopped taking input, I would expect the fuel injection, spark timing and throttle control to go out the window too, so you should be OK, unless you are running a diesel, in which case you’d better hold on tight. I know my throttle flap is sprung closed by default.

I would expect an automatic control to be on a separate module, certainly in my limited experience.

If you can’t stop the engine by standing on the brakes I don’t know how successful wiggling the wheels or scraping the sides will be, better off rolling it into a ditch!

As for push-button start, I have a good old turn key, but I was once in a friend’s car at ~70 mph when the question came up of what would happen if his plastic card key were removed from the dash. He insisted the car wouldn’t bat an eyelid and proceeded to show me. To everyone’s amusement, the car died and refused to restart until it had come to a complete stop on a busy motorway.

Malcolm Gladwell did a podcast about the “sudden acceleration” issues with Toyota and other car manufacturers. The title was “Blame Game.” You should give it a listen.

Bottom line — people panic and floor the accelerator when they think they are braking. They can’t stop because they are flooring it, and their brain is so locked up that they don’t think rationally about other options — like lifting your foot off whatever pedal you think you’re pushing, and trying to brake again. It’s actually worth knowing this in case it ever happens to you.

A test was done with one of the cars involved. With the accelerator floored and held down, the brake was pressed with the other foot. The car stopped. Even if the car really was accelerating on its own (which it wasn’t), the brakes win.

It doesn’t mean the coding and other issues brought up here aren’t a concern. But they didn’t lead to death in the way the headline states.

Everybody here is missing the point. The real problem began when the EPA set such high (and stupid) standards on emissions that the manufacturers decided the human being should no longer be in control of the engine’s throttle plate. Nowadays the accelerator pedal is just a suggestion, and government technocrats and engineers a continent away decide where that throttle should actually be, based on several lookup tables. (I know this because I used to reverse engineer ECUs for a living.) Anyhow, the point is that the human no longer has a hard, simple, and reliable connection to the engine. Multiply this by 10^7 units and it is a matter of WHEN, not if, a failure will occur. Does anyone see the problem here?
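As a rough illustration of “the pedal is just a suggestion,” here is a one-dimensional pedal-to-throttle map with linear interpolation, sketched in C. Real ECU maps are typically multi-dimensional (engine speed, load and more), and the table values below are made up; only the lookup-and-interpolate technique is the point.

```c
#include <assert.h>
#include <stddef.h>

/* One breakpoint of a hypothetical pedal-to-throttle map. */
typedef struct {
    double pedal_pct;    /* driver pedal demand */
    double throttle_pct; /* target throttle-plate opening */
} map_point_t;

/* Linear interpolation in a table sorted by ascending pedal_pct.
   Inputs outside the table are clamped to the first/last entry. */
double lookup_throttle(const map_point_t *map, size_t n, double pedal_pct)
{
    assert(map != NULL && n >= 2);
    if (pedal_pct <= map[0].pedal_pct) {
        return map[0].throttle_pct;
    }
    for (size_t i = 1; i < n; i++) {
        if (pedal_pct <= map[i].pedal_pct) {
            const map_point_t *lo = &map[i - 1];
            const map_point_t *hi = &map[i];
            /* Fraction of the way between the two breakpoints. */
            double t = (pedal_pct - lo->pedal_pct) / (hi->pedal_pct - lo->pedal_pct);
            return lo->throttle_pct + t * (hi->throttle_pct - lo->throttle_pct);
        }
    }
    return map[n - 1].throttle_pct;
}
```

With an example table of {0, 0}, {50, 30}, {100, 100}, half pedal gives only 30% throttle and 75% pedal gives 65%: the driver’s foot and the plate angle are related, but not one-to-one.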

In the Mitsubishi drive-by-wire ECUs there is a primary CPU (32-bit SH-4) that, among many other things, runs the PID control of the throttle plate. Because Mitsu saw just how sketchy this is, they added an 8-bit Motorola CPU whose only job was to double-check the throttle position against the accelerator position sensor. Even there I have my doubts about how good it was, because there was not a one-to-one or even a simple correlation between pedal position and desired throttle plate position. The point is that it was very complex, they spent a great deal of time and effort on safety, and I’m still not sure it is possible to get it 100% right.
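The comment mentions a PID loop driving the throttle plate. For readers unfamiliar with the term, a textbook discrete PID step looks roughly like this in C; the gains, output limits and the simple anti-windup rule are illustrative assumptions, not Mitsubishi’s implementation.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical PID state for a throttle-plate position loop. */
typedef struct {
    double kp, ki, kd;       /* proportional, integral, derivative gains */
    double integral;         /* accumulated error * time */
    double prev_error;       /* error from the previous step */
    double out_min, out_max; /* actuator limits, e.g. motor duty in % */
} throttle_pid_t;

void pid_init(throttle_pid_t *c, double kp, double ki, double kd,
              double out_min, double out_max)
{
    assert(c != NULL && out_min < out_max);
    c->kp = kp;
    c->ki = ki;
    c->kd = kd;
    c->integral = 0.0;
    c->prev_error = 0.0;
    c->out_min = out_min;
    c->out_max = out_max;
}

/* One control step toward the target plate angle; dt is the loop period
   in seconds. Returns the clamped actuator command. */
double pid_step(throttle_pid_t *c, double target, double measured, double dt)
{
    assert(c != NULL && dt > 0.0);
    double error = target - measured;
    double derivative = (error - c->prev_error) / dt;
    c->prev_error = error;

    double candidate_integral = c->integral + error * dt;
    double out = c->kp * error + c->ki * candidate_integral + c->kd * derivative;

    /* Clamp to the actuator limits and only keep the new integral when
       the output is not saturated (a simple anti-windup rule). */
    if (out > c->out_max) {
        out = c->out_max;
    } else if (out < c->out_min) {
        out = c->out_min;
    } else {
        c->integral = candidate_integral;
    }
    return out;
}
```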

I ended up designing and building my own ECU for a similar application that was completely drive by wire. I kept the throttle position code very simple and I used another CPU with a completely different compiler and completely different code to crosscheck the first CPU – and if things were more than 5% out of whack it would literally pull the power to the servomotor which had a spring to close it automatically. Even with that I’m still nervous to this day.
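The cross-check scheme described above can be sketched as a small monitor routine in C. The 5% threshold comes from the comment and is treated here as percentage points of throttle; the struct, the function names and the choice to latch the trip (once power is cut it stays cut until reset) are our assumptions.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

/* Threshold from the comment: more than 5 points of disagreement trips
   the monitor. */
#define CROSSCHECK_LIMIT_PCT 5.0

typedef struct {
    bool servo_power_enabled; /* false once the monitor has tripped */
} throttle_monitor_t;

static double abs_diff(double a, double b)
{
    return a > b ? a - b : b - a;
}

/* Run once per control cycle on the second CPU. main_target_pct comes
   from the primary CPU; check_target_pct is the monitor's own,
   independently computed target. Returns whether the servo may stay
   powered. The trip is latched: agreement later does not re-enable it. */
bool monitor_step(throttle_monitor_t *m, double main_target_pct,
                  double check_target_pct)
{
    assert(m != NULL);
    if (m->servo_power_enabled &&
        abs_diff(main_target_pct, check_target_pct) > CROSSCHECK_LIMIT_PCT) {
        /* Cut servo power; the return spring then closes the plate. */
        m->servo_power_enabled = false;
    }
    return m->servo_power_enabled;
}
```

Latching keeps a transient moment of agreement after a fault from re-enabling the servo, which fits the “pull the power and let the spring close it” approach.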

I would say about 75 to 80% of the code inside those ECUs is there to meet emission requirements. It’s sort of like today’s cars that are superlight and get great gas mileage. All of this works until you hit a 100-year-old tree that doesn’t really care about your EPA standards. Yes, you may have a carbon fiber and aluminum frame, but that old oak tree still weighs 40,000 pounds. It all sounds great on the chalkboards of academics and bureaucrats thousands of miles away who don’t actually have to drive, maintain, or live with these things. In practice it’s another matter.

Dammit. I misspelled emissions in the first sentence! (This is what happens when you let voice-to-text write for you, but I guess I am just making my point again.)

Great comment. I got ya.

There’s a reason for EPA standards. Ask an older resident of LA for some background.

There were no emissions standards at all when those people were younger, so they were exposed to much higher levels of pollution.

Some of the latest standards made by CARB are just grasping at straws, and purposefully designed to fail small high-compression engines in imports while giving large low-compression engines in many domestics a passing grade.

The whole PPM vs grams per mile standard.