Culture Shock II, a robot by the Lawrence Tech team, first caught our eye because of its unique drive train. On closer inspection we found a very well-built robot with a ton of unique features.

The first thing we noticed about Culture Shock II was its giant 36″ wheels. The wheel assemblies are actually unicycles modified to be driven by the geared motors at the bottom. Such large wheels were chosen to keep the center of gravity well below the axle, which makes the robot very self-stabilizing. The robot also has two casters with a suspension system that act as dampers and stabilizers on shocks and inclines (pictured below).

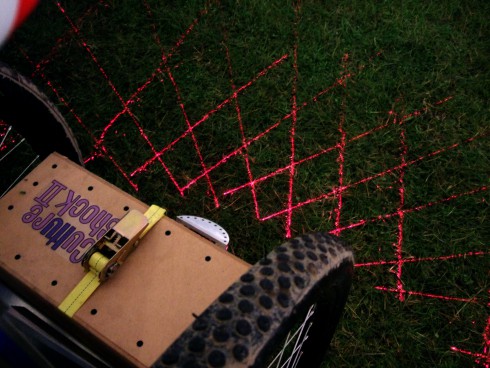

Next we noticed the strange semi-circles mounted above the casters. On further inquiry we found that the robot uses 10 lasers to project cross-hairs on the ground so that it can use its stereo vision at night.

The robot has two stereo vision cameras from Videre, a brand that has been very popular at this year’s IGVC. The cameras’ views overlap and provide the AI with a pixel-associated 3D point map. The team also came up with a clever way to adapt the cameras to different lighting conditions: two “candy canes” stick out into the robot’s field of vision. The robot can look at these and use an algorithm to adjust the colors accordingly. This helps greatly with white line detection. (The robots must stay within two white lines painted about 10 feet apart on the grass.)
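For readers curious how a reference target like the candy canes could drive color correction, here is a minimal sketch in Java. It is not the team's algorithm, which we did not get to see. It assumes a simple per-channel gain computed from a reference patch whose true color is known; the class name, method names, and sample values are all hypothetical.

```java
final class ReferenceWhiteBalance {
    /**
     * Per-channel gains that map the color the camera currently sees on the
     * reference target (observedRef) to the color it is known to be (knownRef).
     * Both arrays are {R, G, B}. This gain model is an assumption for illustration.
     */
    static double[] gains(double[] observedRef, double[] knownRef) {
        double[] g = new double[3];
        for (int c = 0; c < 3; c++) {
            // A zero reading would divide by zero, so fall back to "no change".
            g[c] = observedRef[c] > 0 ? knownRef[c] / observedRef[c] : 1.0;
        }
        return g;
    }

    /** Applies the gains to one RGB pixel and clamps each channel to 0..255. */
    static int[] correct(int[] rgb, double[] g) {
        int[] out = new int[3];
        for (int c = 0; c < 3; c++) {
            long scaled = Math.round(rgb[c] * g[c]);
            out[c] = (int) Math.max(0, Math.min(255, scaled));
        }
        return out;
    }

    public static void main(String[] args) {
        // Hypothetical readings: the white stripe looks yellowish in evening light.
        double[] observed = {200, 180, 150};
        double[] known = {240, 240, 240};
        int[] fixed = correct(new int[] {100, 90, 75}, gains(observed, known));
        System.out.println(fixed[0] + "," + fixed[1] + "," + fixed[2]);
    }
}
```

With gains like these applied to every pixel, a painted white line should come out closer to neutral white regardless of the lighting, which makes a fixed threshold for line detection more dependable.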

Along with the stereo vision, the robot features an OmniSTAR VBS-enabled GPS capable of sub-meter accuracy and a digital compass. Aside from the driver software for the cameras, the robot’s software is written completely in Java. The AI uses frame-by-frame mapping. In each frame the robot sets an objective out in the distance and moves towards it. In the next frame the robot checks whether that objective is still reachable; if it is, the robot keeps moving towards it, otherwise it changes its path. To get around an obstacle, the robot hugs it until the obstacle is behind the robot. The system is a hybrid of mapping and reactive AI.
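To make the frame-by-frame idea concrete, here is a rough sketch in Java of one decision step. It is our own simplification, not the team's code: it assumes each frame has already been reduced to a hypothetical array of 181 flags, one per degree from -90 to +90 relative to the robot, where `true` means that bearing is obstructed within the look-ahead distance. The class and method names are made up for illustration.

```java
import java.util.OptionalInt;

final class FrameByFramePlanner {
    static final int MIN_BEARING = -90; // bearing in degrees represented by index 0

    /**
     * Picks the bearing to steer for this frame.
     * If the goal bearing is clear, keep heading for the objective. Otherwise
     * take the free bearing nearest to the goal, which skirts the obstacle's edge.
     * Returns empty when every bearing is blocked (the caller should stop).
     */
    static OptionalInt chooseBearing(boolean[] blocked, int goalBearing) {
        int goalIdx = goalBearing - MIN_BEARING;
        if (goalIdx < 0 || goalIdx >= blocked.length) {
            throw new IllegalArgumentException("goal bearing outside field of view");
        }
        if (!blocked[goalIdx]) {
            return OptionalInt.of(goalBearing); // objective still reachable
        }
        // Search outwards from the goal direction, one degree at a time.
        for (int offset = 1; offset < blocked.length; offset++) {
            int lower = goalIdx - offset;
            int higher = goalIdx + offset;
            if (lower >= 0 && !blocked[lower]) {
                return OptionalInt.of(lower + MIN_BEARING);
            }
            if (higher < blocked.length && !blocked[higher]) {
                return OptionalInt.of(higher + MIN_BEARING);
            }
        }
        return OptionalInt.empty();
    }

    public static void main(String[] args) {
        boolean[] blocked = new boolean[181];
        // Hypothetical barrel covering bearings -10 to +20 degrees.
        for (int b = -10; b <= 20; b++) {
            blocked[b - MIN_BEARING] = true;
        }
        System.out.println(chooseBearing(blocked, 0));
    }
}
```

In the example, the goal straight ahead is blocked, so the sketch steers to -11 degrees, the first free bearing next to the obstacle. Re-running the same check every frame is what gives the hugging behavior: the chosen bearing stays just off the obstacle's edge until the goal direction opens up again.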

The robot’s brain is a 2.4GHz Intel Core 2 Quad running 32-bit Windows. It has a solid state drive and 4GB of RAM. One cool thing to note is that all of the sensors and microcontrollers run off the computer’s ATX power supply. Instead of using an inverter, the team found a suitable 12V ATX supply.

The rear control panel is pretty neat too. It has a touch screen, switches for all the main components, and status LEDs. Below it you can see the very back of the computer, housed in a Shuttle-style Thermaltake case.

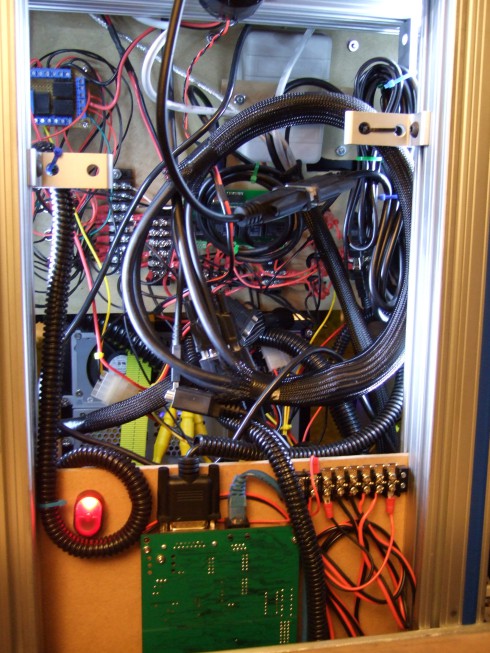

If you take off the front panel you are greeted with a view of the robot’s power and signal distribution. The green board is a Roboteq AX3500, which runs the motors and manages the PID feedback. The robot has 70 lbs of sealed lead acid batteries in its base, which allow it to run for approximately two hours. The remote E-stop (required by the rules) for this robot is actually a remote garage door opener hacked to turn the robot on and off.
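On this robot the PID loop lives inside the motor controller, so the team did not have to write one. For anyone unfamiliar with the term, the following is a generic textbook PID update in Java. It is only an illustration: it is not Roboteq's firmware, and the class name, gains, and sample values are hypothetical.

```java
final class PidController {
    private final double kp;
    private final double ki;
    private final double kd;
    private double integral;      // accumulated error over time
    private double previousError; // error from the last update
    private boolean firstUpdate = true;

    PidController(double kp, double ki, double kd) {
        this.kp = kp;
        this.ki = ki;
        this.kd = kd;
    }

    /**
     * Computes the next control output, e.g. a motor command.
     * setpoint and measured share a unit (say wheel speed); dtSeconds is the
     * time since the previous call and must be positive.
     */
    double update(double setpoint, double measured, double dtSeconds) {
        double error = setpoint - measured;
        integral += error * dtSeconds;
        // No derivative on the first call because there is no previous error yet.
        double derivative = firstUpdate ? 0.0 : (error - previousError) / dtSeconds;
        firstUpdate = false;
        previousError = error;
        return kp * error + ki * integral + kd * derivative;
    }

    public static void main(String[] args) {
        PidController pid = new PidController(2.0, 0.5, 0.1); // made-up gains
        System.out.println(pid.update(10.0, 8.0, 0.1));
        System.out.println(pid.update(10.0, 9.0, 0.1));
    }
}
```

The proportional term reacts to the current speed error, the integral term removes steady offsets such as drag on grass, and the derivative term damps overshoot. A real controller would also limit the output and the integral term.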

We’ll finish with a shot of happy* engineers working away on their robots.

*depending on the state of their robot

It’s dampers not dampeners unless you’re talking about sprinklers.

thanks, corrected. dunno what I was thinking.

“hosed in a shuttle style thermaltake case.”

Should be housed – unless you’re talking about sprinklers ;)

Nice. Except for the fact that the Intel processor described, which is similar to what this guy uses, happens to be a 64 bit one. So it should be running the 64 bit version of Windows. It is available from most OEM shops, it probably isn’t available from Staples unless you’re buying a new system and stuffing it in there.

32 bit is used because it supports all of the drivers used on the robot.

I go to WPI and my friends worked non stop 120 hour work weeks for their first year entry, Prometheus.

Check out their work (and pictures from the competition) because it’s absolutely phenomenal what these teams did:

http://picasaweb.google.com/107537254206068337248/IGVC2010#

http://www.igvc-wpi.org/

pink text with rainbow handles… something’s special about this team.

Mount a sentry gun from http://www.paintballsentry.com on one and it would be awesome. You’d have an autonomous death bot.

I work for Videre Design, and it’s always cool to see what researchers can do with our cameras!

I’m also curious what OS the robot was running. Was it ROS or Player/Stage, or some other system?

Craig, I know this robot was programmed in Java on a Windows box. The guy says the only thing not written in Java is the software that came with the camera. I met a few other guys at the competition using your cameras and they raved about them (the Princeton team especially). My team is really considering buying one; our robot runs on Linux and is programmed in Python and C.

Gerrit –

SVS (the developer toolkit for the stereo cameras) runs quite well on Linux, and doesn’t use too much processor if you use the STOC model (has a Xilinx Spartan 3 on the camera to do most of the disparity identification).

I love seeing what people do with them!