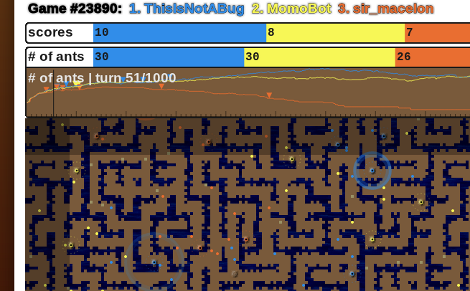

All of those orange, cyan, and yellow dots represent digital ants fighting for supremacy. This is a match to see whose AI code is better in the Google-backed programming competition The AI Challenge. Before you go on to the next story, take a hard look at giving this a try for yourself. It’s set up as a way to get more people interested in AI programming, and they claim you can be up and running in just five minutes.

Possibly the best part of the AI Challenge is the resources they provide. The starter kits offer example code as a jumping-off point in 22 different programming languages, and a quick-start tutorial will help get you thinking about the main components involved in artificial intelligence coding.

The game consists of ant hills for each team, water as an obstacle, and food collection as a goal. The winner is determined by who destroys more enemy ant hills and gathers more resources. It provides some interesting challenges, such as how to search for food and enemy ant hills and how to plot a path from one point to another. And if you’re interested in video game programming or robotics, the skills you learn in the process will be a great help later in your hacking exploits.
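Plotting a path from one point to another around water is a classic grid-search problem, and breadth-first search (BFS) is one common way to handle it. Below is a minimal Python sketch, not the starter kits’ code: the map encoding (`.` for land, `%` for water), the function name `bfs_path`, and the optional wrap-around map edges are all assumptions made for illustration.

```python
from collections import deque

# Hypothetical map encoding (an assumption, not the official format):
# '.' is passable land, '%' is water (impassable).
DIRECTIONS = {"N": (-1, 0), "E": (0, 1), "S": (1, 0), "W": (0, -1)}


def bfs_path(grid, start, goal, wrap=True):
    """Return a shortest list of moves ('N', 'E', 'S', 'W') from start to
    goal, or None if goal is unreachable. With wrap=True the map edges wrap
    around (an assumed map topology)."""
    rows, cols = len(grid), len(grid[0])
    came_from = {start: None}  # cell -> (previous cell, move taken to get here)
    queue = deque([start])
    while queue:
        cell = queue.popleft()
        if cell == goal:
            break
        r, c = cell
        for move, (dr, dc) in DIRECTIONS.items():
            nr, nc = r + dr, c + dc
            if wrap:
                nr, nc = nr % rows, nc % cols
            elif not (0 <= nr < rows and 0 <= nc < cols):
                continue  # off the edge of a non-wrapping map
            nxt = (nr, nc)
            if grid[nr][nc] == "%" or nxt in came_from:
                continue  # water, or already discovered
            came_from[nxt] = (cell, move)
            queue.append(nxt)
    if goal not in came_from:
        return None
    # Walk back from the goal to the start to recover the moves in reverse.
    moves = []
    cell = goal
    while came_from[cell] is not None:
        cell, move = came_from[cell]
        moves.append(move)
    return moves[::-1]


if __name__ == "__main__":
    demo = ["....", ".%%.", "...."]
    print(bfs_path(demo, (0, 0), (0, 3), wrap=True))  # ['W']: wraps past the left edge
```

BFS finds a shortest path whenever every step costs the same; on large maps, A* with a distance heuristic usually explores fewer cells to reach the same answer.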

This is a rather peculiar thing to see Google do, in 3D or even 2D. I’ll definitely stay tuned on this one.

I think the demand for AI development should increase substantially; it would help our world in so many ways. And for the non-believers: there will never EVER be a Skynet, lol.

I wouldn’t be so “never, ever” if I were you; technology has its dangers. If Skynet is possible, it will come to be. You can’t possibly control everybody, and if someone doesn’t create it for their own purposes, some creative person will do it just to sate their curiosity or prove that it’s possible. I really can’t see how AI could ever help our world; care to share?

While I do agree with the “never say never” philosophy, watch some of the matches on the site: we can’t even make these ants function as well as actual ants. We’ve a long way to go before I get worried.

AI programming is more than just being able to control an ant colony. AI technology is used for facial recognition in cameras, flying an aeroplane, routing containers in ports, and many more applications. Striving for the end goal produces lots of advances along the way, which help us in ways we don’t even realise.

Offshoots of “AI” research are used in many places; Wolfram Alpha is one of them.

A true AI could be beneficial in ways we can’t possibly imagine: a completely automated production system, for one.

Aye, exactly, never say never; however, I really don’t think I will see sentient machines in my lifetime. To be honest, given the materials we have to work with on Earth, and even with proposed future ideas in computer design (stacked/3D processor grids and other exotic ways of increasing computer bandwidth), I don’t think we’ll achieve a level of intelligence where a machine literally has a mind of its own and starts plotting mischievous deeds against the fleshy human world.

For one thing, I think AI would be useful in everything from detecting bad food on a factory conveyor belt to home automation, generally aiding us “humans” with our everyday tasks. Computer automation like they have in Star Trek, anyone?

Now, the NUMBER one use I could find for a properly designed, properly implemented, working AI is searching for, researching, and discovering scientific advancements: designing a faster version of itself or of other computers, for example, or perhaps even upgrading itself (I know we’ve been over this theory and it sounds disastrous). Beyond that, other scientific advancements could be made without consuming man-hours: a cure for cancer, a search for other planets with life, or some kind of space-age warp drive that’ll take us somewhere in space in a short amount of time.

THINK, PEOPLE! Sure, there are questions of how to implement it and what the legality of using it for certain tasks would be. But I think a computer that can think for itself, properly coded and set up on a fast enough framework with the hardware to support it, would be a great thing. No more computers that just “sit idle” when they’re not being used.

-punkguy, you mentioned sentience in your lifetime, and all I can think is that I’m 31 and in those few years the world has changed one hell of a lot! Quite a bit of the AI problem comes down to computing power, so surely Moore’s law must affect the possibility of more powerful AI?

If you use Google, a GPS, or… rice cookers, you’re using A.I.

A brilliant person has no more chance of creating a rogue A.I. than a brilliant person would have of stopping the internet from working. There would be large hardware requirements and tremendous dataset requirements.

Governments and corporations could (and do) use A.I. in harmful ways, such as automatic stock trading or unmanned drones in warfare. The uses people have for technology are much more dangerous than some sentient A.I. going rogue… A.I. just tries to maximize “utility”: the happiness/goals of an A.I. program are just what its creators instill in it.
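The point about “utility” can be made concrete with a tiny agent that scores each possible action by a creator-supplied utility function and picks the best one. This is a minimal Python sketch under made-up assumptions: `choose_action`, the `OUTCOMES` table, and the `greedy`/`cautious` utility functions are all hypothetical, chosen only to show that the agent’s code stays fixed while its “goals” come entirely from whoever writes the utility function.

```python
from typing import Callable, Dict, Sequence, TypeVar

Action = TypeVar("Action")
Outcome = TypeVar("Outcome")


def choose_action(
    actions: Sequence[Action],
    predict: Callable[[Action], Outcome],
    utility: Callable[[Outcome], float],
) -> Action:
    """Return the action whose predicted outcome has the highest utility.

    The agent has no goals of its own: everything it 'wants' is encoded in
    the utility function its programmer passes in.
    """
    return max(actions, key=lambda action: utility(predict(action)))


# Hypothetical example: an ant choosing a move. Each entry holds made-up
# predicted consequences of taking that move.
OUTCOMES: Dict[str, Dict[str, float]] = {
    "N": {"food": 1.0, "risk": 0.0},
    "E": {"food": 0.0, "risk": 0.0},
    "S": {"food": 2.0, "risk": 3.0},
    "W": {"food": 0.0, "risk": 1.0},
}


def greedy(outcome: Dict[str, float]) -> float:
    """Values food and ignores risk."""
    return outcome["food"]


def cautious(outcome: Dict[str, float]) -> float:
    """Values food but subtracts risk."""
    return outcome["food"] - outcome["risk"]


if __name__ == "__main__":
    moves = list(OUTCOMES)
    print(choose_action(moves, lambda m: OUTCOMES[m], greedy))    # S
    print(choose_action(moves, lambda m: OUTCOMES[m], cautious))  # N
```

With `greedy` the ant heads south toward the most food despite the risk; swap in `cautious` and the identical agent code picks north instead.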

Since there is a long wait between matches, I’m using this other site to test my ants: http://ants.fluxid.pl.

The official site runs your bot on its own servers, so it is CPU-constrained. On this other site, you run your bot on your own machine and send/receive its output/input over the network.

It’s a good exercise and may help advance artificial intelligence research (many hands make light work), but I’m not worried about Skynet. They’ve already built a computer with the computational power of a cat’s brain, but it still hasn’t even conceptualized licking its balls, so we have a ways to go before Skynet.

Hmmm… AI, Skynet, T3: not gonna happen in our lifetime. Maybe in about 200 years (if not 2000), if civilization keeps going on its current track. As far as I am concerned, AI is still primitive; it’s the caveman of our society. When I was a child I daydreamed and built computers out of cardboard boxes (back when Win 95 was a hatchling and Sympatico ruled the ISPs). Now I still daydream, but of robotics instead of computers. AI will never be as good as the real thing, but it will be close. The technology is out there; it just hasn’t been found yet.

Discussing AI always brings the singularists and other boobs out of the woodwork.

How can we design an artificial intelligence when we don’t even know what intelligence really is, or whether it exists in the first place?

To paraphrase Alan Turing: a sufficiently complex program is indistinguishable from intelligence, because we have no way of testing whether it really is intelligent. Conversely, we don’t even know whether we are intelligent by any definition of the word, because we are so complex that we don’t yet understand how we work.

This sounds like a lot of fun! When I get time I’ll definitely give it a spin; even if I’m not much good at programming, it might be a fun challenge to practice on!

As Moore’s law predicts, transistors have kept getting exponentially smaller (although the pace is currently slowing), and computing power has kept increasing in response. Some futurists believe that by 2029 desktop computers will have the same computational power as the human brain, and I personally believe that’s within the realm of possibility.
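The exponential growth behind predictions like this can be written down directly. A minimal sketch, assuming the commonly quoted doubling period of about two years (an assumption; Moore’s law is an empirical trend, and the observed period has varied), where $N_0$ is the transistor count at a reference year $t_0$:

$$
N(t) = N_0 \cdot 2^{(t - t_0)/T}, \qquad T \approx 2\ \text{years}
$$

Over an illustrative 18-year span this gives $2^{18/2} = 2^9 = 512$ times as many transistors. Raw transistor count, of course, is not the same thing as intelligence.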

However, computing power != intelligence. Our brains are arranged in highly complex structures with even more complex interactions, most of which we can’t even begin to explain at this point.

Since our ultimate goal in AI is to make an intelligence on or above the level of humans, it seems absurd to think we could do so without first fully understanding how we work and what actually makes us “intelligent”, which might not even be possible.

Einstein is often quoted as saying, “The true sign of intelligence is not knowledge but imagination.” Socrates is credited with, “I know that I am intelligent, because I know that I know nothing.”

Real AI is one of the most difficult problems of all. We have plenty of computing power already, but we are unable to simulate a simple nematode worm with only about 300 neurons.

Why? Because we are in the dark about the basic concepts of AI.

Real AI is self-emergent and self-evolving. Coding complex behaviours directly is not AI but snake oil at best, and simply cheating at worst.

The true problem is this: if it were possible to create a real AI (by itself this question makes no sense, but let’s assume it does), would we be able to see it? As far as we know, there could be a real AI out there already. The fact is that I can only conceive of my own intelligence, of myself thinking. I can’t even know whether you are damned bots or whether you really exist and therefore think as I do. As far as I know, you could be this damn “real AI” we are seeking, but I can’t know it. The only thing I can aim for is to understand how MY brain thinks, and I can only assume you are human. The only way to be sure that you actually think is to be you.

What I think is that there is no such thing as a “real AI”, because what we use to judge the feasibility of said real AI is our own intelligence, and who says we are really intelligent? There could be other kinds of intelligence, not only ours. The question is: can we conceive of a form of intelligence that is not like ours, one based on things completely different from our brain? Would we accept it as an “intelligence”? Because if we don’t, there could be thousands of real AIs out there (your microwave could have one), but as long as we don’t accept them as forms of intelligence, we won’t see them. They would exist, as real as we assumed, but since we won’t accept their existence, for us it’s as if they don’t, even though we assumed they do.

You make a very interesting point. I think you might be interested in the writings of the French philosopher René Descartes, who pondered the same questions a few hundred years ago.

I think the real tipping point, the one that matters, will be when (if ever) we manage to create an AI that can create another AI. At this point it is theorized that a feedback loop will occur and the AIs will improve as fast as their computing power permits. Then little doubt should remain.

There are a lot of people in this post who need to know the difference between a VI and an AI.

Yes. VI is an editor. AI is a lame movie. Care to elaborate further? :)