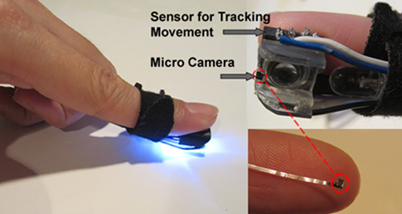

What if we could do away with mice and just wear a thimble as a control interface? That’s the concept behind Magic Finger. It adds a movement-tracking sensor and an RGB camera to your fingertip.

Touch screens are great, but what if you want to use any surface as an input? Then you grab the simplest of today’s standard inputs: a computer mouse. But take that one step further and think of the possibilities of using the mouse as a graphic input device in addition to a positional sensor. This concept allows Magic Finger to distinguish between many different materials. It knows the difference between your desk and a piece of paper. Furthermore, it opens the door to data transfer through a code scheme they call a micro matrix. It’s like a super small QR code which is read by the camera in the device.

The concept video found after the break shows off a lot of cool tricks used by the device. Our favorite is the tablet PC controlled by moving your finger on the back side of the device, instead of interrupting your line of sight and leaving fingerprints by touching the screen.

Wow that is the tiniest camera I’ve ever seen. Where can I get one? :)

Apparently it’s called the NanEye:

http://www.awaiba.com/en/products/medical-image-sensors/

Cool, but does it work without being plugged into a PC or tablet? Half the time it didn’t look like he had anything with him. Where was he hiding it?

This would be really awesome with some augmented reality glasses.

In essence they extracted the sensor from an optical mouse but pretty cool!

Came to say the same thing, this is just an extension of Mouse Cam

and:

reading a PAPER magazine? storing for LATER?

What is this ? year 1998?

also it looks like you invented micro lens with infinite focus!

I’m not sure why it needs to be saved for later. Shouldn’t it be saved automatically?

Now that is just cool!

My favorite is using morse code for near field communications. Just a couple tin cans and some string and ….

Oh great now I’m gonna be paranoid about tiny cameras all day.

I wonder how his wife feels about him transferring data with that floozy

Now make it work for more than a week in a world full of grease, dust and lint.

Fun idea, but impractical since you’re probably spending your time cleaning the lens.

Make the lens out of something hard to scratch, like sapphire, with one of the new hydrophobic coatings, and just give it a scrub on your shirtsleeve if it gets grubby.

What if you linked the camera image to the display image? I.e. if you put your finger on the screen, the software will match the camera image to what it knows is on the screen and hence determine where on the screen your finger is. Combine that with the tactile sensor and you could turn any screen into a touch screen.
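The matching idea in this comment can be sketched as a toy template search. This is only an illustration under loud assumptions: the function name `locate_patch` is hypothetical, both images are tiny grayscale grids, and the camera patch is assumed to be an exact, unrotated, unscaled crop of the screen. A real fingertip camera would not satisfy any of that.

```python
def locate_patch(screen, patch):
    """Return (row, col) of the top-left corner where patch best matches screen.

    Both arguments are lists of rows of grayscale ints. This is a brute-force
    sum-of-squared-differences search: fine for a toy, far too slow for video.
    """
    sh, sw = len(screen), len(screen[0])
    ph, pw = len(patch), len(patch[0])
    best_pos, best_cost = None, None
    for r in range(sh - ph + 1):
        for c in range(sw - pw + 1):
            cost = sum(
                (screen[r + i][c + j] - patch[i][j]) ** 2
                for i in range(ph)
                for j in range(pw)
            )
            if best_cost is None or cost < best_cost:
                best_pos, best_cost = (r, c), cost
    return best_pos


# Synthetic 8x8 "screen" in which every pixel value is distinct.
screen = [[r * 8 + c for c in range(8)] for r in range(8)]
# Pretend the fingertip camera sees the 3x3 region starting at row 2, col 4.
patch = [row[4:7] for row in screen[2:5]]
print(locate_patch(screen, patch))
```

The search only works here because the synthetic screen has no repeated content; on a real display, large flat areas of one colour would match everywhere equally well.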

That is just what the now obsolete light pen does. Except that it was directly connected to the display, so pattern recognition wasn’t needed.

ET… phone… home…

This is a pretty cool device. Their presentation wasn’t all that great.

If they can get this thing down to being wireless and small then that would be the key.

This mixed with the google glasses would make for an absolutely awesome combo.

Exactly what I was thinking. Or the RasPi and MyVu setup.

Minority report, here we come!

sorry I own the patent for this, thanks for bringing it to my attention. time for the ole cease and desist.

that’s interesting… IT’S called reverse engineering… i don’t think they’re stealing your Patent IDEA… DuHhhhhHHh

I’ll stick with the wireless ‘pen’ version thanks.

Interesting… the video was a little awkward but probably less so than if I had been the subject. It seems like they took some of the inner workings of an optical mouse and tethered the sensors to the guts…

In reality it is not a bad idea, but at this infant state it seems as though they have a little way to go before it is more useable.

No, they took the old (2008 or older) Mouse Cam concept (google it) and made a stupid “this could be possible if we got grant money and actually did some real research” video

I wonder why there aren’t touchpads on the backs of tablets and phones? I have four fingers going to waste! Time for eight finger gestures!

You mean like the PS Vita? Or many HTPC remotes? Or iPhone cases with a touchpad?

A device like this could, in essence, make any display a “touch” display, and could be the bridge from desktops to tablets for UIs like Windows 8. I like the concept, but am concerned about the ergonomics.

Is it just me or is the prototype primarily just the guts of an optical mouse strapped on with velcro? I see quite a bit of clever filming to hide the wires on his forearm and the USB cables to the external devices.

I totally get this is a proof of concept type prototype and I think the addition of the camera is brilliant, but why hack a corded mouse when wireless mice are so cheap?

How does one go about getting a NanEye camera?

So many possibilities. If they increase resolution these could eventually lead to better active camouflage, 360 up and down views around vehicles (or people!), etc…

there was no NanEye in this video

Thanks for commenting on our project!

We got our NanEye camera directly from Awaiba. It is indeed used in the video; I guess it’s easy to miss because it’s so small! We wouldn’t be able to recognize the textures or Data Matrix codes with just the optical mouse motion sensor, since its resolution is too low.

We were aware of previous mousecam projects. Combining the low-resolution, high-speed mouse sensor with the high-resolution, lower-speed NanEye camera gave us the best of both worlds and opens up new possibilities. If you are interested, you can read the scientific research paper; the PDF is here:

http://www.autodeskresearch.com/publications/magicfinger

This could be used for perverted purposes.

Get a leather glove (they always look cool) and make this system wireless with Bluetooth and a battery. Also, I can see this being used by blind people to read regular books, a lot cheaper than Braille.

Not quite a leather glove – but a wrist band with multiple “fingertips” (plug ins, from 1 to 5). Ribbon wiring goes across the back of fingers.

If the wrist band is the cuff of a shirt or jacket, you are well on the way to a wearable computer.

Damn freaky tiny camera…

As far as I know, the sensor in an optical mouse is a very low-resolution camera (something like 16×16 px) that detects small changes in the surface pattern.

Also, this tiny camera mouse thingy seems a bit of overkill to me. Just imagine how much data the computer has to process from the camera in order to move your pointer around. At 256×256 (64k) RGB colour pixels, an image should occupy around 590KB in memory (raw data), so 30FPS (an average frame rate for a good moving picture) gives more than 17MB per second of imagery to be processed, and that is just to move the pointer around. Add the positional sensor mentioned here and you’ve got more data. I don’t know much about positional sensors, but I’d make a rough guess of 20MB/s for the whole thing.
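As a rough check on the estimate above, here is a minimal sketch of the raw-data arithmetic. The helper name `raw_video_rate` is hypothetical, the frames are assumed to be uncompressed, and the bytes-per-pixel value is an assumption rather than something stated in the thread. With 3 bytes per pixel (24-bit RGB), a 256×256 frame comes to 196,608 bytes, or roughly 5.9MB per second at 30FPS. The 590KB and 17MB figures correspond to 9 bytes per pixel.

```python
def raw_video_rate(width, height, bytes_per_pixel, fps):
    """Return (bytes per frame, bytes per second) for uncompressed video."""
    frame_bytes = width * height * bytes_per_pixel
    return frame_bytes, frame_bytes * fps


# Assumption: 24-bit RGB, i.e. 3 bytes per pixel.
rgb_frame, rgb_rate = raw_video_rate(256, 256, 3, 30)
print(rgb_frame, rgb_rate)

# The comment's figures are reproduced by 9 bytes per pixel instead.
big_frame, big_rate = raw_video_rate(256, 256, 9, 30)
print(big_frame, big_rate)
```

Either way, this counts only the camera stream, and only if every frame is shipped to the host unprocessed.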

I’m not saying that it’s not an impressive and cool hack, because it is. But thanks, I’m going to stick with my ol’n’trusty (and not spy) mouse.

Optical mouse sensors do all the processing on chip, and they operate at WAY more than 30 fps. For example, the very old UIC1001 sensor scans at 20K frames per second.

Dumping raw pixel data is a side effect.

The trick to successful vision apps is to not store video data at all :)

look at http://chipsight.com/

Run the data through a circular buffer and “pick out” blocks of pixels?
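One way to picture that suggestion is a ring buffer that holds only the last few scanlines and hands out small blocks from them, so a full frame is never stored. This is a minimal sketch under assumptions: the class name `RowRingBuffer`, its methods, and the row-by-row streaming model are all hypothetical and are not taken from any product mentioned in the thread.

```python
from collections import deque


class RowRingBuffer:
    """Keep only the most recent `depth` scanlines of a streaming image."""

    def __init__(self, depth):
        # A deque with maxlen drops the oldest row automatically.
        self.rows = deque(maxlen=depth)

    def push(self, row):
        self.rows.append(list(row))

    def block(self, col, width):
        """Return a block `depth` rows tall and `width` pixels wide,
        or None until enough rows have arrived."""
        if len(self.rows) < self.rows.maxlen:
            return None
        return [row[col:col + width] for row in self.rows]


buf = RowRingBuffer(depth=3)
for r in range(5):
    # Fake scanline: pixel value encodes (row, column) as r * 10 + c.
    buf.push([r * 10 + c for c in range(6)])

# Only rows 2, 3 and 4 are still in the buffer at this point.
print(buf.block(col=2, width=2))
```

Memory use is fixed at `depth` rows regardless of how many scanlines stream through, which is the appeal of not storing video at all.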