Fans of the AMC show Breaking Bad will remember the Original Gangsta [Hector Salamanca]. When first introduced to the story he communicates by ringing a bell. But after being moved to a nursing home he communicates by spelling out messages with the assistance of a nurse who holds up a card with columns and rows of letters. This hack automates that task, trading the human assistant for a blink-based input system.

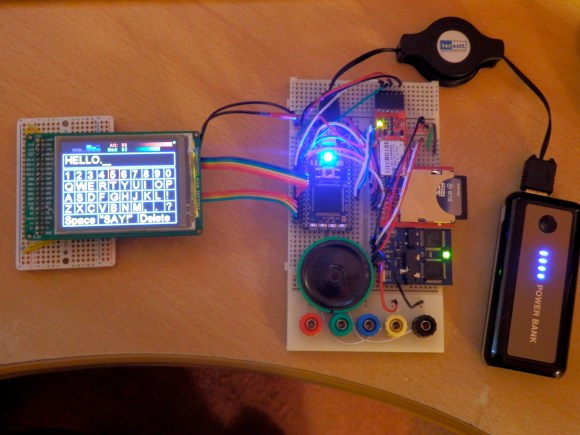

[Bob Stone] calls the project BlinkTalk. The user wears a Neurosky Mindwave Mobile headset. This measures brainwaves using EEG. He connects the headset to an mBed microcontroller using a BlueSMiRF Bluetooth board. The microcontroller processes the EEG data to establish when the user blinks their eyes.

The LCD screen first scrolls down each row of the displayed letters and numbers. When the appropriate row is highlighted a blink will start scrolling through the columns until a second blink selects the appropriate character. Once the message has been spelled out the “SAY!” menu item causes the Emic2 module to turn the text into speech.
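The row-then-column scanning described above can be sketched as a tiny state machine. This is only an illustration, not [Bob Stone]'s mbed code: the `GRID` layout, the `ScanSelector` class and its `tick`/`blink` methods are all hypothetical names, and it assumes a timer calls `tick()` at the scroll rate while the blink detector calls `blink()`.

```python
# Hypothetical layout; the real BlinkTalk grid is not given in the post.
GRID = [
    list("QWERTYUIOP"),
    list("ASDFGHJKL "),
    list("ZXCVBNM.,?"),
]


class ScanSelector:
    """Row/column scanning: timer ticks move the highlight, blinks select."""

    def __init__(self, grid):
        self.grid = grid
        self.row = 0
        self.col = None  # None means we are still scanning rows

    def tick(self):
        """Advance the highlight one step (called by a periodic timer)."""
        if self.col is None:
            self.row = (self.row + 1) % len(self.grid)
        else:
            self.col = (self.col + 1) % len(self.grid[self.row])

    def blink(self):
        """First blink locks the row; second blink returns the character."""
        if self.col is None:
            self.col = 0
            return None
        ch = self.grid[self.row][self.col]
        self.row, self.col = 0, None  # start over for the next character
        return ch
```

With this grid, one tick, a blink, three more ticks and a second blink would pick the fourth letter of the second row.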

If you think you could build something like this to help the disabled, you should check out thecontrollerproject.com where builders are connected with people in need.

So many possibilities with this. Amazing work!

Would be cool to see a branch project using a camera and eye tracking to select the letters.

Great stuff!

I think the Neurosky headset generates random signals. I tested mine with a signal generator and a resistor as a skin simulator, and found no correlation at all between the generator signal and the EEG output. I hope Bob’s Neurosky headset does work correctly.

There are three levels of signal coming from Neurosky’s ThinkGear chip. The top-level, most highly processed signal is what they’re calling eSense, which it sends in a packet once a second, calculated on-chip using a proprietary closed algorithm, the details of which they’re not sharing. This gives you two values called ‘Attention’ and ‘Meditation’, plus some other processed stuff like quality-of-signal. With mine, if I relax and breathe deep and easy, meditation does on the whole go up, and if I concentrate, attention does for the most part go up. You can see in the video, where I’m trying to film, trying to spell out a word without messing up, and feeling a bit self-conscious about it all, that the recorded attention level seems consistently higher than meditation. Although I’m receiving and visualising this data at the top of the screen, it’s not part of the blink detection at all.

The next level down is a set of signals recording different brain wave ‘frequencies’ as calculated on-chip, presumably by a fast Fourier transform (FFT) of the raw power signal, possibly cleaned up a bit via the quality-of-signal analysis. Neurosky list some blurb about what each of these (gamma, theta, etc.) means, but they do look all over the place to me. I graph them (top left, in blue) just because I can and because this program’s an evolution of an earlier one that was a full-screen EEG data visualiser, but I don’t trust them to tell me much that’s meaningful (I looked up one of the most active ‘brain frequencies’ on Wikipedia, which told me that one was most associated with deep sleep…).

The least-processed and most-frequent (512 samples/second) data is a raw wave power reading from the contact. Everything else that comes from the headset has its origins in this raw wave. The evidence that it’s not merely a random number generator comes most clearly when you blink: the electricity involved in the muscle twitch close to the contact completely swamps the feeble signal from the brainwaves and creates a characteristic pattern in the wave signal, which I can easily detect with simple heuristics. There will be a big spike high, followed fairly quickly by a big spike low, followed by a return to normal, pretty reliably, every time. So then it’s just a case of playing with the parameters of thresholds and timings to minimise the false positives. If I talk, screw up my forehead or eyes, or do other facial muscle stuff that might get read by the contact, I’ll still get false positives, but otherwise, keeping a relatively blank face and a normal, momentary blink, it’s quite reliable with no false negatives. But it is then using an EEG headset to read a muscle signal, so I don’t pretend it’s brain-operated in any way.
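For anyone wanting to try the spike-high-then-spike-low heuristic, here is a rough sketch of one way it might look. It is not the code running on the mbed: the function name `detect_blinks` and every threshold and timing value are made-up placeholders that would need tuning against real 512 samples/second data, and a simple refractory window stands in for the "return to normal" check.

```python
from typing import Iterable, List


def detect_blinks(
    samples: Iterable[int],
    high: int = 400,        # assumed threshold for the positive spike
    low: int = -400,        # assumed threshold for the negative spike
    max_gap: int = 100,     # max samples allowed between high and low spikes
    refractory: int = 150,  # samples ignored after a blink (settling time)
) -> List[int]:
    """Return the sample indices at which a high-then-low spike pair completes."""
    blinks = []
    high_at = None      # index of the latest high spike still waiting for a low
    blocked_until = 0   # ignore samples before this index
    for i, value in enumerate(samples):
        if i < blocked_until:
            continue
        # Forget a high spike that was not followed by a low one in time.
        if high_at is not None and i - high_at > max_gap:
            high_at = None
        if value >= high:
            high_at = i
        elif value <= low and high_at is not None:
            blinks.append(i)
            high_at = None
            blocked_until = i + refractory
    return blinks
```

Raising `high`, lowering `low`, or shrinking `max_gap` trades fewer false positives for a greater chance of missing a gentle blink, which is the tuning game described above.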

I have also used the same blink-detect capability in conjunction with an MPU-9150 9dof sensor stick on an Arduino Fio taped to the headset, to slave a motion-turret to my head movements and fire a laser in the direction I’m looking with a blink!

This is the beginning of sharks with frickin’ laser beams attached to their heads.

I had to abandon /that/ project when I discovered sharks don’t blink. http://www.sdnhm.org/archive/kids/sharks/faq.html#blink

Thanks for your extensive reply, Bob. Maybe I haven’t tried hard enough, and I gave up too early when I saw attention and meditation going up and down while the two electrodes were shorted with a resistor. I actually owned a PLX Xwave, so that may be different.

What about sensing if left or right eye is closed, and using that for Morse-coding the letters (left for dash, right for dot, short blink of both eyes for symbol end, or so)? The encoding has symbol length matched to frequency of use in English, is easy to use, special characters can be escaped with sequences closing eyes in certain order without opening the other one, and so on. Would have the great advantage of not having to rely on visual feedback that takes time.
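A quick sketch of how that left/right Morse idea might decode, purely as an illustration. It assumes some sensor can tell the eyes apart (nothing in the post says the single-contact headset can), the event letters `L`, `R` and `B` and the function `decode_blinks` are invented for this example, and only a handful of Morse letters are included.

```python
# Partial International Morse table; a real one would cover the full alphabet.
MORSE = {
    ".": "E", "-": "T", "..": "I", ".-": "A", "-.": "N",
    "--": "M", "...": "S", "---": "O", "....": "H",
}


def decode_blinks(events: str) -> str:
    """Decode a string of events: R = dot, L = dash, B = both eyes (end of symbol)."""
    out = []
    symbol = ""
    for ev in events:
        if ev == "R":
            symbol += "."
        elif ev == "L":
            symbol += "-"
        elif ev == "B":
            if symbol:
                # Unknown sequences become "?" rather than raising.
                out.append(MORSE.get(symbol, "?"))
            symbol = ""
        else:
            raise ValueError(f"unknown event: {ev!r}")
    return "".join(out)  # an unterminated trailing symbol is dropped
```

For example, `decode_blinks("RRRBLLLBRRRB")` spells out SOS.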

I don’t know about other people, but I have difficulty blinking just my dominant eye without the other one also blinking.

That’s pretty cool. Could speed it up a lot with a binary search though.

Quality hack bro

If this used an adjusted layout instead of QWERTY, it could be faster.

I thought the same…

Maybe a binary tree based on character frequency would be quicker. About the only commands needed would be start, left, right and stop.

A larger screen (or smaller font) would allow more choices, including common whole words. A word dictionary would permit smartphone-like auto-completion, and auto-correction would be good too. Recognising variations of blink, like a mouse-style double-blink, or triple-blink, or long-blink would give a few more options to the UI (assigning a cancel or undo option to triple-blink for instance). Could have multiple pages of choices, navigable by hyperlinking from other choices (like how your smartphone keyboard can select a page for numbers or symbols, for instance). Moving choices around based on usage might perhaps use Huffman encoding http://en.wikipedia.org/wiki/Huffman_coding to calculate shortest sequences for most frequently used combos, although once an optimised layout’s been chosen, best to leave it in place long enough to learn it rather than have it move about dynamically of its own accord.
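To make the Huffman suggestion concrete, here is a minimal sketch that turns character frequencies into left/right selection paths, so that common letters need fewer choices. The function name `huffman_codes`, the `L`/`R` path notation and the sample weights are illustrative assumptions; the weights are only rough English letter percentages.

```python
import heapq
from typing import Dict


def huffman_codes(freqs: Dict[str, float]) -> Dict[str, str]:
    """Build a Huffman tree and return an L/R path for each symbol."""
    # Heap entries are (weight, tiebreak, {symbol: path_so_far}); the unique
    # tiebreak integer means the dicts themselves are never compared.
    heap = [(w, i, {s: ""}) for i, (s, w) in enumerate(sorted(freqs.items()))]
    heapq.heapify(heap)
    if len(heap) == 1:
        return {s: "L" for s in heap[0][2]}
    counter = len(heap)
    while len(heap) > 1:
        w1, _, left = heapq.heappop(heap)   # two lightest subtrees
        w2, _, right = heapq.heappop(heap)
        merged = {s: "L" + path for s, path in left.items()}
        merged.update({s: "R" + path for s, path in right.items()})
        heapq.heappush(heap, (w1 + w2, counter, merged))
        counter += 1
    return heap[0][2]


# Rough, illustrative weights only.
SAMPLE = {"E": 12.7, "T": 9.1, "A": 8.2, "Q": 0.1, "Z": 0.07}
CODES = huffman_codes(SAMPLE)
```

In this sample, `E` ends up one choice from the root while `Q` and `Z` sit at the deepest level, which is the trade-off a frequency-based layout would be making.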

It’s in Breaking Bad, not The Walking Dead…

:p

Very, very cool. I would add multiple layers to the display, say 10 or so, with 30+ words on each layer and automatic sentence creation, to build complete sentences much faster. Also give it a basic AI program for sentence creation, so it can display the group of words that best fits next in the sentence, plus a remember option to save the user’s most-used full sentences and new words such as names. The EEG is a little much, not to mention that it has to be tuned for each person since we don’t all process information the same way. I would build a pair of glasses and use a simple photodiode to detect the blink: eyes reflect a good amount of light and lids do not. Speech-to-text could also be incorporated with the basic AI to help choose better response words or sentences, and if the person has hearing issues it could display what people say to them. So: a pair of glasses with a display, photodiode and microphone, and why not add audio out for earbuds or whatever, with a wireless connection to a multicore smartphone. Think Google Glass for the impaired. With this, the scroll-select could be incorporated into more complete control of the phone, say for music, videos or the web, or even of things around them, all by just blinking.

For my senior design project, my partner and I used an Emotiv Epoc headset connected to a custom UI (a .NET application) that separated options into the four sides of the screen. Thinking in a direction would select that side of the screen’s options and then split those options across the four sides of the screen. That would repeat until you selected a side with one remaining option (it’s a lot easier to see than explain). We made a few different “apps” like a keyboard (with text-to-speech) and something that would control Chrome. The end result wasn’t quite complete due to limitations with the headset software and miscellaneous bugs. The thought reading the headset did was really difficult to do consistently. My partner did the demo because I could barely get one thought recognized, let alone four.

Back to the post: I believe we found that similar commercial devices (perhaps not blink-driven, but based on focus) already existed, but the price was rather high. I think this project would definitely have those beat. The blink recognition for the Epoc headset was super consistent compared to any thought recognition. Anyhow, cool project!

Instead of QWERTY http://en.wikipedia.org/wiki/Letter_frequency http://oxforddictionaries.com/words/what-is-the-frequency-of-the-letters-of-the-alphabet-in-english

Am I the only one who thought about Hector Salamanca’s DEA interview here?

*Ding-ding*