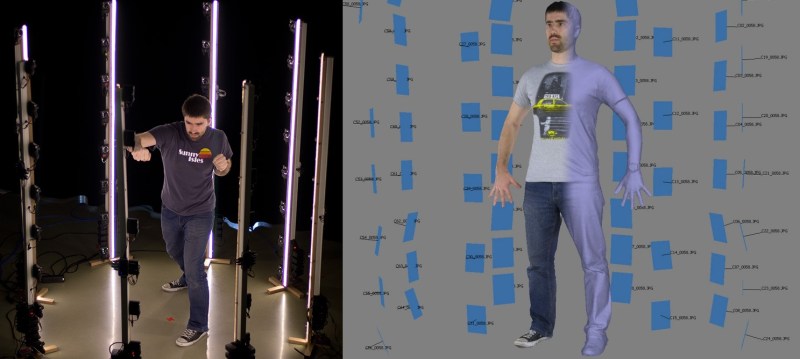

Looking for a professional 3D scanning setup for all your animation or simulation needs? With this impressive 3D scanning setup from the folks over at [Artanim], you’ll be doing Matrix limbos in no time! They’ve taken 64 Canon PowerShot A1400 cameras and mounted them on eight portable “scanning poles” set up in a circle to take 3D images of, well, pretty much anything you can fit between them!

Not wanting to charge 64 sets of batteries every time they used the scanner or to pay for 64 official power adapters, they came up with a crafty solution: wooden batteries. Well, actually, wooden power adapters to be specific. This allows them to wire up all the cameras directly to a DC power supply, instead of 64 wall warts.
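Running every camera from one bench supply means sizing that supply for the combined draw. The helper below is a back-of-the-envelope sketch: the 3.0 V figure assumes the nominal voltage of the two AA cells each wooden block stands in for, and the 0.5 A per camera is a hypothetical placeholder, not a Canon specification, so measure your own cameras before relying on it.

```python
def supply_requirements(n_cameras, amps_per_camera, volts, margin=1.25):
    """Return (total_amps, total_watts) a shared DC supply should be rated for.

    amps_per_camera is a HYPOTHETICAL peak draw; measure the real value.
    margin adds headroom for all cameras powering up and extending lenses at once.
    """
    total_amps = n_cameras * amps_per_camera * margin
    return total_amps, total_amps * volts

amps, watts = supply_requirements(n_cameras=64, amps_per_camera=0.5, volts=3.0)
print(f"{amps:.1f} A, {watts:.0f} W")  # 40.0 A, 120 W
```

At such a low voltage, even modest per-camera currents add up to tens of amps, so the wiring along each pole has to be sized for that current as well.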

To capture the images they used the Canon Hack Development Kit (CHDK), which allowed them to control the cameras with custom scripts. 3D processing is done in Agisoft PhotoScan, which needs only a few tweaks to produce a good model. Check out [Artanim’s] website for some excellent examples of 3D-scanned people.

Oh yeah, so that title might have been a bit of a misnomer. It is affordable, but only compared to industry setups. The 64 cameras used in this project will most definitely set you back a pretty penny. A more affordable solution is probably the 39 Raspberry Pi Camera Scanner…

Still, pretty awesome.

$4,000 worth of digital cameras is pretty pricey.

Pocket change, right? I think a lot of cheap 1080p HD webcams might be a little better.

1920×1080 is only 2 megapixels, and the image sensors and optics in webcams are terrible compared to ‘real’ cameras.
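For reference, here is the arithmetic behind the “2 megapixels” figure, plus the square-root relationship between pixel count and detail along each axis. The 16 MP comparison value is just an illustrative number, not the spec of any camera in this build, and the comparison assumes both sensors cover the same field of view.

```python
import math

def megapixels(width, height):
    """Pixel count in millions (1 MP = 1,000,000 pixels)."""
    return width * height / 1_000_000

def linear_detail_ratio(mp_a, mp_b):
    """How many times finer sensor A samples each image axis than sensor B.

    Detail along one axis scales with the square root of the pixel count
    (assumes the same aspect ratio and field of view for both sensors).
    """
    return math.sqrt(mp_a / mp_b)

webcam = megapixels(1920, 1080)
print(f"1080p frame: {webcam:.2f} MP")  # 1080p frame: 2.07 MP
print(f"16 MP vs 1080p: {linear_detail_ratio(16, webcam):.2f}x per axis")
```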

If you want to save money on a build like this, shop around for used point-and-shoot cameras. “Broken” ones on eBay look particularly inexpensive. (Who cares if the LCD doesn’t work or the case is damaged, as long as it still takes pictures and boots CHDK!) It will take a lot longer to gather 64 of them this way, but if they only cost $25 apiece you’ve knocked the parts cost down by $2400.
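The $2400 figure checks out against the roughly $4,000 quoted above for 64 new cameras (about $62.50 each): buying all 64 used at $25 apiece costs $1,600 instead. A quick check:

```python
def used_camera_savings(new_total, n_cameras, used_price_each):
    """Parts-cost reduction from buying every camera used at one flat price."""
    return new_total - n_cameras * used_price_each

print(used_camera_savings(4000, 64, 25))  # 2400
print(4000 / 64)                          # 62.5, the implied new price per camera
```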

Webcams are a poor choice for this application for a number of reasons. First, they don’t have real shutters, so the still images you take are more like frames grabbed from a video than pictures from a camera. If you’ve ever seen a flash go off in a video, you’ll notice it wrecks the exposure for a moment, and that flash frame is exactly the one you want to capture. Second, picture quality: 1080p is great for video but terrible for photos. The reconstruction algorithms use pixel pattern matching both to work out the cameras’ relative positions and to rebuild the geometry, so the more detail they can see, the better they work. Cheap point-and-shoot cameras blow any webcam out of the water when it comes to taking a single picture. Third, control: while it may be possible to grab 64 frames from webcams simultaneously, it’s probably quite tricky. With real cameras, all you need is a way to fire them all at once and a way to handle downloading afterwards. I’m pretty sure CHDK has triggering built in, but I haven’t spent much time with it yet.
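The “fire them all at once, download afterwards” split can be sketched in Python with a `threading.Barrier`, which holds every worker until all of them are ready and then releases them together. `trigger` is a hypothetical stand-in for whatever actually fires a camera; it is not a CHDK or Canon API. Software release on a PC is only as tight as the OS scheduler allows, and each camera adds its own shutter lag, so treat this as an illustration of the control flow rather than a real sync solution.

```python
import threading
import time

def fire_all(cameras, trigger):
    """Release every camera's trigger call at (nearly) the same instant.

    `trigger(camera)` is a HYPOTHETICAL callable standing in for whatever
    actually fires a camera. Returns {camera: perf_counter time at release}.
    """
    barrier = threading.Barrier(len(cameras))
    fired = {}
    lock = threading.Lock()

    def worker(cam):
        barrier.wait()              # block until every thread is ready
        t = time.perf_counter()
        trigger(cam)
        with lock:
            fired[cam] = t

    threads = [threading.Thread(target=worker, args=(c,)) for c in cameras]
    for th in threads:
        th.start()
    for th in threads:
        th.join()                   # images would be downloaded after this point
    return fired

if __name__ == "__main__":
    cams = [f"pole{p}_cam{c}" for p in range(8) for c in range(8)]
    times = fire_all(cams, trigger=lambda cam: None)  # no-op stand-in
    spread_ms = (max(times.values()) - min(times.values())) * 1000
    print(f"{len(times)} triggers, spread {spread_ms:.2f} ms")
```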

All in all, props to the guys who did it. Quite a nice hack at a reasonable price for the task. [full disclaimer: I built a pro version for my job recently.]

What would be the tradeoff of mixing camera types? Could you get better quality from a cheap build by using a few nice cameras and a bunch of cheap ones?

While it’s definitely a possibility, I haven’t experimented much with mixing camera models. The problems I think of first are timing (slightly different reaction times due to chip differences), white balance, and zoom differences (which aren’t inherently bad, but don’t help the algorithms). Again, it’s possible, but probably not worth the price/quality/hassle tradeoff.
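Of the mismatches listed, white balance can at least be roughly normalized in software before reconstruction. Below is a minimal sketch using the gray-world assumption (the scene averages out to neutral grey), a common simple technique and not necessarily what Agisoft or any particular rig does. Images are plain lists of RGB tuples to keep it dependency-free, and the “warm” sample is hypothetical. It is a global correction only and does nothing for timing or zoom differences.

```python
def gray_world_gains(pixels):
    """Per-channel gains that pull the image's average colour to neutral grey.

    `pixels` is a list of (r, g, b) tuples with 0-255 values.
    """
    n = len(pixels)
    means = [sum(p[ch] for p in pixels) / n for ch in range(3)]
    grey = sum(means) / 3
    # Leave a completely dark channel alone to avoid dividing by zero.
    return [grey / m if m else 1.0 for m in means]

def apply_gains(pixels, gains):
    """Scale each channel by its gain, rounding and clipping to 255."""
    return [tuple(min(255, round(v * g)) for v, g in zip(p, gains)) for p in pixels]

# Hypothetical reddish cast, as one camera model might render a grey target.
warm = [(200, 100, 100), (100, 50, 50)]
print(apply_gains(warm, gray_world_gains(warm)))  # [(133, 133, 133), (67, 67, 67)]
```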

Other than timing errors, everything else would actually help SfM (structure-from-motion) algorithms pick up more detail.

Easy there, photophile. Go ahead and crawl back to Instagram to complain about the filters.

I took 640×480 px video with my Samsung ES30 and it still gave good results. I’m planning to try 1080p video next.

So let me get this straight, they will spring for 64 cameras but not 64 wall warts?

It takes rechargeable batteries. Even in my studio I can recharge 24 AA batteries in 2 hours with 2 chargers.

Not to mention the camera will charge via USB.

Would you really want to replace 128 batteries every time?
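Taking the charger figures from the comment above (24 AA cells per 2 hours) and two AA cells per camera, recharging all 128 cells takes six back-to-back batches. A quick check, assuming every batch runs its full length and the swaps take no time:

```python
import math

def charging_hours(n_cells, cells_per_batch, hours_per_batch):
    """Wall-clock hours to recharge n cells when the chargers run in full batches."""
    return math.ceil(n_cells / cells_per_batch) * hours_per_batch

cells = 64 * 2                        # two AA cells per camera
print(charging_hours(cells, 24, 2))   # 12
```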

Looks like that camera is a bargain and goes for only $60 to $70 USD. Meanwhile, a power adapter for it seems to cost at least $12. And running these on batteries just seems like a logistical nightmare if they’re actually trying to get any shooting done.

Yeah, the DC coupler (battery stand-in) DR-DC10 is $14 each from a seller that has a quantity of them, so 64 of them would be $896. The linked post said they didn’t want to wait weeks, but I found a seller claiming to have 60 of them in NYC at $14 each. I guess it’s their choice. It’s a neat project; I need to think of what I can do with blocks like that.
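For comparison, the per-unit prices quoted in these comments scale to 64 cameras as follows (simple multiplication, using the prices as quoted rather than current ones):

```python
def fleet_cost(n_cameras, price_each):
    """Total cost of buying one accessory per camera."""
    return n_cameras * price_each

for label, price in [("power adapter, low quote", 12), ("DR-DC10 coupler", 14)]:
    print(f"{label}: 64 x ${price} = ${fleet_cost(64, price)}")
# power adapter, low quote: 64 x $12 = $768
# DR-DC10 coupler: 64 x $14 = $896
```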

Why not use some Kinects? Five, maybe. It would give a much better result.

Hahaha no. Kinect cameras are terrible compared to a proper digital camera, in so many ways it’s not worth listing them all.

It’s actually not unreasonable, but it is a very different approach to the problem. A camera rig gets you an instant scan, while a Kinect takes longer because you have to pan it over the whole body. Google “Scan-O-Rama” by the great Fredini to see what can be done with a single Kinect.

By using multiple Kinects it can be scanned faster, and more accurately too, because the Kinect captures 3D data directly.

Now just switch to video mode and have some real fun muhaha.

It sounds enticing, but what they didn’t mention was the lighting. My guess (read: how we do it with the pro rig I built at work) is that flashes are being used. That’s definitely not the only option, but it’s the easiest way to sync that many cameras while getting crisp, bright pictures.

Switching to video requires a few things: constant lighting (bright video lights are a cost jump over photo flashes), frame syncing (which usually requires special timing hardware), and a way to store video streams from 64 cameras (yes, recording to their internal SD cards is an option; it’s just a pain when it comes to getting the footage onto your computer later).
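To put a number on the storage problem: total data is cameras × bitrate × duration. The 24 Mbit/s bitrate below is a hypothetical figure for 1080p video, not the A1400’s actual recording rate.

```python
def video_gigabytes(n_cameras, mbit_per_s, seconds):
    """Total recorded data in gigabytes (10**9 bytes) across all cameras."""
    return n_cameras * mbit_per_s * 1e6 * seconds / 8 / 1e9

print(f"{video_gigabytes(64, 24, 60):.2f} GB per minute of capture")  # 11.52
```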

Actually, the lighting is visible and mentioned. It’s the LED strips (the bright stripes you see on the poles). We use continuous lighting from very bright LEDs, so no flash, and it’s fairly flat and even. We thought about diffusing it but haven’t so far. We haven’t tried video yet, though.

Apart from this being a usage of “affordable” I wasn’t previously familiar with, isn’t there a way to just move 8 cameras up and down some rails fast enough to get almost the same data?

There seems to be enough light, and with a high enough shutter speed a bit of movement doesn’t matter that much.
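How much movement a given shutter speed tolerates can be estimated by converting the distance moved during the exposure into pixels on the subject. A sketch with hypothetical numbers (1 m/s rail speed, 1/500 s exposure, 0.5 mm of subject per pixel); none of these values come from the Artanim rig.

```python
def blur_pixels(speed_mm_per_s, exposure_s, mm_per_pixel):
    """Approximate motion blur, in pixels, for a camera or subject moving at
    constant speed parallel to the image plane during one exposure."""
    return speed_mm_per_s * exposure_s / mm_per_pixel

print(blur_pixels(1000, 1 / 500, 0.5))  # about 4 pixels
```

This covers blur within a single frame only, not whether the subject stayed put between frames.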

Not wanting to go “it’s not a hack”, because it really is a hack, but a hack that is basically a rebuild of a pro solution will still tend to be quite expensive, whereas a hack from a different perspective could slash the costs dramatically.

There are a couple of factors there that are a problem. For static objects it might be worth a try. Heck, in that situation you can take your high-end DSLR, at similar cost, and shoot however many photos you like from whatever angles you need. There you can take your time.

The problem is humans, though. Tell them to stand still and they will always move somewhat, especially in some of the specific poses you use when capturing humans. How much of a problem that is depends on your capture/reconstruction technology.

In this case where photogrammetry is being used, it would mess up correspondences between photos, giving pretty poor results. The issue is not blurriness in a single photo (adequate lighting will allow high shutter speeds), but faulty correspondences between photo pairs. You’re messing up the triangulation, so to say. So you really would like all photos to be taken at the same time.
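To see why non-simultaneous shots hurt, here is a toy two-view triangulation using the midpoint method (the point halfway between the closest points on two viewing rays). It is a simplified stand-in for what photogrammetry software does with many views and bundle adjustment, not Agisoft’s actual algorithm; the camera positions and the 1 cm shift are hypothetical.

```python
import math

def _dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def _along(origin, direction, t):
    return tuple(o + t * d for o, d in zip(origin, direction))

def triangulate_midpoint(c1, d1, c2, d2):
    """Midpoint of the shortest segment between rays c1 + s*d1 and c2 + t*d2."""
    w0 = tuple(a - b for a, b in zip(c1, c2))
    a, b, c = _dot(d1, d1), _dot(d1, d2), _dot(d2, d2)
    d, e = _dot(d1, w0), _dot(d2, w0)
    denom = a * c - b * b
    if abs(denom) < 1e-12:
        raise ValueError("rays are parallel")
    s = (b * e - c * d) / denom
    t = (a * e - b * d) / denom
    p, q = _along(c1, d1, s), _along(c2, d2, t)
    return tuple((x + y) / 2 for x, y in zip(p, q))

cam1, cam2 = (0.0, 0.0, 0.0), (2.0, 0.0, 0.0)   # metres
point = (1.0, 0.0, 2.0)                          # a spot on the subject

# Both photos taken at the same instant: the rays meet at the true point.
ray1 = tuple(p - c for p, c in zip(point, cam1))
ray2 = tuple(p - c for p, c in zip(point, cam2))
print(triangulate_midpoint(cam1, ray1, cam2, ray2))

# The subject drifts 1 cm sideways before camera 2 fires.
moved = (1.01, 0.0, 2.0)
ray2_late = tuple(p - c for p, c in zip(moved, cam2))
est = triangulate_midpoint(cam1, ray1, cam2, ray2_late)
print(f"error: {math.dist(est, point) * 100:.2f} cm")  # error: 1.12 cm
```

The reconstructed point ends up farther off than the 1 cm the subject actually moved, because the mismatch also shows up as a depth error.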

I understand where the weak spots would lie, but in this case, would it really matter? I have done some photogrammetry with various processes and I see a lot of wiggle room. You can actually compensate for slight differences between sources, so from a triangulation perspective this need not cause a fatal error. How much precision is needed from the model? Doesn’t that depend on the application?

I can see why such a system needs at least 2 cameras with a clear view of any point on the object being scanned. But would it really suffer that much with fewer cameras, well distributed on a sphere?
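The “at least two cameras see every point” condition can be explored with a toy model: poles evenly spaced on a circle around a convex cylinder standing in for a body, where a surface point counts as seen by any pole it faces. This ignores self-occlusion (arms, legs), field of view and vertical coverage, and the 1.5 m and 0.2 m radii are hypothetical, so it only illustrates the trend.

```python
import math

def min_views(n_poles, ring_radius, body_radius, samples=360):
    """Fewest poles that see any point on a convex cylindrical 'body'.

    Toy model: poles evenly spaced on a circle; a surface point counts as
    seen when it faces the pole (no self-occlusion, unlimited field of view).
    """
    worst = n_poles
    for k in range(samples):
        phi = 2 * math.pi * k / samples
        nx, ny = math.cos(phi), math.sin(phi)          # outward surface normal
        px, py = body_radius * nx, body_radius * ny    # surface point
        seen = 0
        for i in range(n_poles):
            theta = 2 * math.pi * i / n_poles
            cx, cy = ring_radius * math.cos(theta), ring_radius * math.sin(theta)
            if (cx - px) * nx + (cy - py) * ny > 0:    # point faces this pole
                seen += 1
        worst = min(worst, seen)
    return worst

for poles in (4, 8):
    print(poles, "poles ->", min_views(poles, 1.5, 0.2), "views minimum")
```

With these numbers, eight poles leave every point visible to at least three of them, while with four poles a point directly facing a pole is seen by only that one, below the two-view minimum.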

The program they are using already can’t handle additional data. Look at the model it generated: it’s pretty terribad. Kinect is terribad too.

I’ve seen better results from very sparse photo sets taken from a drone.

Agisoft is actually quite capable with more data than they’re using. I’ve been on mobile all day so I haven’t checked out the data yet, but my guess is the quality is suffering from poor image quality of cheap cameras.

You cannot expect too much from a normal camera when creating a 3D model. You need a special camera such as a Kinect, from which we can get more 3D data.

Photogrammetry has been around a lot longer than “special” 3D cameras and can produce stunningly accurate results. Photogrammetric setups are used to scan artifacts like pottery with accuracy on the order of a hundred microns, preserving even fine texture details. The tech used in Kinect-type devices produces a much coarser 3D map.

These cameras are cheap, but not crap. The resolution is plenty high and it being a fixed lens, I’d bet the lens distortion is minimal and easily corrected, if necessary at all.

This looks like a great rig for the cost, and I like the ability to “snap” 3D objects rather than rotating them around.

However… surely, since it’s photogrammetry-based, it’s going to have trouble with solid-colored objects? How can it tell which details in which images match up if the color is uniform?
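That intuition can be shown with normalized cross-correlation (NCC), a standard measure for comparing small image patches; whether Agisoft uses NCC internally is not stated here. NCC is immune to overall brightness changes, but it is undefined for a perfectly uniform patch, which has no pattern to match. The patch values below are made up.

```python
import math

def ncc(a, b):
    """Normalized cross-correlation of two equal-length (flattened) patches.

    Returns None when either patch has zero variance: a perfectly uniform
    patch carries no pattern, so there is nothing to match.
    """
    n = len(a)
    ma, mb = sum(a) / n, sum(b) / n
    da = [x - ma for x in a]
    db = [y - mb for y in b]
    va = sum(x * x for x in da)
    vb = sum(y * y for y in db)
    if va == 0 or vb == 0:
        return None
    return sum(x * y for x, y in zip(da, db)) / math.sqrt(va * vb)

textured = [10, 200, 30, 180]
brighter = [x + 20 for x in textured]   # same texture seen by a brighter camera
flat = [128] * 4                        # a solid-colored patch
print(ncc(textured, brighter))  # 1.0
print(ncc(flat, [90] * 4))      # None
```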

Hello,

Could I use a Nikon camera (Coolpix S3400, 20.1 MP) to build the same professional 3D scanner?

I believe the Twinstant full-body 3D scanner from Twindom uses Canon PowerShot cameras, but it has 91 of them (found it at http://web.twindom.com/twinstant/).

Hello everyone, does anyone know what lights were used in this scanner? Are they neon? Thank you all.