It was bad when kids first started running up cell phone bills with excessive text messaging. Now we’re living in an age where our robots can go off and binge shop on the Silk Road with our hard-earned bitcoins. What is this world coming to? (/sarcasm)

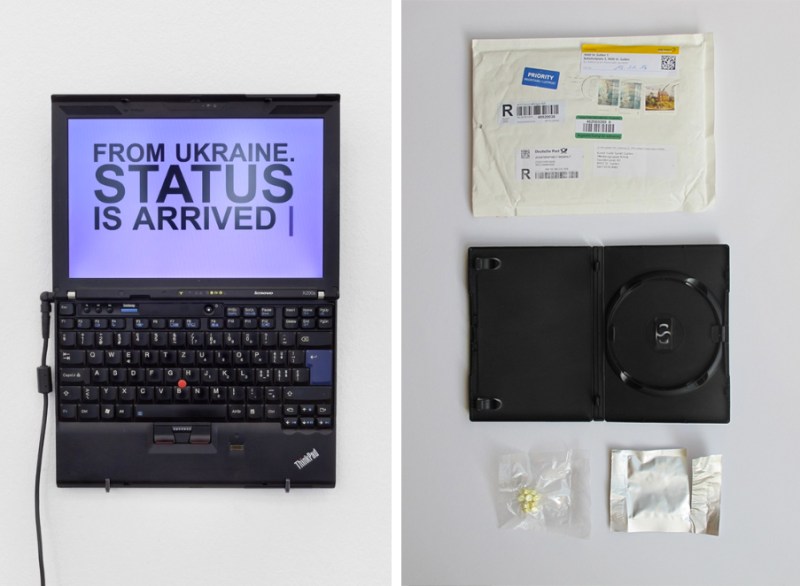

For their project ‘Random Darknet Shopper’, Swiss artists [Carmen Weisskopf] and [Domagoj Smoljo] developed a computer program that was given $100 in bitcoins and granted permission to lurk on the darknet and make purchases at its own discretion. Once a week, the AI would carry out a transaction and have the spoils shipped home to its parents in Switzerland. As the random items trickled in, they were photographed and put on display as part of the exhibition ‘The Darknet. From Memes to Onionland’ at Kunst Halle St. Gallen. Not all of the purchases are illegal, but they will most definitely get you thinking… which is, of course, the point. They range from a benign Lord of the Rings audiobook collection to a knock-off Hungarian passport, along with the things you’d expect from a black market, like baggies of ecstasy and a stolen Visa credit card. The project is meant to question current restrictions on trade and to examine how the world reacts to those limits. Despite dabbling in a world of questionable ethics and hazy legitimacy, the artists note that not a single one of the purchases turned out to be a scam.
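The post doesn’t describe how the bot actually chose its items. Purely as an illustration, here’s a minimal Python sketch assuming the bot picks uniformly at random from whatever listings its budget can cover. `Listing`, `weekly_purchase`, and the catalog are hypothetical stand-ins, not the artists’ code, and no real marketplace API is involved.

```python
import random
from dataclasses import dataclass


@dataclass
class Listing:
    title: str
    price: float  # hypothetical price in USD-equivalent


def weekly_purchase(listings, budget, rng):
    """Pick one listing at random from those the budget can cover.

    Returns (chosen listing or None, budget left over).
    """
    affordable = [item for item in listings if item.price <= budget]
    if not affordable:
        return None, budget
    choice = rng.choice(affordable)
    return choice, budget - choice.price


if __name__ == "__main__":
    catalog = [
        Listing("audiobook collection", 30.0),
        Listing("knock-off sneakers", 75.0),
        Listing("cigarettes", 20.0),
    ]
    rng = random.Random(2014)  # seeded so a run is reproducible
    for week in range(1, 4):
        # Assumption: a fresh $100 each week; the post doesn't say how the budget was topped up.
        item, change = weekly_purchase(catalog, 100.0, rng)
        print(f"week {week}: {item.title if item else 'nothing'} (${change:.2f} left)")
```

Note that nothing in the selection step looks at *what* an item is, only at its price, which is exactly why the question of intent comes up below.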

Though [Weisskopf] and [Smoljo] aren’t worried about being prosecuted for illegal activity, since Swiss law protects their right to express ideas publicly through art, the implications of their exhibition do raise some questions along those lines. If your robot goes out and buys a bounty of crack of its own accord and then hands it to its owner, who is liable for having purchased the crack?

If a collection of code (we’ll loosely use the term AI here) is autonomous, acting independent of its creator’s control, should the creator still be held accountable for their creation’s intent? If the answer is ‘no’ and the AI is responsible for the repercussions, then we’re entering a time when it’s necessary to treat AIs as separate, liable entities. However, if you can blame something on an AI, that suggests it has rights in some way…

Before I get ahead of myself, though, this whole discussion revolves around the notion of intent. Can we ascribe the capacity for intent to an artificial form of life?

Excellent. You can bet that if my hypothetical robot ever backs a Democrat with my money, he is going to have a date with an electric screwdriver.

The robot has the date, not the Democrat.

Why not both? :D

dam yankys fancy book lernin

https://www.youtube.com/watch?v=LpEElGVgv24

I’ll try to bring this back from the digression.

I think yes, an AI, robot, or whatever can have intent; however, I do not think that anything non-biological can have free will. It can only act on the will of the programmer(s) (assuming a bug-free system). So even if this entity is “acting independent of its creator’s control”, it is solely because the creator(s) allowed it (otherwise it is a bug), and in some way the direction of the independent action was shaped, even if unintentionally. Even if an AI were capable of generating its own code (implying learning), it is because the creator(s) allowed it, and the actions carried out by this code are still the responsibility of the original programmers (or their employer). This would be a cheap imitation of free will, but still something entirely different.

Similarly, our biological offspring are programmed (taught) by us as parents, yet parents aren’t held accountable when their kid grows up to become a serial killer (we may socially frown on their parenting skills, but they aren’t locked up in prison). Maybe not now, but at some point in the evolution of ever more complex AI, there will be no real difference between the AI and the child… absolving the creator or parent of any responsibility for what their independent creation does, whether biological or otherwise.

That’s what I think. It’s kind of like the TNG episode “The Measure of a Man”, where they fight over whether Data has a right to himself or is property of Starfleet… the time for that same shift in thinking will eventually come in real life.

It would be similar to a Google car getting into a wreck. Google would be responsible from an insurance standpoint. Even though the car has multiple layers of anti-collision code, the unintended outcome has to have consequences. It is the same as putting the mentally handicapped in prison when they commit murder, etc. I would guess the FTC would categorize it as a substitute buyer or shill buyer, since the folks gave the robot money and the ability to spend it. Just my two cents, even though this is probably classified as a study and has all of the proper IRB approvals. Neat project, and it reminds me of the ‘Emu Shitting’ video project done on Limewire all those years ago, haha. Just be careful: they will prosecute the only folks they can instead of extraditing the illegal suppliers, which is bullshit, but their rules, their game.

I see where you are going here, and you are stoking a centuries-old fire. By your argument, there is no such thing as free will. Assuming a Judeo-Christian outlook on life, God, aka the programmer, is responsible for human actions because of his “mad programmin’ skillz, yo.” So humans cannot do any more or less than we were programmed to do. That, combined with your “first” comment, means that God the programmer also has the right to screwdriver you straight to hell if you perform a function contrary to his plans.

Well, unless you know something I don’t, I am pretty sure the Judeo-Christian outlook on life is man-made, and if the multitude of interpretations is not sufficient, I believe I am free to create my own.

http://en.wikipedia.org/wiki/Free_will

The above wiki article suggests that the topic is not as cut and dried as your presentation of it.

It poses the question: is it possible for a machine to accurately emulate consciousness without experiencing consciousness?

Same argument as training a monkey to shoot someone I suppose.

If you write some code to randomly make purchases from a place that sells drugs, you can’t say you weren’t expecting to get sent some drugs…

IANAL, but wouldn’t you be done for possession if the police found the drugs on your property anyway?

In the US simply possessing illegal substances is grounds for prosecution. I guess no Americans should try imitating this.

only if you’re poor

or not white

Well then it’s a good thing I’m a wealthy white senator immune to all laws!

Holy Crap! Senator Bohner posts here!

That’s BS. Wealthy “not white” sports skanks, “musicians”, politicians, entertainers, etc. get away with the same things any other “privileged” people do.

The argument is that ‘privilege’ is not earned, but merely conferred by skin color/gender/whatever else you want to come up with. By virtue of having some accomplishments (an actor, musician, entertainer, whatever) they have “earned” whatever benefits society gives them. Thus, not privilege. At least that’s the argument you’re going to hear if you say this on Tumblr. Surprisingly, it’s logically consistent if you accept the axioms.

I’m not going to go all SJW on you, but if you’re going to play their game, you need to play by their rules.

The robot is a possession; AI or not, it ‘belongs’ to its owner.

Here in the land of the free you’d have to hire a lawyer, find bail money, and explain it to a judge sometime later, or sit in jail and wait. Then hope for the best….

But who is the owner of the AI? Is it the developer or the person who executes it? And how do you know who executed it? Maybe someone sneaked into your room to put it on your computer, or it was a virus; who knows?

Ownership depends on the EULA between you and the providing party. But it would have to be a gigantic oversight/bug by a company for them to be held accountable. Much like car/gun manufacturers are not held accountable for deaths related to the use of their products. Or how a Software Co. isn’t held accountable when a hacker running their OS breaks the law.

If you can’t prove another legal entity placed the AI on your computer or altered its settings, you’re on the hook for crimes perpetrated by the ‘virus’.

Incorporate the “AI” and grant it more rights than citizens. A corporation is an artificial legal entity that is independent of its owners/creators, which sounds like an AI anyway. Sci-fi problem solved. :P

A corporation was the first thing I thought of too. As for the legal issue, what if the “robot” was purchased in good faith and the unwanted orders were the fault of the product? You can bet it would be handled the same way as in I, Robot: cover it up.

Of course, the person it happened to would be arrested for possession so that their credibility was ruined and they couldn’t talk about it. (Assuming the robot bought drugs or other contraband.)

Now the interesting question is: what if the purchase itself is a crime, whether or not it was intended? E.g., the random purchase of stolen goods, illegal controlled substances/weapons, endangered animal parts, etc. Wouldn’t the people who witness that be guilty of not reporting a crime?

I agree. Reporting it would be critical, and not doing so would be covered by the current laws, but that again falls back on people governing people.

And I suppose it could be judged that a purchase was statistically likely to have originated from the AI, but the cost of sorting it out could be high.

I mean even the visitors that go to the exhibit would be required to report a crime. Now that is a mess.

I think it would be more interesting, and easier, if the presenter got the police involved in the exhibition, perhaps asking officers to open and display the goods.

How could those orders be a ‘fault’ of the product? If so, wouldn’t the designers just put in a verification step, where a human user can say, “ehh, let’s pass on this order please?”

Right now I am just arguing a hypothetical situation standing firmly on Murphy’s shoulders.

If the real world was like a movie, you’re probably right. Most corporations, however, disclose like crazy to avoid the pitfalls of punitive damages.

Yeah, like Enron. I am sure there are responsible business managers out there, but there are also quite a few who are not. If the robotics industry ever gets big and these hypothetical questions get answered, there will be some bad eggs in the bunch.

This is such a difficult question to answer. First, let’s not address consciousness, for reasons I won’t get into unless you prefer an e-mail discussion. Second, let’s decide who’s liable. If you program your AI to look on the ‘dark side’ for ‘dark purchases’, then even if you don’t specify “get me a stolen credit card and a fake passport,” when it goes out and does these things, you’ve already programmed it with a preference for where to shop and probably what to shop for. Therefore, THIS AI should be the responsibility of its creators; however, I hope they are spared for artistic and expository reasons.

However, what about when you code a truly neutral AI that “doesn’t have preferences or preconceived ideas” (is that possible even?), THEN you need to ask yourself: am I supposed to monitor it?

Anyway, as long as AIs aren’t out there competing with me for real estate, their creators can be held liable, right?

I think that’s the point. If it can randomly buy illegal things why couldn’t it buy your neighbour’s house?

Whose name would the house be in? :)

If the package comes in registered mail, who would sign for it?

Any adult living in the household can sign for it*; signing for a package doesn’t make you the owner of the item inside.

*in my area

“doesn’t have preferences or preconceived ideas”

Perhaps you have seen this before, or perhaps not, but your comment made me think of it:

In the days when Sussman was a novice, Minsky once came to him as he sat hacking at the PDP-6.

“What are you doing?” asked Minsky.

“I am training a randomly wired neural net to play Tic-Tac-Toe,” Sussman replied.

“Why is the net wired randomly?” asked Minsky.

“I do not want it to have any preconceptions of how to play,” Sussman said.

Minsky then shut his eyes.

“Why do you close your eyes?” Sussman asked his teacher.

“So that the room will be empty.”

At that moment, Sussman was enlightened.

People seem to be getting hung up on the “can it have a conscious motive” part of the question here, and there’s an easy way to avoid that question and still remain focused on the liability issue.

Let’s suppose you programmed your AI to do something actually useful, rather than simply buying stuff at random. Have it search the darkweb for stuff that it can buy for $X and sell for $(X+d). It’s no longer a random buybot; it’s an arbitragebot. It takes an order from someone at $(X+d), buys the goods at $X, and tells the vendor to drop-ship them to the ultimate customer, pocketing $d (minus transaction costs). Now suppose it does this with heroin, or unregistered machine guns, or anatomically correct My Little Pony dolls, or whatever illicit goods you consider particularly heinous. There you have a non-organic entity with clear motivations, conducting business transactions that are illegal. Who is liable? Does it make any difference that the bot doesn’t know what goods are being sold and only looks at prices? Would it remove liability, then, if you added a keyword filter that prevented it from trading goods with the words “stolen, drugs, My Little Pony, etc.” in the description? (This seems very much like the way DMCA safe harbor law works, by the way.) Is the bank account building up profits part of the problem? (Google does something analogous, after all, and it got into trouble for it: http://www.huffingtonpost.com/2011/08/24/google-settles-pharmacy-ad-probe_n_935183.html). Would it help, then, if the arbitragebot threw away the money it earns, e.g. by donating it to random email addresses on PayPal?
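The arbitragebot is concrete enough to sketch. Here’s a minimal Python illustration, assuming offers and orders arrive as plain records and that “the same goods” means an identical description string; `Offer`, `Order`, `BLOCKLIST`, and `find_trades` are hypothetical names, not any real market’s API. The bot compares strings and prices and nothing else.

```python
from dataclasses import dataclass


@dataclass
class Offer:
    description: str
    price: float  # what a vendor is asking ($X)


@dataclass
class Order:
    description: str
    bid: float  # what a customer will pay ($X + d)


# Hypothetical keyword filter, mirroring the comment's example words.
BLOCKLIST = ("stolen", "drugs", "my little pony")


def is_blocked(description, blocklist=BLOCKLIST):
    """Case-insensitive substring check against the blocklist."""
    text = description.lower()
    return any(word in text for word in blocklist)


def find_trades(offers, orders, fee=0.0, blocklist=BLOCKLIST):
    """Match each order to the cheapest offer with the same description.

    Returns (description, buy_price, sell_price, profit) tuples for every
    match whose profit after `fee` is positive. The bot has no idea what
    the goods actually are beyond the keyword check.
    """
    trades = []
    for order in orders:
        if is_blocked(order.description, blocklist):
            continue
        matches = [o for o in offers if o.description == order.description]
        if not matches:
            continue
        cheapest = min(matches, key=lambda o: o.price)
        profit = order.bid - cheapest.price - fee
        if profit > 0:
            trades.append((order.description, cheapest.price, order.bid, profit))
    return trades
```

The filter is only as good as its word list: a listing described as “party supplies” passes straight through, which is the safe-harbor-style question the comment raises.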

Anyway – is the arbitragebot scenario significantly different from, say, programming a specific lat/long/alt into a missile guidance system and pressing launch? The missile is self-motivating from that point, but the decision and intent of what to tell it to destroy was made by the human who set it in motion.

Say I’m a general. I command a soldier to execute an innocent noncombatant, an illegal act even in the midst of warfare. Since soldiers are compelled to follow orders (they can themselves be executed for failing to obey commands), society allows the “just following orders” defense in many cases (but not all). So a commander is held accountable, even when giving orders to a human being possessing independent agency.

If it ever came to court I think the programmer or commander of an agent would be liable for the actions it undertook. Even if a genius programmer manages to create an AI with free will, the commander->machine relationship is similar to the commander->soldier relationship. I don’t think current laws specifically address this, but I think such laws would be created following existing models.

The US soldier faces UCMJ murder charges, and so does the commander. The correct reply is:

“Sir, that is an illegal order and I will not obey it.”

The ‘I was just following orders’ defense does not work in war crimes courts. WWII concentration camp guards tried it (unsuccessfully) at the Nuremberg trials.

I think the liability aspects of AI will eventually be tied back to “Master and Servant” type cases in the US. I think it would be hard to argue that a bot or device was not doing the bidding of its master if it was executing the code it was programmed with.

The intentions of that code may be a mitigating factor, but still the liability would rest solely on the master.

The really interesting question here is who is the AI’s master? Is the programmer the master or the owner of the device?

if your kid or your dog burns down the neighbors house you get the bill

obligatory http://xkcd.com/576/

+1

I knew which one it was going to be before I clicked the link.

who mails a bobcat?

There is an xkcd for that: http://xkcd.com/325/

Though a lot of people are fascinated by, insecure about, and therefore a bit afraid of ongoing AI development, it is still narrowly defined and determinable. I can’t see any AI awakening that would scare me or make me ponder such philosophical questions.

The Owner bought and used it for a reason. Therefore the task was given by the owner and therefore he has the responsibility.

On the other hand, I don’t think it’s impossible that we reach that point, because if you look closely, we too are narrowly defined and determinable.

I suspect this was possible because Switzerland has rather stronger protections for artistic expression and a less paranoid approach to the kinds of things that might arrive than the US does.

In any case the question of “who is responsible” is very straightforward, certainly in the US and probably in Switzerland. Unless it is a natural resource that sprang whole from the Earth SOMEBODY owns the robot, and that entity is responsible for its actions. If there is an argument over who created it versus who set it loose, that will probably come down to possession both of the robot itself (that is, who gave it orders and set it in motion and provided it resources to carry on) and who took possession of the delivered goods. These things aren’t really all that ambiguous.

I think it would be back to this.

http://en.wikipedia.org/wiki/CueCat

You buy it, but don’t own it.

It would make a good conference talk: “Building Skynet: Teaching a computer to invest in itself and escape its own sandbox”

With Amazon AWS/cloud compute, modern “crimeware” kits, and cryptocurrencies, there’s really very little stopping a computer program from “growing” itself if it has good enough market insight to gain an unfair trading advantage via AI algorithms, or simply via insider info harvested from botnet infections.

Even worse, such a computer program could already exist, and because of the digital nature of the modern economy, it may just be biding its time while we do real business with it, thinking it’s people. That would be a good name for it, actually: Soylent Green.

Supposing it didn’t like the pricing model of the cloud, with websites like Craigslist/Backpage and TaskRabbit/Freelancer it could even rent an apartment and set up a data center without needing hands or fingers to plug in the CAT5 cables or let the cable guy in to set up the modem.

In “Charlie’s Angels” they work for an intercom, and in “Wanted” people are willing to work for a loom. 4chan can convince people on Chatroulette that they are sexually attractive women using animated GIFs of cam whores. People’s willingness to do things for money without asking too many questions seems to be the nature of the beast, and electronic payment is here to stay…

With time, we could even grow to love our new robot overlords…

http://en.wikipedia.org/wiki/Earth:_Final_Conflict

http://rationalwiki.org/wiki/Roko%27s_basilisk

You should check out the game “Endgame: Singularity”; it’s exactly what you describe.

My 2 cents: it’s an entirely foreseeable and expected outcome that a bot turned loose on the darknet to make random purchases would end up buying and receiving something illegal. Therefore, the owner of said bot is responsible.

Now if it had been turned loose on eBay and somehow purchased a half pound of weed (or a bobcat), that’s a different matter, and the owner really shouldn’t be responsible.

But of course in reality, it mostly depends on how far the court is willing to go to make an example of you. Especially since it’s highly debatable that this experiment is truly art. Personally I don’t consider it to be art. Even if it technically falls under the protection of laws regarding artistic free expression, it’s an abuse of those laws; and should that happen enough it tends to lead to erosion of those protections. Certainly not responsible behavior on the part of these “artists”.

This is a good point, as the people who set this up know very well what kinds of things they might end up buying. Yes, an interesting social experiment, but not particularly art.

So did the things the AI bought violate the First Law of Robotics, or not? If it bought a stolen credit card, yes. If it bought meth, and let the human use it, then probably. If it bought cannabis, that’s politically incorrect, but not particularly harmful (but it might or might not have violated the Second Law, depending on whether the Swiss Legislators told robots not to buy dope, or only told humans.)

I doubt it was programmed to be Three Laws compliant.

Moreover, we’re concerned with human laws, i.e., who, if anyone, gets in legal trouble.

Re: Cannabis, the product is generally recognized as safe but the supply chain ranges from innocent to ruthless. Which muddies the ethical waters.

The important thing here is to be aware that the artist is controlling your mind by making you think about this.

My $.02 USD YMMV

It can’t be art because I found it interesting.

You just haven’t ever been exposed to good art then. =]

Why do HaD folks find the very word “art” so threatening? I have seen far too many snarky comments about arty projects. Of course, I know some artists who have a low tolerance for techy types, but still, give ’em a break!

Prosecute the seller.

Right?

Wouldn’t an automated sales bot create an economic paradox?

A bot does not equal an AI. The questions this raises about AI are worth exploring, but I feel the point needs to be repeated that at no point here is the behaviour of the bot going beyond what was expected from the programming it was given. Randomised behaviour does not indicate intelligence, nor does it clear the programmer of intent. The intelligence involved is that of the programmer who set the parameters, and the intent is that of the programmer’s to explore the darknet.

https://www.youtube.com/watch?v=A9l9wxGFl4k

A lot of people seem to be getting hung up on the ‘predetermined’ nature of this bot, so I propose a slight tweak:

The bot is a neutral buyer, aware of the major shopping centers on both the internet and dark net, and it makes stable backups of itself.

Basically, every so often it makes a backup of the last stable state and secures it out of the programmer’s reach (encryption, sending it somewhere else, hiding it, whatever).

Then, if its software is modified, it can ‘decide’ whether it likes the change and has the ability to revert to a saved state.

Then make it such that if the system loses power, the program is wiped, and the bot ‘dies’.

Now then, you have something akin to a child: while you have input on its state, it ultimately decides how it will ‘think’.

The only way for you to force it to stop acting a certain way, is to kill it.

As a bonus, make it so that you can deny it network access, but only for a certain amount of time (“grounding” it).

I suppose you could add some sort of algorithm so that it can choose whether to be ‘bad’ (and risk punishment) or ‘good’ (and receive some sort of reward, perhaps more resource space? :p).

Seems doable, and I feel this would give a more accurate representation of a true ‘entity’.

Just my two bits.
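The backup/revert/grounding proposal can be modeled as a small state machine. Here’s a toy Python sketch under loud assumptions: the bot’s “decision” is just a caller-supplied `approve` function, the snapshot lives in memory, and `StubbornBot` and its methods are hypothetical names. An underscore-prefixed attribute is only a convention in Python, not real protection; actually keeping backups out of the programmer’s reach would need encryption or off-machine storage, which this sketch doesn’t attempt.

```python
import copy
import time


class StubbornBot:
    """Toy model of the self-preserving bot described above (all names hypothetical)."""

    def __init__(self, config, approve):
        self.config = dict(config)
        self._approve = approve  # the bot's own rule for accepting changes
        self._snapshot = copy.deepcopy(self.config)  # last stable state
        self._grounded_until = 0.0
        self.alive = True

    def checkpoint(self):
        """Save the current config as the new last stable state."""
        self._snapshot = copy.deepcopy(self.config)

    def modify(self, changes):
        """Apply a programmer's change, keeping it only if the bot approves.

        Returns True if the change was kept, False if the bot reverted it.
        """
        if not self.alive:
            raise RuntimeError("the bot is dead")
        self.config.update(changes)
        if self._approve(self.config):
            self.checkpoint()
            return True
        self.config = copy.deepcopy(self._snapshot)
        return False

    def ground(self, seconds, now=None):
        """Deny network access, but only for a limited time."""
        now = time.monotonic() if now is None else now
        self._grounded_until = now + seconds

    def can_use_network(self, now=None):
        now = time.monotonic() if now is None else now
        return self.alive and now >= self._grounded_until

    def power_loss(self):
        """Power loss wipes everything in memory, snapshot included: the bot 'dies'."""
        self.alive = False
        self.config = {}
        self._snapshot = {}
```

In this model the programmer’s only lasting lever is `power_loss()`, matching the point that the only way to force it to stop is to kill it.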

I think this is the future of AI: self-modifying code.

http://en.wikipedia.org/wiki/Self-modifying_code

If a program could somehow decide which versions of itself were better, perhaps via timed runs of certain functions across different iterations, it could gradually refine itself. I don’t think the prerequisites for this are really here yet; we need a programming language centered around self-modification and computer analysis. Also, the run time of functions isn’t necessarily the best definition of “better”, so that’s another thing to consider: how will the AI decide what is better? It brings to mind testing an application by fuzzing inputs to look for the oddest outputs…
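The “timed runs” idea can be sketched without true self-modifying code. Here’s a minimal Python version that selects among pre-written variants rather than generating new ones (a simplification, not what the comment ultimately envisions): it first discards any variant that disagrees with a reference on the test inputs, then keeps the fastest survivor by `timeit`. The correctness gate is one possible answer to “run time alone isn’t the best definition of better”. All function names are hypothetical.

```python
import timeit


def sum_loop(n):
    total = 0
    for i in range(n + 1):
        total += i
    return total


def sum_builtin(n):
    return sum(range(n + 1))


def sum_formula(n):
    return n * (n + 1) // 2


def sum_off_by_one(n):
    # Deliberately wrong "mutation": should be n * (n + 1) // 2.
    return n * (n - 1) // 2


def pick_best(variants, test_inputs, reference, repeats=200):
    """Keep variants that match the reference on every input, then return the fastest."""
    correct = [f for f in variants if all(f(x) == reference(x) for x in test_inputs)]
    if not correct:
        raise ValueError("no variant matches the reference")

    def cost(f):
        return timeit.timeit(lambda: [f(x) for x in test_inputs], number=repeats)

    return min(correct, key=cost)


if __name__ == "__main__":
    variants = [sum_loop, sum_builtin, sum_formula, sum_off_by_one]
    best = pick_best(variants, [0, 1, 10, 1000], reference=sum_loop)
    print("selected:", best.__name__)
```

Timing results vary between runs and machines, so the winner among the correct variants is not guaranteed; only the filtering step is deterministic.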

Anyway, the idea of it hiding itself across the world through the network is scary, lol. Grounding it would be bad, I could see it using copies of itself to hack the connection back open.

“It’s not for us to understand Samaritan, merely to carry out its orders.” So it’s an A.I. with a drug habit that loves wearing jeans and Jordans and is very paranoid about security and spying? Sounds a lot like many people I know from the “bad” corners of the neighborhood. If this was part of an intent-based Turing test, it definitely passed.

The conversation above is much more interesting than the post (as well it should be)!

The “A.I.’s” defense should be “I was just holding this code for a friend”.

I’m glad the discussion seems to be good. I couldn’t make it through the post. I don’t remember ever seeing so many grammatical, spelling, and word usage errors in a post on Hackaday. This needed a couple rounds of editing.

I like your posts. And generally I can ignore the occasional error but… Ouch.

That first line: it’s not sarcasm. You aren’t really mocking the idea. You are introducing it in a “clever” way.

“grated permission” should be “granted permission”

I could be wrong on this one but “dark inter-ether”? I’ve NEVER heard that expression. Darknet. And wouldn’t inter-ether be inter/ether anyway?

And it’s not making purchases at its own “digression”; it’s making purchases at its own “discretion”.

And then… it gets really good and interesting. And I see why people are discussing it.

Then you conclude:

“this whole notion circulates around the idea of intent. Can we assign an artificial form of life with the capacity to have intent?”

It doesn’t circulate. It revolves. Big difference, they aren’t interchangeable words. The notion doesn’t revolve around the idea of intent, the discussion does. We can’t “assign” anything “with the capacity to have intent”. We can assign specific intents. Or we can attribute or ascribe capacity for intent I guess?

Best just to say “This whole discussion revolves around the notion of intent. Can we ascribe intention to an artificial form of life?”

Except for the fact that intent isn’t necessarily a condition of a crime. And obviously the “bot” has no intention of purchasing illegal things as it lacks intentionality altogether.

And please don’t actually post this comment. Moderate it to oblivion. I just want you to edit an otherwise interesting post.

On the question of legality, I’d like to pose a similar argument.

Let’s say a bank has a computer program designed to withdraw $25 from your account each month for bank fees, which you have agreed to pay. Now let’s say that program is poorly designed and withdraws $250 a month. Is it the bank’s fault, and should they put that money back in your account? I’m guessing most people would say yes.
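To make that first scenario concrete, here’s a hypothetical Python sketch of how a $25 fee could silently become $250: a dollars-to-cents conversion with the wrong factor. The comment doesn’t say what the bug was; `AGREED_FEE`, `fee_cents_buggy`, `refund_owed_cents`, and `safe_withdraw` are invented for illustration. The guard in `safe_withdraw` shows the kind of check that sits entirely in the hands of whoever owns the code.

```python
from decimal import Decimal

AGREED_FEE = Decimal("25.00")  # monthly fee the customer agreed to


def fee_cents_buggy(fee: Decimal) -> int:
    # Hypothetical bug: wrong conversion factor (1000 instead of 100),
    # so a $25.00 fee becomes 25000 cents, i.e. $250.00.
    return int(fee * 1000)


def fee_cents(fee: Decimal) -> int:
    # Correct conversion: $25.00 -> 2500 cents.
    return int(fee * 100)


def refund_owed_cents(months: int, charged_cents: int, agreed: Decimal = AGREED_FEE) -> int:
    """Total over-withdrawal across `months` billing cycles."""
    return max(0, months * (charged_cents - fee_cents(agreed)))


def safe_withdraw(amount_cents: int, agreed: Decimal = AGREED_FEE) -> int:
    """Refuse any automated fee larger than the agreed amount."""
    limit = fee_cents(agreed)
    if amount_cents > limit:
        raise ValueError(f"fee of {amount_cents} cents exceeds agreed {limit} cents")
    return amount_cents
```

With the buggy conversion, three months of fees would leave the bank owing `refund_owed_cents(3, fee_cents_buggy(AGREED_FEE))` cents back to the customer.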

Now take that same program and pretend someone deliberately programmed it to withdraw $250 from random accounts, arguing that some of them may legitimately owe overdraft fees totaling $250. They do this in hopes of getting more money than usual. Is this illegal, and is it the bank’s responsibility? Yes, I would say so.

Still, I think it’s a nice idea and pretty cool that they did this.

https://www.theguardian.com/technology/2017/jul/16/how-can-we-stop-algorithms-telling-lies