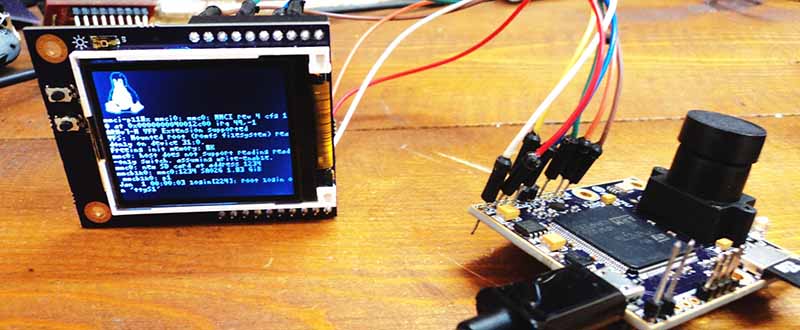

Computer vision is a tricky thing to stuff into a small package, but last year's Hackaday Prize had an especially interesting project make it into the top 50 finalists. The OpenMV is a tiny camera module with a powerful microcontroller that will detect faces, take a time-lapse, record movies, and detect specific markers or colors. Like a lot of the great projects featured in last year's Hackaday Prize, this one made it to Kickstarter and is, by far, the least expensive computer vision module available today.

[Ibrahim] began this project more than a year ago when he realized simple serial JPEG cameras were ludicrously expensive, and adding even simple machine vision tasks made the price climb even higher. Yet the camera modules that go into low-end cell phones don't cost that much, and high-power ARM microcontrollers are pretty cheap as well. So the OpenMV project began, and now [Ibrahim] has a small board with a camera that runs Python and can act as a master or slave to Arduinos or any other microcontroller board.

The design of the OpenMV is extraordinarily clever, able to serve as a simple camera module for a microcontroller project, or something that can do image processing and toggle a few pins according to logic at the same time. If you’ve ever wanted a camera that can track an object and control a pan/tilt servo setup by itself, here you go. It’s a very interesting accessory for robotics platforms, and surely something that could be used in a wide variety of projects.
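To make the "track an object and drive a pan/tilt rig" idea concrete, here is a minimal plain-Python sketch of one common approach: threshold on a color, take the centroid of the matching pixels, and nudge the servo angles with a proportional controller. This is not the OpenMV API. The function names, the red threshold, the gain of 0.1, and the 0 to 180 degree servo range are all hypothetical choices for illustration.

```python
def color_centroid(pixels, width, height, is_target):
    """Return the (cx, cy) centroid of pixels where is_target(pixel) is True, or None."""
    sum_x = sum_y = count = 0
    for y in range(height):
        for x in range(width):
            if is_target(pixels[y * width + x]):
                sum_x += x
                sum_y += y
                count += 1
    if count == 0:
        return None
    return sum_x / count, sum_y / count


def clamp(value, lo, hi):
    return max(lo, min(hi, value))


def pan_tilt_step(pan, tilt, centroid, width, height, gain=0.1, lo=0.0, hi=180.0):
    """One proportional-control step that nudges the servos toward the blob."""
    if centroid is None:
        return pan, tilt  # nothing detected: hold the current position
    cx, cy = centroid
    err_x = cx - (width - 1) / 2   # positive: blob is right of image center
    err_y = cy - (height - 1) / 2  # positive: blob is below image center
    # The sign convention depends on how the servos are mounted (an assumption here).
    return clamp(pan - gain * err_x, lo, hi), clamp(tilt - gain * err_y, lo, hi)


def is_red(pixel):
    """Hypothetical color threshold for an (r, g, b) pixel."""
    r, g, b = pixel
    return r > 200 and g < 50 and b < 50


# Usage: a 4x4 RGB frame with a single red pixel at x=3, y=0.
W, H = 4, 4
frame = [(0, 0, 0)] * (W * H)
frame[0 * W + 3] = (255, 0, 0)
centroid = color_centroid(frame, W, H, is_red)  # (3.0, 0.0)
pan, tilt = pan_tilt_step(90.0, 90.0, centroid, W, H)
print(centroid, pan, tilt)
```

Run in a loop, each frame moves the servos a fraction of the way toward centering the blob; a small gain trades speed for stability.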

It's awesome! I have already backed it on Kickstarter. I am collecting parts for my next robot. Already have two LIDAR-Lite sensors too.

My past robots are on my blog, http://mobilewill.us (the two tabs at the top).

Google can't find the word "Lidar" on "mobilewill.us", so what Lidar are you using? Something off the shelf? Something out of your own kitchen? Please, more info!

https://www.sparkfun.com/products/13167

Aw, I thought it would be some kind of DIY lidar along the lines of a SICK lidar (http://goo.gl/LLeibr), not just a proximity sensor. I guess I rejoiced too soon =|

It was crowdfunded on Dragon. Mine came in a few weeks ago, and then I saw SparkFun (SFE) is stocking it.

Originally it was going to use IR, but then it switched to a laser. It's pretty awesome: good for long range and fast enough for scanning.

Here’s the cheapest one I could find a little while ago: $400 : http://www.seeedstudio.com/depot/RPLIDAR-360-degree-Laser-Scanner-Development-Kit-p-1823.html

How is this any better than a Raspberry Pi +camera?

It's standalone: it doesn't require Linux (or any OS), it can run on its own or be controlled by another board, and it draws less current. Which one is better depends on the vision requirements of your project.

You can start with power consumption:

“Current draw is approximately 140mA, steady state”

http://elinux.org/Rpi_Camera_Module:

“The RPi + camera draws about 260 mA more current when recording video, than without the camera. The Model B is about 550 mA by itself, so camera use pushes it over 800 mA.”

But perhaps you too could actually bother to read about both to compare and contrast.
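As a back-of-envelope illustration of what those current figures mean on a battery, the sketch below divides a capacity by each quoted draw. The 2000 mAh battery is a hypothetical example, both figures are assumed to be at the same supply voltage, and regulator losses and battery derating are ignored, so real runtimes will be shorter.

```python
OPENMV_MA = 140          # "approximately 140mA, steady state"
RPI_B_MA = 550 + 260     # Model B alone plus the extra draw while recording video
BATTERY_MAH = 2000       # hypothetical battery capacity


def runtime_hours(capacity_mah, draw_ma):
    """Ideal runtime: capacity divided by a constant current draw."""
    return capacity_mah / draw_ma


for name, draw in [("OpenMV", OPENMV_MA), ("RPi B + camera", RPI_B_MA)]:
    print(f"{name}: {draw} mA -> {runtime_hours(BATTERY_MAH, draw):.1f} h")
# OpenMV: 140 mA -> 14.3 h
# RPi B + camera: 810 mA -> 2.5 h
```

Whatever the battery, the ratio stays the same: roughly 5.8 times the runtime at the quoted figures.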

The absolute first thing that jumps out at me here is size: this setup seems a lot smaller than a Raspberry Pi plus camera module. Then there's the reduced power requirement, which makes it a better choice for battery-powered projects.

It has 256KB of RAM, probably just what is on the microcontroller. That seems like a pretty limiting constraint. The sensor is 2 megapixels, which means you can't even hold a full frame in memory. It seems like the only way to do image processing is to downsample the image so it fits into the 256KB SRAM (less whatever working space your system needs).

What am I missing?
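The arithmetic behind that concern is easy to check. The sketch below computes raw frame-buffer sizes at a few common resolutions, taking 1600×1200 as an assumed resolution for the "2M pixel" sensor, at 1 byte per pixel (grayscale) and 2 bytes per pixel (RGB565), and compares them with 256 KB. It ignores the working space mentioned above.

```python
RAM_BYTES = 256 * 1024  # 256 KB, as stated in the comment

# Common sensor modes; 1600x1200 is assumed for the "2M pixel" figure.
RESOLUTIONS = {
    "UXGA 1600x1200": (1600, 1200),
    "VGA 640x480": (640, 480),
    "QVGA 320x240": (320, 240),
    "QQVGA 160x120": (160, 120),
}
BYTES_PER_PIXEL = {"grayscale": 1, "RGB565": 2}


def frame_bytes(width, height, bytes_per_pixel):
    """Size of one uncompressed frame buffer."""
    return width * height * bytes_per_pixel


for name, (w, h) in RESOLUTIONS.items():
    for fmt, bpp in BYTES_PER_PIXEL.items():
        size = frame_bytes(w, h, bpp)
        verdict = "fits" if size <= RAM_BYTES else "does NOT fit"
        print(f"{name} {fmt}: {size:,} bytes, {verdict} in {RAM_BYTES:,}")
```

A full 2-megapixel RGB565 frame is about 3.8 MB, while QVGA RGB565 (153,600 bytes) fits with room to spare, which is why downsampled modes are the natural working size at this RAM budget.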

humans also throw away most of the visual information in their processing systems

adaptive machine vision tosses out most of the pixels anyway; you can collect and sample just the interesting pixels in the active field of view
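One way to read "sample the interesting pixels" is region-of-interest (ROI) windowing with subsampling: read the sensor a row at a time and keep only a strided patch, so the full frame never sits in RAM. The sketch below assumes a hypothetical `read_row(y)` readout callback and a synthetic 1600×1200 8-bit sensor; it is not how any particular camera driver works.

```python
def sample_roi(read_row, frame_w, frame_h, roi, stride=1):
    """Keep only pixels inside roi=(x, y, w, h), taking every stride-th row and column.

    read_row(y) is a hypothetical callback returning one sensor row; only one
    row is held at a time, never the whole frame.
    """
    x0, y0, w, h = roi
    if x0 < 0 or y0 < 0 or x0 + w > frame_w or y0 + h > frame_h:
        raise ValueError("ROI extends outside the frame")
    kept = []
    for y in range(y0, y0 + h, stride):
        row = read_row(y)                    # one row in memory at a time
        kept.append(row[x0:x0 + w:stride])   # keep every stride-th pixel in the ROI
    return kept


def fake_sensor_row(y, width=1600):
    """Hypothetical stand-in for a sensor readout: a synthetic 8-bit gradient."""
    return bytes((x + y) % 256 for x in range(width))


# Usage: a 64x64 window at half resolution from a 1600x1200 frame.
patch = sample_roi(fake_sensor_row, 1600, 1200, roi=(800, 600, 64, 64), stride=2)
kept_bytes = sum(len(r) for r in patch)
print(len(patch), len(patch[0]), kept_bytes)  # 32 32 1024
```

The patch is 1,024 bytes against roughly 1.9 MB for the full 8-bit frame; moving the window each frame to follow the target is the "adaptive" part.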

“humans also throw away most of the visual information in their processing systems”

That seems like a big overgeneralization. Throwing away information after it's somehow "evaluated" to be irrelevant is very different from just throwing it away before you even start processing.

Human vision is remarkable.

The Wikipedia article is worth a read: http://en.wikipedia.org/wiki/Saccade

Also http://en.wikipedia.org/wiki/Transsaccadic_memory

Almost everything… check out the forum

https://groups.google.com/forum/#!forum/openmvcam

It’s got 16MB of RAM, actually.

Bit disappointed he didn’t add a ULPI PHY to the HS USB port. At least that way you could stream raw information back to a PC at a pretty good clip. Other than that, nice project.
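The bandwidth gap behind that remark can be estimated. The sketch below assumes that without an external ULPI PHY the port is limited to USB full speed (12 Mbit/s), compared with high speed (480 Mbit/s), and uses those signaling rates as optimistic ceilings; real throughput is lower. The QVGA RGB565 at 30 fps stream is a hypothetical example.

```python
USB_FULL_SPEED_BPS = 12_000_000   # USB full-speed signaling rate
USB_HIGH_SPEED_BPS = 480_000_000  # USB 2.0 high-speed signaling rate


def raw_stream_bps(width, height, bytes_per_pixel, fps):
    """Bits per second needed to stream uncompressed frames."""
    return width * height * bytes_per_pixel * 8 * fps


def max_fps(link_bps, width, height, bytes_per_pixel):
    """Upper bound on frame rate if the whole link carried raw pixels."""
    return link_bps / (width * height * bytes_per_pixel * 8)


need = raw_stream_bps(320, 240, 2, 30)
print(f"QVGA RGB565 @ 30 fps needs {need / 1e6:.1f} Mbit/s")
print(f"Full-speed ceiling: {max_fps(USB_FULL_SPEED_BPS, 320, 240, 2):.1f} fps")
print(f"High-speed ceiling: {max_fps(USB_HIGH_SPEED_BPS, 320, 240, 2):.1f} fps")
```

Even as an upper bound, full speed tops out below 10 fps of raw QVGA color, while high speed leaves plenty of headroom.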

Sounds a little like CMU’s Pixy project…

Yes, a little like the Pixy, but this one does face detection and a whole lot more, and is a fair amount cheaper than the Pixy. And it can be programmed in Python (the Pixy is quite difficult to program beyond setting a few configuration/tuning values). I saw a demo of this at a Colorado Maker Faire last year, and it was quite impressive.

That was my first thought, this looks like the CMUcam ideas, updated and extended a little. But now I see that CMU hasn’t been resting on their laurels either, and their new generations are pretty spiffy too!

$25 for shipping to Canada? C'mon!

Here’s a faster sensor:

http://pixhawk.org/modules/px4flow

- 168 MHz Cortex M4F CPU (128 + 64 KB RAM)
- 752×480 MT9V034 image sensor
- L3GD20 3D gyro
- 16 mm M12 lens (IR block filter)
- Size: 45.5 mm × 35 mm
- Power consumption: 115 mA at 5 V
- MT9V034 machine vision CMOS sensor with global shutter
- Optical flow processing on a 4×4 binned image at 400 Hz
- Superior light sensitivity with 24×24 μm super-pixels
- Onboard 16-bit gyroscope, up to 2000°/s and 780 Hz update rate; default high-precision mode at 500°/s
- Onboard sonar input and mount for Maxbotix sonar sensors (HRLV-EZ4 recommended)
- USB bootloader
- USB serial up to 921600 baud (including live camera view with QGroundControl)
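"4×4 binned" means each output pixel combines a 4×4 block of sensor pixels, so 752×480 becomes 188×120 and each super-pixel collects light from 16 photosites (if the native pitch is 6 μm, that gives the quoted 24×24 μm). Sensors typically bin in hardware; the plain-Python version below only illustrates the arithmetic on a row-major grayscale image, and the function name is hypothetical.

```python
def bin_image(pixels, width, height, factor=4):
    """Average non-overlapping factor x factor blocks of a row-major grayscale image."""
    if width % factor or height % factor:
        raise ValueError("dimensions must be divisible by the binning factor")
    out_w, out_h = width // factor, height // factor
    out = []
    for by in range(out_h):
        for bx in range(out_w):
            total = 0
            for dy in range(factor):
                row_start = (by * factor + dy) * width
                for dx in range(factor):
                    total += pixels[row_start + bx * factor + dx]
            out.append(total // (factor * factor))  # integer mean of the block
    return out, out_w, out_h


# Usage: an 8x4 image whose pixel values are 0..31 in row-major order.
small, w, h = bin_image(list(range(32)), 8, 4)
print(small, w, h)  # [13, 17] 2 1

# Output size for the MT9V034's 752x480 frame.
_, w, h = bin_image([0] * (752 * 480), 752, 480)
print(w, h)  # 188 120
```

Averaging trades resolution for lower noise per output pixel, which suits optical flow, where a small, clean image processed at a high rate matters more than detail.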

Similar device, same MCU, yet 3x more expensive and sold out everywhere. Tells me Ibrahim is on the right track.

Anyone know how this compares to the CMUcam5 Pixy?

( http://www.cmucam.org/projects/cmucam5 )

https://www.tindie.com/products/AP_tech/tsl1401cl-linescan-camera-/

For example, you can't pull raw images from the Pixy's SPI/USB. Instead, it transmits the object-tracking data. Maybe OpenMV can do both?!

Also, does it work with other video software like OpenCV, pixython, etc.? I always thought OpenCV could only work on a desktop (Intel, full Linux), but I think it's recently been made to work on an embedded ARM platform (BeagleBone).