Lightning photography is a fine art. It requires a lot of patience and, until recently, some fancy gear. [Saulius Lukse] has always been fascinated by lightning storms. As a kid, he used to shoot lightning with his dad’s old Zenit camera, which was rather challenging. Now he’s figured out a way to do it using a GoPro.

He films at 1080p and 60 fps, which, we admit, isn’t the greatest resolution, but we’re sure the next GoPro will shoot 4K60. This means you can just set up your GoPro outside during the storm and let it do what it does best: film video. Normally, you’d then have to comb through the footage and extract each lightning frame by hand. That could be a lot of work.

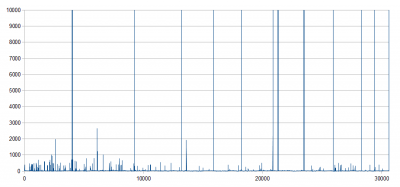

Instead, [Saulius] wrote a Python script using OpenCV. It spots the lightning by detecting fast changes in the image and saves the motion data to a CSV file.
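The article doesn’t show the script itself, so here is a minimal sketch of one common way to detect “fast changes in the image”: score each frame by its mean absolute difference from the previous frame and log the scores to CSV. The metric, the CSV columns, and the function names (`frame_scores`, `write_scores_csv`, `read_gray_frames`) are assumptions for illustration, not [Saulius]’s actual code. OpenCV is only needed to decode the video, so it is imported inside that one function.

```python
import csv
import io

import numpy as np


def frame_scores(frames):
    """Return one change score per frame: the mean absolute difference
    (0-255 scale) between each frame and the previous one.
    The first frame has no predecessor, so its score is 0.0."""
    scores = []
    prev = None
    for frame in frames:
        cur = frame.astype(np.int16)  # widen from uint8 so differences can go negative
        if prev is None:
            scores.append(0.0)
        else:
            scores.append(float(np.mean(np.abs(cur - prev))))
        prev = cur
    return scores


def write_scores_csv(scores, fileobj):
    """Write a header plus one (frame, score) row per frame.
    The column layout is a hypothetical example of a per-frame motion log."""
    writer = csv.writer(fileobj)
    writer.writerow(["frame", "score"])
    for i, score in enumerate(scores):
        writer.writerow([i, f"{score:.3f}"])


def read_gray_frames(path):
    """Yield grayscale frames from a video file using OpenCV."""
    import cv2  # local import: the scoring code above runs without OpenCV installed

    cap = cv2.VideoCapture(path)
    try:
        while True:
            ok, frame = cap.read()
            if not ok:  # end of file or decode error
                break
            yield cv2.cvtColor(frame, cv2.COLOR_BGR2GRAY)
    finally:
        cap.release()
```

Wired together, `write_scores_csv(frame_scores(read_gray_frames("storm.mp4")), f)` with `f` opened via `open("lightning.csv", "w", newline="")` would produce the log; both filenames are placeholders. Because frames are streamed through a generator, only two frames are held in memory at a time.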

The result? All the lightning frames plucked out from the footage — and it only took an i7 processor about 8 minutes to analyze 15 minutes of HD footage. Not bad.
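For scale, if the whole 15-minute clip was shot at 60 fps, that is 54,000 frames analysed in roughly 480 s, or about 112 frames per second. The post doesn’t say how the lightning frames are picked out of the per-frame data, so the sketch below swaps in a simple robust outlier test: flag frames whose score exceeds the median plus `k` times the median absolute deviation (MAD), then merge nearby flagged frames into events. The threshold rule and the parameters `k`, `min_jump`, and `max_gap` are hypothetical choices, not taken from the original script.

```python
import statistics


def find_flash_frames(scores, k=6.0, min_jump=1.0):
    """Return indices of frames whose change score stands out.

    Threshold = median + max(k * MAD, min_jump). The median and MAD are
    barely moved by a handful of very bright frames, unlike mean/stddev.
    `min_jump` keeps the threshold above the median when the footage is
    almost perfectly static (MAD close to zero)."""
    med = statistics.median(scores)
    mad = statistics.median(abs(s - med) for s in scores)
    threshold = med + max(k * mad, min_jump)
    return [i for i, s in enumerate(scores) if s > threshold]


def group_events(indices, max_gap=2):
    """Merge flagged frame indices that are at most `max_gap` frames
    apart into events, returned as (first, last) index pairs."""
    events = []
    for i in indices:
        if events and i - events[-1][1] <= max_gap:
            events[-1] = (events[-1][0], i)  # extend the current event
        else:
            events.append((i, i))  # start a new event
    return events
```

Grouping matters because a single strike often brightens several consecutive frames; without it, one flash would be saved as a run of near-duplicate images.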

Now if you feel like this is still cheating, you could build a fancy automatic trigger for your DSLR instead…

So, what are the white pixels that show up in the video with each lightning strike? Is it due to the magnetic field, or is this some charged-particle effect?

Never mind, I see it’s due to some crappy video compression. I don’t see it in the original animated GIF.

Looks like WordPress went and messed it up. Sorry about that!

No worries. It had my imagination going for a second, thinking about the magnetic field strength of a lightning strike.

You mean to tell me this couldn’t have been done with an antenna that senses the incoming strike and triggers the camera? I remember reading about a lightning-sensing antenna circuit that would sound off when the static buildup was high enough to cause a strike: you would hear the buildup, then the strike as a pop.

Isn’t that approach mentioned in the bottom of the article?

Also, in my book, a single simple device plus software (open source, yay) beats dedicated hardware, since anyone can easily reproduce it this way.

Not really. That links to a device that checks for IR signatures indicating that lightning will strike about a second before it does. What I’m talking about is the ionic buildup that occurs just before the air reaches its breakdown voltage, which is when the lightning strike occurs. This can be sensed with a radio antenna and made audible. Once audible, you would hear the buildup as a whistle rising in pitch and then the pop just before the strike. If you set the camera to trigger on the pop, you would capture the lightning strike.

Although, FWIW, someone in the comment section of that article did mention something about it.

There’s probably some gear or software that the stargazing community has for meteor spotting that could be used for this with minimal adaptation.

1080 might not be the best resolution, but these pics look a heck of a lot better than the lightning pics I’ve tried taking with my old Canon S3-IS.

Since we’re so full of great ideas, how about setting it up to make an animated GIF (or at least isolate the 20 to 60 frames needed for one) that takes in the whole lightning strike? You might find some interesting stuff, such as leaders. Since you have the peak, it’s just center +/- .
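The “center +/-” idea from the comment above can be sketched in a few lines: find the brightest frame of an event, then take a fixed number of frames on each side, clamped to the start and end of the video. The function names and the `half_width` parameter are hypothetical; at 60 fps, the 20 to 60 frames mentioned correspond to roughly 1/3 s to 1 s of footage.

```python
def event_peak(scores, first, last):
    """Index of the highest-scoring frame within the event [first, last]."""
    return max(range(first, last + 1), key=lambda i: scores[i])


def clip_window(peak, half_width, n_frames):
    """Frame range [start, stop) centred on `peak`, extending `half_width`
    frames on each side, clamped to the bounds of a video of `n_frames`."""
    start = max(0, peak - half_width)
    stop = min(n_frames, peak + half_width + 1)
    return range(start, stop)
```

For example, `clip_window(peak, 15, n_frames)` yields 31 frames (about half a second at 60 fps) unless the peak sits near either end of the clip, in which case the window is simply shortened rather than shifted.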

Photographing lightning strikes has never required fancy gear. You just set up a tripod, pick a relatively long exposure for the ambient light, and shoot away. Since the advent of digital cameras, it doesn’t even cost anything.

You need a fancy enough camera to have a programmable timer that shoots hundreds of pictures in series without too much processing in between.

Regular point-and-shoot cameras take five pictures and then spend the next two minutes writing them to the SD card, not to mention that the delay between pictures is longer than the exposure itself, which gives you a greater chance of missing the lightning than capturing it.
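The comment’s point about dead time can be put in rough numbers. Assuming the flash is much shorter than one exposure and arrives at a uniformly random moment, the chance of catching it is the fraction of time the shutter is open. Here $t_{\text{exp}}$ (exposure) and $t_{\text{gap}}$ (dead time between shots) are symbols introduced for this sketch, and the 2 s and 3 s values are hypothetical; the five-shot burst and two-minute write come from the comment.

```latex
\begin{aligned}
P_{\text{capture}} &\approx \frac{t_{\text{exp}}}{t_{\text{exp}} + t_{\text{gap}}} < \frac{1}{2}
  \quad \text{whenever } t_{\text{gap}} > t_{\text{exp}} \\
t_{\text{exp}} = 2\,\text{s},\ t_{\text{gap}} = 3\,\text{s}: \quad
P_{\text{capture}} &\approx \frac{2}{2 + 3} = 0.4 \\
\text{5 shots, then a } 120\,\text{s write}: \quad
P_{\text{capture}} &\approx \frac{5 \cdot 2}{5\,(2 + 3) + 120} = \frac{10}{145} \approx 0.07
\end{aligned}
```

Under these assumptions the write stall, not the per-shot gap, dominates: the camera ends up blind for more than 90% of the cycle.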

I wonder if you could replace the OpenCV code by simply analysing the MPEG-4 stream, which already contains motion vectors as part of its compression mechanism (unless OpenCV already uses those instead of working directly on the decoded video).

After scrutinizing the OpenCV code, I realized how inefficient it is for the sake of being generic. It often invokes tens of thousands of lines of code only to use something that would have needed just a few. So it could be optimized, I’m sure.

Any chance you could share your script, please?