Just about the hardest thing you’ll ever do with a microcontroller is video. The timing must be precise, and even low-resolution video requires relatively large amounts of memory, something microcontrollers don’t generally have a lot of. HDMI? That’s getting into microcontroller wizard territory.

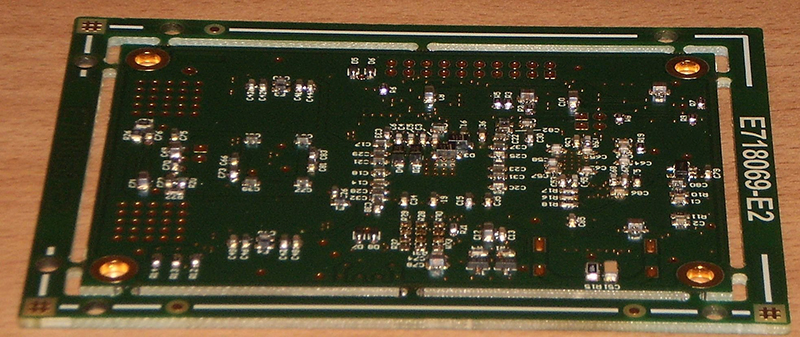

Despite these limitations, [monnoliv] is working on a GPU for microcontrollers. It outputs 1280×720 over HDMI, has a 24 bit palette, and 2D hardware acceleration.

It’s a very interesting project; usually, if you want graphics and a display in a project, you’re looking at a Linux system, and all the binary blobs and closed source drivers that come with that. [monnoliv]’s HOMER video card doesn’t need Linux, and it doesn’t need a very high-powered microcontroller. It’s just a simple SPI device with a bunch of memory and an FPGA that turns the most minimal microcontroller into a machine that can output full HD graphics.
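To put rough numbers on the memory and bus constraints mentioned above, here is a back-of-the-envelope sketch. The 3 bytes per pixel, the 8-bit indexed alternative, and the 20 MHz SPI clock are all assumptions for illustration, not figures from the HOMER project.

```python
# Back-of-the-envelope numbers for a 1280x720 display fed over SPI.
# Assumptions (not from the project): 3 bytes per pixel for truecolor,
# 1 byte per pixel for an indexed mode, a 20 MHz SPI clock, no overhead.

WIDTH, HEIGHT = 1280, 720


def framebuffer_bytes(width, height, bytes_per_pixel):
    """Size of one uncompressed frame in bytes."""
    return width * height * bytes_per_pixel


def full_frame_seconds(frame_bytes, spi_clock_hz):
    """Time to shift one full frame over SPI at one bit per clock."""
    return frame_bytes * 8 / spi_clock_hz


truecolor = framebuffer_bytes(WIDTH, HEIGHT, 3)
indexed = framebuffer_bytes(WIDTH, HEIGHT, 1)
print(truecolor, indexed)                                   # 2764800 921600
print(round(full_frame_seconds(truecolor, 20_000_000), 3))  # 1.106
```

Under these assumptions a single truecolor frame is far larger than the RAM on a small microcontroller, and pushing every pixel over SPI would take about a second per frame. That is presumably why the memory and the 2D acceleration sit on the card itself, so the microcontroller only has to send drawing commands.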

This isn’t the only open source graphics card for microcontrollers in the Hackaday Prize; just a few days ago, we saw VGAtonic, another SPI-controlled video card for microcontrollers, this time outputting VGA instead of HDMI. Both are excellent projects, and if they make it into production, both should be cheap: under $100. Just the thing if you want to play around with high-resolution video without resorting to Linux.

What’s the problem with a closed source driver? As long as it works… and it probably works better than trying to shoehorn everything through a slow SPI bus.

Sometimes running a whole embedded Linux system is not ideal, or is overkill, for certain applications. If a cheap micro can run some basic video/animations at a fraction of the cost/power, I think it’s worth looking into.

The FPGA board will cost more than a raspi.

this!

It’s a nice project if you do it for the learning experience or for love, but commercially it won’t fly.

Folks just don’t seem to get it. Unless you possess the tools and skills to modify the device, are provided with source code documented well enough for you to understand (or you’re skilled enough not to need documentation), AND it’s worth the time and effort to modify it, open source provides no advantage to you. It might as well be closed.

How many people – other than the creator – is this project effectively open source to? ZERO.

No, I’m not exaggerating. Yes, there are a few people here who do, in fact, possess the tools and skills to modify it. But would not invest the time or effort. I’ve seen about a half dozen open source FPGA-based graphics devices at this point. Surely it would be advantageous to use a prior open source device as a starting point for the next one, right? Nope. All developed entirely from scratch. Not a single one was built on the work of another. No open source material from prior projects reused or modified.

So thumbs down to this project, for failing multiple reality checks. It denounces other existing options, and proudly proclaims it’s open source, yet this benefits ZERO people in the real world. It won’t provide any advantages in performance, price, size, or (presumably) power consumption over other options, either. I’m sure it will be a wonderful learning experience for [monnoliv], but to claim any other positive attribute is bogus.

Being open source may benefit someone who wanted to look at how the code works as an example. I do not see any benefit for it to have been closed source.

..or just use a RasPi as a slave for a fraction of the cost.

Yup, better to use a Pi for HDMI…it’s way cheaper.

Or, use a Propeller for basic VGA….very cheap.

Good luck trying to make this board for $100. Double-sided assembly (2 passes SMT/reflow), SMT with lots of pins – they charge per pin for low volume and/or an upfront setup charge for pick and place. If you manage to do this, please post where and QTY…

At the end of the day, you can’t beat the price of a Pi. Terasic FPGA boards would be a better deal.

“The point” train sped right past you guys.

The point, as usual, is to have fun and (probably) to learn some new things, which is a perfectly fine reason in itself. No need to pretend there’s more to it.

Ow, ow. Did you read my description/detail text? The purpose is NOT using Linux, then NOT using a RasPI (or whatever ARM board flavor) since there is no (or little) documentation about their graphic chip.

In my opinion, as there is no alternative to using Linux when one wants to go for some high-level graphics, a lot of projects using Linux are overkill.

There are a large number of industrial embedded systems that have their own GPU built around an FPGA (but usually that FPGA is more complex, and so more expensive, than the one I chose). Think also on the development time, for example using FreeRTOS (that match perfectly the power of Cortex-M processors) is definitively less time consuming. Think of guys that don’t want to learn Linux, that use Arduino and want to have nice graphics, …

Concerning the price: if a Kickstarter campaign lets me produce 100 boards, for example, the assembly will not be so expensive. You know it’s the startup cost that is not cheap.

I hope that this project will be produced :-)

>documentation about their graphic chip.

Does that matter if all you care about is getting stuff on the screen?

>Think also on the development time, for example using FreeRTOS (that match perfectly the power of Cortex-M processors) is definitively less time consuming. Think of guys that don’t want to learn Linux, that use Arduino and want to have nice graphics, …

You can pick up “linux” and SDL in a few afternoons if you already know C, and you’ll have libraries that are easier to use, a ton more memory, a debugger that doesn’t go to shit because it can’t properly work out what your RTOS is doing… If you haven’t looked at SDL, go and check it out.

>I hope that this project will be produced :-)

IMHO the project makes no sense. If you want a basic framebuffer on HDMI for a microcontroller, then a breakout board for the HDMI framer the BeagleBone Black uses for its LCD->HDMI conversion, plus a microcontroller with an LCD controller built into it, would make much more sense. If you want OpenGL-level functionality, then one of the many ~$30 boards that can run Linux and have enough support for the GPU that it doesn’t totally suck makes more sense.

I thought this round of the hackaday prize was about changing the world or something…

Wow, what a comment!

Let me explain: I have 2 RasPi, I can use Linux, I’ve used SDL for a project, I know that some guys are building a $9 chip, etc. And then? Didn’t you read the description of my project? Have you a solution for those guys that use Arduino or other microcontrollers boards and have to study Linux simply because they want to do some graphics? Let me know if any.

>Have you a solution for those guys that use Arduino or other microcontrollers boards and have to study Linux simply because they want to do some graphics?

If you were building a skyscraper would you use a kid’s plastic bucket and spade to do it?

Hey, have you considered that the SPI interface is rather hot-pluggable?

You could have a bunch of small microcontroller boards that are really cheap that just expose a header which you attach your (portable?) Homer w/ LCD to, and when you attach the cable a sense line detects 5V signal or something (remember to pull-down to prevent noise) causes the microcontroller to initialise or reset its graphics routines, clear the display (perhaps Homer has a reset line), then do whatever the thingy you were going to do was.

I’m actually pretty interested.

You could /also/ do this with a RasPi basically replacing Homer – but it’s not as cool, dammit! This is *hack*aday, hack something together for the sake of it.

“Have you a solution for those guys that use Arduino or other microcontrollers boards and have to study Linux simply because they want to do some graphics? Let me know if any.”

Sure. One solution is for someone to make a very simple, basic Raspberry Pi (or BeagleBoard or whatever device you want to use) distro dedicated to receiving SPI requests to draw to its framebuffer. It boots up, clears the framebuffer and sits there waiting for commands. For everyone who is a user of it, no Linux knowledge is needed, because it is just an SD card image.

With this solution, the mass production problem is then solved. Just wire it up and play, with no Linux knowledge necessary. Additional hardware acceleration functions can be added in whatever languages can interface with the SPI I/O pins and have access to the raw frame buffer.
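As a rough sketch of what such a "dumb display server" might do with the bytes it receives, here is a tiny command interpreter that draws into an in-memory RGB framebuffer. The opcodes, the byte layout, and the `Framebuffer` and `execute` names are all invented for illustration; a real image would read the bytes from the SPI peripheral and write to the actual Linux framebuffer instead.

```python
# Hypothetical byte protocol for an SPI-fed framebuffer. The opcodes and
# payload layouts below are invented for illustration only.
import struct

CMD_CLEAR = 0x01      # payload: r, g, b
CMD_PIXEL = 0x02      # payload: x (u16), y (u16), r, g, b
CMD_FILL_RECT = 0x03  # payload: x, y, w, h (u16 each), r, g, b


class Framebuffer:
    def __init__(self, width, height):
        self.width, self.height = width, height
        self.pixels = bytearray(width * height * 3)  # RGB888, starts black

    def set_pixel(self, x, y, rgb):
        # Silently clip anything outside the visible area.
        if 0 <= x < self.width and 0 <= y < self.height:
            i = (y * self.width + x) * 3
            self.pixels[i:i + 3] = bytes(rgb)

    def get_pixel(self, x, y):
        i = (y * self.width + x) * 3
        return tuple(self.pixels[i:i + 3])

    def fill_rect(self, x, y, w, h, rgb):
        for yy in range(y, y + h):
            for xx in range(x, x + w):
                self.set_pixel(xx, yy, rgb)


def execute(fb, data):
    """Run a buffer of commands against fb; return how many commands ran."""
    pos = count = 0
    while pos < len(data):
        op = data[pos]
        pos += 1
        if op == CMD_CLEAR:
            rgb = struct.unpack_from(">3B", data, pos)
            pos += 3
            fb.fill_rect(0, 0, fb.width, fb.height, rgb)
        elif op == CMD_PIXEL:
            x, y, *rgb = struct.unpack_from(">HH3B", data, pos)
            pos += 7
            fb.set_pixel(x, y, rgb)
        elif op == CMD_FILL_RECT:
            x, y, w, h, *rgb = struct.unpack_from(">HHHH3B", data, pos)
            pos += 11
            fb.fill_rect(x, y, w, h, rgb)
        else:
            raise ValueError(f"unknown opcode 0x{op:02x}")
        count += 1
    return count
```

The microcontroller side would then only need to send a few bytes per primitive, for example an 11-byte payload for a filled rectangle, rather than every pixel.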

Please note that I’m not saying that this project isn’t useful or interesting, and it is sure to have its uses. I’ve had similar thoughts myself a number of times, especially when working on retro gaming projects. However, I think it is important to realize two things:

1) Someone out there is almost certain to have other ideas on how to do something

2) Projects should always be for fun, and not to impress others ;)

I didn’t think about using a raspi like this, it’s a good idea.

Concerning your two things, at the beginning I decided to do this project for me only (I’m mainly a Cortex-M user), then I decided to share my work (it’s a lot of work) here to help other microcontroller users. Impressing others, if any, is a side effect!

Trust me, trying to impress doesn’t win votes. Been there, done that and only managed to get the T-shirt. :P It is the stupidly simple projects within the average reader’s level that seem to get the most skulls. Do the project for yourself, for what you enjoy and believe in.

Last year, it was Arduino something, 3D something, quadcopter something that hogged the attention. At least this year, they also have mini contests. The crowded Atmel one for the Arduino has a few times lower chance to win. lol.

>Think of guys that don’t want to learn Linux, that use Arduino and want to have nice graphics, …

The guys that use Arduino will have a hard time learning an RTOS. Maybe an Arduino programming framework that targets the RPi is a better product.

An RTOS is not mandatory. BTW Linux is not an RTOS but a simple Arduino is RT :-)

What do you mean by RTOS? What do you mean by RT? This means different things to different people. And what do you mean by Linux? There are real-time variants of the Linux kernel, e.g. RTLinux, RTAI and others. For some fairly low latency requirements, plain Linux as found in Raspbian might be sufficient (after all, the CPU in even a lowly Raspberry is _much_ faster than any Arduino).

Arduino framework is not RT as there are no max timing specs that say what to expect. It depends on whatever haphazard user-written libraries/frameworks are copy/pasted together. The unsophisticated user base’s code favors busy loops and as such doesn’t utilize resources efficiently. If someone decided to make a library that disabled interrupts for extended periods of time, the target users might not even care or know about it. For that market a SPI LCD would be enough – enough to put their video on youtube and show how cool they are.

Linux can do limited RT if you use linux RT (is there a target for RPi?). That doesn’t stop someone porting an embedded RTOS to it.

>Arduino framework is not RT as there are no max timing specs that say what to expect.

I don’t agree with you. Now if you don’t use the timing functions like millis() or if you let some libraries disable interrupts or if you’re doing some delay with a for() loop, or… then of course you’ll not master timing.

http://www.freertos.org/about-RTOS.html

>A real time requirements is one that specifies that the embedded system must respond to a certain event within a strictly defined time (the deadline). A guarantee to meet real time requirements can only be made if the behaviour of the operating system’s scheduler can be predicted (and is therefore deterministic).

i.e., RT = deterministic time with upper bounds for servicing interrupts/task switching/OS-level calls etc. Since Arduino doesn’t do multitasking, upper bounds on interrupt latency, and maybe on some core functions, are probably what matters.

delayMicroseconds(us) disables interrupts for the whole duration as it uses a software timing loop, thus interrupt latency can’t be deterministic if you are using it in your main code.

BTW millis() etc. use a hardware timer and no longer lock up the interrupts.
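To make the "upper bound" idea concrete, here is a simplified worked example of how a stretch of code with interrupts disabled feeds straight into worst-case interrupt latency. The function name and all of the microsecond figures are made up for illustration, and the model assumes each higher-priority interrupt fires at most once before ours is serviced.

```python
# Illustrative worst-case interrupt latency bound; all numbers are made up.
# Simplifying assumption: each higher-priority ISR runs at most once first.

def worst_case_latency_us(entry_us, longest_masked_us, higher_priority_isrs_us):
    """Upper bound on the delay before an interrupt handler starts running.

    entry_us: fixed overhead to enter the handler
    longest_masked_us: longest stretch the code runs with interrupts disabled
    higher_priority_isrs_us: run times of handlers that may be serviced first
    """
    return entry_us + longest_masked_us + sum(higher_priority_isrs_us)


print(worst_case_latency_us(1, 0, [5]))    # 6
print(worst_case_latency_us(1, 500, [5]))  # 506
```

In this model, a single 500 µs section with interrupts masked dominates the bound, which is the sense in which one careless library call can decide whether a deadline is met.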

Thank you for reminding me of the RT definition :-)

I’ve been doing hard real-time systems for a while now. I can tell you that even with a simple Arduino (in fact with any microcontroller) *it’s possible* to master the latency just with the right use of interrupts and interrupt priorities.

I have to check the built-in functions of the Arduino framework; I’m an Arduino beginner.

I don’t do Arduino code and would steer clear of anything that uses it. It isn’t worth my time. I was just trying to port something the other day and was reminded of how badly written it is. That delay function was something I came across.

I wrote some win32 messaging and threads code before. I ported an RTOS when I was new at it. I was comfortable with coding under an RTOS and actually enjoyed using it. I am just about to port it to a non-supported ARM series again as that’s well suited for what I’ll need it to do. I could have been lazy and just used Arduino crap like everyone else and might have got myself a better ranking in the contest, but the code base is full of busy polling crud and not suited for something that needs long battery life, and certainly not something I would entrust someone’s life to.

I agree with you, the little I’ve done with the Arduino makes me doubtful. I’m mainly using FreeRTOS on my projects. But Arduino is widely used in the hacker space!

Recently, I bought some Moteino boards for automating some devices in my home. My idea was to use ready-made boards and ready-made Arduino code to avoid designing new boards, code… I changed my mind when I tried to modify the code: poor IDE (but there is an Eclipse plugin), not OS friendly and, as you noted, what about sleep mode, battery consumption…

Arduino is for people who are not experts in C programming, and that’s a very good thing. A lot of people are doing very good mechanical and electrical projects using Arduino…

Gosh, there are some absurdly nonsensical projects here, but this one explores new realms of nonsense. If I have space, power and money for an HDMI monitor, then I have space, power and money for a Raspberry Pi or similar.

Arduino folks not wanting to learn Linux? Say what? Whatever they learn in Linux they can reuse in later projects (Linux has been around for 20+ years and I fully expect it to be with us for quite some time) or, banish the thought, to actually solve real problems. Yeah, learning to use a one-off, crippled GUI just to avoid Linux sounds like a tempting alternative.

I definitely don’t answer trolls, sorry.

Geeks everywhere cry out for a cheap, DIP IC capable of doing 720p HDMI.

I’ll take a dozen….

Since when was 720p considered “full HD”?

HD is not “full HD” ;-)

It sounds cool to be able to do this. The only issue I see is that (at least right now) the power requirements of an HDMI monitor are so large that I don’t see the point of a uC being able to drive one (the main benefits of a uC being power consumption and cost reduction).

I suppose if this could be made very cheaply in the future that would be great.

Wow. So someone does a complex electronics project that’s not another quadcopter/3dprinter/arduino and gets trolled down by the commenters because he could buy a cheaper thing made by someone else. On hackaday. It’s not the same crowd that used to hang out here. You guys suck, go buy an adafruit breakout board for a soic chip that you can’t solder yourself and be a part of the maker community™. Bah!

And monnoliv, amazing project!

I just wanted to second your sentiment. Seeing how people build things they “could just buy” is why I’m here.

For lower resolution (480×272) and 8-bit microcontrollers, the FT800 is a good alternative. Here’s what it’s capable of with an Arduino: https://youtu.be/2iy_yUQd4Mw