[Conrad] was tasked with building a synthetic aperture multispectral imaging device by his professor. It’s an interesting challenge that touches on programming, graphics, and just a bit of electrical engineering.

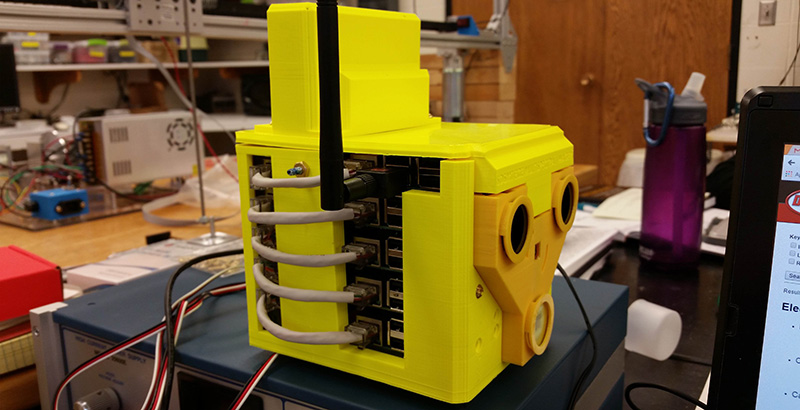

Tucked inside a garish yellow box that looks like a dumb robot are five Raspberry Pis, a TP-Link Ethernet switch, three Raspberry Pi NoIR cameras, and a FLIR Lepton thermal camera. With three cameras, different techniques can be used to change the focal length of whatever is being recorded – that’s the synthetic aperture part of the build. By adding different filters – IR pass, UV, visual, and thermal – this camera can record images in a huge range of wavelengths.

[Conrad] has come up with a completely modular toolbox that allows for a lot of imaging experiments. By removing the filters, he can track objects in 3D. With all the filters in place, he can narrow down which spectra he records. It’s a mobile lab, and we can’t wait to see what this little box can really do.

I don’t get the ‘synthetic aperture’ part. OK, it can capture a broader wavelength spectrum than a conventional camera, but synthetic aperture implies the stacking of several scenes (as in a radar application) to artificially synthesize a much bigger sensor base. The article is light on details, but I don’t see any such mechanism.

It’s not synthetic aperture, that’s why it doesn’t make sense.

It’s not synthetic aperture. If he had multiple time-of-flight cameras spaced apart at regular intervals, then he could gather phase data, and that would make it a synthetic aperture camera.

This is more like an imaging monochromator with multiple detectors.

Yeah, not enough info to figure out what he means or why. More to the HaD point, “why 5 Pis” would be nice.

Interesting project, but where is the synthetic aperture?

By combining the video from multiple cameras you are creating a ‘synthetic aperture’ in the same way you combine the input from multiple antenna positions in a SAR to enhance the resolution. Instead of moving the radar around you can use multiple receivers or cameras.

If the camera moves to create a large virtual “aperture” (as is done with moving-platform “synthetic aperture” radar), then you’ve got a synthetic aperture device (think of the “panorama” mode of your phone camera). Otherwise, what you’ve done is extend the spectral range by overlapping images from devices with different sensitivities, which would better be termed “synthetic broad-spectrum imaging”. While each camera contributes a bit of apertural area, the point of the build seems to be the range of frequencies rather than covering a larger area.

The radar equivalent of the camera would be a stationary, fixed-aperture device that used both microwave and long wave signals to understand more about the target (multi-frequency radar). These are used for things like polar ice sheet analysis, though the radar systems incorporate phase comparison and similar techniques which the camera shown can’t do.

[dictionary.com] Aperture: a usually circular and often variable opening in an optical instrument or device that controls the quantity of radiation entering or leaving it.

The article is correct.

“panorama mode” on the other hand has to do with field of vision which is determined by the optics, not the aperture.

Now that I think about it, “panorama mode”, while simulating something normally accomplished by optics, would in this case be considered a synthetic aperture. In any event, the term is still correctly used in this article because an “aperture” does not have to affect field of vision.

If you space antennas or receivers or lenses, you get the resolution of the greater aperture (spacing), but not the sensitivity of a single antenna or lens of that size. You also get the useful property that resolution is independent of range.
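For the radar case, the range independence can be sketched with the usual textbook stripmap SAR approximation. None of these symbols come from the article: $L$ is the physical antenna length, $\lambda$ the wavelength, $R$ the range, $L_{sa}$ the longest usable synthetic aperture, and $\delta$ the cross-range resolution. This is a rough derivation under those assumptions, not a description of what the camera box does.

```latex
% Real antenna beamwidth, and the ground strip it illuminates at range R:
\theta_{\text{real}} \approx \frac{\lambda}{L},
\qquad
L_{sa} \approx R\,\theta_{\text{real}} = \frac{\lambda R}{L}

% Synthetic beamwidth (the factor 2 comes from the two-way path),
% and the resulting cross-range resolution:
\theta_{sa} \approx \frac{\lambda}{2 L_{sa}},
\qquad
\delta \approx R\,\theta_{sa} = \frac{\lambda R}{2 L_{sa}} = \frac{L}{2}
```

The range $R$ cancels because a more distant target stays in the beam longer, so the synthetic aperture grows in proportion to the range.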

“used to change the focal length of whatever is being recorded”

what are you talking about????

Because there have been a few questions on the synthetic aperture part:

This video explains the application of a synthetic aperture well: https://www.youtube.com/watch?v=QNFARy0_c4w

This paper explains some of the nitty gritty: http://graphics.stanford.edu/papers/sap-recons/final.pdf

This Wikipedia article explains how you use an aperture to “blur out” occlusions: https://en.wikipedia.org/wiki/Depth_of_field#Derivation_of_the_DOF_formulae

Hope this helps!
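For anyone who wants to play with the idea, here is a minimal shift-and-add sketch of synthetic aperture refocusing in Python with NumPy. It is not [Conrad]'s code. It assumes the cameras sit in a horizontal row, are already rectified, and share one focal length. The function name `synthetic_aperture_refocus` and the parameters `offsets_m`, `focal_px`, and `depth_m` are made up for illustration.

```python
import numpy as np

def synthetic_aperture_refocus(images, offsets_m, focal_px, depth_m):
    """Average the images after shifting each one so that points at
    depth_m line up; anything at another depth gets smeared out.

    images    : list of 2D arrays of identical shape (hypothetical input)
    offsets_m : horizontal camera positions relative to the reference, in metres
    focal_px  : focal length expressed in pixels (assumed equal for all cameras)
    depth_m   : distance of the plane to bring into focus, in metres
    """
    acc = np.zeros(images[0].shape, dtype=np.float64)
    for img, x in zip(images, offsets_m):
        # Pinhole model: a camera moved by x sees a point at depth z
        # displaced by -focal_px * x / z pixels, so shift it back by that much.
        shift = int(round(focal_px * x / depth_m))
        # np.roll wraps around at the borders, which is fine for a sketch.
        acc += np.roll(img, shift, axis=1)
    return acc / len(images)
```

Call it with one image per camera and sweep `depth_m`: objects on the chosen plane stay sharp while occluders in front of it are averaged away, which is the “blur out” effect described above. A real rig would also need calibration and sub-pixel shifts.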