Last month, GitHub users were able to buy a special edition Universal 2nd Factor (U2F) security key for just five bucks. [Yohanes] bought two, but wondered if he could bring U2F to other microcontrolled devices. He ended up building a U2F key with a Teensy LC, and in the process brought U2F to the unwashed masses.

Universal 2nd Factor is exactly what it says on the tin: it doesn’t replace your password, but it does provide a little bit of extra verification to prove that the person logging into an account is indeed the person who should be. Currently, Google (through Gmail and Google Drive), GitHub, Dropbox, and even WordPress (through a plugin) support U2F devices, so a tiny USB key that’s able to provide U2F is a very useful device.

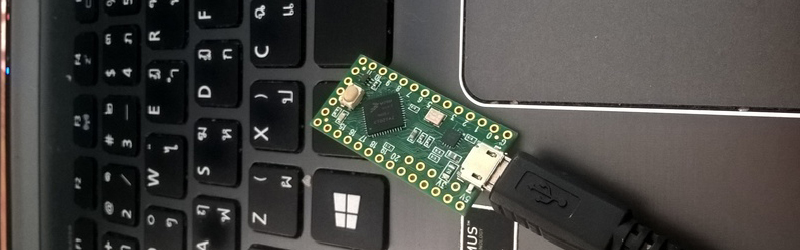

After digging into the U2F specification, [Yohanes] found the Teensy LC would be a perfect platform for experimentation. A U2F device is just a USB HID device, which the Teensy handles in spades. A handy library takes on ECC for both AVR and ARM platforms, and [Yohanes’] finished U2F implementation is able to turn the Teensy LC into something GitHub was selling for $5.

It should be noted that doing anything related to security by yourself, with your own code is dumb and should not be considered secure. Additionally, [Yohanes] didn’t want to solder a button to his Teensy LC, so he implemented everything without a button press, which is also insecure. The ‘key handle’ is just XOR encryption with a fixed key, which is also insecure. Despite this, it’s still an interesting project and we’re happy [Yohanes] shared it with us.

very very cool.

partly retracting that very very cool because of the key handle though… why not simply:

1) generate a UID and store it in the computer

2) make a SHA256 hash

If you’re talking about UUIDs or GUIDs, these ensure uniqueness, but do not guarantee randomness. They were purely designed to avoid collisions between disconnected systems, but didn’t guarantee unpredictability (and in some cases were deliberately predictable) in their sequencing. Even “v4” (random) GUIDs aren’t necessarily random enough that they aren’t predictable, although *some* libraries do use Cryptographically-Secure Pseudo-Random Number Generators to generate them.

Long story short, be very careful where the randomness comes from when generating keys. Relying on UUIDs/GUIDs for randomness is a common (but understandable) mistake in a lot of cases.
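As a small illustration of the distinction, here is a sketch in Python. The helper name `new_key_material` is made up for this example; `secrets.token_bytes` is the standard-library call that draws from the operating system's CSPRNG, while a v4 UUID is an identifier format with at most 122 random bits and no promise about where they come from.

```python
import secrets
import uuid

def new_key_material(n_bytes: int = 32) -> bytes:
    """Return n_bytes from the OS CSPRNG (hypothetical helper for this sketch)."""
    return secrets.token_bytes(n_bytes)

# A v4 UUID is fine as a unique identifier...
identifier = uuid.uuid4()

# ...but key material should be requested from a CSPRNG explicitly.
key = new_key_material()
```

The point is less about Python specifically and more about asking for cryptographic randomness by name instead of borrowing it from an ID generator.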

“It should be noted that doing anything related to security by yourself, with your own code is dumb and should not be considered secure.”

That’s a bit overbroad – the main risk in crypto is inventing your own algorithms or protocols without proper review. Implementing well-known protocols and systems has its own risks (timing attacks, for instance) but is a whole lot less risky, on the whole.

That said, he’s exporting the crown jewels to the calling system with only trivial obfuscation, so this particular implementation certainly is rather insecure, and needlessly so.

Agreed, thanks for getting that little snippet, was going to respond to it but you got to it first. =]

Quite the opposite, making your own thing is the ONLY way to be secure, the rest is either soon hacked or comes out of the box with a ton of holes on purpose.

Not just a bit over-broad, but also disappointing that this idea that the unwashed just aren’t qualified to try their hand at crypto, any crypto, keeps being promulgated by those either arrogant or with a hidden agenda. That idea that only large corporates or governments dare compile code with XOR is insulting.

It’s not about how well one knows crypto, it’s about understanding risk. This helicopter-mom mentality over cutting any code to do with crypto does a disservice to everyone but certain three-letter agencies. I say, dig in, get your hands dirty, and understand the risk that whatever you do should be considered utterly insecure. Don’t rely on it to hide anything important, and document that the code is experimental and its security is unverified.

Plenty of people cut insecure code that is released unverified, promoted as safe to use for real purposes, and not only given out but sold, and for some reason it is all okay because those people were collecting a salary. Understanding risk management (hint: it’s not black and white) is ultimately each person’s own responsibility in life, and if that concept is beyond you, having your Facebook account hijacked is likely the least of your problems.

If I’m understanding this correctly, using this would allow anyone to trivially U2F-authenticate to your accounts. The key handle, which is effectively publicly available, is simply your private key XORed with ” -YOHANES-NUGROHO-YOHANES-NUGROHO-“. Anyone can request the key handle, XOR it to get your private key and authenticate as you to the server.

So? Unless you download malware or someone has direct access to your machine or the dongle, you are probably fine. It’s not meant to be the end all security, it’s the security to add to your arsenal of security.

The handle is sent to the originating service, so anyone who compromises the service could recover the private key fairly trivially, which completely eliminates the point of using two-factor authentication.

They don’t even need to compromise the service, as far as I can tell – the key handle is given out to anyone who attempts U2F authentication. All someone has to do is attempt to U2F-authenticate to your account and if you’re using this as your second factor, that’ll give them your key handle and therefore your private key they need to U2F authenticate successfully as you.

No, each service gets a different key handle (or certainly ought to!), so you’d have to be able to impersonate the target service in order to get its handle.

I don’t think you’re quite following here. During authentication, the service sends the U2F key handle to whoever’s trying to authenticate to it, not the other way around. See the overview here: https://fidoalliance.org/specs/fido-u2f-overview-ps-20150514.pdf

Oh right, good point. I was thinking of the initial authentication flow, where the key generates the handle and it’s sent to the service.

Right,

But my point is, they still need your private key, no?

In this implementation, the handle _is_ the private key, weakly ‘encrypted’ with XOR against a constant string.
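To make that concrete, here is a minimal sketch of what XOR wrapping with a constant looks like. The 32-byte `FIXED_KEY` below is only a stand-in built from the string quoted earlier in the thread, and `wrap`/`unwrap` are hypothetical names, not functions from the project.

```python
import secrets

# Stand-in constant for illustration; trimmed to 32 bytes to match a 256-bit key.
FIXED_KEY = b"-YOHANES-NUGROHO-YOHANES-NUGROHO-"[:32]

def wrap(private_key: bytes) -> bytes:
    """'Encrypt' a private key into a key handle by XORing with the constant."""
    return bytes(p ^ k for p, k in zip(private_key, FIXED_KEY))

# XOR is its own inverse, so anyone who knows the constant can undo it.
unwrap = wrap

private_key = secrets.token_bytes(32)
key_handle = wrap(private_key)
recovered = unwrap(key_handle)
```

Since the constant ships in public source code, holding the handle is equivalent to holding the private key.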

Just to note: as far as I know, Yubico doesn’t mention if they keep the master key for each device or not. You can change the key to 64 bytes random value that ONLY YOU know (which should be random), so the “private key” part would be secure (unless someone has access to the U2F device itself).

“It should be noted that doing anything related to security by yourself, with your own code is dumb and should not be considered secure.”

Really? Honestly, anyone here can read the books and learn what is needed to be an IT security expert. It’s comments like that above that only serve to harm the hacker movement and dissuade people from learning and tinkering. I guarantee that with enough research there are several HAD readers here that can do their own security at a higher level than what most corporations rely on.

Although I think the warning’s a bit overbearing, it’s not as unreasonable as you seem to think: To paraphrase Bruce Schneier, anyone can devise a cryptosystem he himself cannot break.

Getting security right is hard. The best way to do it is to avoid inventing new stuff yourself unless absolutely necessary, and rely on others’ work that’s been tested already.

Years ago, I was asked to test a “new” crypto tool from a startup. The tool was designed to transfer files from user to user, so a public key system was devised. I was given the tool and within ten minutes of reading the source code I realized the developer had (IIRC) used Deflate with a fixed table, XORed the data, and packed it up. The public key was a reference to the table.

The kicker was the deflate tables were included with every copy of the software while the table reference was hidden within the packed file. All that was left was to discover what the XOR value was.

Ten minutes later, I created an automated tool to decrypt the files that could deduce the XOR value and rebuild the tables just from the file itself.

I was paid for my work but was not hired back for v2 of the released tool. I don’t believe they’re in business anymore.

Ten minutes? They should’ve hired you.

“honestly anyone here can read the books and learn what is needed to be an IT security expert.”

No – that’s exactly the issue. People who read the books and write code without experience and peer review make bad crypto – time and time again. There’s a ton of ugly math and non-obvious caveats and so on with doing it right, and no book is going to give you the skills alone – you need the books, plus experience, plus peer review, plus a truly rare mind, and even then it gets cracked in time. Making something that looks like crypto is easy, as is banging the bits together – but making something that is actually secure, and more importantly being able to tell the difference, is hard.

Anyone without public, peer-reviewed crypto who thinks crypto is easy is squarely in Dunning-Kruger territory. Go google it. (I exclude certain individuals at NSA and elsewhere who are reviewed internally by peers)

That’s not to say you cannot, and should not, play with crypto – playing is how you learn. But – depend on real crypto, which your crypto might be, someday, after it is published and peer reviewed – not before.

Disclaimer: I do IT Security (operations) professionally. I am by no means an expert – which is why I know how hard it really is. Being savvy enough with security and crypto and the state of the art to expand the field is a full time job, and then some. I am neither smart enough nor educated enough for that, and if you think you are and have not published – you probably aren’t. Sorry.

There’s a note in the article that I do not understand at all: “It should be noted that doing anything related to security by yourself, with your own code is dumb and should not be considered secure”.

So the mass-deployed products with known (sometimes for months or years, when it comes to government agencies) vulnerabilities are supposed to outperform a home-cooked, made-to-fit and custom solution?

In a word, yes. Why do you think a single person working on their own would be able to produce a product that’s more secure than a large team with lots of external review?

“Lots of external review” is a problem here. Windows and Linux, despite (or rather, because of?) having lots of external review, still get 0-day stuff that is secretly used and circulated among interested parties long before it finally gets patched up. When we were running large farms of servers in a mixed OS environment (ISP, so most of them directly exposed to the Internet), the longest standing were the exotic OSes that did not gain enough interest in the hacking community or were simply too different for known things to work. As simple as that. Especially in the context of the original note, I see nothing wrong with the idea of an individual protecting his/her own assets with a custom solution. When it comes to deployment in a commercial environment it is an entirely different story, though.

If your threat model is “nobody cares about me in particular, so they won’t expend the effort to try and compromise my system”, sure, you _might_ be slightly better off running something weird and unique. That’s not a good principle to secure a system based on, though – it’s literally security through obscurity.

Those obscure systems aren’t more secure than the well known ones you’re complaining about – they just get less attention, so the security holes they do have take longer to be exposed to the world as a whole. You’re less secure, you just don’t know it.

I like your end note so much that I am almost ready to drop any further discussion, yet I believe that the original warning, placed in a note about a single individual and addressed to the hacking community, is a little bit far-fetched.

Thanks Brian for another interesting article.

I wonder if one could do the same with a Pro Micro :)

Very good idea, might could have added the button but as long as it works, and is fun who cares.

I hate how the Hackaday writers are super negative. We all put a lot of effort into these projects and you cut us down. Why do we keep trying? Every time we send you something you repost it and capitalize from it. You could at least be nice to us? I have read Hackaday every day for over 5 years now and I keep seeing posts like this over and over again. This community needs positive reinforcement, not negativity. You are squashing creativity, and making some fear sharing their ideas. This has to stop.

What’s so negative about the post? I see a bit of constructive criticism at the end, but other than that it seems pretty evenhanded to me.

I am not offended at all by this article, but someone pointed out to me the use of the word “dumb” for implementing your own crypto.

I think it depends. Do you think it’s smart to give your trust to a company (Yubico) that knows your master key, email, delivery address, and your credit card information? A company consists of people, and you have to trust that all of them are honest.

Could a software publisher use U2F for copy protection? I.E. require a U2F dongle for their application to work.

Sure, though the U2F protocol specifically isn’t designed for this, the basic idea works, and software dongles are as old as the hills. They’re user-hostile, though, and trivial to bypass by modifying the software to not perform the check.

This would probably work quite well actually. Official U2F dongles have attestation keys embedded in them that can be used to prove to services that they’re genuine, official hardware that can be relied on to safeguard the keys they’re entrusted with.

“This is insecure, but its ok for me for testing.” – [Yohanes]

For anything more serious, reimplement Google’s Java U2F reference and use their test suites.

The Google U2F reference implementation uses some kind of “database” to map key handle to private key. This is secure, but you need EEPROM storage for every key handle (the Teensy LC only has 128 bytes of EEPROM).

My implementation also passes the U2F test suites by Google. But the test suites by Google don’t really cover the security quality of the implementation.

I bought the GitHub YubiKey for $5 but could never get it to work on either Windows or Linux.

I have added this text to my blog post:

Update: some people are really worried about my XOR method: you can change the key and make it 64 bytes long. It’s basically a one-time pad (XORing 64 bytes with some unknown 64 bytes). If you want it to be more secure: change the XOR into anything else that you want (this is something that is not specified in the standard). Even a Yubico U2F device is compromised if you know the master key. In their blog post, they only mentioned that the master key is generated during manufacturing, and didn’t say if they also keep a record of the keys.

Regarding the buttonless approach: it’s really easy to add them. In my code, there is an ifdef for SIMULATE_BUTTON. It will just pretend that the button was not pressed on first request, and pressed on second request. Just change it so that it really reads a physical button.

I’m sorry, but it’s not a one-time pad, because you’re using it repeatedly. If someone obtains two encrypted strings, they can XOR them together to get the XOR of the two plaintexts. From that, they can use the internal structure of the plaintexts to attempt to derive the plaintexts, and then simply XOR one with a ciphertext to get your key.
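The first step of that argument is easy to check. In this sketch the names `pad`, `p1`, `p2` and the helper `xor` are invented for illustration; the pad cancels out as soon as two ciphertexts made with it are combined.

```python
import secrets

def xor(a: bytes, b: bytes) -> bytes:
    """Byte-wise XOR of two equal-length byte strings."""
    return bytes(x ^ y for x, y in zip(a, b))

pad = secrets.token_bytes(32)   # the reused "one-time" pad
p1 = secrets.token_bytes(32)    # first plaintext (a private key here)
p2 = secrets.token_bytes(32)    # second plaintext

c1 = xor(p1, pad)
c2 = xor(p2, pad)

# The pad drops out: c1 ^ c2 == p1 ^ p2, with no knowledge of the pad needed.
combined = xor(c1, c2)
```

How much `p1 ^ p2` gives away then depends entirely on how much structure the plaintexts have, which is what the rest of the thread argues about.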

I am aware of the XOR attack. But the one that is encrypted is the private key which the attacker doesn’t know.

So what the attacker has is P1 XOR SECRET, P2 XOR SECRET, P3 XOR SECRET and so on. They don’t know any bytes of P1, P2, … PN; all of them are random.

I am happy if you can prove me wrong. I can send you 1000, a million or a billion encrypted texts if you can get my secret key.

Please see my earlier reply: the attacker doesn’t need to know the keys, as long as they’re not entirely random, which they’re not – there are invalid ECC key components. Using this, the attacker can determine what your key was.

I am aware of the XOR cryptanalysis if you have a clue regarding the plaintext. But in this case the plaintext is the private key itself.

So when you register the first time, you get a P1 (which is a 32-byte private key XORed with SECRET), next time you register you get P2 (which is another 32-byte private key, different from the first one, XORed with SECRET), and so on. There is no header, trailer or any structure in the key, just pure 256-bit numbers.

I am glad if you can prove me wrong, that you can get SECRET from P1^SECRET, P2^SECRET and so on, with P1, P2, … PN completely random. I can send you a million or a billion encrypted keys if you want to prove it.

The point is that if your cryptosystem depends on the randomness of the plaintext you’re encrypting, it’s a bad cryptosystem. Again, as per Schneier, anyone can design a cipher he himself can’t crack – your inability, or mine, to break it says next to nothing about its security.

Why would you use something that you know is weak, when it’s trivial to implement something much, much better?

To be secure, the key for each client when registering should be random. So the plaintext (the private key) is random; if this part is not random or can be predicted, then the XOR part is the least of our concerns. I am not a complete noob with ciphers, I’ve read a lot and solved many CTF crypto challenges.

I chose the XOR system for two reasons: first, it is easy to disable when checking my implementation (just XOR with 0), and second, it is simple but should be strong enough that no practical attack is feasible.

The components of an ECC private key are not uniformly random: they’re a series of random words, each of which has a maximum value defined by the curve being used. So, given several ‘encrypted’ private keys under your scheme, I can try out possible values for the shared secret key on a word-by-word basis. For each candidate, I decrypt the keys I have with it; if any of them are outside the valid range for that key component, I discard that attempt. With enough ciphertexts – really not very many, in all likelihood – I can narrow down the search space to the single shared secret you used.
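Here is a toy version of that filtering idea, scaled down to a single byte so it runs instantly. Everything in it is an assumption for illustration: `BOUND` stands in for the curve's upper limit and is deliberately far below 256 so the effect is visible, and `SECRET` is a one-byte stand-in for the shared XOR key. On a real curve the number of ciphertexts needed depends on how far the limit sits below a power of two.

```python
import random

BOUND = 200      # toy rule: a valid "key byte" is in range(BOUND)
SECRET = 0x5A    # hypothetical one-byte shared XOR secret

def surviving_candidates(ciphertexts):
    """Keep every candidate secret that decrypts all ciphertexts into the valid range."""
    return [s for s in range(256)
            if all((c ^ s) < BOUND for c in ciphertexts)]

# Simulate a device handing out many XOR-wrapped, range-limited values.
rng = random.Random(1)
ciphertexts = [rng.randrange(BOUND) ^ SECRET for _ in range(200)]

candidates = surviving_candidates(ciphertexts)
```

The true secret always survives the filter, and candidates that push any decrypted value out of range are discarded. In this toy some neighbours of the secret can survive too, so the filter narrows the search space without necessarily pinning down a single value.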

Again, the point isn’t whether or not _I_ was able to find a vulnerability. The point is that you’re using a scheme you _know_ is weak, just because you personally can’t see how that vulnerability applies to you. Anyone can devise a scheme they themselves can’t break, especially if you insist on using techniques you know are bad.

That’s the problem with rolling your own crypto – it’s non-obvious as to how broken it is. Does knowing the result of XORing two ECDSA private keys together allow you to obtain the private keys? Who knows, because this isn’t something security researchers have thought about, because why would anyone do that in the first place. How about the fact that there’s no integrity on the key handle, so an attacker can flip any combination of bits in the private key and request signatures with that modified private key – does that break it? Almost certainly, but it’s an obscure attack because it’s not like any well-designed system is going to let you do that.

So, I did some research and coding. Turns out that all it takes to extract the private key is 256 key signing requests with 256 modified key handles that each have a single bit flipped, plus 512 public-signature verification operations by the attacker in order to compute the original private key from the signing results. Once you’ve done that, you can recover the XOR key used to encrypt the private key, decrypt any other key handles to obtain their private keys too, and U2F authenticate as the device to any website where it’s registered. Oh, and an attacker can probably do this over the internet from a website that the user isn’t even registered on by requesting key handles from other services and modifying them.
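For readers who want to see the shape of such a bit-flip attack, here is a scaled-down sketch. It is not the commenter's code and it does not use ECDSA: it swaps in a toy Schnorr signature over a tiny group (`P`, `Q`, `G`), 8-bit private keys, and a made-up `XOR_SECRET`, all assumptions chosen so it runs in pure Python. The idea it illustrates is that flipping bit i of the handle changes the private key by plus or minus 2^i, and checking which shifted public key verifies the signature reveals that bit.

```python
import hashlib
import secrets

P, Q, G = 2039, 1019, 4   # toy group: G has prime order Q modulo P
KEY_BITS = 8              # toy key size (the real thing is 256 bits)
XOR_SECRET = 0xA7         # hypothetical fixed secret inside the device

def challenge(r: int, msg: bytes) -> int:
    digest = hashlib.sha256(r.to_bytes(2, "big") + msg).digest()
    return int.from_bytes(digest, "big") % Q

def sign(d: int, msg: bytes):
    while True:
        k = secrets.randbelow(Q - 1) + 1
        r = pow(G, k, P)
        e = challenge(r, msg)
        if e:                                  # avoid the degenerate e == 0 case
            return r, (k + e * d) % Q

def verify(pub: int, msg: bytes, sig) -> bool:
    r, s = sig
    return pow(G, s, P) == r * pow(pub, challenge(r, msg), P) % P

def device_sign(handle: int, msg: bytes):
    """Flawed device: XOR-unwraps whatever handle it is given, no integrity check."""
    return sign(handle ^ XOR_SECRET, msg)

def recover_private_key(handle: int, pub: int) -> int:
    d = 0
    for i in range(KEY_BITS):
        sig = device_sign(handle ^ (1 << i), b"probe")
        # If bit i was 0, the flip added 2**i, so pub * G**(2**i) must verify.
        pub_plus = pub * pow(G, 1 << i, P) % P
        if not verify(pub_plus, b"probe", sig):
            d |= 1 << i                        # the flip subtracted 2**i: bit was 1
    return d

true_key = 0x5C
handle = true_key ^ XOR_SECRET
recovered_key = recover_private_key(handle, pow(G, true_key, P))
recovered_secret = handle ^ recovered_key
```

This sketch does one verification per bit. Checking the minus-shifted public key as well would double that, which would line up with the 512 verifications mentioned above. Once the private key is known, XORing it with the handle yields the device secret, and every other handle falls with it.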

Don’t write your own crypto code, or this could happen to you.

Cool. I stand corrected.

Next time I will try to implement the algorithm as used by Yubico instead.
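A rough sketch of what that kind of scheme can look like, based on my reading of Yubico's public description of key wrapping: the private key is derived with HMAC from a device secret, the application ID and a random nonce, and the key handle is the nonce plus a MAC. The exact inputs, ordering and names (`DEVICE_SECRET`, `register`, `unwrap`) are assumptions for this example, and a real device would also have to check that the derived value is a valid private key for its curve.

```python
import hashlib
import hmac
import secrets

DEVICE_SECRET = secrets.token_bytes(32)   # hypothetical per-device secret

def _mac(*parts: bytes) -> bytes:
    return hmac.new(DEVICE_SECRET, b"".join(parts), hashlib.sha256).digest()

def register(app_id: bytes):
    """Return (key_handle, private_key); nothing per-site is stored on the device."""
    nonce = secrets.token_bytes(32)
    private_key = _mac(app_id, nonce)     # derived, never exported
    tag = _mac(app_id, private_key)       # binds the handle to this device and app
    return nonce + tag, private_key

def unwrap(app_id: bytes, key_handle: bytes) -> bytes:
    """Re-derive the private key, rejecting handles that were modified."""
    nonce, tag = key_handle[:32], key_handle[32:]
    private_key = _mac(app_id, nonce)
    if not hmac.compare_digest(tag, _mac(app_id, private_key)):
        raise ValueError("key handle failed integrity check")
    return private_key

key_handle, private_key = register(b"https://example.com")
```

Unlike the XOR approach, the handle here contains no encrypted copy of the key, and a handle with flipped bits is rejected before anything is signed.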

Old thread…. but i only found it now.

I created a similar project for stm32 uCs – https://github.com/avivgr/stm32-u2f

I currently use the expensive STM Discovery dev board. But ultimately it can use something like a $2 Blue Pill STM32 board or a Maple Mini….

BTW I export the private key using AES… which is possible since i use mbed-tls lib which has AES encryption