With the ability to run a full Linux operating system, the Intel Edison board has more than enough computing power for real-time digital audio processing. [Navin] used the Atom-based module to build Effecter: a digital effects processor.

Effecter is written in C, and makes use of two libraries. The MRAA library from Intel provides an API for accessing the I/O ports on the Edison module. PortAudio is the library used for capturing and playing back audio samples.

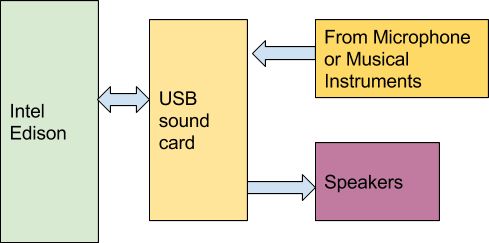

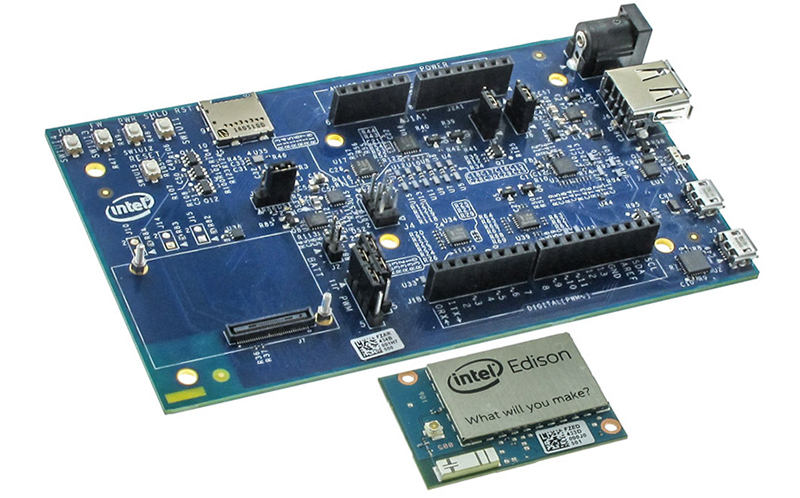

To allow for audio input and output, a sound card is needed. A cheap USB sound card takes care of this, since the Edison does not have built-in hardware for audio. The Edison itself is mounted on the Edison Arduino Breakout Board, and combined with a Grove shield from Seeed. Using the Grove system, a button, potentiometer, and LCD were added for control.

The code is available on Github, and is pretty easy to follow. PortAudio calls the audioCallback function in effecter.cc when it needs samples to play. This function takes samples from the input buffer, runs them through an effect’s function, and spits the resulting samples into the output buffer. All of the effect code can be found in the ‘effects’ folder.
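As a rough illustration of that callback flow, here is a minimal sketch in C. It is not taken from the Effecter repository: `effect_fn`, `hard_clip`, and `process_block` are hypothetical names, and the PortAudio stream setup is left out so the block compiles on its own. In a real program the loop in `process_block` would sit inside the stream callback that PortAudio invokes whenever it needs more samples.

```c
#include <stddef.h>

/* Hypothetical effect signature: one sample in, one sample out. */
typedef float (*effect_fn)(float sample, void *state);

/* Example effect (an assumption, not from the Effecter repo):
 * hard clipping at +/- limit, where state points to the limit. */
float hard_clip(float sample, void *state)
{
    float limit = *(const float *)state;
    if (sample > limit)
        return limit;
    if (sample < -limit)
        return -limit;
    return sample;
}

/* Process one block of mono float samples.
 * This is the per-invocation work an audio callback would do:
 * read from the input buffer, apply the effect, write to the output buffer.
 * Returns 0, the value PortAudio uses to mean "keep the stream running". */
int process_block(const float *in, float *out, unsigned long frames,
                  effect_fn fx, void *state)
{
    for (unsigned long i = 0; i < frames; i++) {
        /* Treat a missing input buffer as silence. */
        float s = (in != NULL) ? in[i] : 0.0f;
        out[i] = fx(s, state);
    }
    return 0;
}
```

Keeping the effect behind a function pointer means a different effect can be selected by swapping the `fx` argument, without touching the buffer-handling loop.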

You can check out a demo of Effecter applying effects to a keyboard after the break. If you want to build your own, an Instructable gives all the steps.

I skimmed the instructable, but found no info on latency. Is it realtime?

Hi,

It is real time. The effects themselves are simple. If you check the effects implementation (https://github.com/navin-bhaskar/Effecter/tree/master/effects), no effect function runs longer than 25 lines of readable code.

Well, how many lines of code it is does not really say much about how fast it runs… Anything above ~20ms of latency is unusable for live audio.

That’s right. It was meant to give an idea of the complexity level, as in the number of instructions that would be generated.

The real pain points are the multiplications and all those calls to the sine functions. This could be mitigated using lookup tables (and I am not using a lookup table in the code itself).

make that any* look up table

If the YT video and audio are in sync, and at 1:56 it seems so, it has hundreds of milliseconds of latency, which makes it unusable for anything, serious or not.

That is because of the setup I am using. I am using the keyboard in MIDI mode, and the computer (which is running Ableton) is not capable of generating a real-time piano tone. Have a look at this other video https://www.youtube.com/watch?v=5RVHdKsuG_k (you will have to read through the annotations to know what is going on).

Have you actually measured the total signal latency, for example with an oscilloscope on the analog input and analog output?

Unfortunately, no. I do not have one ;)

Just connect the output to the input and let it self-oscillate: the measured frequency will tell you the latency, since the two are inversely proportional. Remember to set the speaker volume to minimum first!

So if you can run Linux, you can do real time digital audio processing? Nice to know that that’s the limiting factor.

I did the initial development on a Linux laptop, right up until I added the button, pot, and LCD to the project. All effects were developed and tested on that Linux laptop.

My older i7 4 core, running Ubuntu Studio which has Jack and a light rt kernel, could get below 20ms for certain effects that just affected line in sounds. Getting it to generate audio from MIDI was also quick (low 20s) but if I started chaining things it went pretty high pretty fast. But, I was using most of the tools in GUI mode.

You can even go much lower than that. Today the biggest problem is setting up a proper kernel+Jack+tools environment on non-dedicated distros. It took me some hours on Debian, but I wanted to use Reaper and some Windows plugins under Wine through ASIO emulation. Works like a charm with very low latency, just a few clicks here and there when playing heavy synth instruments, but I’m on a 7-year-old Core2 Duo PC.

Things are getting better and better on the Linux side, the only problem is making them doable for non tech guys, I mean non linux-techies. There is still a lot to do in that field.

I have a rpi2 and a cheapo ($2) USB sound dongle from China running a 4 oscillator fm-synth with 18 voices (using 3 cores to calculate the audio) at 48 kHz sampling with 96 samples latency (2 milliseconds) and about 50% cpu headroom per used core. Your i7 can obviously beat this.
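The arithmetic behind figures like these is simple enough to sketch in C. The helper names `buffer_latency_ms` and `max_frames_for_budget` are hypothetical, and the calculation covers only the delay of a single sample buffer; converter delay, USB transfer, and any extra driver buffers add to it, so a measured round trip will be higher.

```c
/* Latency contributed by one buffer of `frames` samples,
 * in milliseconds, at the given sample rate in Hz. */
double buffer_latency_ms(unsigned long frames, double sample_rate_hz)
{
    return 1000.0 * (double)frames / sample_rate_hz;
}

/* Largest buffer size, in frames, whose single-buffer delay
 * stays within the given latency budget in milliseconds. */
unsigned long max_frames_for_budget(double budget_ms, double sample_rate_hz)
{
    return (unsigned long)(budget_ms * sample_rate_hz / 1000.0);
}
```

With these helpers, 96 frames at 48 kHz works out to 2 ms, matching the number quoted in the thread, and the roughly 20 ms ceiling mentioned earlier corresponds to a single buffer of at most 960 frames at the same rate.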