In 2003, nothing could stop AMD. This was a company that had grown from a semiconductor firm built around second-sourcing Intel designs in the 1980s into a Fortune 500 company a mere fifteen years later. AMD was on fire, and with almost a 50% market share of desktop CPUs, it was a true challenger to Intel’s throne.

AMD began its corporate history like dozens of other semiconductor companies: second sourcing dozens of other designs from dozens of other companies. The first AMD chip, sold in 1970, was just a four-bit shift register. From there, AMD began producing 1024-bit static RAMs, ever more complex integrated circuits, and in 1974 released the Am9080, a reverse-engineered version of the Intel 8080.

AMD had the beginnings of something great. The company was founded by [Jerry Sanders], an electrical engineer at Fairchild Semiconductor. When [Sanders] left Fairchild in 1969, [Gordon Moore] and [Robert Noyce], also former Fairchild employees, had formed Intel just a year earlier.

While AMD and Intel shared a common heritage, history bears out that only one company would become the king of semiconductors. Twenty years after these companies were founded they would find themselves in a bitter rivalry, and thirty years after their beginnings, they would each see their fortunes change. For a short time, AMD would overtake Intel as the king of CPUs, only to stumble again and again to a market share of ten to twenty percent. If it only takes excellent engineering to succeed, how did AMD fail? The answer is Intel. Through illegal practices and ethically questionable engineering decisions, Intel would succeed in becoming the current leader of the semiconductor world.

The Break From Second Sourcing CPUs

The early to mid 1990s were a strange time for the world of microprocessors and desktop computing. By then, the future was assuredly in Intel’s hands. The Amiga with its Motorola chips had died, and Apple had switched over to IBM’s PowerPC architecture but still had only a few percent of the home computer market. ARM was just a glimmer in the eye of a few Englishmen, serving as the core of the ridiculed Apple Newton and the brains of the RiscPC. The future of computing was then, like now, completely in the hands of a few unnamed engineers at Intel.

The cream had apparently risen to the top, a standard had been settled upon, and newcomers were quick to glom onto the latest way to print money. In 1995, Cyrix released the 6x86, a processor that was able – in some cases – to outperform its Intel counterpart.

Although Cyrix had been around for the better part of a decade by 1995, their earlier products were only x87 coprocessors, floating point units and 386 upgrades. The release of the 6x86 gave Cyrix its first orders from OEMs, landing the chip in low-priced Compaqs, Packard Bells, and eMachines desktops. For tens of thousands of people, their first little bit of Internet would leak through phone lines with the help of a Cyrix CPU.

During this time, AMD also saw explosive growth with their second-sourced Intel chips. A deal between AMD and Intel penned in 1982 set up a 10-year technology exchange that gave each company the rights to manufacture products developed by the other. For AMD, this meant cloning 8086 and 286 chips. For Intel, this meant developing the technology behind the chips.

1992 meant an end to this agreement between AMD and Intel. For the previous decade, OEMs gobbled up clones of Intel’s 286 processor, giving AMD the resources to develop their own CPUs. The greatest of these was the Am386, a clone of Intel’s 386 that was nearly as fast as a 486 while being significantly cheaper.

It’s All About The Pentiums

AMD’s break from second-sourcing with the Am386 presented a problem for the Intel marketing team. The progression from 8086 to the forgotten 80186, the 286, 386, and 486 could not continue. The 80586 needed a name. After what was surely several hundred thousand dollars, a marketing company realized pent- is the Greek prefix for five, and the Pentium was born.

The Pentium was a sea change in the design of CPUs. The 386, 486, and Motorola’s 68k line were relatively simple scalar processors. There was one ALU in these earlier CPUs, one bit shifter, and one multiplier. One of everything, each serving different functions. In effect, these processors aren’t much different from CPUs constructed in Minecraft.

Intel’s Pentium introduced the first superscalar processor to the masses. In this architecture, multiple ALUs, floating point units, multipliers, and bit shifters existed on the same chip. A decoder would shift data around to different ALUs within the same clock cycle. It’s an architectural precedent for the quad-core processors of today, but a completely different idea. If owning two cars is the equivalent of a dual-core processor, putting two engines in the same car would be the equivalent of a superscalar processor.

By the late 90s, AMD had rightfully earned a reputation for having processors that were at least as capable as the Intel offerings, while computer magazines beamed with news that AMD would unseat Intel as the best CPU performer. AMD stock grew from $4.25 in 1992 to ten times that in early 2000. In that year, AMD officially won the race to a Gigahertz: it introduced an Athlon CPU that ran at 1000 MHz. In the words of the infamous Maximum PC cover, it was the world’s fastest street legal CPU of all time.

Underhandedness At Intel

Gateway, Dell, Compaq, and every other OEM shipped AMD chips alongside Intel offerings from the year 2000. These AMD chips were competitive even when compared to their equivalent Intel offerings, but on a price/performance ratio, AMD blew them away. Most of the evidence is still with us today; deep in the archives of overclockers.com and tomshardware, the default gaming and high performance CPU of the Bush administration was AMD.

For Intel, this was a problem. The future of CPUs has always been high performance computing. It’s a tautology given to us by unshaken faith in Moore’s law and the inevitable truth that applications will expand to fill all remaining CPU cycles. Were Intel to lose the performance race, it would all be over.

And so, Intel began their underhanded tactics. From 2003 until 2006, Intel paid Dell in exchange for not shipping any computers using CPUs made by AMD. Intel held OEMs ransom, encouraging them to only ship Intel CPUs by way of volume discounts and threatening OEMs with low priority during CPU shortages. Intel engaged in false advertising and misrepresented benchmark results. This came to light after a Federal Trade Commission settlement and an SEC probe disclosed Intel paid Dell up to $1 Billion a year not to use AMD chips.

In addition to those kickbacks Intel paid to OEMs to only sell their particular processors, Intel even sold their CPUs and chipsets for below cost. While no one was genuinely surprised when this was disclosed in the FTC settlement, the news only came in 2010, seven years after Intel started paying off OEMs. One might expect AMD to see an increase in market share after the FTC and SEC sent Intel through the wringer. This was not the case; since 2007, Intel has held about 70% of the CPU market share, with AMD taking another 25%. It’s what Intel did with their compilers around 2003 that would earn AMD a perpetual silver medal.

The War of the Compilers

Intel does much more than just silicon. Hidden inside their design centers are the keepers of x86, and this includes the people who write the compilers for x86 processors. Building a compiler is incredibly complex work, and with the right amount of optimizations, a compiler can turn code into an enormous executable which is capable of running on the latest Skylake processors to the earliest Pentiums, with code optimized for each and every processor in between.

While processors made a decade apart can see major architectural changes and processors made just a few years apart can see changes in the feature size of the chip, in the medium term, chipmakers are always adding instructions. These new instructions – the most famous example by far would be the MMX instructions introduced by Intel – provide the chip with new capabilities. With new capabilities come more compiler optimizations, and more complexity in building compilers. This optimization and profiling for different CPUs does far more than the -O3 flag in GCC; there is a reason Intel’s compilers have a reputation for producing the fastest code, and it comes down to this per-processor optimization and profiling.

Beginning in 1999 with the Pentium III, Intel introduced SSE instructions to their processors. This set of about 70 new instructions provided faster calculations for single precision floats. The expansion to this, SSE2, arrived with the Pentium 4 in late 2000 and greatly expanded floating point calculations, adding support for double precision floats. AMD was obliged to include these instructions in their AMD64 CPUs beginning in 2003.

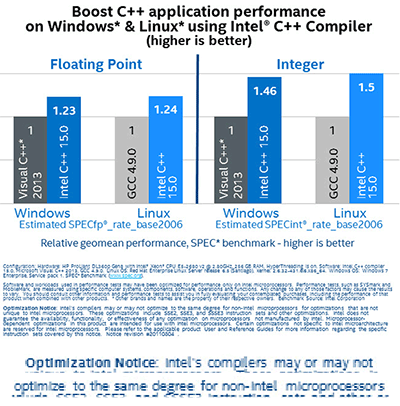

Although both Intel and AMD produced chips that could take full advantage of these new instructions, the official Intel compiler was optimised to only allow Intel CPUs to use these instructions. Because the compiler can make multiple versions of each piece of code optimized for a different processor, it’s a simple matter for an Intel compiler to choose not to use improved SSE, SSE2, and SSE3 instructions on non-Intel processors. Simply by checking if the vendor ID of a CPU is ‘GenuineIntel’, the optimized code can be used. If that vendor ID is not found, the slower code is used, even if the CPU supports the faster and more efficient instructions.
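The difference between the two dispatch policies can be sketched in a few lines of Python. This is an illustration only, not Intel's code: the `Cpu` class, both `pick_path_*` functions, and the sample feature sets are hypothetical names and made-up data chosen to mirror the description above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Cpu:
    """Hypothetical view of a CPU as a runtime dispatcher might see it."""
    vendor: str          # 12-character vendor ID string reported by the chip
    features: frozenset  # instruction set extensions the chip says it supports


def pick_path_by_vendor(cpu: Cpu) -> str:
    """Policy described in the article: the vendor string gates the fast path."""
    if cpu.vendor == "GenuineIntel" and "sse2" in cpu.features:
        return "sse2"
    return "generic"


def pick_path_by_features(cpu: Cpu) -> str:
    """Vendor-neutral policy: only the reported feature flags matter."""
    if "sse2" in cpu.features:
        return "sse2"
    return "generic"


# Two made-up chips that report exactly the same capabilities.
intel = Cpu("GenuineIntel", frozenset({"sse", "sse2"}))
amd = Cpu("AuthenticAMD", frozenset({"sse", "sse2"}))

for cpu in (intel, amd):
    print(cpu.vendor, pick_path_by_vendor(cpu), pick_path_by_features(cpu))
```

Under the first policy the AMD chip lands on the generic path despite reporting SSE2; under the second, both chips take the same path.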

This is playing to the benchmarks, and while this trick of the Intel compiler has been known since 2005, it is a surprisingly pernicious way to gain market share.

We’ll be stuck with this for a while. That’s because all code generated by an Intel compiler exploiting this trick will have a performance hit on non-Intel CPUs. Every application developed with an Intel compiler will always perform worse on non-Intel hardware. It’s not a case of Intel writing a compiler for their chips; Intel is writing a compiler for instructions present in both Intel and AMD offerings, but ignoring the instructions found in AMD CPUs.

The Future of AMD is a Zen Outlook

There are two giants of technology whose products we use every day: Microsoft and Intel. In 1997, Microsoft famously bailed out Apple with a $150 Million investment of non-voting shares. While this was in retrospect a great investment – had Microsoft held onto those shares, they would have been worth Billions – Microsoft’s interest in Apple was never about money. It was about not being a monopoly. As long as Apple had a few percentage points of market share, Microsoft could point to Cupertino and say they are not a monopoly.

And such is Intel’s interest in AMD. In the 1980s, it was necessary for Intel to second source their processors. By the early 90s, it was clear that x86 would be the future of desktop computing and having the entire market is the short path to congressional hearings and comparisons to AT&T. The system worked perfectly until AMD started innovating far beyond what Intel could muster. There should be no surprise that Intel’s underhandedness began in AMD’s salad days, and Intel’s practices of selling CPUs at a loss ended once they had taken back the market. Permanently handicapping AMD CPUs through compiler optimizations ensured AMD would not quickly retake this market share.

It is in Intel’s interest that AMD not die, and for this, AMD must continue innovating. This means grabbing whatever market they can – all current-gen consoles from Sony, Microsoft, and Nintendo feature AMD chipsets. This also means AMD must stage their comeback, and in less than a year this new chip, the Zen architecture, will land in our sockets.

In early 2012, the architect of the original Athlon 64 processor returned to AMD to design Zen, AMD’s latest architecture and the first that will be made with a 14nm process. Only a few months ago, the tapeout for Zen was completed, and these chips should make it out to the public within a year.

Is this AMD’s answer to a decade of deceit from Intel? Yes and no. One would hope Zen and the K12 designs are the beginning of a rebirth that would lead to a true competition not seen since 2004. The product of these developments is yet to be seen, but the market is ready for competition.

https://np.reddit.com/r/pcmasterrace/comments/3s5r4d/is_nvidia_sabotaging_performance_for_no_visual/cwukpuc

Not sure why all the Intel bashing. They simply have better parts; invested billions per year in advanced fabs (now 14nm); have excellent compiler technology to leverage advanced instruction sets; expanded into ancillary markets away from PCs; make superb solid state memories (see Cross Point); develop new products like mini PCs (NUCs) and outmarketed and outsold AMD at every turn in the last ten years. Even with the Zen vs. Skylake, Zen will not succeed because they are just too far behind the Intel curve. Some company (Nvidia?) should gobble up AMD, remove the best of its technology, and let the rest go. Put AMD out of its misery.

Skylake? That’s the one breaking under heatsinks, right?

That’s like saying we shouldn’t get new iphones cuz’ they are slimmer and bend easier. ;)

Except based on the legal filings that isn’t true at all. Stating facts is not bashing. Much of Intel’s success came from far more questionable investments than new fabs.

How naive. In a previous Opteron era (when Intel and co pushed Itanium – remember that better part?) AMD reigned supreme.

Yes we all bask in Intel’s R&D but you should thank AMD for x64 that started over a decade ago. They had their knees taken out by a company dealing in underhanded business practices.

Watch out ARM…

Intel is where it is because of the dirty practices they performed. They’re not Intel bashing, these are facts. They should also include how they reverse engineered the AMD Nehalem FX chips when they bought the AMD Fab to create the first Core i7 at the Nehalem plant and then immediately closed the plant after they manufactured the first set of Core i7 chips.

Sure, intel invested a lot (A LOT) of money to further the progress of lithography equipment but it’s not all intel. TSMC and Samsung also invest a lot in the same company doing all the lithography development (ASML)

Intel isn’t intrinsically better – Except at playing Monopoly.

In addition to the compiler shenanigans, much of their recapture of the market can be attributed to Intel cock-blocking AMD on the newer process sizes. They’ve been gaining exclusive licensing to the lithographic technology that allowed them to do the last few <32nm process shrinks. It's only now that AMD found a 'workaround' to license the IP through a 3rd party, who is reselling the license to them, that they have been able to tape out the 14nm chips.

It's shenanigans all the way down at Intel. Unfortunately for AMD, when it comes to Intel's obvious monopolization – What you can prove in reality, and what you can prove in court are two different things.

People dislike Intel because they used underhanded and illegal methods to put their competition in a place where they couldn’t viably compete. And that sucks for the consumer because competition results in better, cheaper products.

It’s 2019 now. AMD has 56% desktop CPU share now, faster multithreading and higher I.P.C. than Intel. Just thought I’d let you know. 😁

Queue fan war in 3 2 1 …

But seriously I still buy AMD. $180 for an 8 core 4GHz gives me plenty of performance at a decent price. The biggest downside is the heat production, stock cooler didn’t work for crap.

8 cores sounds great until you realize AMD 4GHz = Intel 2.8GHz in real world, and on top of that people do NOT write multi threaded code, so you end up with maybe 2-3 cores doing actual work.

Your Ghz comparison is a bit misguided, as it is application specific. It also depends on the intel CPU you are comparing it to. I7 definitely has better single core performance than the FX series, I5 slightly better, while the FX is still significantly cheaper than both and outperforms the i3. (this is based on some benchmarks I looked at a while back when shopping) The fastest I5 i could find on Newegg was 3.5ghz quad core at a cost of 239. There was only one 8 core i7, coming in at just over $1K. An I7 quad core 4ghz comes in at 339. My 8 core FX at 4ghz is currently only 169. Performance per dollar, I still believe I got a better deal.

Also, as far as cores, it depends what you are doing. Battlefield 4 saturates all 8 cores. Slic3r can also be configured to an arbitrary number of cores. And while most applications may be single threaded, the point is that you are never running one thing at a time.

But all in all it depends what you are doing. Doing some scientific calculations where the extra throughput is invaluable? Better go with the $1K I7. Me playing games and slicing some models for printing, FX seems just fine.

Actually the I5,I3,and even pentiums all outclassed AMD as far as single threaded performance is concerned. Look up the Dolphin Emulator benchmarks. AMD only wins in heavily multithreaded benchmarks and even then the I5 and I7(haswell and later) still win a majority of the time even though they have half the cores. IPC matters a lot. Although I’m really interested in how well Zens IPC gains are.

AMD FX cores are different than Intel cores. An AMD FX 8 core is really a quad core with 2 clustered threads, making each thread work on separate tasks, unlike the i7 that has both threads per core work on the same task. AMD Zen will be implementing a similar thread design to the i7, but because of Intel’s patent on hyper threaded cores AMD is forced to call each thread an individual core.

“AMD FX 8 core is really a quad core ”

That’s incorrect.

I can’t help but point out that the comparable i7 to an ‘8-core’ FX would be a 4 core i7. The 8 core FX chips are actually 4 core chips with 8 threads. They use similar technology to hyperthreading with differences they believe define more individual cores, that’s debatable though. The 8 core i7 is literally an 8 core processor, with a total of 16 threads. That being said, I don’t think the performance between 8 thread i7 and FX chips is a big difference and I think the FX series are a good deal

I can’t quite help but point out that this is wrong. They’re “similar” in nature, but completely different. Intel’s HyperThreading is not two full and true separate integer units with a FPU tied at the hip. It’s a scheme to present a virtual CPU with pipeline tricks. AMD’s designs present two full integer execution units with a FPU that is dispatched to much like the coprocessors of older times. The simple understanding is that they’re “similar” in nature, but the truth is that if you have a pure integer instruction stream, the AMD design will have an edge over the Intel one under ideal circumstances. Instead of a peak of 30%, you’d see a 80-90% increase, if your compiler doesn’t do inimical things to your cache, pipeline, etc. In AMD’s case, your description’s crap, because it’s not even close.

“The 8 core FX chips are actually 4 core chips with 8 threads. ”

That’s incorrect. The FX has 8 cores and 4 FPUs.

Unless you `make -j9`. That’s one of the few things that actually use all your cores.

Yes, for me especially. I mostly just use vim for an editor, and Firefox/Thunderbird for web and email. But every now and then I need to compile a different version of something (like a newer version of avr-gcc) and adding the -j flag makes ‘make’ a lot more fun to watch. :)

I have a 8-core 4Ghz FX processor.

You’re saying you primarily use a text editor, surf the web and send and receive e-mail most of the time — all of which could be easily handled by a 500MHz CPU from the 90’s. This is true for a huge number of users worldwide, especially if web sites didn’t load their pages down with ad brokers and hidden tracking scripts.

This business of who is leading the performance “race”, who has more cores, and whose compiler ekes out a 50% gain is senseless. Nobody except professional video editors need it.

Myself, I buy AMD on principle. I’d compute on a used K6-II before I’d buy an Intel product. For the past several years they’ve had the same mindshare as the RIAA for me: pariahs. I refuse to ever deal with them again.

@Randall Krieg

Unfortunately, JavaScript is loading down modern browsing a lot. Some websites which use heavy client-side scripting like Angular.js etc. probably wouldn’t even work if you disabled JS. So unfortunately yes, I think nowadays a fast CPU is required.

I think multicore helps a lot as well, since if you have a single core and something locks it up, your whole system freezes. On multicore, just one core freezes while the others take over and the OS and other applications can run. I didn’t do any benchmarks or anything like that to confirm, but I’m quite sure it makes sense.

I can share some of my experiences with running a 700 MHz Pentium III Coppermine in around 2012 (a while ago). It worked with an ATi Radeon 9200SE AGP card or some sort of PCI graphics card (older ATi or maybe S3 Virge or something like that). I wanted to use this setup for a HTPC, but it didn’t work very well.

It didn’t handle HD video decoding very well. It did decode, but there was (from what I remember quite frequent) stuttering during playback. I think back then I only tried decoding below-HD quality streams (it would’ve been at least something like 360p or 480p, but maybe HD as well) as I didn’t have a HDTV, but in either case they probably wouldn’t have worked too well if I did try them.

The HTPC-oriented distros and self-created setups (Geexbox 2.0 Enna, Debian+XBMC) were not fluid enough even when it came to navigating the HTPC shell itself (without playing video), but that might have been more because of the low-power graphics card for the AGP/PCI slot and not the CPU itself.

Window managers and desktop stuff were fine, as I ran a 2012 Linux distro on 1998 hardware (Pentium III Coppermine 700MHz), so the software was not period-correct. Unfortunately browser performance and video decoding made it somewhat impractical for most use cases. Now that the RPi 2 has been out for a couple of years or so, its performance for the power consumed probably makes the whole thing even less economical than it was back when the RPi was still getting started.

@Randall Every now and then I do something that requires a faster CPU, such as run Atmel Studio in a Windows VM while also compiling some random software package that hasn’t been updated in the repos recently enough. :) And Atmel Studio prefers a fast computer…

Compiling Linux kernels on AMD FX 8 core, more like -j 12 to 16 and done in less than 50 seconds (hybrid drive. SSD might be a bit faster the first time). I don’t measure an increase after 12 but one would think twice the number of cores would be good. Things to do can be lined up or in cache while hard drive access is happening.

Huge RAM makes a big difference for me, like 18 or 24G. A second compile with a couple small changes is mighty quick. I’m guessing loads of the source is cached. Is there a way get all the source in RAM and compile from there? Not as a pseudo RAM disk with emulated drive access, but straight up RAM resident source code?

Well, aside from that, the 8 integer cores on the AMD whip through text processing (compiling a kernel) with pretty amazing quickness. I suspect the 4 FPU’s are rarely called on.

The clock rate comparison and statement about multi-threaded code really do not make sense. I cannot speak for you, but I typically do not run a single process at a time. Maybe you should look in to a multitasking operating system?

I dont know about you, but when I play games I usually run only one application at a time.

Even things like browsing the web – there are no multithreaded javascript engines.

You run one application at a time, but chances are that app has multiple processes. Also, that app is running in an OS with many, many tasks running in separate processes. Unless your game is running on the bare metal with no OS whatsoever, you are definitely using more than 1 process.

If you are using Windows, open ‘Task Manager’ and count the number of processes running even before opening any application. You can do the same using the ‘top’ command in Linux. A modern operating system is multitasking and distributes its tasks across any available processing nodes (cores and threads within each core). The bottleneck of a modern computer is not processing power but DRAM access bandwidth. As far as games are concerned, the big processing is graphics rendering, which is done by the GPU, so 4 or 8 CPU cores shouldn’t make much difference.

I might be running 100 processes at the same time, but 99 of them take 1% of processing power while the last one (my game) takes 99%.

or more likely 99 processes take 1%, game takes 50-70%, and the rest is unused thanks to imperfect scaling.

Plenty of games pretend to scale up to 8 cores, but when you look deeper all the cores run at ~30% utilisation while the game is still cpu bottlenecked.

I usually run multiple processes, but frequently I am waiting for just one in particular.

http://www.fmg.sk/relax/wp-content/uploads/2015/02/theory-programing.jpeg

More accurately, the photo should depict the dogs waiting for the master filling the plates (DRAM filling L2 caches).

Although this is probably more like the reality

https://www.youtube.com/watch?v=D7GbYmfuDDE

Oh god I can’t stop laughing! That video is the greatest explanation ever!

You are dead on about the frequency performance per core. 4.0 AMD FX is Equivalent to 3.2 AMD Phenom but Windows 10 utilizes all threads and cores, but the FX is not a true eight core, it is a quad core with 2 CMT threads per core.

The AMD FX is a quad core renamed quad module two threads per module with 2 modules sharing the same FPU. There are only 2 128bit FPUs in the FX 8 core not 4. Before Bulldozer was released in 2011 AMD released several videos of the architecture.

Cue grammar nazi comment.

I’m liking these kinds of articles HaD. Keep em comin

Maybe a dumb question, but: why didn’t AMD build a compiler for their AMD chips?

Even if they did, that doesn’t exactly help when all the closed-source software out there is being built with the opposition’s compiler. You’re still stuck with a sabotaged Fallout 4 executable.

A better question is why they haven’t built a programmable CPU ID. Perhaps it is even just a numeric code and no law against copying Intel ID directly?

Yeah. A customizable CPU ID would be pretty nice. While Intel may be able to stop them from setting it to GenuineIntel, I don’t think they can prevent customers from being able to set it to whatever they want (including GenuineIntel).

That would probably cause AMD CPUs to become a lot more popular.

Copyright and DMCA. Intel owns the copyright to the ID, and copying it would circumvent Intel’s “protection” from others copying the function of the compiler.

It’s 09 F9 11 02 9D 74 E3 5B D8 41 56 C5 63 56 88 C0 All over again.

A CPU instruction is a binary number. Many instructions need extra binary numbers for the instruction to make sense. For example, 0xe9 is a jump instruction opcode in x86. But the instruction needs more data to tell the CPU how to change the address in the instruction pointer register to jump to the new place in the code.
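As a rough illustration of that "extra data", here is a small Python sketch that decodes the 5-byte near jump form: opcode 0xE9 followed by a 32-bit little-endian signed displacement, measured from the end of the instruction. The function name `decode_jmp_rel32` and the sample addresses are invented for this example, and the sketch assumes 32-bit code with a 32-bit operand size.

```python
import struct


def decode_jmp_rel32(code: bytes, address: int) -> int:
    """Return the target of a 5-byte `jmp rel32` that sits at `address`.

    Assumes 32-bit code: opcode 0xE9 plus a signed 32-bit displacement that is
    added to the address of the *next* instruction.
    """
    if len(code) < 5 or code[0] != 0xE9:
        raise ValueError("not a jmp rel32 instruction")
    (displacement,) = struct.unpack_from("<i", code, 1)  # signed, little-endian
    next_instruction = address + 5                       # opcode + 4 data bytes
    return (next_instruction + displacement) & 0xFFFFFFFF  # wrap to 32 bits


# Made-up example: a forward jump and a jump back onto itself.
forward = bytes.fromhex("e90b000000")   # displacement +11
backward = bytes.fromhex("e9fbffffff")  # displacement -5

print(hex(decode_jmp_rel32(forward, 0x1000)))   # 0x1010
print(hex(decode_jmp_rel32(backward, 0x1000)))  # 0x1000
```

The opcode byte alone says "jump"; the four bytes after it say where.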

Those extra instruction sets like SSE2 are Intel specific, many of them are not duplicated by AMD. You can sort of think of them as a co-processor that is built into the CPU. One of those instructions might be 0x803791 which could be a valid instruction, but different on an AMD CPU, or be total garbage and cause a CPU exception.

Even if you set that CPUID to GenuineIntel, you would not have the silicon necessary to decode and execute those instructions.

The VIA Nano has programmable CPUID, which helped find Intel compiler bad manners :

http://ixbtlabs.com/articles3/cpu/via-nano-cpuid-fake-p1.html

Still, Sega had a similar issue back, I think in the early 1990s. Megadrive software checked for “Copyright Sega” in a game before it would run it. Other game developers took them to court, and won the right to include “Copyright Sega” in their games, even though the copyright didn’t belong to Sega. It was seen as a functional code that was necessary for the product to work. Dunno if they made it a precedent, but it’s almost exactly the same principle here. Aside from the fact that law mostly works on money, on principle they may well be able to do it.

That said, if this is the case, why does anyone use Intel’s compiler? There are others. Don’t Microsoft sell most compilers nowadays? Or what about GCC? There are plenty of customers out there with AMD CPUs, enough of a percentage that I wouldn’t want to lose it for a game I was spending millions on producing.

I presume the compiler’s been changed now, or at least that software houses know better. So it’s only software from a few years ago affected, and that should still run fast enough anyway on modern CPUs.

Don’t suppose Intel have had to pay any compensation for all this monopolism? Microsoft did a similar thing back in the early Windows days, back when other OSes existed. If a supplier endeavoured only to sell Windows, none of the alternatives, they’d get a better pricing deal. Since the business is competitive, paying full price for Windows would cost a lot of sales, so they went along with it. I think that’s part of the famous case against MS in the 1990s, you know, the one that basically changed nothing.

@Matt

Actually many of the Intel instruction set extensions have been implemented by AMD, in fact AMD has been the first with several, and several were not duplicated by Intel (3DNow! being an early example). There are also a few cases of Intel and AMD instructions that serve the exact same function but are neither compatible nor available on the others’ processors.

This is all a bit beside the point though, since applications aren’t supposed to use the vendor string alone to determine what instructions are available. The CPUID instruction also provides detailed information on available features, and the (e)flags register also has a few feature bits that are even older than CPUID.
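A rough Python sketch of what that feature information looks like once the CPUID results are in hand. It does not execute CPUID itself; the register values below are made-up sample data, and `vendor_string` and `decode_features` are hypothetical helper names. The bit positions are the documented ones for CPUID leaf 1 (EDX bit 23 MMX, bit 25 SSE, bit 26 SSE2; ECX bit 0 SSE3), and leaf 0 returns the vendor ID in EBX, EDX, ECX order.

```python
import struct

# Feature bit positions in the registers returned by CPUID leaf 1.
EDX_FEATURES = {"mmx": 23, "sse": 25, "sse2": 26}
ECX_FEATURES = {"sse3": 0}


def vendor_string(ebx: int, edx: int, ecx: int) -> str:
    """Leaf 0 packs the 12-byte vendor ID into EBX, EDX, ECX (in that order)."""
    return struct.pack("<III", ebx, edx, ecx).decode("ascii")


def decode_features(edx: int, ecx: int) -> set:
    """Return the names of the extensions whose feature bits are set."""
    found = {name for name, bit in EDX_FEATURES.items() if (edx >> bit) & 1}
    found |= {name for name, bit in ECX_FEATURES.items() if (ecx >> bit) & 1}
    return found


# Sample data only: leaf 0 registers for an AMD part, leaf 1 bits for SSE3-class hardware.
ebx, edx0, ecx0 = struct.unpack("<III", b"AuthenticAMD")
edx1 = (1 << 23) | (1 << 25) | (1 << 26)
ecx1 = 1 << 0

print(vendor_string(ebx, edx0, ecx0))         # AuthenticAMD
print(sorted(decode_features(edx1, ecx1)))    # ['mmx', 'sse', 'sse2', 'sse3']
```

A dispatcher that keys off `decode_features` never needs to look at the vendor string to know whether SSE2 is safe to use.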

“Sabotaged” executable? Are you *high*? The vast majority of games are either built with Microsoft’s Visual Studio build tools or GCC. IC++ is a niche compiler that’s hardly ever used in the game industry.

This biased article makes it seem like ICC is some massive industry juggernaut that everyone uses, and that couldn’t be further from the truth.

AMD didn’t have a staff of compiler writers. They eventually bought a compiler from a compiler company, and open sourced it, it is now known as Open64 and is still available, but still rarely used, as most of its best parts were integrated into gcc I believe.

Relatively few developers used the Intel compiler. I don’t know of any commercial “shrink-wrap” software for MS-Windows or MacOS / OS X that was compiled using Intel’s compilers. AFAIK the only market where its usage was common was in High Performance Computing (HPC) aka Supercomputers. Their Fortran compiler was better supported for a number of years, but the C/C++ compiler did catch up as more HPC source code was written in C/C++.

Having seen inside the beast that is Intel, I have seen that they do work with MS to optimize performance. The MS compiler team does get help from Intel.

Intel spends more on R&D than AMD’s entire gross. This is a nice narrative, but I think something crucial is missing.

Could this have been because Intel stonewalled them from the OEM market, crippling their income and their ability to generate funds to dump into R&D? Think about how many millions if not billions of dollars AMD lost out on because Intel paid companies (e.g. Dell) to not sell AMD’s products.

No, it’s because I have cooked eggs on AMD’s CPUs and had them explode; on Intel’s I can’t get the egg to cook and the software won’t crash. My choice was clear when I got 2nd degree burns from a Cyrix, had my AMD die when the cooling fan failed because of wire entanglement, and the Intel kept going even with no heatsink. To this day I admire AMD from a distance but won’t sink any money in an inferior product even if that means Intel is a monopoly. I have NEVER had any of my core business servers die due to CPU issues when running Intel gear. I have had several AMD systems develop “issues”. That’s not to say that I haven’t had systems die, just not from the CPU.

How does your lack of kitchen skills translate into a viable argument?

They did have a small team in Portland contributing optimizations to GCC, but it was not enough. Doing an optimizing compiler from the ground up (like Intel did) is a huge investment, taking many experienced and supremely talented PhD-level people. It’s not just another perl/php/ruby web app, ya know? Plus Intel had a large head start. This is something that takes years of performance analysis, tuning and continual refinement.

I still buy AMD.

Yes, their CPUs are not as good as Intel, but they are cheaper, much more affordable, and generally more hack friendly.

Intel may rule the performance roost, but AMD will always have my support. I just built a quad core Mini ITX system from AMD for under $300 and it plays Kerbal Space Program with ease. Money well spent.

^ This. Exactly.

AMD has my support for this reason, and for the reasons outlined in the article. Intel is a turd hole of shysters and swindlers, and has been for as long as I can remember. I’ll happily live with a slightly slower AMD CPU. It’s better for my conscience.

I’ve only recently moved from AMD back to Intel, for hardcore number crunching Intel is the only player in town at the moment. I’ll happily move back to AMD or to ARM if they can catch up.

SDR probably is one application where turbo mode (overclock all cores for a quick application start time, and then underclock by a lot until the heat has dissipated) is a very bad thing.

Click on “processor” at http://stats.gnuradio.org/ and you’ll see AMD does not get a look in.

You may say use GPUs, but they are junk in the area that is of interest to me. It takes far too long to get the data to the GPU, and back from it. I hope AMD makes a comeback, but right now for me they are the underdog.

Performance is a per-dollar issue, unless you’ve got unlimited money. All my PCs have been AMD, except this one, which I chose based on an even comparison. Intel’s prices have had to catch up a lot with AMD in the mid-range market, where AMD have always done their best.

I remember when AMD were third-place after Cyrix. Cyrix made cheap but pretty horrible CPUs, with less cache than the competitors, their 486 had 1K where the competitors had 8K. It made a lot of difference.

Also had an AMD 386SX-40. Was maybe half as fast as a 486-33. Sure it was the fastest 386 made, but suffered a lot. Particularly because, as clock speeds went up, RAM at the time was still severely limited. You needed cache, and the 386 had none on-board. Some motherboards included it, but only high-end ones from when the 386 was new. When it became low-end, expensive cache wasn’t worth including, so it spent a fair bit of time free-wheeling waiting for RAM.

I suppose back then the few thousand transistors you’d need for a K or 2 of cache were significant chip usage. My PC now has more memory on-board the CPU chip than my first and second PCs had in total RAM put together.

Now I remember the 486 “write-back” cache chip scandal! Cache chips on motherboards, that were solid plastic with pins attached! No silicon in there at all. The fuckers! Unfortunately I had one of the boards in question. Damn Chinese bandits, any way to rip people off, they’ll do it.

There’s a really good writeup of the PC Chips 486 fake cache fiasco here:

http://redhill.net.au/b/b-bad.html#fakecache

The really sneaky bit was the BIOS modification: it would pretend to allow the user to enable Write-back Cache, but never actually did, which hid the fake chips deception! They even soldered the BIOS chip to the board so you couldn’t easily remove it to discover the fraud.

So Intel’s dispatch function can be overridden, levelling the Intel / AMD playing field. Any C / C++ developer should give this excellent PDF a read, but the instructions on overriding dispatch are on page 131. http://www.agner.org/optimize/optimizing_cpp.pdf

x86 becoming the dominant ISA is so not a case of the cream rising to the top but of marketing over merit.

Today it is an example of how, if you throw enough money at a pig, you can make it fly. You can thank AMD for that as well.

x86’s success is the result of an enormously successful Intel marketing campaign; it was called CRUSH.

https://www.youtube.com/watch?v=xvCzdeDoPzg

The ISA is decent enough, and the overall price/performance of Intel was better than anything else. Intel has also been pretty good at keeping backwards compatibility, which is very valuable to the market.

Over the years x86 CPUs have really been RISC cores, with a hardware instruction decoder converting x86 into their real native code. They switched back to native CISC at one point when that became faster again. But just imagine how powerful PCs would be now, with all this advanced silicon technology, without the x86 ISA sat on top like 6 tons of concrete.

I’m not sure why nobody’s built an ARM that runs at 3GHz or more, 1.6GHz seems to be about the limit, for some reason. I’d like to know why.

Nowadays people are coding for multiple cores, and there’s really no limit to how many of those you can have in a computer. Even if you run out of silicon on one chip, dual and quad-chip motherboards have been around forever, they still use them for servers, they could come to the desktop if need be. There’s plenty of room to the side!

The extensions to the x86 architecture to make it 64-bit came from AMD. Had it not been for those guys, 64-bit computing would never have come to the masses as it has today, and we would have been stuck with the IA-64 architecture, which was not backward compatible with x86. Even Intel ultimately had to license their instruction set.

I’m surprised the AMD64 architecture didn’t get more coverage in the article, this deserved more than just a name mention.

Absolutely, can we also hear about how the Athlon 64 beat the P4 into the ground like the little bitch it was? In my opinion Intel CPUs weren’t worthwhile until 2006 with the Core architecture. Up until then I was in the Mac world enjoying high-end PPC silicon (G4/G5, I was spoiled, wanna fight about it?) and those machines took a massive dump on all my friends’ P4 systems, even up to the Prescott.

The Core CPUs aren’t that great. Core 2 is where Intel started getting it back. I have a Core 2 Quad that is still pretty stinking fast, and the 2.5GHz Core 2 Duo in my laptop from 2008, with 4 gig RAM and an nVidia GPU with its own 256 meg RAM, runs Windows 10 quite nicely.

Intel DID NOT license x86/64bit from AMD, Intel reverse engineered it and they own their version and pay AMD NOTHING.

AMD over paid Billions for ATI but they can’t find the funds to create their own compiler, REALLY, and then they send out their misinformed puppets to cry about Intel’s compiler.

The New World Order, ARM vs Intel, Debt Laden AMD NOT NEEDED, Debt Doomed AMD is Expendable.

There is a cross licence agreement between AMD and Intel for all the instructions they invent in x86 (including SSEx, all the new SIMD stuff, and the 64-bit mode).

Intel cross licensed AMD64 and in return cross licenses x86 to AMD without question.

Intel did NOT cross license AMD64. They reverse engineered their own version (google it), just like AMD reverse engineered 32-bit x86 without a license from Intel. The cross license is lawsuit protection and covers extensions, but it has expired. Now Intel can add extensions WITHOUT sharing them with AMD; this is HUGE for Intel and DOOMING for AMD.

After years of running AMD and building systems for others with AMD, I gave up. I want a system that runs cool, and the latest Intel processors do a better job. I paid about $100 more, but spread over 3 or 4 years it’s not that much. I really hope AMD gets back in the game.

Brian, you’ve overblown the compiler thing. Yes, you could use a VIA C3? processor to demonstrate Intel compiler cheating (VIA let you define the CPUID manually), BUT, and this is a big butt – almost NOBODY used the Intel compiler on the desktop. Games and apps? Almost all compiled with the MS compiler.

>Am386, a clone of Intel’s 386 that was nearly as fast as a 486

Clock-wise, not MIPS-wise :) Plus AMD was sued for copying Intel microcode verbatim and AFAIR lost. The Am386 wasn’t fast because it was clever; it was fast because AMD clocked it faster while Intel had already moved on to the 486 and stopped releasing faster 386s.

The nineties were a time when AMD was in DEEP trouble: their 486 clones were slower than Intel’s, and their Pentium clones (K5) were plain TERRIBLE. AMD lacked internal talent; NexGen was a godsend acquisition. It wasn’t the first, and definitely not the last, time AMD got rescued by bringing in a third party. Athlon also wasn’t a fully home-grown design, it was a reengineered DEC Alpha built by a team of former DEC engineers led by a former DEC lead engineer :)

PS: an Am386DX-40 was my first x86. I ran on a mix of overclocked Celerons (300A@450 – 766@1100) and Durons/Athlon XPs up to ~2007. Never used a Pentium 4 in my life and proud of it. Intel Core killed AMD, Sandy Bridge buried it so deep there is almost no hope. AMD simply doesn’t make any sense anymore :(. The console deal is the only profitable part of their business, even the GPU unit is losing money.

Throwing away $400m on SeaMicro? Wasting 2 years on Bulldozer? Another 2 years on ARM? All abandoned by now. AMD is the Commodore of this decade – some good engineering, some terrible engineering, and the WORST MANAGEMENT EVER.

I hope Zen turns out to be decent, and doesn’t suck like everything AMD did in the last 7 years :( They need to at least equal Intel i3 IPC.

Some AMD-Intel background can be found here: https://www.youtube.com/watch?v=XWJrsM4DxDU

Intel Crush campaign: https://www.youtube.com/watch?v=xvCzdeDoPzg

AMD didn’t clone the Pentium or the 486 to make the K5. They used an old friend, the AMD 29000, which was a true RISC CPU, and modified the microcode to match x86 instructions. From that we can conclude two things:

The K5 is REAL RISC and the Pentium is not.

AMD was competing with Intel using a 10 (or more) year old product, intended to be a microcontroller, which performed near Pentium level at lower cost. Also, AMD invested far fewer resources developing the K5 than Intel did developing the Pentium, so the K5 was a “small victory”.

I was noticing that this article did not mention the 29K series too – that was a very cheap and __very__ well designed family of ICs. The AMD designers have serious bragging rights even to this day.

From Wiki:

https://en.wikipedia.org/wiki/List_of_AMD_Am2900_and_Am29000_families

Didn’t AMD also have an ARM chip a few years back that they sold to Qualcomm?

Might you mean DEC and Intel? (StrongARM)

Not ARM, but MIPS. You might be thinking of the Imageon graphics core that came along with the ATI acquisition and was later sold to Qualcomm, becoming the Adreno GPU used in many mobile SoCs.

AMD bought Alchemy Semiconductor in the early ’00s for some reason (set top boxes?) and sold them about 5 years later after doing absolutely nothing with those products. Alchemy made a MIPS32 compatible SoC.

Is there anything in Zen that will help AMD get by the Intel compiler issue?

there is no intel compiler issue, NOBODY uses intel compilers on the desktop

i keep seeing this, no one uses the intel compiler, not sure why thats being said (or how people know), it’s totally untrue, i’ve been using the intel compiler for a decade at lots of large game dev studios. have been using it along with vtune, tbb etc. it used to blow away the other compilers on the market.

its got a drop in to replace the standard ms compiler, and has for ages, makes it dead easy to use.

It has been a while since I’ve looked at id’ing the compiler used for executables in commercial software, but at the time I didn’t have a single executable on my system that I could id as not being Visual Studio, or gcc, and couple of other minority compilers, but no Intel. This was some time ago, circa 2005-2010 I think, but matched comments from others at the time.

I think the two examples given of commercial software compiled using Intel’s compiler were MATLAB, or similar professional number crunching software, and a 3rd-party Photoshop plugin. No one mentioned games, and I didn’t find any on my system at the time I checked.

I am not surprised by the usage of Intel development tools, VTune in particular, at a game studio, but I haven’t heard of shipped game executables being compiled by the Intel compiler. I would also be a bit surprised to hear of a game developer using TBB (Threading Building Blocks) because, AFAIK, TBB has always been quite clear in its strong Intel bias.

TBB was introduced into the unreal engine around 2010 iirc, there was a big intel press release with it, and epic. they partnered on their tool sets.

Intel has been sending out compiler engineers and such to game devs for as long as i can remember, they’re (As the article is about) the dominant force.

TBB is also used in a lot of commercial renderers (ours uses it)

As a past game engine developer, I have written code that uses different paths based on the processor it’s running on. It’s not difficult to do at all. We’ve known about and worked around the bias for a long time, and if you can simply load a different library or code path to gain better performance, why wouldn’t you? There is fluff in the article too: even the old version that didn’t do as well on AMD still performed better than other compilers.

My AMD hex core is more than 5 years old.

Not a single game on the market has made it lag or forced me to drop quality.

It was bought on a low budget.

Now correct me if I’m wrong, but didn’t Microsoft end up trying to bury or “crush” Linux too?

either you are lying, optimistic and delusional, not a gamer, or own a very weak GPU

pick one or more of the above :(

DirectX is SINGLE THREADED (dx12 will finally be multithreaded). This forces games to cram all GPU state updates into a single thread; AMD single-threaded performance SUCKS and kills game framerates. This is why Mantle was developed (and already abandoned).

my $30 Intel G3258 @4.4GHz murders AMD in most games (except modern ones finally optimised to use more than 2 threads).

as for you, try World of Tanks, good luck :))) this is a single threaded game (written in Python :P :D)

directx 9 is single threaded, so one thread to the gpu, dx11 is one core per core gpu, dx12 is many core to gpu.

s/not a gamer/not a very specific kind of gamer that spends a lot of money for not a lot of game/;

WOT is a blatant example of a company being too cheap (even when they can swim in the profits) to just buy a better engine and instead keep kludging the crap one that seems to have been written for something of the P4/core Duo era…

Take a look at how Warthunder looks (and plays) and how much crunching power it needs to do it.

Of course, that may be one of WoT’s selling points – being able to play a game that doesn’t require the latest and greatest graphics cards and processors to have a worthwhile experience. I am firmly convinced that much of the drivel the sequel-machines at these companies pump out as “games” is simply a marketing tool to sell this year’s graphics card.

>and already abandoned

In other words it was integrated into vulkan which was then copied by microsoft to turn it into dx12.

I have been an AMD fanboy since the k6 days and I hate to say it but the next time I build a new computer I will probably go with an Intel cpu. AMD has been lagging behind with their enthusiast cpus the last few years, and unless their next cpu recaptures the socket A days (e.g. 90% performance for 50% price) I’ll be going with an intel processor.

I’ll still root for AMD though. Hopefully all those modified A6’s they are selling to microsoft and sony will keep them going.

“AMD was on fire, and with almost a 50% market share of desktop CPUs, ”

I have never seen that claim before, does anyone have suitable references?

nope, because he just made it up :)

Intel had everything, CPU, chipset, interfaces, and the already existing culture.

AMD had CPUs, most of them copies of Intel’s designs with something new thrown in. Chipsets were from SiS or VIA, and when you put everything together, there was always something missing with AMD.

Maybe it was the thing that Intel actually had more to say in all the standards than AMD, so in essence, they, AMD, were always playing catch-up to Intel.

Intel could undercut AMD’s prices so most of the pc manufacturers would go for Intel, together with the fact that, run side by side, the amd’s always tended to get a little left behind by the intels.

Since Intel had more integrated, CPU and chipset integration, they were better able to deal with timing issues and bugs than AMD. (And Intel also had some stupid bugs on some motherboards, but go try to find some info on that now in this commercial internet age, it’s as good as impossible, though still possible)

Also AMD was going for the lower market, with more problems for cpu’s, less quality motherboards, less quality power supplies, bad capacitors, which intel also had to deal with, yet I guess, a bigger problem for AMD.

and yes amd also had some of their own chipsets, all of which had their own annoyances with standards, mostly at the time USB, but also some PCI and AGP quirks, (btw Intel also had a few of them, yet they were better hidden, and obfuscated in software, the amd’s sometimes had a patch you could download, for windows )

to summarize, i’m still working on an intel pc, over ten years old, still going strong, was originally used in a shop for over eight hours a day, only added some memory and a different (nvidia) graphics board, still has the same psu. wonderful machine! whilst the amd machine that was bought was acting up already after a year, giving machine check exception errors in windows, with no hardware modifications whatsoever, (maybe it overheated because the heatsink got clogged up with gunk or maybe it was pebkac) has already been decommissioned since it has become too sluggish and too prone to failure.

enjoy the rest of your evening.

My quad i7 3.6ghz 5 year old desktop is as fast as anything I can buy right now brand new. Processor speeds have been stagnant for 5 years now.

If Intel wants more of my money, give me 8 cores at 5GHz. Most software is still single threaded, so we need far faster processor clocks to see any speed differences. The biggest boost I found was using an unauthorized patch to permanently disable hyperthreading. This gave me the full processor clock speed for single-thread apps and made a huge difference for me.

Plus HT off lowers temps so I can overclock it to 4ghz and leave it there.

What is needed is a utility to manually assign cores for specific apps to use when launched.

what you need is called “task manager” and it’s been around for some time now…
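For anyone who would rather script it than click through Task Manager, here is a minimal sketch using Python’s `os.sched_setaffinity`. That call only exists on platforms that expose it (Linux, mainly), and the helper name `pin_to_cores` is made up for this example.

```python
import os

def pin_to_cores(cores, pid=0):
    """Restrict process `pid` (0 means the calling process) to the given core indices.

    Only cores the process is currently allowed to use are kept.
    Returns the affinity set that is in effect afterwards.
    """
    if not hasattr(os, "sched_setaffinity"):
        raise NotImplementedError("os does not expose CPU affinity on this platform")
    allowed = os.sched_getaffinity(pid)
    wanted = set(cores) & allowed
    if not wanted:
        raise ValueError(f"none of {sorted(cores)} are available; allowed: {sorted(allowed)}")
    os.sched_setaffinity(pid, wanted)
    return os.sched_getaffinity(pid)
```

Child processes inherit the affinity, so calling this just before launching an app keeps that app on the chosen cores. On Linux the `taskset` command does the same job from a shell.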

It’s actually not at all as fast as machines you can buy today. Intel has been steadily improving its IPC with each new generation of i7. A modern Skylake-based i7 at 3.6GHz will positively run rings around your first-gen i7 at 3.6GHz. Sorry that you’re one of those people who thinks clock rate is all that matters.

Little issue since you mention PSU… isn’t it about time PSUs came out that support 1.8V, or 1.1V, or a configurable voltage? Putting a whole extra SMPS on motherboards, with dozens of amps demanded, was a kludge to start with, now it looks ridiculous. I know case manufacturers tend to lag behind a bit with technology, but all that extra stuff is really something that belongs in the PSU.

It’s not easy at all to transfer over 100A at such a low voltage over even a few inches.

Call me naive, but wouldn’t a bit of thick copper wire do? With maybe an extra power plug on the motherboard? It is only a few inches, and would save the complexity, expense, and weight, of having so much power circuitry right next to the microchips.

You could manage the voltage drop with feedback, which is how SMPS works anyway.

yes, you are naive! it’s hundreds, not dozens! And i would prefer a mobo manufacturer to handle my CPU voltage than a cheap chinese PSU maker… What if they skimp on the caps and fry your cpu in a few days? What about the connectors? 100A connector? If there was a cheaper alternative, it would have been chosen already.
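Rough numbers for the wire argument, as a sketch rather than a design. It assumes room-temperature copper (resistivity about 1.68e-8 Ω·m), a hypothetical 10 cm run each way (20 cm round trip), a 10 mm² conductor, and 100 A at 1.1 V. It ignores connector contact resistance and fast load transients, both of which matter in practice.

```python
COPPER_RESISTIVITY = 1.68e-8  # ohm * metre, approximate room-temperature value

def wire_loss(current_a, length_m, area_mm2, rho=COPPER_RESISTIVITY):
    """Return (resistance in ohms, voltage drop in volts, dissipated power in watts)."""
    resistance = rho * length_m / (area_mm2 * 1e-6)  # R = rho * L / A
    drop = current_a * resistance                    # V = I * R
    power = current_a ** 2 * resistance              # P = I^2 * R
    return resistance, drop, power

# Hypothetical case: 100 A over a 0.20 m round trip of 10 mm^2 copper, 1.1 V rail.
resistance, drop, power = wire_loss(100, 0.20, 10)
drop_fraction = drop / 1.1
```

Under those assumptions the wire alone drops about 34 mV and burns about 3.4 W, roughly 3% of a 1.1 V rail, before counting any connector. At several hundred amps the drop scales linearly and the heat quadratically.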

AMD lost because of the management. The engineers do not want to share an FPU between every 2 cores; they want 8 cores and 8 FPUs.

The fools that run the company are the main problem with AMD.

As an Ex-AMDer, I can’t agree more. Great engineers, terrible strategy.

Ultimately that depends on the demands of software, no use in having FPUs around if they’re not doing anything. Which is an analysis engineers should make, of course.

Yeah, that’s a very fair assessment. A lot of their managers and CEOs were just plain terrible. I was shocked when I read the history of what they did. Hector Ruiz did an interview where he said that he fought with Jerry Sanders about wasteful spending and the unnecessary construction of a fab that would just be a financial drain (now Globalfoundries) and how things could have been better if they’d just been smarter with their money, and that’s only just off the top of my head.

I feel like Rory Read did well and now Lisa Su has been doing well so far, but I guess we’ll have to see if it’s too little, too late. PC gamers and PC buyers in general haven’t really seen an Intel / Nvidia monopoly yet (for CPUs and GPUs), and I think that many people who are glib about mocking AMD aren’t really thinking about how terrible it would probably be if AMD ever went under, or became a console and custom / semi-custom enterprise provider that stopped making consumer stuff.

Look at every monopoly out there (especially serious bad actors like Comcast) to see how bad it could get.

Not really, most of the consumer software uses the Integer cores, not the FPU unit.

Yeah, as long as you exclude games from “consumer software”. Idiot.

So, we know games are FPU-bound on the processors in question? It makes sense to remove components that aren’t used, with games there’s always a bottleneck somewhere, so some parts will go unused. Less parts = better yields and cheaper prices. They have to make a profit after all. Not like Microsoft made all their money through being the best at writing software, other things are more important in a marketplace.

Idiots like you make me laugh LOL

Nice pic, but the tank would’ve been better with a laser turret, rather than the explosive-projectile thing they’ve got there. Or maybe some coils for a Gauss gun. Railgun too, but railguns don’t really look like anything special from the outside.

two words:

1. powersaving

2. driver

We are past the era of the laptop; now we are in the mobile phone era.

Also, the driver from AMD is closed source crap, and was always a piece of crap.

So they missed the server market, missed the laptop market (and also the two year lifespan netbook market), and they also missed the mobile phone market (although intel missed too).

So they are trying to sell processors to desktop pcs and video cards to desktop pcs.

Basically the niche desktop gamer market.

Can’t believe anyone is wondering why they are sinking like a submarine.

Correct me if I’m wrong (may very well be the case), but do the linux compilers also neglect to optimize for AMD? What makes me wonder, is when I see binary packages with either i686 or amd64 in their filenames. If this means “built for Intel” or “built for AMD”, then another factor enters in here — linux. I know linux doesn’t have desktop market share, so this may not matter in the larger scheme of things, but it does dominate servers. Does anyone have more on this?

amd64 is the name of the architecture because AMD came up with the 64-bit extensions to x86. Intel didn’t want their x86 CPUs to compete with the Itanic. So no, amd64 binaries are not necessarily optimized for AMD – they’re just 64-bit x86.

BTW – the compilers are GNU, and they don’t play favorites.

The open source compilers (e.g. gcc, clang, etc.) don’t check the manufacturer ID (“GenuineIntel” vs. “AuthenticAMD”) when choosing compiler optimizations. They correctly produce code that checks the feature bits to see if a processor says it supports SSE, SSE2, AVX, etc.
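To make the difference concrete, here is a small Python sketch. CPUID leaf 0 returns the vendor ID as twelve ASCII bytes in EBX, EDX, ECX (in that order); the two dispatcher functions are simplified stand-ins written for this example, not code from any real compiler runtime.

```python
import struct

def vendor_string(ebx, edx, ecx):
    """Rebuild the 12-character vendor ID from CPUID leaf 0 (register order EBX, EDX, ECX)."""
    return struct.pack("<III", ebx, edx, ecx).decode("ascii")

# Register values that spell out the two well-known vendor IDs.
INTEL = vendor_string(0x756E6547, 0x49656E69, 0x6C65746E)
AMD = vendor_string(0x68747541, 0x69746E65, 0x444D4163)

def vendor_keyed_path(vendor, has_sse2):
    """Stand-in for a dispatcher that trusts the vendor string first."""
    return "sse2" if vendor == "GenuineIntel" and has_sse2 else "generic"

def feature_keyed_path(vendor, has_sse2):
    """Stand-in for a dispatcher that looks only at the reported feature bit."""
    return "sse2" if has_sse2 else "generic"
```

Given an SSE2-capable AMD part, the vendor-keyed stand-in falls back to the generic path while the feature-keyed one uses SSE2.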

The i686 vs amd64 distinction is whether the program is compiled for 32-bit or 64-bit, with i686 being an unofficial reference to the Pentium Pro or its P6 microarchitecture, and “amd64” being an unofficial synonym for x86-64 that won in popularity within the Linux / open-source community.

The only exception under Linux (or Windows) was the Intel Compiler suite (Fortran or C/C++) up to version 10.x. I believe that version 11 did not include the vendor ID check, but I believe the anti-trust settlement agreement may have run out in 2013 or 2014, as Intel at present has a very strange disclaimer about compiler optimization across the entire developer / software section of their website.

Off-topic, but there is also an x32 “architecture” (ABI), which is 64-bit code using 32-bit pointers. Useful for when you don’t need more than 4GB of address space but can benefit from the extra CPU registers. It’s part of the Linux kernel, but few things actually use it.

its = it possesses

it’s = contraction for it is

“Motorola’s 68k line were relatively simple scalar processors” – not the whole line – the m68060 is a superscalar processor that has better performance per clock than the original Pentium.

For me it will always be the best bang for the dollar.

I have been buying AMD since day one.

Must have had over 100 CPUs. All the computers in the house are AMD.

Intel is too much money.

And I have always gotten ATI video cards.

I still have a Cyrix 5×86 that I used to upgrade my 386

How did you do that since the 5×86 was an upgrade to 486 boards?

While it’s true that Intel pays nothing to use the AMD64 instruction set, AMD pays nothing to use the x86 instruction set either. The agreement was symmetrical. Creating the AMD64 instruction set was certainly AMD’s most valuable move. Without it, Intel could have terminated the agreement that lets AMD use the x86 instruction set, and that would probably have been fatal for AMD.

I don’t believe that the compiler was the main cause of AMD’s problems. From my point of view, AMD’s main problem was that they took too much time to create a new family of cores after the Athlon-class family, and the newer cores were not leaders compared to Intel’s. They tried to improve, but it was always too little and too late. I hope that the next core family will be competitive not only in speed but also in power efficiency.

Too many users only want to buy from the leader without realizing that this only kills competition and lets prices rise uncontrollably.

I believe Intel lost a lawsuit years ago, that meant that instruction sets can’t be copyrighted. There’s so many clones of so many CPU architectures out there.

Patenting might be different, but the core (not Core [tm]) x86 stuff goes back to the 1970s, so that ship’s long sailed.

The agreement was specifically for the instruction set, read the points 3.3 and 3.4:

https://www.sec.gov/Archives/edgar/data/2488/000119312509236705/dex102.htm

Excellent symptom of our social decay: People will excuse anti-competitive, unethical, and illegal behaviors for some extra perceived economic/performance metric. All values are for sale to the highest bidder!

It’s not “people” who let companies get away with this shit, it’s governments. As long as money buys power, including elections, and endemic corruption is expected, and wildly successful with only a nominal legal risk, society’s gonna be fucked and we’re all the property of the super-rich.

Some harsh sentences for corruption, and some actual investigation and enforcement, might help. Also change taxation laws so companies can’t just claim a cabin in the Cayman Islands as their global HQ. For companies over a certain size, taxation is optional, and none of them opt for “yes”. Everybody knows about this, Starbucks and Amazon being famous examples. Yet they’re still getting away with it. Why?

Global companies picking jurisdictions that suit them is one of the biggest problems in the world today. Why does nobody change this? Probably because the rich and the powerful are now the same people. Democracy means nothing, most of the things affecting your life, you can’t vote on.

Indeed.

I figured that out years ago

My 2 cents: Intel is condemned by their own success. Their real value proposition right now is the concept of a platform. I can reasonably expect my app to run on the family of OSes for which it was designed. Furthermore, I can reasonably expect to obtain an installer for my OS of choice and get it installed on a range of hardware without having to author or build parts of it on my own. The OS can obtain all necessary information about the hardware from the hardware itself in a standardized way.

In that regard, AMD fired a big shot over Intel’s bow. The ARM64 server platform spec AMD drafted, which is currently evolving, will come to challenge Intel. If something similar happens for laptops and desktops, it will allow OS authors to target a spec and a range of hardware makers to target that same spec without needing to directly interact.

When/if this happens, Intel will be in trouble. AMD doesn’t have to make x86 chips, and then Intel just becomes another single-source chip supplier. They will go the way of so many other tech titans before them who decided to tightly control their architecture and strangled themselves.

>The ARM64 server platform spec AMD drafted that is currently evolving

they already abandoned it :)))

I know they abandoned their micro server acquisition. I did see an AMD Arm64 reference platform last year, but I haven’t kept up with them. Cavium had an interesting looking platform too.

I’m involved with enterprise-level equipment firmware, so what I know comes from that. One part of the spec that was maligned was UEFI and ACPI.

ACPI is much maligned because of the old MS antitrust case where MS was found tampering with the spec to break competitors’ OSes.

UEFI is maligned for 2 main reasons, one is its origin at Intel and Itanium. My 2 cents is that bringing it to x86 was an attempt to migrate things to Itanium. Instead of extending x86 to 64 bit, they were betting on their EPIC architecture to move to 64 bit computing. But the EDK2 seems to be pretty firmly in the hands of the community which is big enough to make sure Intel will have a hard time if they want to cause trouble.

But the origins of UEFI and ARM coming to the table a bit later means that there are some aspects of the spec that don’t work on ARM. AFAIK, those are being worked on.

If it picks up, it’s one hell of an attack by AMD on Intel. Intel desperately wants in the embedded space, but a server platform spec potentially allows those players under attack to go after Intel’s bread and butter. Server products focused on ‘density’ are making their way out there and being adopted, I hope to see ARM based modules in the not too distant future.

what about the code? it’s all x86.

In ancient times, AMD processors were on par with or just slightly behind clock-equivalent Intel CPUs in performance, up until the introduction of the Athlon. Intel’s P4 was plain awful. Athlons were the performance leader for quite some time (for a few years before and after Y2K), and they sold for a premium. But like everything AMD, Athlons ran ridiculously hot. Pull the heatsink off an Athlon processor for less than a second and it goes up in smoke. AMD came to market first with x64 as well. But soon Intel caught up with their “Core” CPUs and AMD has just kinda lost it since. AMD is in trouble, but there’s an ominous storm brewing for Intel. They have completely failed in mobile, their IoT initiative is a joke, their 14nm yields are not good, Taiwanese and Chinese fabs are rapidly catching up, and Intel’s best friend Microsoft is a shell of its former self. Oh, and Microsoft uses AMD processors in their Xbox One, but nobody’s buying that either.

Believe it or not, we have a 3D printer, a Dimension BST768, one of the first ever produced, and the motherboard is somewhere between a B and C size piece of paper. The backup of the software for the hard drive is on a 3.5″ floppy disk, known to millennials as the save icon. It uses an 80186 CPU. It is built like a brick chicken coop, and has worked reliably from day one.

I had Cyrix CPUs and AMD as well. We could overclock them, take the sides off and blow fans on them and run them way past the rated clock speed.

However now, we are strictly Intel where I work, and there is only 1 time I can peg my quad quad. It happens when I am rendering in Autodesk Inventor. Every other command for Inventor ties up 1 core and it will remain so for the foreseeable future because of how the software is written.

As you design a part in 3D, everything has to happen in order. It is part of the way the CAD software is written and partly because they are not ready to completely re-write the software. It is just legacy code going way back. That is starting to change but it is slowly changing. Some 3D software companies are starting to write multiple core software but by doing so, it changed the way we think about creating 3D parts. Not to mention the GUI is completely different and although major CAD companies offer upgrades every year, we don’t do the upgrades because we have to re-learn the GUI. This is why we run Office 2003.
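One way to put rough numbers on the point above is Amdahl's law, which the comment does not name but which describes the same effect: if most of a job has to happen in order, extra cores barely help. The sketch below is illustrative only. The serial fractions are made-up assumptions, not measurements of Inventor, and 16 cores is a guess at what "quad quad" means.

```python
def amdahl_speedup(serial_fraction: float, cores: int) -> float:
    """Ideal speedup when only the non-serial part of a job scales with cores."""
    if not 0.0 <= serial_fraction <= 1.0:
        raise ValueError("serial_fraction must be between 0 and 1")
    if cores < 1:
        raise ValueError("cores must be at least 1")
    parallel_fraction = 1.0 - serial_fraction
    return 1.0 / (serial_fraction + parallel_fraction / cores)


if __name__ == "__main__":
    # Hypothetical workloads: the fractions are assumptions for illustration.
    workloads = [
        ("ordered part modelling", 0.95),  # almost everything must run in order
        ("rendering", 0.05),               # almost everything can run in parallel
    ]
    for name, serial in workloads:
        print(f"{name}: {amdahl_speedup(serial, 16):.2f}x on 16 cores")
```

With a mostly serial workload the ideal speedup stays close to 1x no matter how many cores are available, which matches the experience of seeing one core pegged while the rest sit idle.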

Oh wait…there is another place I can peg the CPUs, during FEA in Inventor, using it to do thermal analysis of LED lighting. Other than that, I have not seen a good use for it.

Our biggest productivity booster now? Flash drives, I was skeptical, but my boot times and re-boot times have gone down significantly.

My take on this article.

1. Nicely written

2. It just goes to show that competition is a good thing

Use this article as a watershed for publishing articles on HAD.

Nice use of the outlines of the Polish concept battle tank :P

Weren’t the clean-room party suits from an Intel advert? Or are they somehow attacking the AMD tank by standing in front of it?

Intel have their horrible little microjingle all over the place, I don’t think most people know who AMD even are. Maybe just a little bit of advertising might help ease them into home computers, where being price competitive is most important, something AMD have always been good at. When Intel aren’t product-dumping, that is.

It’s Echo AND the Bunnymen

https://www.youtube.com/watch?v=KnVkRvOQY-8&spfreload=10

What’s this fish doing in my bed?

The part about Intel being unfair, while true, leaves out the funny fact that almost all of AMD is based on the illegal copying of the 8080 :). The agreement to second source didn’t come until after AMD copied the design.

AMD also failed to get enough fab capacity for K7 and K8 or else they might have sold enough processors to seriously damage Intel.

In all cases a great article – thank you!

As someone who was there during the start of the AMD downfall, I assure you keeping the fab full wasn’t the root cause. Hubris at all the success of K8 and K8L led to schedules being drastically pulled in for Greyhound / Barcelona while adding too many new features, and the chip was over a year late to market. 4 months of 14 hour days doing bringup, squeezing as much validation as we can out of silicon we know is broken, is one reason I’m no longer in the semiconductor industry.

During that time, Intel was regaining ground with Core 2, and by the time Barcelona was released Intel already had a decent quad core part on the market. It didn’t matter that the first quad core Core 2 parts were MCMs with two dual core parts inside, because marketing.

First PC I built myself was an AMD (Athlon 1900+) and I was loyal to them for several more builds (Athlon 64, Opteron, Phenom). About a year ago I went over to the dark side (Intel) when I built my first Intel PC with an i7. My newest PC build is an i7-4790K. I consider it a beast (one of the highest single core performance CPUs out at the moment) and the price was not bad. $280 for a top-end performing CPU? Yes please. I really hope the Zen CPU from AMD gives me a reason to consider AMD outside of the price per performance angle. I miss those days when AMD was whoopin’ Intel.

I typed a nice long comment on here, and then WordPress wanted me to log in and then after it logged me in it ate my fricken comment and I am not typing it all over again.

short version: I built several AMD machines from 2002 to 2013 and then went Intel. I miss those days when AMD beat Intel.

It isn’t clear where the “ethically questionable engineering decisions” are here. If Intel paid to write a compiler that supported the fancy extra instructions and optimizations, they aren’t necessarily obliged to provide that to other people, especially competitors.

Now, if AMD had some licensing deal with them that should have included access to that, then that’s something to talk about. Even if Intel made the modifications to gcc, they might then only be guilty of license violation, which is a different ethical ball game than the implied sabotage.
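For context, the complaint usually raised about the compiler is not that it lacked optimizations for other vendors, but that its runtime dispatcher reportedly picked a code path based on the CPU vendor string rather than on the features the CPU reports. The sketch below is a simulation of that difference, not Intel's actual code: the `Cpu` class, both function names, and the path labels are hypothetical. Only the two vendor strings are real CPUID values.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Cpu:
    vendor: str          # CPUID vendor string, e.g. "GenuineIntel" or "AuthenticAMD"
    features: frozenset  # instruction-set extensions the CPU reports


def dispatch_by_vendor(cpu: Cpu) -> str:
    """Hypothetical dispatcher: fast path only if the vendor string matches."""
    if cpu.vendor == "GenuineIntel" and "sse2" in cpu.features:
        return "sse2_path"
    return "generic_path"


def dispatch_by_feature(cpu: Cpu) -> str:
    """Hypothetical dispatcher: fast path for any CPU that reports the feature."""
    return "sse2_path" if "sse2" in cpu.features else "generic_path"


if __name__ == "__main__":
    amd = Cpu(vendor="AuthenticAMD", features=frozenset({"sse", "sse2"}))
    print("vendor check: ", dispatch_by_vendor(amd))
    print("feature check:", dispatch_by_feature(amd))
```

Under the vendor check, a CPU that supports the instructions still lands on the slow generic path. Whether that counts as sabotage or as a vendor declining to validate a competitor's hardware is exactly the question the comment is raising.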

Around 2003 the GHz race came to an end. Right now, Moore’s law is struggling to survive. In just a couple of years, the IPC race WILL end! HSA is the way forward. As someone working on simulations (and high-performance computing by deduction) I can tell you with 100% confidence that the ideas that AMD have been pushing over the last decade are revolutionary. To anyone who tells you Intel has better technology, I say it’s a temporary solution to a bigger problem. All chip makers are bound to go HSA-like, and truth be told Intel with all its money and muscle could and should have been there already! But Intel (and nVidia) have done nothing but hold back the development of computing technology. I only hope that AMD stays afloat to see its tech come to fruition!

I missed the part where Intel made poor assumptions about the P4 architecture, AMD found a weak spot, then Intel corrected course. It takes a lot for a company that size to turn the ship, and just as Intel did in 2006, hopefully we’ll see AMD do the same in 2016. MISTAKES WERE MADE! But give credit where credit is due.

Small change builds giant machines, and AMD has always been at the heart of every build of mine. I started with a K6 right after they came out, and I never looked back. Since 1996 I have built amazing, long lasting budget machines that play everything, are expandable, repairable, and exciting to own. These machines have never cost more than $800 US, and those were because I got a tax return windfall at the right time. Go win your epeen wars super gamers, I never did care for such measurements. I currently just built my new machine, an 8 core AMD, and it just purrs along. I had to build it in AU dollars, and it came in under $750AU. 8 core FX, 16g fast RAM (no, not the fastest lol), a GT 730 card that handles my single monitor just fine, and on and on. Space, speed, power and all for a budget. ASRock mobos are solid, AMD CPUs are cheap and solid, the card is solid, the memory solid, the power supply solid, all perform great, and well under any type of visible strain. I run Windows for convenience, I throw Puppy Linux on it when I need pure speed for processing purposes. I will stay in the 25%, thanks, and be quite happy. Ibanez beats Fender (Thank God for the RoadStar II series,) AMD beats Intel, Public funded news beats crap Big Channel news, Linux beats Windows (except for games), and I love them all. I never used Explorer either, Netscape, Seamonkey, and only recently have I crawled around with the Chrome suite. I may or may not stick with it.

I just never saw the reason why Intel was so much better, since I was measuring my performance in more of a dollars per year system. I have never had to pay more than an average of 100 bucks a year to play all the games others play, use all the programs others use, to experiment and have a place for my wee DE-9 connector to have a home. My machine tends to last 7-8 years before I feel the squeeze of a bottleneck, so what’s all the fuss about? I continue, and will continue, to build as I have taught myself, and enjoy the hell out of machines that are consistent, reliable and cheap, and preferably Advanced Microchip Designed.

Obviously, if this changes, so will I. I used to use MicroLinux, and before that Knoppix. It’s all in the measure of what it is worth, and what it will do. AMD could be dropped, but I ain’t seeing it happen soon, personally.

BUT… Be fluid, be organic in your choices friends. Life is too short to sell our decisions to any company. I just happen to be in a niche where AMD is king. ARM could change that, but not in the next five years. Also, desktop is still greater than anything else, and that is because of standardization. Mobile may be the way to go in a decade, but I still can’t see how my Note 4 Edge is gonna be fun to play Pillars of Infinity on.

> inevitable truth that applications will expand to fill all remaining CPU cycles

Isn’t that May’s law?

I’m not sure why people get so emotional over these two companies or instruction sets, operating systems etc to be honest… It’s like a bunch of 10 year olds in the playground arguing that megadrive is better than SNES or whatever because it has blast processing.

I don’t really care who did what 10 or 20 years ago. I’ll buy what fits my needs and fits my budget at that point in time.

that’s why you should stick to your iphone and gtfo.

What I have found odd and very annoying about AMD CPUs over the years is they hold their value far longer than Intel CPUs. It took me forever to buy a dual core Athlon 64 x2 Socket 939 at a non-insane price a few years ago. Finally bagged one for a mere $17 off a mis-categorized eBay buy it now sale – when the same CPU in the right category was selling for near $100.

Today I’m hunting a ‘right priced’ 3+ GHz Phenom II x4, Socket AM3. These are, in electronics time, very OLD CPUs. But just look at the prices sellers put on them vs. contemporary Intel quad core CPUs. Same story, I can pick up Intels from circa 2009~2010 for pocket change, but a Phenom II x4 is going to cost near $100.

Right now I’m sitting at a PC with an AM3 Phenom II 550 x2 on an AM2+ board. The CPU may be x4 unlockable but the chipset on the board is the very last revision unable to unlock cores. :(