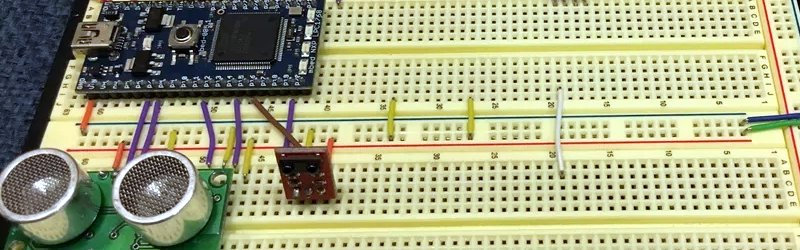

When we wave our hands at the TV, it doesn’t do anything. You can change that, though, with an ARM processor and a handful of sensors. You can see a video of the project in action below. [Samuele Jackson], [Tue Tran], and [Carden Bagwell] used a gesture sensor, a SONAR sensor, an IR LED, and an IR receiver along with an mBed-enabled ARM processor to do the job.

The receiver allows the device to load IR commands from an existing remote so that the gesture remote will work with most setups. The mBed libraries handle communication with the sensors and the universal remote function. They also provide a simple real-time operating system. That leaves just some simple logic in main.cpp, which is under 250 lines of source code.
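To give a feel for how little logic that can be, here is a minimal sketch of the learn-then-replay idea. It is not the team's main.cpp. The `Gesture` values, the `GestureRemote` class, and the single 32-bit code per gesture are all assumptions made for illustration, and the sketch leaves out the mbed sensor and IR drivers entirely so it can be compiled on a PC.

```cpp
#include <array>
#include <cstddef>
#include <cstdint>

// Hypothetical gesture set; a real gesture sensor library may report other values.
enum class Gesture { Left, Right, Up, Down, Count };

// Hypothetical helper: remembers one learned IR code per gesture.
class GestureRemote {
public:
    // Store a code captured from the existing remote for this gesture.
    void learn(Gesture g, std::uint32_t code) {
        if (g == Gesture::Count) return;  // Count is a size marker, not a gesture
        Slot& s = slots_[index(g)];
        s.code = code;
        s.learned = true;
    }

    // Fetch the code to transmit for a gesture.
    // Returns false (and leaves code_out alone) if nothing was learned yet.
    bool lookup(Gesture g, std::uint32_t& code_out) const {
        if (g == Gesture::Count) return false;
        const Slot& s = slots_[index(g)];
        if (!s.learned) return false;
        code_out = s.code;
        return true;
    }

private:
    struct Slot {
        std::uint32_t code;
        bool learned;
    };

    static std::size_t index(Gesture g) { return static_cast<std::size_t>(g); }

    // Value-initialized, so every slot starts as "not learned".
    std::array<Slot, static_cast<std::size_t>(Gesture::Count)> slots_{};
};
```

On real hardware, the main loop would presumably call something like `learn()` when the IR receiver captures a code in a teaching mode, and `lookup()` whenever the gesture sensor reports a swipe, passing the result to the IR LED driver.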

The remote reminded us of a Hackaday project we featured earlier this year that used the same SONAR module for a PC volume control. If a TV isn’t to your liking, you can always use gestures to control your drone.

I just got a Samsung S5. When I wave my hand in front of it, it goes left OR right depending on the mood while I am showing pics out of the gallery. I haven’t figured it out yet.

Sensitivity!

It is “mbed” not “mBed” …

Thank you for your thorough and constructive comment on the article. We are all better people for having read it.

An arm based gesture remote control is certainly more useful than a leg based one :)

You just made my day