Motion control is a Holy Grail of input technology. Who doesn’t want an interface that they can control with simple and natural movements? But making it feel intuitive to the user and making it work robustly are huge hills to climb. Leap Motion has done an excellent job creating just such a sensor, but what about bootstrapping your own? It’s a fun hack, and it will give you a much greater appreciation for the currently available hardware.

Let’s get one thing straight: this device isn’t going to perform like a Leap controller. Sure, the idea is the same: wave your hands and control your PC. However, the Leap is a pretty sophisticated device, and we are going to use a SONAR (or is it really SODAR?) module that costs a couple of bucks. On the plus side, it is very customizable and requires absolutely no software on the computer side. It is also a good example of using SONAR and of sending keyboard commands from an Arduino Leonardo to a PC. Along the way, I had to deal with the low quality of the sensor data and figure out how to extend the Arduino to send keys it doesn’t know about by default.

The Plan

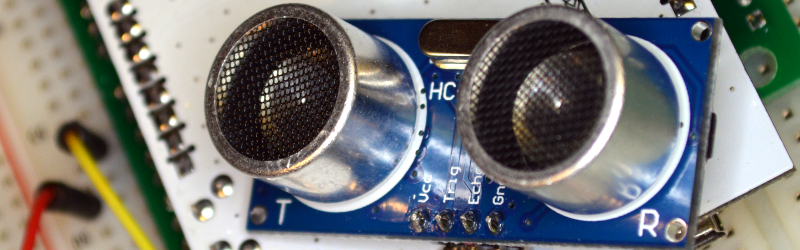

The plan is to take an inexpensive SONAR module (the HC-SR04) and an Arduino Leonardo and use them to perform some simple tasks by mimicking keyboard input from the user. The Leonardo is a key element because it is one of the Arduinos that can easily impersonate a USB keyboard (or mouse). The Due, Zero, and Micro can also do the trick using the Arduino library.

I wanted to find out how many gestures I could reliably detect with the HC-SR04 and then do different things depending on the gesture. My first attempt was simply to have the Arduino detect a few fingers or a hand over the sensor and adjust the volume as you move your hand up or down. What I didn’t know is that the default Arduino library doesn’t send multimedia keys! More on that later.

How the SONAR Works

The SONAR boards come in several flavors, but the one I used has four pins. Power and ground, of course, are half of them. In fact, my early tests didn’t work until I realized the module required more power than I could draw from the Arduino. I had to add a bench supply to power the module (of course, I could have powered both the module and the Arduino from the same supply).

The other two pins are logic signals. One is an input: a high-going pulse on it causes the module to ping (8 cycles at 40 kHz). After a delay, the other pin (an output) goes high, and it returns low when the module detects the returning echo. By measuring the time between your ping command and the return, you can judge the distance. In my case, I didn’t care about the actual distance (although that’s easy to compute). I just wanted to know whether something was farther away or closer.
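
The article skips the distance arithmetic, so here is a minimal sketch of it. It assumes sound travels at about 343 m/s (dry air near 20 °C). The echo pulse spans the round trip, so the one-way distance is half of width × speed. On the Arduino, the width would typically come from pulseIn() on the echo pin. The function names below are hypothetical, and the code is plain C++ so it can be checked off the board.

```cpp
#include <cstdint>

// Speed of sound in dry air at about 20 °C (343 m/s), in centimetres per
// microsecond. Adjust it if your room is much warmer or colder.
constexpr double kSoundCmPerUs = 0.0343;

// Convert the width of the echo pulse (microseconds) to a one-way distance
// in centimetres. The pulse covers the round trip, so the result is halved.
double echoToCm(uint32_t echoUs) {
    return echoUs * kSoundCmPerUs / 2.0;
}

// Integer shortcut common on small MCUs: roughly 58 us of echo per centimetre.
uint32_t echoToCmInt(uint32_t echoUs) {
    return echoUs / 58;
}
```

For a simple closer/farther decision like the one in this project, you can skip the conversion entirely and compare raw pulse widths.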

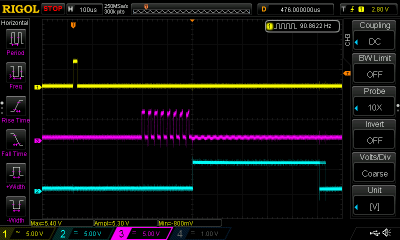

The scope trace to the right shows the sensor pointing at something relatively near. The top trace is the start pulse and the bottom trace is the input to the Arduino. The center trace is the output of the SONAR transducer. All the signal conditioning is inside the sensor, so you don’t need to worry about the actual signal processing to generate and recover the audio. You only need to measure the width of that bottom pulse.

The scope has persistence, and you can see that the bottom trace does not always end at the same time (look at the falling edge and you can see “ghosts” of previous samples). It shouldn’t come as a surprise that it takes a little effort to reduce the variation in the signal coming back from the SONAR.

Noise Reduction and Actions

Averaging

I wound up trying several different things to stabilize the input readings. The most obvious was to average multiple samples. The idea is that one or two samples that are way off will get washed out by the majority of samples hovering around some center value. I also found that sometimes you just miss, especially when looking for fingers, and you get a very large number back. I elected to throw out any data that seemed way off compared to the majority of the received data.
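
Here is a minimal sketch of the averaging-with-rejection idea in portable C++. std::vector is not available on the Leonardo's AVR toolchain, so a fixed-size array would replace it on the board. This is not the author's code. The name robustAverage is hypothetical, and the rule "drop anything more than tolerance away from the median" is an assumption standing in for "way off compared to the majority".

```cpp
#include <algorithm>
#include <cstddef>
#include <cstdint>
#include <vector>

// Average a burst of echo readings, discarding any sample more than
// `tolerance` away from the median (for example, a missed echo that came
// back as a huge number). Returns 0 if no samples are supplied.
uint32_t robustAverage(std::vector<uint32_t> samples, uint32_t tolerance) {
    if (samples.empty()) return 0;

    // Partially reorder the copy so the middle element is the median.
    const std::size_t mid = samples.size() / 2;
    std::nth_element(samples.begin(), samples.begin() + mid, samples.end());
    const uint32_t median = samples[mid];

    // Average only the samples that agree with the majority.
    uint64_t sum = 0;
    uint32_t kept = 0;
    for (uint32_t s : samples) {
        const uint32_t diff = s > median ? s - median : median - s;
        if (diff <= tolerance) {
            sum += s;
            ++kept;
        }
    }
    // The median itself always passes, so kept is at least 1.
    return static_cast<uint32_t>(sum / kept);
}
```

Measuring distance from the median rather than from the mean keeps one huge missed-echo value from shifting the reference that decides what counts as "way off".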

Verifying

One other tactic I used was to verify certain events with a second reading. For example, the start event occurs when the SONAR reports a value under the idle limit. The idle limit is a number somewhat less than the reading you get when the SONAR is pointed at the ceiling (or wherever it is pointing) with nothing blocking it. To recognize a valid start, the code reads twice to make sure the value is under the limit.

The code inside the Arduino loop is essentially a state machine. In the IDLE state, it looks for a reading below the idle limit. When it finds one, it transitions to the sampling state. When the reading goes up or down by more than a preset amount, the code in the sampling state sends a volume up or down key via the keyboard interface. If the reading goes back over the idle limit, the state machine returns to IDLE.
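
The state machine described above, including the double read that confirms a start, can be sketched as follows. This is a hypothetical reconstruction, not the code from the article. The class name is illustrative, and so is the assumption that a larger reading (hand farther away) maps to UP_IN. The two constructor arguments stand in for IDLETHRESHOLD and DELTATHRESHOLD.

```cpp
#include <cstdint>

// Events matching the cases handled by the article's action() routine.
enum Event { NO_EVENT, START_IN, STOP_IN, UP_IN, DN_IN };

// Hypothetical two-state gesture tracker. idleLimit plays the role of
// IDLETHRESHOLD and delta the role of DELTATHRESHOLD.
class GestureTracker {
public:
    GestureTracker(uint32_t idleLimit, uint32_t delta)
        : idleLimit_(idleLimit), delta_(delta) {}

    // Feed one averaged reading; returns the event it triggers, if any.
    Event update(uint32_t reading) {
        if (state_ == IDLE) {
            if (reading >= idleLimit_) {       // nothing over the sensor
                armed_ = false;
                return NO_EVENT;
            }
            if (!armed_) {                     // first low reading: wait for a second
                armed_ = true;
                return NO_EVENT;
            }
            armed_ = false;                    // confirmed by a second reading
            state_ = SAMPLING;
            reference_ = reading;
            return START_IN;
        }

        // SAMPLING: leave when the hand is gone, otherwise look for movement.
        if (reading >= idleLimit_) {
            state_ = IDLE;
            return STOP_IN;
        }
        if (reading > reference_ + delta_) {   // hand moved away from the sensor
            reference_ = reading;
            return UP_IN;
        }
        if (reading + delta_ < reference_) {   // hand moved toward the sensor
            reference_ = reading;
            return DN_IN;
        }
        return NO_EVENT;                       // change within the dead band
    }

private:
    enum State { IDLE, SAMPLING };
    State state_ = IDLE;
    bool armed_ = false;
    uint32_t idleLimit_;
    uint32_t delta_;
    uint32_t reference_ = 0;
};
```

In a sketch built this way, loop() would feed each averaged reading to update() and pass any non-NO_EVENT result, along with the reading, to action().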

I got pretty good results with this data reduction, but I also found the NewPing library and installed it. Even though it isn’t hard to write out a pulse and then read the input pulse, the NewPing library makes it even easier (and the code shorter). It also has a method, ping_median, that fires several pings, discards out-of-range results, and returns the median echo time.

You can select either method by changing the USE_NEW_PING #define at the top of the file. Each method has different configuration parameters since the return values are slightly different between the two methods.
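
To illustrate what a median filter such as ping_median buys you, here is a sketch of the idea in plain C++. It is not NewPing's implementation. It assumes a reading of 0 means a missed echo and simply ignores those. Unlike an average, the median cannot be dragged far by a single wildly wrong sample.

```cpp
#include <algorithm>
#include <cstdint>
#include <vector>

// Median of several echo times, skipping zeros (treated here as missed
// echoes). With an even count it returns the upper of the two middle values.
// Returns 0 if every ping missed.
uint32_t medianEcho(std::vector<uint32_t> echoes) {
    echoes.erase(std::remove(echoes.begin(), echoes.end(), uint32_t{0}),
                 echoes.end());
    if (echoes.empty()) return 0;
    std::sort(echoes.begin(), echoes.end());
    return echoes[echoes.size() / 2];
}
```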

I said earlier that the code sends volume up and down commands when it detects action. Actually, the main code doesn’t do that directly. It calls an action subroutine, and that subroutine sends the keys. It would be easy to make the program do other things as well. In this case, it simply prints some debugging information and sends the keys (see below). I didn’t react to the actual position, although you could, since the action routine gets it as a parameter. For example, you could make extreme positions move the volume up two or three steps at a time.
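
As a sketch of the "extreme positions" idea, a hypothetical helper could split the usable range into bands and return how many volume steps to send. The band boundaries (fifths of the range) and the nearLimit parameter are assumptions. On the Leonardo, the caller would invoke Remote.increase() or Remote.decrease() that many times.

```cpp
#include <cstdint>

// Hypothetical helper: how many volume steps to send for a given reading.
// nearLimit is the smallest reading expected with a hand over the sensor and
// idleLimit the largest; both are assumptions to be tuned per setup.
int stepsForPosition(uint32_t value, uint32_t nearLimit, uint32_t idleLimit) {
    if (idleLimit <= nearLimit) return 1;       // bad configuration: be conservative
    if (value < nearLimit) value = nearLimit;   // clamp into the usable range
    if (value > idleLimit) value = idleLimit;

    const uint32_t span = idleLimit - nearLimit;
    const uint32_t pos = value - nearLimit;     // 0 = closest, span = farthest

    // Split the range into fifths: outer fifths get 3 steps, next 2, middle 1.
    const uint32_t band = pos * 5 / (span + 1); // always 0..4
    if (band == 0 || band == 4) return 3;
    if (band == 1 || band == 3) return 2;
    return 1;
}
```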

Sending Keyboard Commands

I wanted to send the standard multimedia keys for volume up and down to the PC. Many keyboards already have these, and your software will usually understand them with no effort on your part. The problem, though, is that the default Arduino library doesn’t know how to send them.

Fortunately, I found an article about modifying the Arduino’s library to provide a Remote object. It wasn’t exactly what I had in mind, but it would work. Instead of sending keys, you call methods on a global Remote object to do things like change or mute the volume. The article was written for an older version of the Arduino IDE, but it wasn’t hard to adapt it to the version I was using (version 2.1.0.5).
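
The stock Keyboard library sends usages from the HID Keyboard/Keypad page (0x07). Volume and media keys live on the separate Consumer page (0x0C), which needs its own HID report, so the stock library cannot send them. The constants below are the Consumer-page usage IDs from the USB HID Usage Tables. The Gesture enum and the usageFor() mapping are hypothetical, and the sketch does not show how any particular Arduino library packs these codes into a report.

```cpp
#include <cstdint>

// HID Usage Tables, Consumer page: the codes a "multimedia" key sends.
constexpr uint16_t kConsumerPage    = 0x0C;
constexpr uint16_t kMute            = 0xE2;
constexpr uint16_t kVolumeIncrement = 0xE9;
constexpr uint16_t kVolumeDecrement = 0xEA;
constexpr uint16_t kPlayPause       = 0xCD;

// Hypothetical gestures and the consumer usage each one would trigger.
enum class Gesture { Up, Down, Hold };

uint16_t usageFor(Gesture g) {
    switch (g) {
        case Gesture::Up:   return kVolumeIncrement;
        case Gesture::Down: return kVolumeDecrement;
        case Gesture::Hold: return kMute;
    }
    return 0;  // not reached for valid enum values
}
```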

The action routine really only needs the UP_IN and DN_IN cases for this example. However, I put in all four branches for future expansion. Here’s the action subroutine:

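```cpp
// Called by the main loop whenever the state machine detects an event.
// `value` is the reading that triggered it; it is printed for debugging
// but not otherwise used yet.
void action(int why, unsigned value = 0)
{
  Serial.print(value);
  Serial.print(' ');        // separate the reading from the event name

  switch (why)
  {
    case START_IN:
      Serial.println("Start");
      break;

    case STOP_IN:
      Serial.println("Stop");
      break;

    case UP_IN:
      Serial.println("Up");
      Remote.increase();    // volume up via the modified library's Remote object
      break;

    case DN_IN:
      Serial.println("Down");
      Remote.decrease();    // volume down
      break;
  }
}
```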

The Final Result

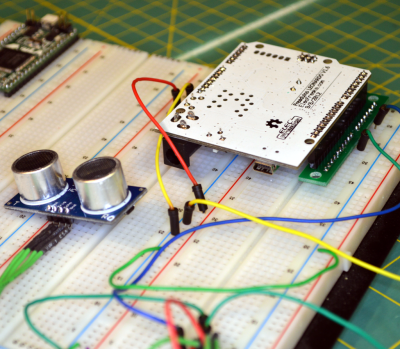

The final result works pretty well, although the averaging makes it less responsive than you might wish. You can reduce the number of samples to make it faster, but then it becomes unreliable. You can download the complete code from GitHub. The first thing you’ll want to do is check the top of the file to make sure your module is wired the same way (pin 3 is the trigger pin and pin 8 is the echo return pin). You’ll also want to choose whether to use the NewPing library. If you do, you’ll need to install it. I flipped my Leonardo upside down and mounted it on a breadboard with some adapters (see the picture to the right). It really needs a more permanent enclosure to be useful. Don’t forget to give the SONAR module its own 5 V power supply.

If you look near the top of the loop function, there is an #if statement blocking out three lines of code. Change the 0 to a 1 and you’ll just get averaged data from the sensor. Put the module where you want it and see what kind of numbers you get. Depending on the method, I was getting readings between 4000 and 9000 with the sensor pointed up at the ceiling. Subtract a bit from that for margin and set IDLETHRESHOLD (near the top of the file) to that number.
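
The calibration step can be sketched as a small helper that takes the lowest idle reading and subtracts a margin. The function name and the 10% default margin are assumptions, since the text does not quantify "a bit".

```cpp
#include <algorithm>
#include <cstdint>
#include <vector>

// Hypothetical calibration helper: from averaged readings taken with nothing
// over the sensor, take the lowest and subtract a percentage margin.
// The 10% default is an assumption, not a value from the article's code.
uint32_t idleThreshold(const std::vector<uint32_t>& idleReadings,
                       uint32_t marginPercent = 10) {
    if (idleReadings.empty()) return 0;
    marginPercent = std::min<uint32_t>(marginPercent, 100);
    const uint32_t lowest =
        *std::min_element(idleReadings.begin(), idleReadings.end());
    return lowest - lowest * marginPercent / 100;
}
```

Starting from the lowest idle reading, rather than the average, means an ordinary noisy idle sample is less likely to dip under the threshold and fake a start.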

The DELTATHRESHOLD is adjustable too. The code treats any change no larger than that parameter as no change. You might make it bigger if you have shaky hands, or smaller if you want to recognize more “zones”. However, the smaller the threshold, the more susceptible the system is to noise. The display of samples is helpful because it gives you an idea of how much the readings vary when your hand is at a certain spot over the sensor. You can try using one or two fingers, but the readings are more reliable when the sound bounces off the fleshy part of your palm.

If you want to add more gestures, you may have to track time a bit better. For example, holding a (relatively) stationary position for a certain amount of time could be a gesture. For really sophisticated gestures, you may need more sophisticated filtering of the input data than a simple average. A Kalman filter might be overkill, but it would probably work well.
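
A time-based "hold" gesture could be detected with something like the following sketch. The class, the tolerance, and the hold time are hypothetical. On the board, nowMs would come from millis(). The unsigned subtraction keeps the elapsed-time check correct when millis() wraps around.

```cpp
#include <cstdint>

// Hypothetical "hold" detector: true once the reading has stayed within
// `tolerance` of where it settled for at least `holdMs` milliseconds.
class DwellDetector {
public:
    DwellDetector(uint32_t tolerance, uint32_t holdMs)
        : tolerance_(tolerance), holdMs_(holdMs) {}

    bool update(uint32_t reading, uint32_t nowMs) {
        const uint32_t diff =
            reading > anchor_ ? reading - anchor_ : anchor_ - reading;
        if (!active_ || diff > tolerance_) {
            // First sample or the hand moved: restart timing at this position.
            active_ = true;
            anchor_ = reading;
            sinceMs_ = nowMs;
            return false;
        }
        return nowMs - sinceMs_ >= holdMs_;  // wrap-safe unsigned arithmetic
    }

    // Call when the hand leaves (e.g. on STOP_IN) to forget the old position.
    void reset() { active_ = false; }

private:
    uint32_t tolerance_;
    uint32_t holdMs_;
    uint32_t anchor_ = 0;
    uint32_t sinceMs_ = 0;
    bool active_ = false;
};
```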

If you look around, many robots use these sensors to detect obstacles. That makes sense: they’re cheap and work reasonably well. There are also many projects that use them to estimate distance (like an electronic tape measure). However, you can use them for many other things. I’ve even used a similar setup to measure the level of liquid in a tank, and earlier this week we saw ultrasonic sensors used to monitor rice paddies.

If you really want to get serious, [uglyduck] has some analysis of what causes spurious readings on this device. He’s also redesigning them to use a different processor so he can do a better job. That might be a little further than I’m willing to go, although I was impressed with the 3D sonic touchscreen, which also modified the SONAR units.

Well done, sounds like a fascinating project.

Are these modules at all similar to the ones used on some Polaroid Land cameras?

Well, this is basically off topic, but have you ever stopped to think about how strange it is that the mouse fits the human hand?!?!? I mean it’s like our hand was designed to neatly fit around and interact with a mouse. And yet the mouse wasn’t invented until recently.

It’s just eerie.

I’m not saying that it’s proof of intelligent ancient alien design of genetically modified protohumans, but really, what other explanation is there?

Isn’t the exact opposite true? We designed the mouse to easily interface with human hands. For those who don’t have the ability to use their hands, we design other interfaces.

You’ve been trolled, silly boy.

Wow! That’s like. Completely BANANAS!

You have me REaaLly worried now. So will we eventually lose our opposable thumbs?

Oh. OH! Race war between the 1, 2, 101 or 303 fingers races?

https://media.giphy.com/media/FqdGGgugkC4Xm/giphy.gif

I don’t know if motion control is really the ultimate form of interactive technology. Perfect speech recognition and natural language processing would be more intuitive, I think. And if we’re really talking about a “holy grail”, how about thought-control? My computer just does what I want it to before I can even vocalize the command?

I mean, come on. Think bigger.

Motion control is great when what you want to input is that motion: replicating a physical control input, or a movement that you want emulated in software, for example.

Outside of that, you want the densest, most accurate, easiest, fastest, and most adaptable information transfer you can get from your idea to the system. Motion control isn’t that interface. Voice is as close as we can reliably get until some sort of actual “thought control” hardware exists. A “perfect” natural language processing capability allows incredible density and flexibility, and even with today’s limitations, the potential is easy to see.

If motion control was optimal, we could raise children by waving our hands….:P

Maybe there is a way of transferring information more densely using our hands than you think. For example, we generally type on keyboards (using our hands) because it’s pretty easy once you pick it up, and you can be reasonably quick and precise.

Now, if we weren’t tied down to pressing little squares on a two dimensional surface, then perhaps we could invent a new mode of input whereby you can enter things other than just text and pointer coordinates.

All we need to do is think it up.

What would be REALLY exceptional is the use of the INVERSE.

* Physio-Therapy: Stroke victims, car/bike accident victims, even people who might have healthy immune systems and be otherwise healthy but are in a coma (preventing muscle atrophy). (And yes, coma and long-term unconscious patients might find themselves waking up swimming in a salt- and pH-balanced bath.)

* Athletic Training: Stamina, Strength and Form Training. (Starting at mild jogging and proper weight-lifting, all the way to “I know Kung-Fu.”)

I have used the exact same setup for a simple theremin. It was fun, as I have managed to link it through virtual MIDI to LMMS, so it behaved like a real controller… With a free harp plugin (Etherealwinds Harp) the effect was quite cool. Too bad I am not much of a musician :)

They are so dirt-cheap that it might be an idea to just throw in another sensor to get samples at double the rate. It might also make it possible to detect horizontal movement, not just vertical.

If the extra sensor(s) are in close proximity, adding more wouldn’t help the sampling rate of a simple range finder. You have to wait until the echoes die down before you can fire another ping, or you get false readings. So the minimum delay would be around (5 m ÷ 340 m/s) × 2 ≈ 29 ms, or about 34 samples/s. With a sliding-window average as a simple filter, you can still get a reasonable sampling rate.
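
The sliding-window average the commenter mentions can be done in constant time per ping by keeping a running sum. This is a generic sketch, and the class name and window size are assumptions. At roughly 34 pings/s, a 4-sample window smooths over about 120 ms while still producing a new value on every ping.

```cpp
#include <cstddef>
#include <cstdint>

// Moving average over the last N pings. Each new ping costs O(1): add it to
// the running sum and subtract the sample it replaces. A fixed-size array
// keeps it usable on small microcontrollers.
template <std::size_t N>
class MovingAverage {
    static_assert(N > 0, "window must hold at least one sample");

public:
    uint32_t add(uint32_t sample) {
        sum_ -= buf_[next_];          // drop the oldest sample (0 while warming up)
        buf_[next_] = sample;
        sum_ += sample;
        next_ = (next_ + 1) % N;
        if (count_ < N) ++count_;     // average over fewer samples until full
        return static_cast<uint32_t>(sum_ / count_);
    }

private:
    uint32_t buf_[N] = {};
    uint64_t sum_ = 0;
    std::size_t next_ = 0;
    std::size_t count_ = 0;
};
```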

I have done some work reverse engineering these modules: I fine-tuned the analog circuits for 3.3 V and interfaced them directly with an ARM chip running an RTOS. A simple range finder only returns the first echo. My project also uses a high-speed ADC to capture the analog data of multiple echoes. That, along with some autocorrelation, might be more useful for identifying more complex sonar data with multiple echoes.

My project is here: https://hackaday.io/project/5903-sonar-for-the-visually-impaired
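
One way to pick out multiple echoes from a captured ADC trace is to cross-correlate it with a template of the transmitted burst (a matched filter). This is a close relative of the autocorrelation mentioned above, but not the same thing. The sketch assumes the trace is already an envelope (rectified) signal and that threshold is tuned by hand. The function name is hypothetical.

```cpp
#include <cstddef>
#include <vector>

// Cross-correlate a captured trace with the burst template and return the
// lags where the correlation is a local maximum above `threshold`.
// Each returned lag (in samples) is a candidate echo arrival time.
std::vector<std::size_t> echoLags(const std::vector<double>& signal,
                                  const std::vector<double>& burst,
                                  double threshold) {
    std::vector<std::size_t> lags;
    if (burst.empty() || signal.size() < burst.size()) return lags;

    const std::size_t n = signal.size() - burst.size() + 1;
    std::vector<double> corr(n, 0.0);
    for (std::size_t lag = 0; lag < n; ++lag)
        for (std::size_t k = 0; k < burst.size(); ++k)
            corr[lag] += signal[lag + k] * burst[k];

    // Keep peaks: strictly above the left neighbour, not below the right one.
    for (std::size_t i = 0; i < n; ++i) {
        const bool left = (i == 0) || corr[i] > corr[i - 1];
        const bool right = (i + 1 == n) || corr[i] >= corr[i + 1];
        if (corr[i] > threshold && left && right) lags.push_back(i);
    }
    return lags;
}
```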

Is it completely impossible to change the frequency on these? One ping could be one tone, and the second and third pings different tones.

Sounds like a great project. The only issue I can think of is interference from another interface that is pinging as well.

When programming against noisy data sources like this, I find Kalman filters to be indispensable. You can choose to throw out outliers before the Kalman filter, but the Kalman filter does a pretty good job of ignoring outliers anyway. There’s a tradeoff, in that you need to set the error sigmas higher to ignore outliers, which slows the response of the filter overall…
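
For reference, a one-dimensional Kalman filter for this kind of reading is only a few lines. This sketch assumes a random-walk model, in which the hand's position is expected to stay put with process noise q, and a measurement noise r. Both values are tuning guesses. A larger r makes the filter trust each ping less, which is the responsiveness tradeoff described in the comment.

```cpp
// Scalar Kalman filter with a random-walk (constant position) model.
// q: process noise variance, r: measurement noise variance (tuning values).
class ScalarKalman {
public:
    ScalarKalman(double q, double r, double initial, double initialVar)
        : q_(q), r_(r), x_(initial), p_(initialVar) {}

    double update(double z) {
        p_ += q_;                   // predict: position unchanged, uncertainty grows
        const double k = p_ / (p_ + r_);  // Kalman gain: how much to trust z
        x_ += k * (z - x_);         // correct the estimate toward the measurement
        p_ *= (1.0 - k);            // uncertainty shrinks after the update
        return x_;
    }

    double estimate() const { return x_; }

private:
    double q_, r_, x_, p_;
};
```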

Nice work, and I get the cheap ultrasonic component… but other things can be cheap as well and allow for even more degrees of freedom: there are the 3D capacitive ones and the 3D optical ones. Why not these?

Done that! Four sensors to make an arrow keypad. It was fun but too unreliable. I find it more useful for getting data like whether you are sitting in front of the computer, for invoking macros with gestures, or for detecting whether someone is approaching the door.

I don’t think controlling things without touching them is ‘natural’ at all.

But it can be fun though.

That ultrasonic sensor measures distances best above 50 cm. For shorter ranges, the Sharp IR reflective ranger is better, although it is also more expensive. Anyway, for hand gestures it is not important to know the exact distance. It would be enough to use an IR phototransistor and an IR emitter and quantify the amount of IR light bounced back (with an algorithm to cancel out the ambient IR light).