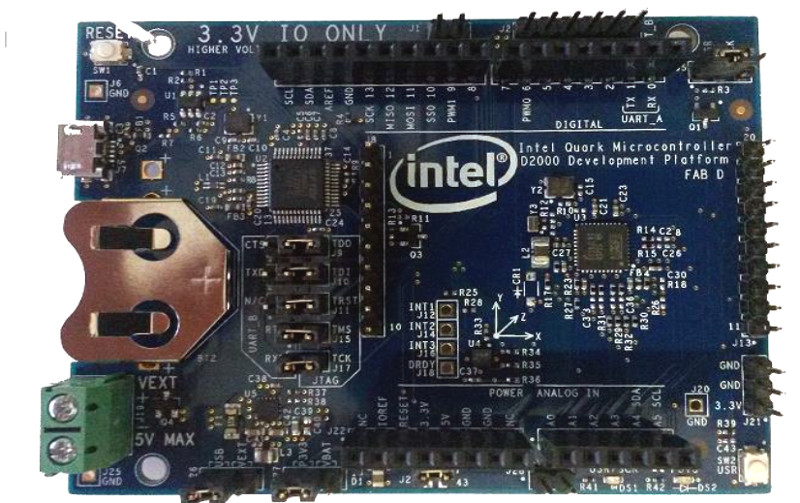

Intel have a developer board that is new to the market, based on their Quark (formerly “Mint Valley”) D2000 low-power x86 microcontroller. This is a micropower 32-bit processor running at 32MHz, and with 32kB of Flash and 8kB of RAM. It’s roughly equivalent to a Pentium-class processor without the x87 FPU, and it has the usual impressive array of built-in microcontroller peripherals and I/O choices.

The board has an Arduino-compatible shield footprint, an FTDI chip for USB connectivity, a compass, acceleration, and temperature sensor chip, and a coin cell holder with micropower switching regulator. Intel provide their own System Studio For Microcontrollers dev environment, based around the familiar Eclipse IDE.

Best of all is the price, under $15 from an assortment of the usual large electronics wholesalers.

This board joins a throng of others in the low-cost microcontroller development board space, each of which will have attributes that its manufacturers will hope make it stand out. Facing such competition the Intel board will have to be something rather special to achieve that aim, so why should it excite your interest? We would point to the low price, the x86 code if that is your flavour of choice, and the relatively tiny power consumption.

Stepping back from the dev board for a moment, consider this processor as an illustration of technological progress in semiconductor fabrication. Over twenty years ago this chip’s Pentium ancestor ran on 5 volts and got so hot you could fry an egg on it; here is a Pentium that can run on a few milliwatts from a coin cell. Fortunately you won’t be running Windows 95 on it though.

We’re sure we’ll see plenty of projects here in the future using the Quark. Intel’s previous effort in this space, the Edison, has made several appearances. We’ve covered its launch in 2014, looked at someone running Doom on it, and examined its use with audio effects.

Thanks [Nolan M] for the tip.

“the x86 code if that is your flavour of choice” — Does the underlying instruction-set really matter? I’d hazard a guess and say that almost no one goes in and writes assembly-code by hand, and if it’s a compiler that does all the hard work then you have no good reason to care. Power-consumption and performance do matter, but they aren’t necessarily related to the instruction-set being used.

It does to some people, though for my own use I’d agree with you.

However, compiled code size does. And on devices with small amounts of memory, that counts.

Writing assembly directly has indeed become rare, but a whole lot of bare-metal software uses very hardware-dependent C (often wrapped in C++ classes): writing bytes or single bits directly to registers, macros which resolve to specific machine instructions, interrupt handlers. C is powerful enough to allow writing code which translates into machine instructions very predictably, yet it’s much more readable. And occasionally looking at the compiled assembly is a must for those caring about performance.

On bare-metal there is no OS abstracting away all of this, so the underlying hardware certainly does matter.
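As a rough illustration of the "single bits directly to registers" style described in this comment, here is a minimal sketch in C. The register name `GPIO_OUT`, the backing variable `fake_gpio_out`, and the `pin_*` helpers are all hypothetical; on real hardware the register would be a fixed address taken from the chip's datasheet, whereas here it is an ordinary variable so the sketch also compiles on a PC.

```c
#include <stdint.h>

/* Hypothetical output register. On a real microcontroller this would be a
 * volatile pointer to a datasheet-defined address; here it is a plain
 * variable so the example can be built and run on a host machine. */
static volatile uint32_t fake_gpio_out;
#define GPIO_OUT fake_gpio_out

/* Mask with only bit n set. */
#define BIT(n) (UINT32_C(1) << (n))

/* Each helper is a single read-modify-write on the register, which a
 * compiler typically turns into a handful of predictable instructions. */
static inline void pin_set(unsigned pin)    { GPIO_OUT |= BIT(pin); }
static inline void pin_clear(unsigned pin)  { GPIO_OUT &= ~BIT(pin); }
static inline void pin_toggle(unsigned pin) { GPIO_OUT ^= BIT(pin); }
static inline int  pin_read(unsigned pin)   { return (int)((GPIO_OUT >> pin) & 1u); }
```

Looking at the compiler's output for helpers like these is a quick way to see how closely such C maps onto a given instruction set.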

Intel was supposed to release an open source RTOS to use with these chips. Was that Zephyr or is something else forthcoming?

It counts if one wants to run MS-DOS on it.

And why go for Eclipse when Turbo C 1.0 can do the job :D

...or Turbo Pascal: the whole IDE and compiler ran from a floppy disk and compiled in about 2 seconds.

Anyway, to shoot down anyone’s hopes: any old PC programs would need the PC BIOS loaded, and 4K RAM would not run even DOS 1.0.

If they make a version with large enough memory to run MSDOS and with an external storage device, it could be self hosting. :)

The peripherals, real hardware debugging and the compiler can make a difference.

If you are going to write any assembly, then yes, it matters. Programming a 68K is bliss compared to just about everything else, for instance.

What’s power consumption on this compared to an UNO?

“I’d hazard a guess and say that almost no one goes in and writes assembly-code by hand, ”

Um, no, I’d disagree.

The Quark D2000 is really sitting in a very odd performance space. It’s not really fast enough to compete with the faster ARM Cortex microcontrollers at the 100-ish MHz mark, so it’s really just slightly faster than the PICs, AVRs, MSP430s, and 8051 clones of the world.

And people hand-write assembly for those chips *all the time*. In a lot of cases, you have to. Compilers can be buggy or stupid, and the C language just doesn’t contain the tricks you need to really get performance out of those chips – namely, the one intrinsic it really is missing is a way to access or test on the carry bit directly, so you can’t use rotate-through-carry, which is stupid-useful for bit manipulations and serialization/deserialization. And 32 MHz just isn’t quite fast enough that you can waste cycles trivially.

So yeah, I think the underlying instruction set matters. Although that being said, I think the x86 portion is actually an advantage, because obviously x86 compilers are *very* advanced at this point.
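To make the rotate-through-carry point concrete, here is a minimal sketch of how the operation has to be spelled out in portable C, where the carry flag is not visible and must be kept in an ordinary variable. The function names `rcl8` and `shift_in_byte` are made up for this illustration, and whether a given compiler collapses the first one into a single rotate instruction is not guaranteed.

```c
#include <stdint.h>

/* Rotate an 8-bit value left through a carry flag held in a variable,
 * mimicking what a rotate-through-carry instruction does in one step. */
static uint8_t rcl8(uint8_t value, unsigned *carry)
{
    unsigned carry_out = (value >> 7) & 1u;                    /* bit 7 leaves the byte */
    uint8_t result = (uint8_t)((value << 1) | (*carry & 1u));  /* old carry enters bit 0 */
    *carry = carry_out;
    return result;
}

/* Deserialization example: shift eight incoming bits (MSB first) into a byte. */
static uint8_t shift_in_byte(const unsigned bits[8])
{
    uint8_t acc = 0;
    for (int i = 0; i < 8; i++) {
        unsigned carry = bits[i] & 1u;  /* the sampled line level */
        acc = rcl8(acc, &carry);
    }
    return acc;
}
```

In hand-written assembly the loop body is typically just "move the input bit into carry, rotate", which is the kind of saving being argued for here.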

Would’ve been cool 20 years ago.

I’d say, buy one, salvage the FTDI, the coin cell holder and the pinheaders, desolder the micro, and then use the thing as a compass, acceleration, and temperature sensor board for your Arduino (Due).

Seriously, I never considered anything x86 related for a low end embedded project.

32MHz, 32kB of Flash, 8kB of RAM, Pentium without FPU

Why would that look more desirable than ARM/AVR/PIC?

Did anyone actually _like_ the x86 instruction set?

Does it have better speed per MHz or code density than competition?

or maybe interrupt latency?

It *should* have slightly better code density. I suppose we can assume that the toolchain is going to have a really kick ass compiler since *gobs* of time and effort have gone into x86 optimizing compilers. The reason it’s x86 is because this is from Intel and they don’t want to reinvent the wheel. Turning your question around: why would x86 be less desirable than $RISC_FLAVOR? Almost everyone that uses this thing is going to be insulated from the ISA, and when they aren’t? I would bet they would have no trouble finding a thousand books on x86 ASM.

Because just as gobs of effort have been spent making compilers for X86, gobs of effort have been spent making ARM work well in an embedded environment (meaning GPIO, power usage, DAC, ADC, SPI, etc.), a completely new world for X86. And Intel _is_ reinventing the wheel, it’s just a different wheel, for the X86.

ASM for X86 is a b… nightmare, so you _need_ thousands of books to explain the spaghetti of legacy cruft crammed into the ISA.

And what makes any $RISC_FLAVO[U]R less desirable?

Funny, but people said the same things about ARM, MIPS, Coldfire, and so on. I mean, really, why do you need anything more than an 8051, people?

Give it some time and let’s see what people make with this.

8051s are at least 30x slower than AVRs and even more than ARM. Is the bare x86 faster than any Cortex-M* (per MHz), or does it have any other advantages? (I could find only Intel marketing gibberish so far)

@jnk0le I would argue that modern/fast 8051-compatible devices, such as Silabs EFM8 (particularly their Laser Bee series) are a significantly better choice against an 8-bit AVR. Also, even the oldest (ie. ‘classic’ timing) 8051 devices were more like 8-10x slower than AVR for the same clock rate (going by my own experiences with both of these chips). Cheers.

It was a joke, people. Lots of people used and are still using 8051s for a lot of projects because they are fast enough and you can get free soft cores for it.

The point is stop all the hating and wait and see if people make cool stuff with this.

RISC is generally not suitable for assembly-language programming. The ISAs of RISC machines were conceived to make compilers more efficient, not people. While X86 has some arcane characteristics, it was, at least, created by people who expected others to program directly in assembly and the ISA demonstrates this. However, anyone who has programmed both in X86 and 68K knows how much difference an ISA can make, as 68K is about as good as ISAs ever got for human-readable assembly code.

Pretty sure there’s plenty of Thumb assembly out there, proving you wrong.

How does the existence of ARM+Thumb assembly code prove me wrong? I didn’t say no one does it. I just said CISC assembly, 68K assembly in particular, is more pleasant to directly program in. That is a subjective statement that can be neither proven nor disproven. At most you could say you have experience with 68K, X86 and ARM+Thumb assembly and you prefer ARM+Thumb, or you have personal knowledge of such people, which means you or they fall outside of my “generally” statement. For people with such experience as I suggested, I think you’ll find my “generally” statement holds. At least for me, 68K was the most elegant ISA I’ve ever had to work with.

The 68008 was a pleasure to use. The Motorola peripheral chips were rock solid and the crazy expensive Macro language was very readable.

It also took forever to get anything done.

I kept an old dev board on the wall until my last move. It was pretty. My wife didn’t share my esthetic.

This “RISC is not for human programming” claim is a BIG PILE OF BS! Don’t eat it! I really enjoy programming RISC machines (PowerPC, ARM), and I really hate x86. I write assembly for RISC for critical code (encoding, decoding, cryptography etc.), and my code is at least 40% faster than the best C compiler code out there.

(64-bit x86_64 is a much better platform than 32-bit x86.)

If it’s the same as the previous Quark chips it’s not even a Pentium without the FPU – it’s a 486 with a couple of Pentium instructions buggily added on, and it has awful integer performance compared to modern chips as a result. Intel advertise the Quark as “Pentium-class” because it can run code that uses the Pentium instruction set (but only if it’s compiled specifically for the Quark with a toolchain that can work around its brokenness), and because it sounds better than “486-class”.

If this is equivalent to a Pentium without the FPU, it easily outperforms the ARM/AVR/PIC on a per-MHz basis. AVR32 gets 1.5 MIPS/MHz, PIC32 gets 1.8 MIPS/MHz, ARM Cortex-M4 gets 1.25 MIPS/MHz. The Quark is getting 3.55 MIPS/MHz (and I’m assuming they’re comparing this chip to the slowest Pentium at 60MHz); it is a beast for something you’re poking into an Arduino form factor.

This Intel document states that the X1000 achieves 1.26 DMIPS/MHz with a 16KB L1 cache:

http://www.intel.com/content/dam/www/public/us/en/documents/training/soc-x1000-introduction-seminar.pdf

The D2000 is a 5-stage, single-core, in-order machine with a single instruction in flight and no L1 cache. There is also 2-wait-state flash. I don’t think it can get even near 1.26 DMIPS/MHz.
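The per-MHz figures traded in these comments translate into absolute throughput by a single multiplication, sketched below in C. The helper name `dmips` is made up for illustration, and the inputs are only the numbers quoted in this thread (1.26 DMIPS/MHz for the X1000, the 3.55 MIPS/MHz claimed earlier, and the board's 32MHz clock); no measured D2000 figure is assumed.

```c
/* Throughput estimate: DMIPS = (DMIPS per MHz) * (clock in MHz).
 * This is plain arithmetic on quoted figures, not a benchmark. */
static double dmips(double dmips_per_mhz, double clock_mhz)
{
    return dmips_per_mhz * clock_mhz;
}
```

At 32MHz the X1000 figure would work out to `dmips(1.26, 32.0)`, about 40 DMIPS, and the 3.55 claim to `dmips(3.55, 32.0)`, about 114. If the D2000 falls short of the X1000's per-MHz figure, as this comment suspects, it would land below the first number.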

Intel is lying through its teeth about it having the performance of a Pentium then. More like a shitty Pentium that’s down-clocked. Makes this board even more disappointing.

Actually, I’m considering it because it has an instruction set that I used in the early 80’s and I still have some of the old assembly code kicking around. Who knows? Perhaps there is a large niche group of us old codgers pining away for the good ol’ days! I’m thinking Li Chen Wang’s Palo Alto Tiny Basic and Hunt the Wumpus.

How does it compare to Arduino 101/Intel Curie, which is also based on the Quark (though the Arduino 101 seems to have more RAM and flash, as well as BLE)? Is this board a stripped-down version of Arduino 101?

Probably pretty similar, but for a subtly different market.

The Arduino 101 is, like the other Arduinos, a board aimed at makers, while this board by comparison is a dev board for the Quark processor itself. I’m guessing if you asked Intel they’d tell you this board is intended for designers who want to evaluate the standalone Quark and integrate it in their own designs. If we as makers also get our hands on it then that’s a happy side-effect.

Seriously, this board is as cheap, if not cheaper than a 9DoF IMU and an *original* FTDI chip put together. They are obviously subsidising the board.

Actually, it’s economies of scale, plus leaving out the middlemen.

We are seriously overpaying for all those 9-10 DOF boards, just because.

32MHz, 32k FLASH and 8k RAM, oh my! This beast is as powerful as an average one-dollar ARM micro.

25 IOs out of 41 pins? Are you serious, Intel?

Perfectly suitable for some applications. But.

The problem is when you find you need, for example, a couple more I/Os or a little more program memory – with ARM you just move up the line to the next bigger chip in whatever line you’re using. With this thing, you’re stuck adding SPI GPIO expanders or external flash chips at best. Or migrating to ARM, which you probably should have been using in the first place.

The only actual reason for anyone to buy one of these boards is the name “INTEL” written on it; nothing else can make anyone (that can do simple math) buy it.

Perhaps an MS-DOS port might be handy?

MS-DOS with 8k RAM? Good luck with that.

The D2000 programmer’s guide documenting its instruction set is behind an NDA-wall, but if it’s anything like the D1000 then its instruction set isn’t actually x86-compatible enough to run DOS (or Linux for that matter):

“The CPU borrows IA-32 instruction encoding, but is not an IA-32 processor and is not compatible with existing IA-32 applications or operating systems. Specifically, the Intel® Quark™ microcontroller D1000 CPU supports only a subset of the full IA-32 instruction set. Likewise, the CPU architecture excludes many legacy features such as segmentation. The CPU implements system software features not available or solved differently on IA-32. Software written for IA-32 processors requires porting to the Intel® Quark™ microcontroller D1000.”

This is different from the original Quark-branded CPU core which was mostly compatible with the Pentium ISA. It can’t execute code from RAM either, which is rather unusual for x86. On the positive side, the D2000 apparently finally has single-cycle multiply like ARM Cortex-M always had which is a first for Quark, though I’ve no idea what the performance of other instructions is like because it doesn’t seem to be publicly documented anywhere for either the D1000 or the D2000 (which appear to have different performance characteristics). It’s possible that the D2000 is a more capable CPU than the D1000, but because Intel’s documentation is so useless and PR-focused I just can’t tell.

Surprised by this unfamiliar name, I did a quick Google search. Looks like the D1000 and D2000 are Harvard architecture processors, which means separate code and data memories, a la Microchip PIC. x86 with Harvard architecture, seriously? Though it makes sense: good for security, and perhaps easy to design by stripping down Intel’s current uOP-based IP.

No one outside Intel even knows what Edison’s CPU core is called (originally a dual-core Quark, swapped at the last minute for an unnamed custom Atom), or Curie’s. Then, even before sorting that out, here’s the D2000. Looks like Intel really thinks Atmel/Arduino is a threat.

Not even slightly an IA-32?

Then that thing is a stillbirth, at least for me.

The only benefit of having an x86 would be that you can execute MS-/DR-/Novell-/PTS-DOS and run TP/TC/QB/… on it. Maybe even, if VGA can be output, running Win3.11 for GUI stuff.

x86 is really not a very space-efficient instruction set; I don’t know about the word alignment constraints on that particular chip, but effectively, 8kB RAM with x86 is *usually* less than the same amount of RAM containing Thumb2 instructions; so that’s a downside. This is really surprising, as ARMs are a RISC architecture that usually takes “more smaller instructions” to do the same thing as a CISC (x86) instruction, but: Thumb (2) uses 16bit instructions for *everything*, whereas x86 uses… ah… well, can use instructions from 8bit to (good question, I think it’s 15*8) bit length, but seriously, you’ll be stuck with 25% 8bit, 50% 16bit and 25% 32bit instructions, in the end (you probably won’t use many of the original 8 bit instructions, because if you did, you’d just buy any 8 or 16bitter, or a cheap-as-hell 8050 clone).

All in all, as [1] finds, code density in x86 and non-Thumb ARM instructions is pretty comparable; the point is that ARM has spent billions on optimizing Thumb to get more CPU per watt compared to their fully fledged ARM instruction set, given you operate in a microcontroller setup (and not in a resource-rich environment such as a desktop or a multimedia controller, smartphone etc).

All in all, yes. Nice. An x86 in what would be a classical ARM target, but with less promising hardware aspects. I don’t think that this will find broad adoption in industry (honestly, Intel doesn’t care about a few 100k tinkerers), because it’s taking an instruction set that was never meant for that application to the microcontroller world, where we have a whole ecosystem of competing microprocessor architectures that are actually designed for that world.

The single argument I can think of is

> well, this used to be controlled by a 486 computer, running hand-optimized x86 software, but nowadays we should be able to do the computing on something much much smaller, can we get the core algorithms without porting to a different architecture?

And the answer pretty certainly is: You should really re-write (and if necessary, re-model) your software if that is your problem, 40 years later.

[1] https://research.cs.wisc.edu/vertical/papers/2013/isa-power-struggles-tr.pdf

The x86 architecture has ruled the desktop/server market for decades, as all IO was at identical memory addresses and it allowed the same software to run on various hardware platforms (different CPUs, motherboards, etc. all work with different operating systems), and it’s trivial for the OS to locate UARTs, PCI buses, etc. ARM is just the core, and different silicon manufacturers wrap the licensed core with different IO interfaces to make their products unique/value added. Such standard IO is not required for highly embedded systems, where all off-chip IO is generally hardware unique and the software is generally application specific.

Intel is clearly trying to get some traction in the highly embedded market (IoT if one wants to sound cool…) with their Quark/Edison adventure. ARM already “owns” this market space with a large selection of cores targeting many markets from many silicon manufacturers.

Good luck to Intel, IMO, they have a lot of ground to gain with nothing special to offer….

Aside: when looking at highly embedded systems, one would look at MIPS/Watt instead of MIPS/MHz.

Cheers,

Div

do I have to triple fault to switch into protected mode? such lovely legacy …

Only $15? Wow, just five times more expensive than an STM32 Maple clone.

$15 will buy you an Orange Pi PC, with quad core A7, Mali400, 1GB RAM, Ethernet, USB, HDMI, mic, audio out, etc.

This is all well and good, but I don’t see a BIOS. How will I run DOS 6.22?

Probably the same way you would do it using a regular Arduino Uno – tack on say, 128KBytes of external SPI SRAM + SD card slot via the GPIO header and then upload a minimalistic 8088 PC emulator sketch. Good luck! :-)

I went to an Intel talk last week. They were presenting boards similar to this one. What I got was:

1. All Arduino libraries are ported to their IDE or SDK. (I’m not a programmer, so I’m probably expressing this wrong; in basic words, you can use Arduino libraries.)

2. What they are really selling is a cloud-based framework where you can control all your IoT devices. It’s a cool interface: you can reach the console of the device, and use knobs, buttons, and graphics to show the information gathered by the board and to control the board. It is based on McAfee software for administering servers.

3. It has nothing innovative compared to what National Instruments was showing us 15 years ago. Ah yes, the “cloud administration”.

I had a quick look at the D2000 datasheet… one had better stick with the numerous ARM-based MCUs. I don’t think Intel will conquer the market with it.

The Orange Pi One board is $13.77 shipped and the specs are way better than this. 1.6GHz quad-core Cortex-A7, 512MB RAM, sound, 4K video, Ethernet, USB, runs Android, runs Linux... You can even run DOSBox on it and still get better x86 performance than this Quark 486-class thing.

If you need a traditional MCU, the STM32F030C8 goes for $1 per chip and the specs are still better: 48MHz, 64k flash, 8k RAM. And it’s ARM, not x86, which is a good thing.

You can still get 8051 microcontrollers, but for the most part embedded Intel/x86 anything died years ago. It’s an instruction set that dates back to the 70s. It’s fine for a big power hungry PC or server, not so great for much else. Overall, Intel’s foray into IoT has been a complete failure and this overpriced Quark board won’t help them.

Do you have a link for that $13.77 shipped Orange Pi?

http://www.aliexpress.com/store/product/Orange-Pi-One-ubuntu-linux-and-android-mini-PC-Beyond-and-Compatible-with-Raspberry-Pi-2/1553371_32603308880.html

Hmm, but that board won’t fit inside a MIDI plug, so it’s useless :p

“32-bit processor running at 32MHz” – so a 386 :)?

souped-up 486 apparently :)

It doesn’t seem to have any direct, low level GPIO access, just like all the other Intel boards. Super disappointing.

Complete nonsense. Open the easily obtained D2000 datasheet and read chapter 17.

Ehh… And ‘somehow’, though I think Intel really ought to be able to do it better than anyone else out there, at least for ‘basic features’, the deeper I get into embedded the more attractive TI, Cypress, ST, XYZ + FPGA becomes, if not simply necessary, then also obvious/attractive for certain applications. I don’t think it *needs* to be programmable logic, per se, even as Altera is now in the fold… But Intel, give us bad ass high speed signalling, a high speed external memory interface, *something*. Perhaps these chips don’t command the ‘premium’ of an ARM licence, but just a *few* added peripherals would double or triple their weight in existence.

Do you mean 8GB? I’m pretty sure you’d only use Intel if you wanted compatibility with some existing PC software, what’s 8K going to do? How many billion times is the core going to run over the same 8K of data? Can’t see why this is better than an ARM.

It’s good they are trying, I wonder if the price is cheap enough to challenge the ATMEL chips? How does it compare to a Cortex M0 or the even faster and fatter M4?

If I see one when I am next shopping for gadgets I ‘might’ grab one, but only if my hands are empty. :)

If I can plug in a VGA monitor, PS/2 mouse and keyboard and run DOS 6.x, then I could play my old shareware games.

Guys, again, what they are selling is the SDK. The board itself does not have much to offer; it just has the Arduino form factor for compatibility. The real power is in the SDK cloud service, not the board itself. Read about it.

When I read in the datasheet that the processor has

“No support for Atomic operation”

I lost the last of my tiny interest in this chip.

How are you going to make a real OS without this feature?

This chip will never get into the Cortex-M3/M4 territory. Even the M0 is a better MCU.

Err? Atomic in regards to what? This is a CPU with a single core, never intended for multi-socket motherboards. Afaik all sane CPUs use atomic operations in regards to interrupts. I can’t say that I’m all that impressed, but this CPU is no worse than a 386 in terms of operations. The amount of memory might be a serious hindrance though, but hey, it might make for a fine FORTH system for serious retro computing …
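On a single-core part, "atomic" mostly means "cannot be split by an interrupt handler", and a common way to get that without dedicated atomic instructions is to mask interrupts around the read-modify-write. The sketch below shows the pattern in C under loud assumptions: `irq_save_and_disable` and `irq_restore` are hypothetical stand-ins that only track a flag so the code runs on a PC, and they are not taken from the D2000 documentation or any Intel SDK.

```c
#include <stdint.h>

/* Hypothetical stand-ins for the platform's interrupt mask control.
 * On real hardware these would wrap the CPU's interrupt disable/enable
 * mechanism; here they only track a flag so the sketch runs on a host. */
static int irq_enabled = 1;

static int irq_save_and_disable(void)
{
    int was_enabled = irq_enabled;
    irq_enabled = 0;
    return was_enabled;
}

static void irq_restore(int was_enabled)
{
    irq_enabled = was_enabled;
}

/* Counter shared between main code and an interrupt handler. */
static volatile uint32_t event_count;

/* Read-modify-write that no interrupt handler can split in two,
 * because interrupts are masked for its duration. */
static void event_count_add(uint32_t n)
{
    int state = irq_save_and_disable();
    event_count += n;
    irq_restore(state);  /* restore rather than blindly enable, so nesting works */
}
```

Saving and restoring the previous state, instead of unconditionally re-enabling, keeps the helper safe to call from code that already runs with interrupts masked.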

What on earth is Intel thinking? Take an ancient architecture, tweak it to make it incompatible, and reduce memory to make sure it can’t run the old code that would be the only possible justification for even considering it.

The Nucleo_F446RE board has a 32-bit Cortex-M4 microcontroller onboard with an FPU, 512KB of flash and 128KB of RAM. It has superior peripherals and comes with a USB2Serial, STLink debugger/downloader and a USB Mass storage programmer. The board costs $12 and comes with free Eclipse-based dev tools.

I fail to see the point of this board... the offering is quite inferior to what current ARM micros offer, even when focusing on low power alone and not just performance.

The advantages of x86 chips are high performance (relative to most low-power ARM chips) and ability to run an existing x86 OS. But with a 32MHz clock and 8KB RAM, this board negates both those advantages completely.

What’s the point?

Energy efficient DOS or CP/M box with an old serial 56k and use it as a BBS.

Waste of silicon

Where do you actually buy it? The Sample and Buy page seems to only link to the CPU, not the dev kit (the one that says it’s the dev kit appears to actually be the CPU when you go to order it.)

Just thought I’d add the Mouser link to purchase this board:

http://www.mouser.com/Search/Refine.aspx?Keyword=intel+d2000

Can I program it using the Arduino IDE, and how do I access the compass and the accelerometer?