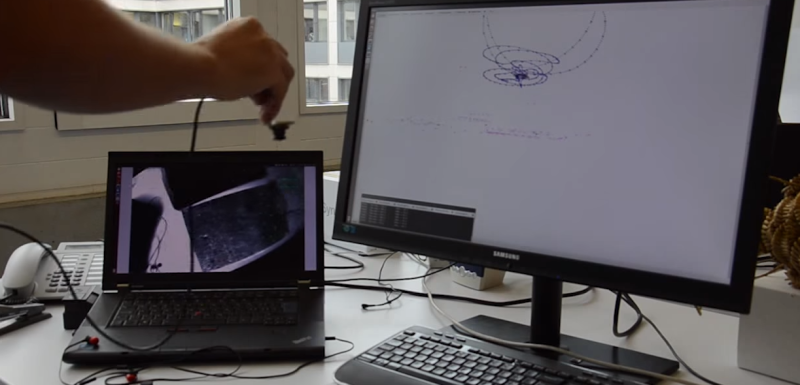

Let’s face it: 3-dimensional odometry can be a computationally expensive problem, often requiring expensive 3D cameras and optimized algorithms that can be difficult to wrap our heads around. Nevertheless, researchers continue to push the bounds of visual odometry forward each year. This past year was no exception, as [Christian], [Matia], and [Davide] have tipped the scale in terms of speed with an algorithm that lets a camera track its own pose in 3D in real time.

In the video (after the break), the landmarks are sparse and the motion to track is relentlessly jagged, but SVO, or Semi-Direct Visual Odometry [PDF warning], keeps tracking with remarkable consistency, making use of “high frequency texture” as a reference. Several other implementations require two cameras or a depth camera variant, but not SVO. It uses a single camera with a high frame rate between 55 and 300 frames per second. Best of all, the trio at the University of Zürich have made their codebase open source and available as a package for ROS.

Indeed awesome that the code is actually available. Way too often researchers just demo the results while leaving out too many details to even allow a reimplementation.

This is very impressive IMHO. Especially if you attach further sensors and combine them by, for example, Kalman filtering, you get a very robust position estimate.
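For anyone wondering what that kind of fusion looks like, here is a minimal one-dimensional Kalman filter sketch in Python. It is only an illustration: the function names, the sample data and the noise variances are all made up, and none of it comes from the SVO codebase. The idea is that the visual odometry step moves the estimate forward (and adds uncertainty), while a second sensor pulls it back toward an absolute reading.

```python
def predict(x, p, u, q):
    """Propagate the estimate by a motion increment u; q is process noise variance."""
    return x + u, p + q


def update(x, p, z, r):
    """Blend estimate x (variance p) with measurement z (variance r)."""
    k = p / (p + r)  # Kalman gain: how much to trust the measurement
    return x + k * (z - x), (1.0 - k) * p


# Hypothetical data: per-frame displacement from visual odometry (metres)
# and absolute position readings from a second sensor.
vo_steps = [0.10, 0.12, 0.09, 0.11]
sensor_readings = [0.08, 0.25, 0.30, 0.43]

x, p = 0.0, 1.0  # initial position estimate and its variance
for u, z in zip(vo_steps, sensor_readings):
    x, p = predict(x, p, u, q=0.01)   # assumed odometry noise
    x, p = update(x, p, z, r=0.04)    # assumed sensor noise
    print(f"fused position: {x:.3f} m, variance: {p:.4f}")
```

A real setup would track a full 3D pose with a multi-dimensional state, but the predict/update loop has the same shape.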

BTW if anyone is interested the embedded system used is an “Odroid-U2, ARM Cortex A-9, 4 cores, 1.6 GHz”.

From the page describing how to use this with PX4 flight controller:

“Unless you do all of this, you will have a hard time recreating what you’ve seen in the videos. This is true for all research – the results presented there represent what had worked a few times. Just because standard packages are available, you shouldn’t assume that it will “just work” or “mostly work”. Assume that it “sometimes works”, under controlled conditions.”

Fair enough, it’s experimental research, not a commercial software project. This is hackaday, not the app store.

Beyond that, it’s common for all free software to have a strong disclaimer to avoid liability, as you never know how your software will be used when you release it into the wild.

the code is here: https://github.com/uzh-rpg/rpg_svo

It sounds similar to how optical mice work. It probably also looks at the scale of the tracking features to ascertain the distance away.

The devil is in the details. Optical mice have it much easier, since you only care about x and y motion, have a very high speed image sensor, only care about relative motion, etc.

Looks like match-moving, from the visual effect world.

And match-moving is just object tracking, which is just point matching….which is just nand gates.

Yep, I’m impressed!

How does this compare to the LSD-SLAM : https://youtu.be/2YnIMfw6bJY ?

I was thinking of using the one i mentioned for a rpi2 powered quadruped.

yea … wrong link. This is LSD SLAM : https://www.youtube.com/watch?v=GnuQzP3gty4

Thanks for the link, looks amazing!

From a quick glance it seems that LSD SLAM works on lower frame rate cameras than SVO.

I’m guessing that SVO might be less computationally intensive if they were able to run it on a tiny embedded device (the quadcopter).

There is quite a visible delay on LSD SLAM; this might be because it uses SIFT/SURF.

Anyway, like SVO they also provide the source code: https://github.com/tum-vision/lsd_slam

Strikes me as a possible solution for inside-out positional tracking of VR headsets.

Oculus did it in the past on one of their devkits (based on input & prototypes from Valve) and it wasn’t precise enough. The problem is mostly the interplay between resolution and speed: if you want to reliably follow certain points in space (that’s likely going to be a wall, a monitor and a desk) you’re going to need a decent resolution (say 1920×1200 or something at the low end of the scale). However, most cheap cams won’t do such a resolution at speed; they will just cap at 24fps. The problem with that is you can’t track fast motion.
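To put a rough number on why low frame rates hurt, here is a small Python sketch of how far the image shifts between consecutive frames while the camera rotates. The field of view (90°) and the head turn rate (300°/s) are assumptions picked for illustration, and the estimate uses a simple pinhole model with a small-angle approximation, so treat the output as ballpark figures only.

```python
import math


def pixels_per_frame(width_px, hfov_deg, turn_rate_deg_s, fps):
    """Approximate image shift per frame (in pixels) near the image centre."""
    # Pinhole model: focal length in pixels from image width and horizontal FOV.
    focal_px = (width_px / 2) / math.tan(math.radians(hfov_deg) / 2)
    # Rotation between two consecutive frames, in radians.
    angle_per_frame = math.radians(turn_rate_deg_s) / fps
    # Small-angle approximation: shift is roughly focal length times angle.
    return focal_px * angle_per_frame


# Assumed values: 1920 px wide sensor, 90 degree FOV, 300 deg/s head turn.
for fps in (24, 60, 240):
    shift = pixels_per_frame(1920, 90, 300, fps)
    print(f"{fps:>3} fps: about {shift:.0f} px of image motion per frame")
```

The shift scales inversely with frame rate, so a tracker fed by a 24fps camera has to search a window ten times wider than one fed at 240fps for the same motion.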

This is kinda what makes me laugh at the whole Oculus ordeal now. I know for a fact (know/chat with several Valve employees near daily) that Valve was very aware the camera approach was subpar; it was just one of the ideas at the time. They were already working on this lighthouse tech and were going to share it with Oculus, but then Palmer went for the moneybag, and Valve pretty much gave him the finger (for those unaware, Valve is trying real hard to become ‘Facebook for gamers’). Now Oculus is still trying to make something out of the camera approach (they just moved the cam from the headset to a ‘base station’), and even though they do apparently use a nice high speed camera now, it just has nothing on the lighthouse system: in the time Oculus gets your position once, the Vive gets it like 7 times.

Long story short, camera tracking for VR is not the way to go at this time. Proper high res, high speed cameras are still just too expensive; for the Oculus to have tracking as fast and precise as the Vive but with a camera, you’d be talking thousands of dollars.

Not saying Oculus is bad or anything btw, it’s just positioning itself differently/aimed at different people.

You can make the camera approach fast and computationally cheap. Jeri did it for castAR*: an FPGA/custom silicon listening directly on the camera input line (serial data) = no latency. You don’t have to wait and compute whole framebuffers, because you see spikes immediately at the time the IR tracker LED light hits the scanned pixel = almost instant location data with enough trackers in the scene.
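A software analogue of that idea, as a rough Python sketch: treat the sensor output as a serial stream of pixel values and report a bright spot the moment it arrives, instead of buffering a whole frame first. This is not the castAR design, just an illustration; the function name, the threshold and the toy data are all hypothetical.

```python
def bright_pixels(stream, width, threshold):
    """Yield (row, col) for each pixel above threshold, as soon as it is read."""
    for index, value in enumerate(stream):
        if value > threshold:
            # Position follows directly from the pixel's place in the stream.
            yield divmod(index, width)


# Toy 4-pixel-wide "sensor" read out row by row; two pixels are lit by IR LEDs.
pixels = [0, 0, 255, 0,
          0, 0, 0, 200]
for row, col in bright_pixels(iter(pixels), width=4, threshold=128):
    print(f"marker seen at row {row}, col {col}")
```

Because the generator never stores the frame, the delay for each marker is just the time it takes the readout to reach that pixel, which is the point the comment is making about hardware sitting on the data line.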

Precision is solved if you put the camera on your head/hand/wand and track the room. Tracking a few small LEDs on a headset with a camera on the desk is a total fail and requires stupid resolutions = bad latency.

* castar :/ proof VC is a cancer, I wager a guess this will never launch, VCs are too scared to release product ‘not good enough’ because it would influence valuation, so they will sit on this until it becomes obsolete or until they can flip whole company to a bigger sucker. Poor Jeri, millionaire on paper :(

According to the PDF, the camera they used is called a Matrix Vision BlueFox. That’s one of those high-dollar industrial items where the manufacturer’s site doesn’t even list a price, just a link to get a quote. Wah wah.

It looks like it might be this one: http://www.globalspec.com/FeaturedProducts/Detail/DigitalNetworkVision/mvBlueFOXIGC_super_compact_USB_20_camera/169148/0 Only $375, not as bad as I expected.

I haven’t been this impressed with tech in so long, this is utterly fascinating.

All I can think of is imagine what it will do to VR, perhaps I’m just not creative enough, but still.

Valve’s lighthouse is wonderful as to how low demanding it is, but configuration in a diy setting’s a bit iffy.

If you can just stick a few high fps cameras on handheld controllers, that’d make DIY setups much easier.

It won’t do anything for VR because the BOM alone is >$100 = end price would be ~$300.

The brilliance of Valve’s tracker is its simplicity; it’s extremely cheap to make one.

I’ve been mulling over how hard it would be to DIY a lighthouse for months now. It’s utterly simple, yet from a DIYer’s perspective a PlayStation Eye and some duct tape is a little easier than a custom made case with accurately positioned photodiodes, plus software with an accurate internal model of those diodes.

I still can’t wait till we see the first diy lighthouse setup.

(Also might be nice for cardboard style vr, with some phones and their built in 240fps cameras)

I wouldn’t write it off entirely just yet.

Impressive, and awesome to see it available in ROS

Oh, a ROS dependency? Oh … goody.

That just implies that it will be largely inaccessible to most people, and will require hundreds of hours of hacking at an expert level just to get it to function. All you have to do is install all the packages, set environment variables, run mixed build system using catkin and legacy make, use two different tools to resolve dependencies between packages, then also have to solve some dependencies manually, likely have to roll back to another branch of a particular package, or start getting packages from the latest git repos, and have such a dependency hell that you’ll have to pretty much rebuild all of ROS from source, use a mix of packages from the past 3 “releases” of ROS, must run on exactly Ubuntu 14.04 directly on hardware (not a VM), custom author a .launch file, get a 5-layer deep tree of directory structures all correct, host your own private wifi network, have local DNS working, and have exactly the same camera as them or error messages will flood the logs.

Other than that … awesome!