Retrocomputing is an enjoyable and educational pursuit and, of course, there are a variety of emulators that let you use and program a slew of old computers. However, there’s something attractive about avoiding booting a modern operating system and then emulating an older system on top of it. Part of it is just aesthetics, and of course the real retrocomputing happens on retro hardware. However, as a practical matter, retrocomputers break, and with emulation, you’d assume that CPU cycles spent on the host operating system (and other programs running in the background) take away from the target retrocomputer.

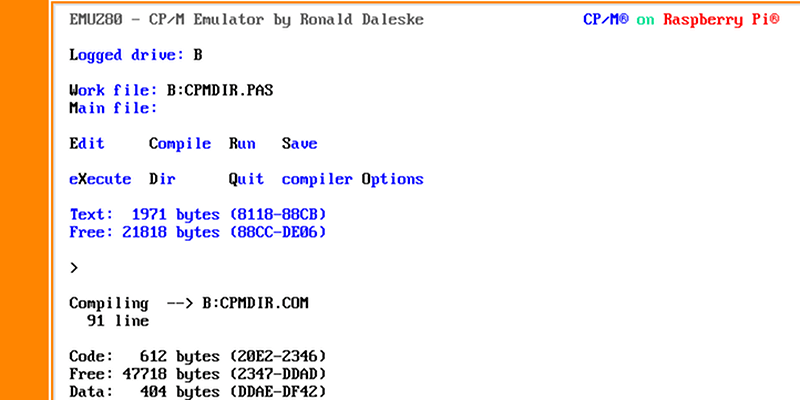

If you want to try booting a “bare metal” Z80 emulator with CP/M on a Raspberry Pi, you can try EMUZ80 RPI. The files reside on an SD card and the Pi boots them directly, avoiding any Linux OS (like Raspbian). It’s available for the Raspberry Pi Model B, A+, and the Raspberry Pi 2 Model B. Unlike the significant boot times of the standard Linux distros on the earliest models of Pi, you can boot into CP/M in just five seconds. Just like the old days.

The secret to this development is an open source system known as Ultibo, a framework based on Free Pascal which allows you to create bare metal applications for the Raspberry Pi. The choice of Free Pascal will delight some and annoy others, depending on your predilections. Ultibo is still very much in active development, but the most common functions are already there; you can write to the framebuffer, read USB keyboards, and write to a serial port. That’s all you really need to make your own emulator or write your own Doom clone. You can see a video about Ultibo (the first of a series) below.

We’ve covered other bare-metal projects in the past. If you’d rather go old school, you could try reproducing the Rum 80.

Interesting concept, though pi should be plenty fast enough to emulate z80 on top of OS. However, 3rd or 4th gen console emulation on it might be helped a lot by this.

True, you could run this in Raspbian, but then, when you turn it on, you have to wait for the OS to load.

You could also replace a 555 timer with a tiny AVR that costs about as much, but I will leave the aesthetics of either choice as a discussion for later.

Well I’d be a little more impressed to see a native port of CP/M to AVR, but since getting source to port any/everything else you’d want to run would be a PITA, I guess there isn’t a whole lot of point.

I hope to see bare metal classic computer emulators emerging from this! TRS-80s, ZX-81s, MSX and why not Amigas and MACs!

But then an emulator is going to be an OS anyway.

But since it’s Hallowe’en soon, for real horror, get an android, install DosBox, run linux on dosbox, run wine on linux, run winUAE on wine, run amiga c64 emulator, inception!!!!111oneone.

Are you sure about the Linux-on-DOSbox part?

http://puppylinux.org/wikka/Pup4DOS

That, and other ways. Just bear in mind current mainline kernels won’t run because they require PXE, i.e. Pentium 2 or better.

@Dan#942164212

Sure, loadlin.exe has been around for two decades but is DOSbox capable of providing proper environment?

@RW

PXE like in “preboot execution environment”? As far as I can tell 80486 is enough (i386 support was yanked from v3.8).

Whoops I mean PAE..

https://en.wikipedia.org/wiki/Physical_Address_Extension

You may be able to use the right compile options and run it, but you’re probably not getting a ready compiled recent kernel very easily that will run.

Yes, of course, but it will be ready to work in a fraction of a second, just like the real machines.

Back in the day, when the OS was not in user’s way. DOS. Today’s OSes think they own the computer.

I wonder why most fast action games were booters, until mid 90s when DOS4GW began to be used…. must be nothing to do with the fact that DOS was in the way.

I don’t remember these “booters” you mention. Can you provide some examples please?

https://en.wikipedia.org/wiki/List_of_PC_booter_games

Well, back in the day when you played a game on an Apple ][, C-64, VIC-20, etc; and booted up a game, it took over the entire computer. It bypassed a lot of the system ROMs to do its own thing for both performance and security reasons. Some games would install their own disk controller software to tweak settings to make it harder for people to copy games. On the C-64 this was done using the 1541 (as an aside, how can I remember model numbers like this for years, but forget the name of people I work with in my office?) disk drive which had its own controller in there, which would be tweaked to do funky things to sector counts, etc. On the Apple ][ it was even simpler because the AppleOS did all the disk controlling, the drives were quite dumb.

One of the early Borland successes was Sidekick, an early TSR (Terminate and Stay Resident) program which let you run your main application, but then hit a hotkey to get into Sidekick to take notes, run a calculator, etc. I forget all the things it let you do. But this just shows how most early desktop computers were quite limited due to the OS not really supporting multitasking at all.

The AmigaOS was one of the early consumer OSes to support this, though it was cooperative multitasking, since programs could still stomp all over memory if they weren’t careful. Unix and other more commercial or bigger system OSes had hardware support for memory protection and could offer true multitasking from the get go, though of course some dialects of Unix would drop that if the hardware didn’t support it.

But Unix/Linux/Minix/etc all really didn’t take off until the advent of the Intel 386 with memory protection support built into the chip, which made them much much much more robust for running multiple processes at the same time and not having quite as much chance of bringing everything to a screeching halt if something crapped all over memory.

Though fork() bombs were one way people could still screw up and kill systems….

John

Yeah, it was unfortunate that AmigaOS had no way of enforcing things other than “programmers honor”, so the Amiga environment as a whole got a rep for behaving badly, when it was down to badly behaving programs.

that is just total horse shit!!!

on an Apple II, Commodore anything, TRS-80, the “OS” is in ROM, you can’t “unload” it, it’s in a totally different area of addressing space.

Even on the IBM PC, the MS-DOS versions of Flight Simulator did this. The initial program load was done under MS-DOS, then the app just completely took over and reused the memory previously occupied by MS-DOS.

The Amiga was definitely not cooperative multitasking – it had a real-time executive with AmigaDOS on top of it as a process. The amazing demo in the 1.0 days was the bouncing beach ball running on a foreground screen while there were numerous app windows running in the back, visible by pulling down the front screen. None of the apps had code to relinquish the CPU – Exec just took it when you blocked on I/O or when you’d run long enough and it was another process’s turn.

MacOS _did_ have cooperative multitasking, where your application had to tell the OS toolbox that it was OK to let another process run for now. You had to work that in and “play nice” with other apps on the machine and it was up to the developer to decide when that was.

(tried to edit and could not)

The Amiga had preemptive multitasking (where cooperative multitasking means that apps have to give back the CPU for some other app to run), but it’s true that most Amigas had no MMU and no enforcement of security or memory boundaries, and only one address space, so, yes, the apps had to play nice and not screw with memory they hadn’t allocated lest they clobber another app, but that’s a different issue than preemptive/cooperative. Neither were all the memory requests gathered in one nice list, so unlike UNIX, AmigaDOS programs had to free up all requested memory or it would never get returned to the pool. We spent a lot of time tracking down memory leaks that in UNIX were solved by stopping the process and spawning a new one – memory clean!
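For anyone following the cooperative vs. preemptive argument, here is a minimal Python sketch of the cooperative side only, using generators as stand-ins for applications. The names `task` and `run_round_robin` are made up for illustration and do not come from any real OS. The point is that the scheduler only regains control at a `yield`; a task that never yields would hang everything, which is exactly what a preemptive executive avoids by taking the CPU back on a timer.

```python
from collections import deque

def task(name, steps, log):
    """A cooperative task: it runs until it chooses to yield."""
    for i in range(steps):
        log.append(f"{name}{i}")
        yield  # voluntarily hand the CPU back to the scheduler

def run_round_robin(tasks):
    """Run generator-based tasks in turn until all have finished."""
    ready = deque(tasks)
    while ready:
        current = ready.popleft()
        try:
            next(current)          # runs until the task's next yield
            ready.append(current)  # still alive: back of the queue
        except StopIteration:
            pass                   # task finished; drop it

log = []
run_round_robin([task("A", 2, log), task("B", 3, log)])
print(log)  # ['A0', 'B0', 'A1', 'B1', 'B2']
```

Note that nothing here says anything about memory protection, which is the separate issue raised in the comment above.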

I remember you would have lost a few hundred K of chip ram on an Amiga, after you’d been running a few things, there were utils to clean up.

The 80286 had protected mode and *could* address more RAM than the 8088/8087 but Intel screwed up the design to where it could not switch out of protected mode without a hard reset. Never fixed that problem, just tossed it behind as they went on to the 80386.

A far better OS for the 286 could have been done by going into protected mode ASAP after booting then staying there. Of course all software running on it would have had to be able to operate in protected mode. That would have excluded all normal DOS software.

A protected mode GUI OS on a 286 would have required rebooting and dual booting to be able to run DOS software – but would not have required a reboot to first boot to DOS, run some stuff there, then launch to the GUI OS. That would have just cleared all the DOS stuff out of memory while taking over the PC.

Had Microsoft decided to make Windows 3.1 a pure protected mode system 100% 16 bit (with extended 32 bit support on a 386 or 486), kicking DOS program support to the curb so it didn’t have to run in Standard Mode on a 286, the history of Windows would have been far different. Instead, Microsoft held onto full DOS and 16 bit Windows program support for more than another decade. Some DOS programs (and some 16 bit Windows ones) can still be run in current 32 bit versions of Windows.

Even better would have been a pure 32 bit only Windows for the 386 and later. Windows 95 still supported 16 bit Windows 3.1x printer drivers. (Dunno if Win 98 did, never tried that.)

Complete separation from that ‘legacy’ support has only happened in 64 bit versions of Windows.

Aw, heck. I can’t count the number of times I played King’s Quest on my school’s PCjr. Wore the 5.25″ floppy disks right out!

A place I worked at had a PCjr, one of the guys couldn’t figure out why the keyboard had AA batteries, when I told him there was an IR link between the keyboard and system unit, he had to set it up and boot it to see for himself!!!

the (GROAN) MFM harddrive “upgrade” for the jr was a bitch, right up there with the Panasonic “portable” HDD “upgrade”

I got offered a free PCJr system unit in about ’89, turned it down when I realised it needed about $2000 of Jr specific crap to actually make it useful.

It was mostly to do with the hardware’s 640 K limitation, not DOS. Many games wanted to use as much of the memory below 640 K as possible. For years afterwards, many games would actually require running in DOS/DOS mode to get more performance out of bogged-down Win95/98 systems. DOS was fast.

It’s pretty much guaranteed that games released in the 95/98 era were using a flat addressing mode extender like DOS4GW to get DOS out of the way when you “used DOS”. DOS was less in the way than Windows, sure, but that just made it easier to get out of the way almost completely. So when we say “DOS was faster for games” what we really mean is, “DOS could be kicked out of the way with an extender so the game could benefit from no OS limitations.”

Yeah. It is difficult to kick Win or Lin out of the way for such purposes. They are holding onto the hardware like leeches.

There was though the benefit that windows virtual memory looked enough like hardware that you could test stuff that needed more RAM under DOS than was physically installed. It was stupid slow and you’d hit a brick wall every time it swapped, but you could do it for testing or giggles. If you’re feeling particularly masochistic for example you could try running DN3D under win3.11 on a 4MB RAM machine with 8MB virtual memory. It might even be marginally playable with a fast CPU and HDD.

lol

seriously??

when did DOS ever “get in the way”????

our ages are showing, yours and mine!

DOS is only there for booting DOS and RWTS(read, write, track, sector)

the reason those of us who still boot DOS on a x86 machine still do so, is because we want all the machine.

if you’re still running DR-DOS or freeDOS, you will understand, minimal OS footprint and total control.

Am I the only “older guy” here who wonders just how fast a “modern machine” would be if we could still type BASIC/Forth into the command line??

Am I the only one that misses the days when we could turn on a machine (not boot, just turn the fucker ON) and type something like

?SIN(37.456)*10

you “boyz” that think “DOS got in the way” have no clue…

You are partly right. Truth is though, unless you have multitasking support, your CPU is spending most of its time waiting for you at the command prompt. Also that 640K memory limitation was a real nightmare. Sure you could use something like QEMM or 386Max to give you more RAM but seriously you might as well boot into linux without a GUI.

I’m a bit rusty but that sounds correct, DOS works in the kernel space of the 86 chip (if it has one) and was really just a collection of disk, memory and screen functions you could call via INT commands and a simple user shell command.com.

if you compiled while(1); and overwrote command.com with it your CPU would run the loop at 100% and nothing else apart from the DOS timer interrupt (which you could switch off) would be running. So assuming you were/are happy with 640KB it was perfect.

You guys seem to be mixing up BIOS and DOS functions. INTs were BIOS calls.

int 21 bro.

DOS was barely an operating system as we understand it today. More of a program loader and a thin and easily ignored standard library. It’s vastly smaller and less functional than, say, modern EFI firmware.

The OS’s of today are like big government. They get in the way, they tax your CPU, and eat up valuable resources that could be used to help you do your job.

lol

I like where you are coming from!!!

I agree.

And provide no: console, disk, filesystem, network, graphics, sound etc. whatsoever.

wish someone would get OS400 running on something other than the base hardware of an AS400; getting more people learning that dead system is valuable

Whatchootalkingaboutwillis? iSeries is running strong… http://pub400.com/ seems fairly alive, and by looking into some office windows in the “city” it looks like it’s still running …

Calling the Machine Room “a city” because of the towers in neat rows like skyscrapers…

I never heard of one referred that way before. I like it…

Oops, I didn’t notice your reply. No idea if you’ll read this either. Ah well, I can’t let a correction go to waste ;-)

“The city” refers to the City of London ( https://en.wikipedia.org/wiki/City_of_London#Headquarters ), the traditional place for banking/financial services in the UK.

Fun fact, my first proper programming gig was writing a real-time production control system for … IBM, specifically their hard disk manufacturing plant in the UK, which is now under the ownership of Toshiba (IIRC). PL/1 and DDS files … I miss those days!

As for the machine room being a city because it looks like a collection of sky scrapers because of the racks of computers is kind of poetic. I might use that! **grin**

IBM sold their disk drive manufacturing to Hitachi, it became Hitachi Global Systems Tech (I might be wrong about the wording.)

When Western Digital bought it out from Hitachi, they renamed the division HGST.

I like things like this and will have to try this out after work/weekend. I was looking everywhere for bare-metal emulation systems like this but: google and friends… They never revealed anything!

Now just waiting for at least a proof of concept: A bare-metal minimalist x86 platform emulator with support for 8086 and maybe through to the 486.

8086 emulator https://www.raspberrypi.org/blog/8086tiny-free-pc-xt-emulator/

486 emulator http://rpix86.patrickaalto.com/

It should be easy. You just need to map every i486 command and register to corresponding command and register of RPi CPU. And because RPi has 64-bit quad-core CPU that runs twelve times faster than fastest i486, it should be quite fast emulation. One might even consider dedicating one of cores to perform all the IO, disk access and instruction fetching operations in such a way, that each of remaining cores deals with each third instruction, so the load is distributed…

Not quite that easy. The arm CPU is going to be missing a crap load of 486 equivalent instructions, that’s what RISC means, and the 486 will have instructions that can only be emulated in many instructions on the arm, that’s what CISC means, and then you’ll have timing issues between instructions that are meant to execute in one or 2 clocks and don’t, and ones that do. So to be timing compatible, you’ll have to slow it down to the speed that the slowest emulated 486 instruction can be emulated in.

it’s not that easy

I’ve been running “Warcraft Orcs v Humans” on my Raspberry Pi, it kinda, sorta feels like running on a 33 MHz 386, but top says the process is running at 95%, on an 800 MHz ARM.

that is a whole lot of something going on!

back in the day I wrote a disassembler for what ever processor, running on my Mac, under 6.0.7.

“all you had to do” was build an instruction set list in resedit, it took me a few hours to do the 6502 and 6809 instruction sets.

It took a couple of days to do the Z80 instruction set.

$00 to $ff got used up, then they started using 16 bit instructions, on a 6502 there are 157 discrete instructions, with 14 addressing modes.

the tricky bit with emulation is addressing modes.

I probably went about it the wrong way with my emulator, but some of the 6502’s addressing modes made things really tricky.
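To show why the 6502's addressing modes get fiddly in an emulator, here is a small Python sketch of the two indirect zero-page modes, `(zp,X)` and `(zp),Y`. The function names and the flat `bytearray` memory are assumptions for illustration; the wrap-around inside page zero is the detail that tends to bite.

```python
def indexed_indirect(mem, zp, x):
    """(zp,X): add X to the zero-page operand, wrapping within page zero,
    then read a 16-bit little-endian pointer from that location."""
    ptr = (zp + x) & 0xFF
    lo = mem[ptr]
    hi = mem[(ptr + 1) & 0xFF]  # the pointer fetch also wraps in page zero
    return lo | (hi << 8)

def indirect_indexed(mem, zp, y):
    """(zp),Y: read a 16-bit pointer from zero page, then add Y to it."""
    lo = mem[zp]
    hi = mem[(zp + 1) & 0xFF]
    return ((lo | (hi << 8)) + y) & 0xFFFF

mem = bytearray(65536)
mem[0x24] = 0x00  # low byte of pointer stored at $24
mem[0x25] = 0x80  # high byte, so the pointer is $8000
print(hex(indexed_indirect(mem, 0x20, 0x04)))  # 0x8000
print(hex(indirect_indexed(mem, 0x24, 0x10)))  # 0x8010
```

The two modes look almost identical in assembly listings, but the index register is applied before the pointer fetch in one and after it in the other.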

I got a job where I had to reverse engineer a Z80 based plotter controller, that kinda, sorta spoke RDGL.

I dumped the ROM, then added the instruction set for this weird Z80 variant into my disassembler, there were a few hundred discrete instructions, with (maybe) 6 addressing modes.

maybe I’m thinking of it the wrong way, but getting some x86 code into an area of memory, then running it through an software instruction decoder/executor, which is how i did it, is pretty time consuming.
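For reference, the "software instruction decoder/executor" approach looks roughly like the Python sketch below. It is a toy, not the EMUZ80 code: it handles only seven Z80 opcodes, tracks only the zero flag, and the names `run` and `PROGRAM` are made up for illustration. Even so, it shows why this style is slow: every emulated instruction costs a fetch, a chain of comparisons, and several host operations.

```python
def run(program, max_steps=1000):
    """Interpret a tiny Z80 subset; returns (A, B) when HALT is reached."""
    mem = bytearray(65536)
    mem[:len(program)] = program
    a = b = pc = 0
    zero = False  # only the Z flag is modelled in this sketch
    for _ in range(max_steps):
        op = mem[pc]                      # fetch
        pc = (pc + 1) & 0xFFFF
        if op == 0x00:                    # NOP
            pass
        elif op == 0x3E:                  # LD A,n
            a = mem[pc]
            pc = (pc + 1) & 0xFFFF
        elif op == 0x06:                  # LD B,n
            b = mem[pc]
            pc = (pc + 1) & 0xFFFF
        elif op == 0x80:                  # ADD A,B
            a = (a + b) & 0xFF
            zero = (a == 0)
        elif op == 0x05:                  # DEC B
            b = (b - 1) & 0xFF
            zero = (b == 0)
        elif op == 0x20:                  # JR NZ,e (signed 8-bit offset)
            d = mem[pc]
            pc = (pc + 1) & 0xFFFF
            if d >= 0x80:
                d -= 0x100
            if not zero:
                pc = (pc + d) & 0xFFFF
        elif op == 0x76:                  # HALT
            return a, b
        else:
            raise ValueError(f"unimplemented opcode {op:#04x}")
    raise RuntimeError("step limit reached without HALT")

# LD A,0 / LD B,5 / loop: ADD A,B / DEC B / JR NZ,loop / HALT
PROGRAM = bytes([0x3E, 0x00, 0x06, 0x05, 0x80, 0x05, 0x20, 0xFC, 0x76])
print(run(PROGRAM))  # (15, 0)
```

A real emulator would normally replace the `if`/`elif` chain with a dispatch table and model the remaining flags and cycle counts, which is where most of the work goes.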

Where there’s enough speed, there’s a way. Back before CPU’s hit 1 Ghz someone wrote a fully functional NES emulator in Quick BASIC (not the QBASIC that shipped with MS-DOS). It was horribly slow, the author said it would take a 1 Ghz CPU to get full speed.

Sure’nuff, when the first 1 Ghz CPU was released, someone tried that emulator and got it to run at pretty much full NES speed.

Dunno where that one has gone, but here’s one done in QB64, with some adjustments to make it run in Quick BASIC.

http://www.qb64.net/forum/index.php?topic=11869.0

I agree to a major extent. It’s definitely not easy, that’s for sure.

However, modern X86 is a (hardware though) CISC to RISC converter with a RISC core and uses microcode for optimizations.

I haven’t a clue what will make the emulator/translator hard to do at bare-bones level. The biggest speedup would be using one core for translation and pipelining of the CISC set and the other core for the RISC. The hardest part I suspect will be the actual pipelining of the instruction set (Remember Pentium 4, Even the pro’s struggle sometimes) and also the need to make a custom RTOS to interface the hardware with the simulated environment.

PS, to D, I was thinking more standalone bootable RTOS based translator program (hence bare-metal). However 8086-Tiny-Free is interesting out of the two.

P4 design brief

1) Pipeline up the wazoo.

2) ???

3) Profit!

CP/M—wow! That brings back memories. Could I run WordStar on a RPi?

And SuperCalc!

I wonder if there is any meaningful MMU support in the “bare metal” environment provided by ultibo? I’ll have to look into it. (And dust off my Pascal brain cells which still retain a handful of dialect-variations from UCSD Pascal on P-System to Think Pascal on 680×0 based Macs to Borland Pascal on 80×86 based DOS machines). This probably dates me but I haven’t written anything in Pascal since I took the AP exam for CS in high school (back then AP CS was universally taught in Pascal).

I run Free Pascal on all my machines, Raspbian, Debian, Win98 and XP

Ever do anything with Pascal on a TI-99/4A?

Feature request – Please add audio of 5.25 floppy accesses. Otherwise it just doesn’t feel right :-)

I recently bought a replica of the old IBM Model M keyboards and while the feel is very close to the real thing the sound is completely different and not very satisfying.

Pascal – a bad idea whose time never came.

really???

the difference between 4GLs is pretty trivial.

You poor ignorant child.

what I refer to as “dinner party computer science”

it goes something like

“Pascal gives you an abstraction of the machine, C gives you the machine”

which is total crap, with a 4GL, the stack starts at the bottom memory, goes upwards, the “heap” starts at the top of memory goes downwards, when they hit, it’s bad!!!

I don’t remember Pascal ever being a 4GL as it compiles direct to machine code same as C (hence TFA)

I think the idea is, that properly implemented Pascal you shouldn’t have to worry about it, C you probably do. So depends on how good or bad or close to standard the compiler is.

Some compilers for Pascal “compiled” to p-code. I thought the pascal compilers back in the day all did this. That’s one reason I stopped using Pascal. ( That and the fact that a program I wrote in Basic kicked the Pascal version in the pants)

I am afraid most people writing about OSes “getting in the way” don’t quite get how operating systems work. Take for example Linux. You can boot it directly into a shell by adding init=/bin/bash in a bootloader. What you get is a single process (except for several kernel threads depending on supported options) running and the kernel acts as a library supporting filesystem, network etc. The only difference between library functions like printf(3) and syscalls like open(2) is that the former cannot access all the resources (memory, devices) in the system. The other tiny difference between DOS and a “single process” Linux is the scheduler running every few milliseconds which of course doesn’t have much to do.

This difference becomes significant when you start some more processes like network servers or printing services because they need to use some CPU cycles.

nope!

with DOS, it was all about the “disk”, in particular RWTS, read, write, track, sector.

the “shell” as such was in BIOS, when you turned on the machine you could write BASIC code, on an Apple II, you could type “call -151” and you got the “machine code” monitor.

it used to be that with no disk interface you could do stuff, ok, there was no way to save a program, except maybe cassette (groan), but you could do “stuff”.

on a “modern” machine, without a disk interface, the machine is basically a paper weight/room heater.

even with PS2 machines you still got a basic command line and could type “cload” and the dopey thing would still go looking for a cassette port.

with the original IBM PC/XT/AT you got a 2 ring binder manual with the BIOS listing, I haven’t tried it lately but all those BIOS calls still work, even under XP, edlin and debug are still there and work.

I’ve built quite a few 286 and 386 systems where I pulled the BIOS ROM, culled the “cruft”, burnt my own code onto an EPROM to build a “turnkey” system.

with DOS, DR-DOS, freeDOS there is nothing happening until you run/execute something

> with DOS, it was all about the “disk”, in particular RWTS, read, write, track, sector.

It was definitely more than that: https://en.wikipedia.org/wiki/MS-DOS_API

I can’t say anything about BASIC on PC because my only experience with it was the “NO ROM BASIC SYSTEM HALTED” message when, AFAIR, I tried to run a game (DOOM, Heretic?) on an underspecced machine.

> with DOS, DR-DOS, freeDOS there is nothing happening until you run/execute something

Or press a key, or trigger any other supported interrupt (e.g. receive a data packet over an ethernet card). Without multitasking, the timer interrupt is not “supported”, of course. On the other hand, if there is only one process (ok, today there may be some kernel threads too) the scheduler doesn’t have much to do, so on any modern hardware the overhead is negligible.

ROM BASIC was included in most of the Model 5150 IBM PC’s. There were four ROM chips on the motherboard. BIOS in two, BASIC in the other two.

If the PC was turned on without a bootable floppy or fixed disk, it would go to ROM BASIC, which by default used the cassette port (next to the keyboard port). I don’t recall if ROM BASIC could use the floppy on a 5150. It could on a PCjr. There were far better ‘soft’ BASICs available and for the PCjr there was a cartridge BASIC with more features than its built in BASIC.

The PC/XT also supported ROM BASIC, but most of them shipped with the BASIC chips not installed. IIRC IBM changed it from a standard feature to an option. Perhaps IBM expected that people upgrading from a PC to an XT would pull the chips and transfer them?

For a long time, PC clones kept the BIOS call to go to BASIC even though the hardware had no provision to install BASIC on chips, so turning them on without a bootable disk would get the NO ROM BASIC message.

There’s a hack idea. Take the 5150 BASIC ROMs and put them onto a PCI or PCIe board in a way that the motherboard will see it as a bootable device and boot to BASIC when there’s nothing else bootable.

edlin and debug are included* with Windows 10 x64. WTF they are, who knows? They’re 16 bit and won’t run.

*Unless they’re leftover on this box from XP, somehow surviving through using PC Mover to migrate everything from XP x32 to 7 Ultimate x64 then the free upgrade to 10.

It all depends what you are doing. For most things I want to do, linux absolutely gets in the way. Not that I don’t appreciate linux as a desktop, but as a platform for embedded design — unless you are building a network appliance or something of the sort, it is hard to imagine a worse choice. A classic and concrete example would be accessing gpio or adc through the filesystem on a BBB – fine if you want to turn on a desk lamp when a network packet arrives or catch a single button press or something, but when you start wanting to do things at rates beyond 1 Hz things get pretty ugly.
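As a rough illustration of what "accessing gpio through the filesystem" costs, here is a Python sketch that toggles a sysfs-style `value` file and reports the achieved rate. It assumes the legacy Linux sysfs GPIO layout, where an exported pin has a `value` file under `/sys/class/gpio/`; the function name `toggle_via_file` is made up, and the demo writes to a temporary file instead of a real pin so it runs anywhere. Numbers measured this way say nothing about a real BBB; they only show that every level change is a full open/write/close round trip through the kernel.

```python
import os
import tempfile
import time

def toggle_via_file(value_path, cycles):
    """Write 1 then 0 to a sysfs-style 'value' file `cycles` times.
    Returns the achieved toggle rate in cycles per second."""
    start = time.perf_counter()
    for _ in range(cycles):
        for level in ("1", "0"):
            # Each level change is a separate open/write/close sequence.
            with open(value_path, "w") as f:
                f.write(level)
    elapsed = time.perf_counter() - start
    return cycles / elapsed if elapsed > 0 else float("inf")

# Demo against a temporary file standing in for a pin's value file.
# On real hardware the path would be something like
# /sys/class/gpio/gpio60/value (pin number is a hypothetical example).
fd, demo_path = tempfile.mkstemp()
os.close(fd)
try:
    rate = toggle_via_file(demo_path, 1000)
    print(f"{rate:.0f} toggles per second through the filesystem")
finally:
    os.remove(demo_path)
```

Holding the file open and rewriting it would cut some of the overhead, but jitter from the scheduler remains, which is the point being made about real-time work.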

Have you tried running in a “single process mode” with init= on kernel’s command line set to your programme?

Have you tried increasing or decreasing HZ in the kernel config?

Have you tried accessing the devices with inb(2)/outb(2) functions or some inline assembly code?

Have you tried writing a kernel module to do the time-critical job?

I’ve never touched BBB nor tried to do time-critical tasks on any other ARM board and I am really curious.

All these are tire patches on a square peg that is trying to be forced into a round hole. No inb/outb on the ARM, sorry. :-) But ultimately you are talking here about writing your own device drivers, which is a method I have long advocated for solving some real-time issues with linux. You are looking at an impossible fight trying to access hardware from user space via inline assembly so it would be kernel modules for sure. But why go this road at all. Use a real time OS of some kind or get down on the bare metal. The main reason to use linux is because you want things like a solid network stack, your favorite interpreted language, and all the other leather seats and fine upholstery. I am just saying linux shouldn’t be the only tool in your box. A system like ultibo here might be just the thing, but I will get a cramp holding my nose due to the Pascal …..

PIP of a blog posting.

Gary Kildall…..Pascal MT+….. Z80 Assembler

Misty water-colored memories

Of the way we were

Every few years, I get an itch to turn on one of my old computers – KIM, ELF, etc. or load an emulator for ones I used to use, CP/M / TRS, etc… load a program, run it, and then sit for a while wondering why I did that.

I knew I was going to be disappointed, but I did it anyway.

Was installing Multi-user Concurrent DRDOS in small businesses before GUIs took off. Could run one copy of Word Perfect and Group Wise to 12 terminals on a 386…. Very cost effective.