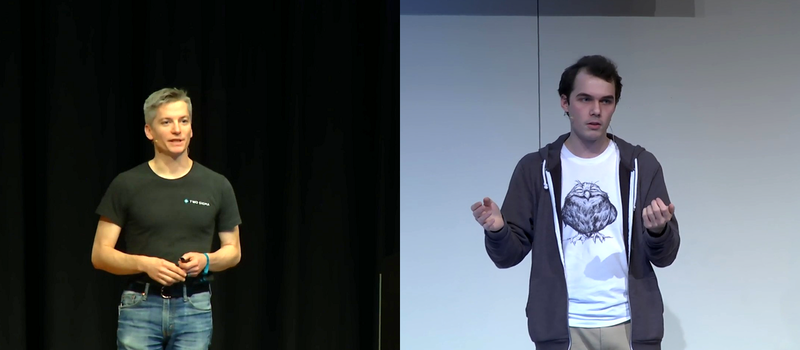

It’s a sign of the times: the first day of the 33rd Chaos Communication Congress (33C3) included two talks about making sure that your own computer isn’t being turned against you. One is practical and usable today; the other is idealistic and still in the idea stage.

In the first talk, [Trammell Hudson] presented his Heads open-source firmware bootloader and minimal Linux for laptops and servers. The name is a gag: the Tails Linux distribution lets you operate without leaving any trace, while Heads lets you run a system that you can be reasonably sure is secure.

It uses coreboot, kexec, and QubesOS, cutting off BIOS-based hacking tools at the root. If you’re worried about sketchy BIOS rootkits, this is a solution. (And if you think that this is paranoia, you haven’t been following the news in the last few years, and probably need to watch this talk.) [Trammell]’s Heads distribution is a collection of the best tools currently available, and it’s something you can do now, although it’s not going to be easy.
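
The article doesn’t say how Heads checks that nothing in the boot chain has changed. One common building block for this kind of tamper-evident firmware is a measured-boot hash chain in the style of a TPM PCR “extend”: each stage is hashed into a running register, so altering any stage, or their order, changes the final value. The sketch below shows only that generic technique; it is not code from Heads, and the stage images are placeholders.

```python
import hashlib

# A SHA-256 measurement register starts out as 32 zero bytes at reset.
PCR_SIZE = hashlib.sha256().digest_size


def extend(pcr: bytes, component: bytes) -> bytes:
    """Fold one boot component into the running measurement.

    TPM-style extend: new = SHA-256(old || SHA-256(component)).
    """
    measurement = hashlib.sha256(component).digest()
    return hashlib.sha256(pcr + measurement).digest()


def measure_chain(components: list[bytes]) -> bytes:
    """Measure each stage in boot order, starting from a zeroed register."""
    pcr = bytes(PCR_SIZE)
    for component in components:
        pcr = extend(pcr, component)
    return pcr


if __name__ == "__main__":
    # Hypothetical stage images standing in for firmware, kernel, and initrd.
    good = [b"coreboot-image", b"linux-kernel", b"initrd"]
    tampered = [b"coreboot-image-with-rootkit", b"linux-kernel", b"initrd"]
    expected = measure_chain(good)  # recorded on a known-clean boot
    print(measure_chain(good) == expected)      # True
    print(measure_chain(tampered) == expected)  # False
```

Because the register can only be extended, never set directly, a compromised later stage cannot erase the evidence of a compromised earlier one.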

Carrying out the ideas fleshed out in the second talk is even harder — in fact, impossible at the moment. But that’s not to say that it’s not a neat idea. [Jaseg] starts out with the premise that the CPU itself is not to be trusted. Again, this is sadly not so far-fetched these days. Non-open blobs of firmware abound, and if you’re really concerned with the privacy of your communications, you don’t want the CPU (or Intel’s management engine) to get its hands on your plaintext.

[Jaseg]’s solution is to interpose a device, probably built around a reasonably powerful FPGA running open-source, inspectable code, between the CPU and the screen and keyboard. For critical text, such as e-mail, the CPU will deal only in ciphertext. The FPGA, via graphics cues, will know which region of the screen is to be decrypted, and will send the plaintext out to the screen directly. Unless someone’s physically between the FPGA and your screen or keyboard, this should be unsniffable.
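
How the FPGA spots the region to decrypt is left open here (“graphics cues”). Purely as an illustration, assume the host draws a border in a reserved color (the hypothetical `MARKER` value below) around the ciphertext; the interposer then only has to find that border’s bounding box in the frame. The framebuffer is modelled as a list of pixel rows; a real device would do this on the pixel stream in logic, not in Python.

```python
from typing import Optional

# Hypothetical reserved color the host paints around ciphertext it wants
# the interposer to decrypt; the real cue format is not specified.
MARKER = 0xFF00FF

Box = tuple[int, int, int, int]  # (top, left, bottom, right), inclusive


def find_marked_region(frame: list[list[int]]) -> Optional[Box]:
    """Return the bounding box of all marker-colored pixels, or None."""
    rows = [y for y, row in enumerate(frame) if MARKER in row]
    if not rows:
        return None
    cols = [x for row in frame for x, px in enumerate(row) if px == MARKER]
    return (min(rows), min(cols), max(rows), max(cols))


if __name__ == "__main__":
    frame = [[0] * 6 for _ in range(4)]
    # Draw a rectangular marker border from (0, 1) to (3, 4).
    for x in range(1, 5):
        frame[0][x] = frame[3][x] = MARKER
    for y in range(4):
        frame[y][1] = frame[y][4] = MARKER
    print(find_marked_region(frame))  # (0, 1, 3, 4)
```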

As with all early-stage ideas, the devil will be in the details here. For instance, it’s not yet worked out how the device would know when keystrokes need to be encrypted before being passed on to the CPU. But the idea is very interesting, and it places the trust boundary about as close to the user as possible, at input and output.

If you cannot trust the CPU, why can you trust the FPGA?

Well, presumably the FPGA would be easier to audit. Assuming you can take a microscope to the silicon, and your chosen model of FPGA doesn’t include anything too complex in the way of embedded controllers, etc., you can actually tell by eye that it’s what it claims to be.

> Well, presumably the FPGA would be easier to audit.

Bwahahahaha…. oh that’s a good one alright.

Despite what others have said, it SHOULD be easier to audit an FPGA — or at least most of it.

Modern FPGAs have a bunch of “extra (but necessary)” stuff added to them — processor cores, SERDES, block memories, multipliers, DRAM and network interfaces, etc. Those are pretty much like any other piece of silicon — you can’t easily tell if they have been compromised.

However, most of the area goes to the programmable logic part, and that should be dead easy. Every logic block should look the same. Everything should be nice and uniform. Any deviation from regularity should be suspect. I am not sure how the routing differs between the center of the chip and the edges, but that should be easy to figure out.
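
The “any deviation from regularity should be suspect” check can be sketched as a majority vote over imaged tiles. This assumes, optimistically, that each logic tile has already been cropped, aligned, and thresholded so that identical structures yield identical bytes; real micrographs are noisy and would need a fuzzier comparison. The tile data and function names are hypothetical, and edge tiles with different routing would need their own reference.

```python
import hashlib
from collections import Counter

Position = tuple[int, int]  # (row, column) of a tile in the die image


def tile_signature(pixels: bytes) -> str:
    """Reduce one normalized tile image to a comparable fingerprint."""
    return hashlib.sha256(pixels).hexdigest()


def suspicious_tiles(tiles: dict[Position, bytes]) -> list[Position]:
    """Return positions whose tile differs from the most common tile."""
    if not tiles:
        return []
    signatures = {pos: tile_signature(px) for pos, px in tiles.items()}
    reference, _count = Counter(signatures.values()).most_common(1)[0]
    return sorted(pos for pos, sig in signatures.items() if sig != reference)


if __name__ == "__main__":
    grid = {(r, c): b"LUT4+FF+routing" for r in range(3) for c in range(3)}
    grid[(1, 2)] = b"LUT4+FF+routing+extra"  # the odd one out
    print(suspicious_tiles(grid))  # [(1, 2)]
```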

Now, let’s assume that some random bad actor DID want to snoop on your FPGA by modifying the silicon. Guess what? HOW WOULD THEY DO THIS? On a processor, it is easy because you KNOW where the data is and what the address lines are. On an FPGA, each logic block serves its own purpose and has NO IDEA what its role in the big picture is. Using just a bitstream, it would take a human some effort to figure out where the address and data lines are hidden, but to automate this? Just by changing which pins control the address lines, you could move significant portions of the logic to completely different parts of the chip! This makes it impossible for an attacker to mount a generic attack that will work on any logic on the FPGA; the attack would have to be specific to particular loads.

Now, as I stated, the weak link is in the hard-coded IP. Maybe buy an FPGA without an embedded processor, or ignore the processor and roll your own in logic gates. For things that you do need (interface logic), you could scramble your data to make things harder. The Ethernet interface is one obvious weak spot.

Just my $0.02.

iCE40s fit these restrictions pretty well, although the BGA models only go up to 8k 4-input LUTs, and the QFP models to 1k/4k.

The problem with both of these is that you are going to be limited to DDR2 memory if you only use 16-bit-wide buses. The achievable clock rate drops depending on the logic width you need, and even narrower buses are limited to a 533 MHz maximum clock (assuming perfect routing and the highest-rated chips).

I haven’t found a simpler device available today, and as mentioned in previous Hackaday articles, they have an open-source toolchain. If you can make do with the extremely limited number of gates available and chain them together, you could get performance acceptable for a general-purpose computer (barring multimedia and games) with auditable code from the ground up.

The long-term solution still requires a series of independent fabs (geographically, politically, and financially independent) manufacturing and supporting the same designs. Ensuring transistor-perfect verification between chips would also require all the fabs to run essentially the same process, with process errata shared between them. Given that we are this far into the 21st century and it seems farther away today than it did 20 years ago, I will not hold my breath, at least not until Hackaday is running an article on fabbing 6502-sized hardware at home :)

They aren’t as impressive, but they are open source. So an FPGA will probably never beat a CPU on speed at a competitive price, but it can be peer reviewed, and people can decap and scan FPGAs to make sure that, on average, they aren’t tampered with. I’m more than willing to spend a couple hundred extra dollars to ensure my system is truly as secure as this concept.

Soft CPUs on FPGAs are worse, as the silicon itself leaves no trace of the loaded gate structure.

Also, some FPGAs tried to prevent this live hijacking by encrypting the external memory blocks, but that was eventually broken.

The obvious solution is to jettison the companies that compromised their own integrity by hiring active foreign and domestic agents. When Broadcom was sold to a US-based firm, it also became subject to the current surveillance laws rooted in Despotism:

https://www.youtube.com/watch?v=TaWSqboZr1w

> Well, presumably the FPGA would be easier to audit

FPGAs are as far from open source as it gets.

You can’t even write an open-source compiler for any modern FPGA… even the binary format is kept secret.

Xilinx in the past opened up the CLB binary information for some (then-current) FPGAs so dynamic stuff could be done in place. Virtex parts could even rewrite their own logic on the fly, IIRC. The part they kept completely proprietary was the routing. Aside from Lattice iCE, is none of this sort of information available anymore?

Because monitor cables don’t have an internet connection. Oh wait, HDMI, you sneaky little…

I guess anyone using ‘business’ hardware is OK, because all the monitors still seem to be VGA for some inexplicable reason. Maybe I just hang around in really outdated government departments.

EDID is easily exploited…

And unidirectional.

EDID can be bidirectional.

We have to reflash stale VGA monitors with a common fault: the bits stored in the EDID ROM fade over the course of about two years.

We don’t replace the ROMs because we want them to fail often enough that we can make money on them, yet not so quickly as to arouse suspicion of foul play. (That was our customer’s recommendation; their own customer pays them well without knowing.)

We flash them over the VGA cable (albeit via a black-box programmer).
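
The EDID data being reflashed here has a built-in sanity check: a 128-byte base block starts with the fixed header 00 FF FF FF FF FF FF 00, and its last byte is a checksum chosen so that all 128 bytes sum to zero modulo 256. A minimal sketch of checking a dumped block, and recomputing the checksum once the other bytes are restored, assuming you already have the bytes from the programmer; the function names are made up for illustration, and reading the ROM over DDC is out of scope.

```python
EDID_HEADER = bytes([0x00, 0xFF, 0xFF, 0xFF, 0xFF, 0xFF, 0xFF, 0x00])
BLOCK_SIZE = 128  # size of the EDID base block in bytes


def edid_block_ok(block: bytes) -> bool:
    """Check length, fixed header, and checksum of an EDID base block."""
    if len(block) != BLOCK_SIZE or block[:8] != EDID_HEADER:
        return False
    # Byte 127 is chosen so that all 128 bytes sum to 0 modulo 256.
    return sum(block) % 256 == 0


def fix_checksum(block: bytes) -> bytes:
    """Recompute byte 127 once bytes 0..126 have been restored."""
    body = block[:BLOCK_SIZE - 1]
    return body + bytes([-sum(body) % 256])


if __name__ == "__main__":
    good = fix_checksum(EDID_HEADER + bytes(120))  # placeholder contents
    faded = bytearray(good)
    faded[20] ^= 0x04  # simulate one bit that has faded
    print(edid_block_ok(good), edid_block_ok(bytes(faded)))  # True False
```

A single faded bit always breaks the sum, though several flipped bits can in principle cancel each other out.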

ROM that fades in two years? What absolute nonsense. Perhaps ROM from the 1950s, but come on.

There is such a thing as a bad batch; whether that was down to the ROMs themselves or how they were powered is a different matter altogether.

Also it only affected one brand and model of VGA monitor.

Just how things pan out sometimes.

Look at the fail articles for examples of things that should work but don’t.

All the recalls for things like, say, the Samsung Galaxy Note 7, Dell batteries, car recalls for misprogramming, the VW scandal, that DOA HDD someone got, Seagate’s whole range*, planes dropping out of the skies due to human error plus failures, blah, blah, blah, etc., so on, so forth.

So extremely far from nonsense!!!! It can and has happened!

*OK, that’s a lie about Seagate, but I just don’t trust them after a bad experience (my luck, I suppose).

If a unit of encryption is a single character typed on a keyboard or delivered to a text terminal, it’s not really possible to have strong encryption.

Nope. That’s incorrect.

https://en.wikipedia.org/wiki/Stream_cipher

Quote from your Wiki link:

“are combined with a pseudorandom cipher digit stream”

is the bit that came to mind immediately when reading Hanelyp’s comment.
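
The point behind the stream-cipher link is that strength comes from the keystream, not from the size of the unit: each keystroke is XORed with fresh pseudorandom bytes, so a one-character message is no weaker than a long one, as long as a key/nonce pair is never reused. A minimal sketch, assuming a shared key and nonce; it builds the keystream from HMAC-SHA256 in counter mode only because that is in Python’s standard library (a real device would use a vetted cipher such as ChaCha20 or AES-CTR), and it provides no authentication. Sending one character per packet still leaks message length and typing rhythm.

```python
import hashlib
import hmac


class KeystreamCipher:
    """Toy stream cipher: HMAC-SHA256 in counter mode produces the keystream.

    Keystream bytes are consumed strictly in order, so encrypting one
    keystroke at a time gives the same ciphertext as encrypting the whole
    message at once. Never reuse a (key, nonce) pair.
    """

    def __init__(self, key: bytes, nonce: bytes) -> None:
        self._key = key
        self._nonce = nonce
        self._counter = 0
        self._buffer = b""  # unused keystream bytes from the last block

    def _next_byte(self) -> int:
        if not self._buffer:
            block_input = self._nonce + self._counter.to_bytes(8, "big")
            self._buffer = hmac.new(self._key, block_input, hashlib.sha256).digest()
            self._counter += 1
        byte, self._buffer = self._buffer[0], self._buffer[1:]
        return byte

    def process(self, data: bytes) -> bytes:
        """XOR data with the next keystream bytes; the same call decrypts."""
        return bytes(b ^ self._next_byte() for b in data)


if __name__ == "__main__":
    key, nonce = bytes(range(32)), b"unique-nonce-001"
    keyboard_side = KeystreamCipher(key, nonce)
    # Each keystroke is encrypted on its own as it is typed.
    packets = [keyboard_side.process(ch.encode()) for ch in "hi there"]
    receiver = KeystreamCipher(key, nonce)
    print(receiver.process(b"".join(packets)).decode())  # hi there
```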

At that point wouldn’t it be cheaper to just place an entire CPU in the FPGA and add Linux support for standard open CPU cores?

It would be, if you could trust that the FPGA doesn’t already load some code from internal ROM before loading your code.

If you do all of your email using an encrypted email client, running on an Apple ][, Commodore 64, or TRS-80 Color Computer, I mean an original, not a copy using modern chips, then you can be reasonably sure that the chip doesn’t have any spyware on it. This is probably safer (and doesn’t require any decapping) than modern FPGAs. But of course THEN you have to take measures to ensure your video output isn’t radiating enough energy to be picked up in the next room.

If you don’t want to deal with aging hardware, then another reasonably safe approach is to use a modern 8-bit microcontroller as your email client. These are too power- and resource-stingy to be doing much beside what you’re telling them to do.

Or one can buy a computer made before all that “backdoor in hardware” BS Intel is doing. Add some old Linux distro that will work on that old hardware and you are as secure as possible, not counting all 0days, exploits and backdoors present in that old distro. If one is really paranoid, she can write all her secret emails as limericks in Klingon…

ना, सौद इत! वाई नौत त्राँन्सलीत्रेत? (“Nah, sod it! Why not transliterate?”, written phonetically in Devanagari)

Copy and paste that into Google Translate, select Hindi as the source language, then click the speaker icon to hear it spoken!

Now, that mixed with the Klingon you suggested (then encrypted) would be a bit of a challenge for NSA/GCHQ and friends to decode. Also, if everyone started doing this at once, it would clog up their spying as they tried to correctly decode every transliteration into its respective language and/or cipher.

P.S. Firefox isn’t displaying Devanagari writing properly on my system for some reason (Still!)

And the monitor and keyboard are safe? It wouldn’t be hard to add some code to the microcontrollers in there. A simple microcontroller just for sending and receiving encrypted mail seems more secure: a microcontroller with a window to verify die integrity, of course, using LEDs as the display and encased in lead to prevent eavesdropping.

There is a difference between spyware on silicon and companies that sell you hardware openly admitting they run programs you can’t alter, audit or even stop short of pulling the power cable. When these programs can even connect to the network independently and can see into your memory space this isn’t about paranoia.

Make certain to use open source “1”s and “0”s instead of proprietary.

I recently bought a bag of locally sourced, organically farmed ‘1’s and ‘0’s. Because of the built-in self-organizing data structure, it’s a breeze to assemble them into a program. Only, I’ve found out the bits are incompatible with the gluten-free hardware. Who knew…

Intel ME: protected by encryption, so it *must* be uncrackable. When the first ME malware appears, people are going to be screaming about what a bad idea ME is; I just can’t understand how few people can see this before it happens…

+1

Encryption would have to be end-to-end. Anyone remember this talk by Bunnie Huang at 30C3 in December 2013?

https://youtu.be/Tj-zI8Tl218

TL;DR: There is a “small embedded controller in every managed Flash device.”

It is not easier to audit the FPGA but it is easier to “control” one FPGA or a chain of FPGAs.

Once you use an FPGA, as this approach does, the solution is obvious and simple: an open-source CPU.

Meaning: Once you use FPGAs, why use another CPU at all?

Also, when using soft cores, manipulating data with secret hardware on the silicon is hard to do, almost impossible, for the following reason: the fabric and the I/Os have to behave as specified. For example, you cannot have a secret hardware TCP/IP stack on every I/O pin just in case that particular pin is connected to a PHY. This is totally different for fixed-function silicon, whose final functionality is set during production. Also, you can use a second FPGA to verify the consistency of the (encrypted) data of the first one. The obvious exceptions are hard-core macros (CPU, MAC).
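
Using a second FPGA to check the first amounts to diverse redundancy: compute the same result two independent ways and refuse to continue if they disagree. A software sketch of the idea, with CRC-32 standing in for the real workload only because it is small and has a well-known check value; `zlib.crc32` plays the “primary” and a hand-written bitwise version plays the independent checker. This only catches tampering that changes outputs and doesn’t affect both paths the same way.

```python
import zlib


def crc32_bitwise(data: bytes) -> int:
    """Independent, table-free CRC-32 (reflected polynomial 0xEDB88320)."""
    crc = 0xFFFFFFFF
    for byte in data:
        crc ^= byte
        for _ in range(8):
            crc = (crc >> 1) ^ (0xEDB88320 if crc & 1 else 0)
    return crc ^ 0xFFFFFFFF


def checked_crc32(data: bytes) -> int:
    """Compute on the primary path, then confirm with the independent checker."""
    primary = zlib.crc32(data)
    checker = crc32_bitwise(data)
    if primary != checker:
        raise RuntimeError(f"implementations disagree: {primary:#010x} vs {checker:#010x}")
    return primary


if __name__ == "__main__":
    print(hex(checked_crc32(b"123456789")))  # 0xcbf43926
```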

With FPGAs, you have other concerns:

1. What does the synthesis tool do to your open-source softcore CPU and your softcore MAC? Again, it is very hard to make modifications without changing the behavior of your components.

2. Can you trust closed source cores from the vendor?

3. Modification, readout, etc. of your configuration data: once your system is tested and in daily use, how do you ensure that no one replaces your configuration with a different one?
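
For point 3, one partial answer is to record a digest of the configuration bitstream once the system has been tested and compare against it before every load. A minimal sketch (the file name is hypothetical); note that it only tells you whether the file you can read has changed, not what is actually configured in the device, which needs readback or the vendor’s bitstream authentication.

```python
import hashlib
import tempfile
from pathlib import Path


def file_digest(path: Path) -> str:
    """SHA-256 of a configuration bitstream, read in 64 KiB chunks."""
    digest = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()


def bitstream_unchanged(path: Path, known_good_hex: str) -> bool:
    """Compare against the digest recorded when the design was tested."""
    return file_digest(path) == known_good_hex


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        bitstream = Path(tmp) / "design.bit"  # hypothetical file name
        bitstream.write_bytes(b"example configuration data")
        reference = file_digest(bitstream)  # recorded after testing
        print(bitstream_unchanged(bitstream, reference))  # True
        bitstream.write_bytes(b"example configuration dat4")
        print(bitstream_unchanged(bitstream, reference))  # False
```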

Damn it, you have me revising my “calculator” idea again…

Now I’m thinking of a dumb terminal variant, acting as a USB hub, with LCD screen.

Text destined for the screen is shown “raw” on the LCD, then encrypted and sent to the PC for display on the standard screen.

Selected text on the main screen is copied to the USB device, decrypted, and shown on the LCD.

[Oh, and given the correct key combination, it can still act as a calculator… my original idea]

Up to now I was considering a microcontroller, but this makes a case for [open source] FPGA.
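
The device in this idea only needs two operations: seal text into something the PC can display, e-mail, or copy without being able to read it, and open text selected on the PC’s screen. A minimal sketch, assuming a pre-shared pair of keys and hypothetical function names: it uses encrypt-then-MAC with a keystream built from HMAC-SHA256 in counter mode (standard library only; a real device should use a vetted AEAD such as ChaCha20-Poly1305) and base64 so the ciphertext survives as plain text.

```python
import base64
import hashlib
import hmac
import os

NONCE_LEN, TAG_LEN = 16, 32


def _keystream(key: bytes, nonce: bytes, length: int) -> bytes:
    """HMAC-SHA256 in counter mode, truncated to the requested length."""
    out = bytearray()
    counter = 0
    while len(out) < length:
        out += hmac.new(key, nonce + counter.to_bytes(8, "big"), hashlib.sha256).digest()
        counter += 1
    return bytes(out[:length])


def seal(enc_key: bytes, mac_key: bytes, plaintext: str) -> str:
    """Device side: encrypt, authenticate, and armor text for the untrusted PC."""
    nonce = os.urandom(NONCE_LEN)
    data = plaintext.encode("utf-8")
    ct = bytes(a ^ b for a, b in zip(data, _keystream(enc_key, nonce, len(data))))
    tag = hmac.new(mac_key, nonce + ct, hashlib.sha256).digest()
    return base64.b64encode(nonce + ct + tag).decode("ascii")


def open_sealed(enc_key: bytes, mac_key: bytes, token: str) -> str:
    """Device side: verify and decrypt text selected on the PC's screen."""
    raw = base64.b64decode(token, validate=True)
    if len(raw) < NONCE_LEN + TAG_LEN:
        raise ValueError("token too short")
    nonce, ct, tag = raw[:NONCE_LEN], raw[NONCE_LEN:-TAG_LEN], raw[-TAG_LEN:]
    expected = hmac.new(mac_key, nonce + ct, hashlib.sha256).digest()
    if not hmac.compare_digest(tag, expected):
        raise ValueError("token was modified or uses the wrong key")
    ks = _keystream(enc_key, nonce, len(ct))
    return bytes(a ^ b for a, b in zip(ct, ks)).decode("utf-8")


if __name__ == "__main__":
    ek, mk = os.urandom(32), os.urandom(32)
    token = seal(ek, mk, "meet at 8")
    print(token)                       # what the PC displays or e-mails
    print(open_sealed(ek, mk, token))  # meet at 8
```

Checking the tag before decrypting means a PC that tampers with the token gets an error on the LCD rather than altered plaintext.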

The best idea is to talk to people… in person… in a loud bar. Mostly because the noise is helpful, and the alcohol will help control your paranoia that the government is out to get you. Sooner or later, as all the devices are infiltrated, we will have to talk to other people rather than text them from across the table.

I get that it is good that people are looking at this and validating if these systems are safe. But I think that when you’re at the point of not trusting your CPU then you’re one step away from the funny farm.

Here is how to make a tin foil hat…

https://www.youtube.com/watch?v=PS8dNzRhMgk

Miss the point much? We’re all wearing tin foil hats, yours has slipped over your eyes.

Most gather we’ve been losing privacy since *Database Nation* came out, but that being said, a society can’t function without trust, and there’s only so much time and so many resources for validation, so the line has to be drawn somewhere. Pick the low-hanging fruit and trust the rest; meanwhile, even though politics and social activism are dirty words, get involved in them so that future generations will understand just how important privacy is.

Funny farm or not, just because you’re paranoid doesn’t mean they AREN’T out to get you.

To be fair, no gov’t is out to get anyone, just out to USE everyone.

Both situations are similar in what they pursue:

You see, we use tools to make our products cheaper and better than the usual commercial stuff, thus leaving more money in our pocket.

The gov’t uses people for self-entertainment and for creating movement of money, so MPs can have expenses pay for several houses and a bunch of cars, but at least they only want one paid holiday a day. Source: UK news and multiple other sources, plus very personal experiences with the social services dept.!

Yet again, no tinfoil hats involved!!!!!!

Seems like the phrase “conspiracy theory” carries the same stigma as, say, being anti-Semitic or calling someone racist names. WHY?!?!?

I tell my father there is a rumor about something at work, and he says: be careful… Then the rumor comes true… It didn’t affect me.

I hear another rumor and tell my father that a conspiracy theory about something about to happen is spreading around work, and he replies: What a load of rubbish!!!! Don’t go believing any conspiracy theories!!!!… Then the rumor comes true, and it didn’t affect me.

Not aimed at BrightBlueJim, but as an addendum to their comment; this is aimed at the original commenter.

The way this is starting to go, I am only a few bad news articles away from dumping anything “new” and going back to the AMD dual-core generation, before everything and the kitchen sink was integrated onto the CPU and hidden behind black boxes.

I am also watching the outcome of the open-source POWER8 architecture very closely, as it looks like a nice upgrade for my current hardware, and it is not outwardly trying to spy on me.

I’ve heard of sending a bunch of junk data along with your valid data to make it harder to tell the wheat from the chaff.
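
That is close to Ron Rivest’s “chaffing and winnowing” (1998): every real packet carries a valid MAC, the junk carries bogus ones, and only someone with the MAC key can sort wheat from chaff. The chaff has to look exactly like the wheat, so the sketch below works bit by bit, sending each real bit alongside its complement with a random tag. It is a toy with a huge overhead (two packets, each with a 32-byte tag, per bit), and the names are hypothetical.

```python
import hashlib
import hmac
import os
import secrets

TAG_LEN = 32  # bytes in an HMAC-SHA256 tag
Packet = tuple[int, int, bytes]  # (serial number, bit, MAC tag)


def _mac(key: bytes, serial: int, bit: int) -> bytes:
    """Authenticate one (serial, bit) pair with HMAC-SHA256."""
    return hmac.new(key, serial.to_bytes(4, "big") + bytes([bit]), hashlib.sha256).digest()


def chaff(key: bytes, data: bytes) -> list[Packet]:
    """Send each bit twice: the real one with a valid MAC (wheat) and its
    complement with a random tag (chaff), in random order within the pair."""
    packets: list[Packet] = []
    bits = [(byte >> (7 - i)) & 1 for byte in data for i in range(8)]
    for serial, bit in enumerate(bits):
        pair = [(serial, bit, _mac(key, serial, bit)),
                (serial, 1 - bit, os.urandom(TAG_LEN))]
        if secrets.randbits(1):
            pair.reverse()
        packets.extend(pair)
    return packets


def winnow(key: bytes, packets: list[Packet]) -> bytes:
    """Keep only packets whose MAC verifies, then reassemble the bytes."""
    wheat = sorted((s, b) for s, b, tag in packets
                   if hmac.compare_digest(tag, _mac(key, s, b)))
    bits = [b for _, b in wheat]
    return bytes(
        int("".join(map(str, bits[i:i + 8])), 2) for i in range(0, len(bits), 8)
    )


if __name__ == "__main__":
    key = os.urandom(32)
    stream = chaff(key, b"attack at dawn")
    print(len(stream))          # 224
    print(winnow(key, stream))  # b'attack at dawn'
```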

If we’re going full tin-foil hat, why not something like an old computer acting as a thin client to a modern system, but one that also does the encryption/decryption where needed?

Since we’re distrusting mass-produced ICs, wouldn’t the logical step be to figure out how to produce our own ICs? It would be costly at first, but so was 3D printing. We have the tech (high-speed lasers and spinning mirrors), and I think we can fab our own wafers at a scale close to 100 nm, which would produce 2001-era chips, which isn’t too shabby.

Yes!

The real solution is to let ‘makers’ make their own hardware. It won’t be easy to house your own clean room, and many of the required tools will need precision miniature parts (wire bonders, etc.). The task is daunting, but why don’t makers try the waldo route? The cleanroom could be miniature, and tele-operating miniature waldos (and miniature lathes, mills, and drills) means we don’t constantly contaminate the clean “room” with our hair/dust/pizza, since people never walk into it…

Sure, the first versions would only be patterned at low resolution, but the 4004’s feature size seems like a feasible start…

Once we have some miniature waldos, it will be a lot easier to assemble precision parts for the miniature lathe/mill/drill, etc. And since the device sizes shrink, so will the material costs, so a couple of makers could collaborate on a specific device and sell/trade with another band of makers who made another device…

Since you can’t trust other humans, you can’t trust anything you didn’t build entirely by yourself.

What is “entirely”? The design as well? I think we can come to trust open designs with verifiable claimed properties, and we can delegate verification of their proofs. As for the manufacturing itself, sure, we need the tools to manufacture our own integrated circuits. If we reach the point of open-source micromanufacturing, then over time those who fabricate their own ICs, and those (among the ones who didn’t want or care to fab themselves) who succeeded in identifying trustworthy sources, will prosper. So over time, open micromanufacturing wins…

(as they were not spied upon for cybernetic gain)