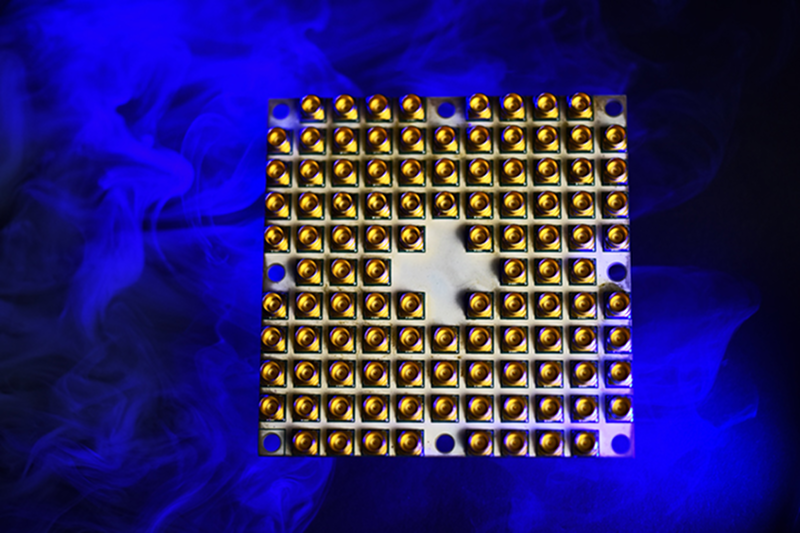

With a backdrop of security and stock trading news swirling, Intel’s [Brian Krzanich] opened the 2018 Consumer Electronics Show with a keynote where he looked to future innovations. One of the bombshells: Tangle Lake, Intel’s 49-qubit superconducting quantum test chip. You can catch all of [Krzanich’s] keynote in replay and there is a detailed press release covering the details.

This puts Intel on the playing field with IBM, who claims a 50-qubit device, and Google, who planned to complete a 49-qubit device. Intel’s previous device only handled 17 qubits. The term qubit is short for “quantum bit,” and the number of qubits is significant because experts think that at around 49 or 50 qubits, quantum computers won’t be practical to simulate with conventional computers, at least until someone comes up with better algorithms. Keep in mind that, in theory, a quantum computer with 49 qubits can process about 500 trillion states at one time. To put that in some apple and orange perspective, your brain has fewer than 100 billion neurons.
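To make the simulation limit concrete, here is a rough sketch of the arithmetic. It assumes the simplest approach, a full state-vector simulation that stores one double-precision complex amplitude (16 bytes) per basis state. That storage format and the function names are illustrative assumptions, and cleverer simulation methods can trade memory for time.

```python
# Memory needed to hold the full quantum state of n qubits in a
# naive state-vector simulator.
BYTES_PER_AMPLITUDE = 16  # assumption: complex128, two 64-bit floats


def basis_states(n_qubits: int) -> int:
    """Number of basis states an n-qubit register spans (2^n)."""
    return 2 ** n_qubits


def statevector_bytes(n_qubits: int) -> int:
    """Bytes required to store one amplitude per basis state."""
    return basis_states(n_qubits) * BYTES_PER_AMPLITUDE


for n in (17, 49, 50):
    print(f"{n} qubits: {basis_states(n):,} states, "
          f"{statevector_bytes(n):,} bytes")
```

Under these assumptions, 17 qubits fit in a couple of megabytes, while 49 qubits need 2^53 bytes (8 PiB), and every added qubit doubles that figure.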

Of course, the number of qubits isn’t the entire story. Error rates can make a larger number of qubits perform like fewer. Quantum computing is more statistical than conventional programming, so it is hard to draw parallels.

We’ve covered what quantum computing might mean for the future. If you want to experiment on a quantum computer yourself, IBM will let you play on a simulator and on real hardware. If nothing else, you might find the beginner’s guide informative.

Image credit: [Walden Kirsch]/Intel Corporation

Ok, so I have a real question: Why are all these qubit chips weird sizes, like 49qubits, or 17? What happened to powers of 2? Y’know, boring numbers like 16, 32, or 64?

17 and 49 are Prime numbers…

I love that 49 is now a prime number. “Tough titties 7.” :)

It’s a hardware bug and a future security hole. :-D

Your not supposed to fact check my claims. ;P

You’re*

You’re not supposed to spell check them either.

wow

It wasn’t always this way with regular 0-or-1 bits either. Some early computers used 6 bits per byte, or 9 bits per byte. So your fancy 1960s computer with 8 bytes of memory might have had anywhere from 48 to 72 bits. We finally settled on 8 bits per byte because it was a convenient power of two, and we only cared that it was a power of two because, as more bits are added, the number of combinations (i.e., addressable numbers) scales as a power of two.

That doesn’t apply to qubits, which have any number of possible states and potential combinations. I don’t even know if it would make sense to group qubits into “qubytes” or not. So with no grouping of qubits being done, there’s no point in targeting any specific number of bits per package.

I guess it’s because 49 is the amount they can pack into their design. Power of two is due to the revolution of the 8 bit byte (there have been other byte sizes) and having 8 bit bytes plus byte addressable memory means easier porting of software.

There’s not much software for quantum machines, in fact there isn’t really any software at the moment AFAIK. Think analog computers with dials and wires.

They are aiming for a million qbits next. The real problem is that qbits and bits are not the same, you may as well be asking why are computer clock speeds not multiples of 2.

Does this mean the Intel ME Subsystem will be 500 trillion times easier to exploit?

Well. Quantum entanglement sounds like a new kind of side channel for attacks. At least they freeze the quantum computers to near 0K to prevent Meltdown.

Your joke was so subtle and clever…. Nobody had even suspectre thing!

The world where attacks can be taken out on computers via long-distance entanglement is one i’d want to study.

this would not be good for unix.

if you cat a file, it might delete it!

(lol)

Oh jesus.

It might or might not be deleted…..

It’ll be in a state of both deleted and not deleted… just don’t look!

Quantum computers can’t replace classical CPUs, only supplement them. Much like you still have a CPU along with your GPU.

I mean, technically Quantum computers will be classically Turing Complete (in addition to being quantumly weird), so would be capable of replacing classical CPUs. Now, practically you’ll still have classical computers for doing classical computations because there’s a LOT less overhead involved.

“if you cat a file, it might delete it!”

Not if you cat > Schrödinger > filename

You can only sudo delete it

You can only read the contents of the file or its location, not both.

Was happy to see hackaday covering this as I wanted an in-depth analysis and a technology review/update. Hence I was very disappointed by the actual article…

Is it just me or has everyone been quite rude in 2018? Since I don’t read “the usual tech rags” I actually was glad to see this and I will play with the IBM online thing. Maybe you get your own website and see how that goes?

“You can catch all of [Krzanich’s] keynote in replay and there is a detailed press release covering the details.”

I want the details not covered by the press release.. you could at least have included some technical aspects in the article? I would think that researching a good article would be easy as there is no lack of papers on the subject and Quantum Computation in its current state is a hack of a hack of a hack that only just might work and then only around 0 Kelvin.

What if any impact does the development in this article have on the optimism of the next article regarding the future of bitcoin and blockchains?

“” a quantum computer with 49 qubits can process about 500 trillion states at one time. To put that in some apple and orange perspective, your brain has fewer than 100 billion neurons.””

Can anyone explain this sentence to me? One part talks about the number of states the other the number of neurons… what am i missing?

Thanks,

Like it said, apple and orange perspective. The two don’t really compare in any meaningful way.

Though I suppose if you followed it up with “and each neuron can have X number of states, for a combined Y states in total for your brain” then it could start getting interesting.

I read that some neurons have up to 10000 connections.

How many states do we have?

If we have 100 billion neurons and each neuron has 10000 connections and we treat them as binary

then 2^(10,000 * 100,000,000,000) approx one with 3e14 zeros
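That estimate can be sanity-checked without ever building the number, since the count of decimal digits in 2^n is roughly n × log10(2). A quick sketch, using the neuron and connection counts from the comments above and the same "treat every connection as one bit" simplification:

```python
import math

NEURONS = 100_000_000_000        # 100 billion, figure used above
CONNECTIONS_PER_NEURON = 10_000  # upper figure quoted above


def decimal_digits_of_power_of_two(exponent: int) -> float:
    """Approximate number of decimal digits in 2**exponent."""
    return exponent * math.log10(2)


# Treating each connection as one binary value gives 10^15 bits.
binary_connections = NEURONS * CONNECTIONS_PER_NEURON
digits = decimal_digits_of_power_of_two(binary_connections)
print(f"2^{binary_connections:.0e} has about {digits:.2e} decimal digits")
```

So the "one with 3e14 zeros" figure holds up under that (very crude) binary assumption.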

I love you. So many states. Sexy.

https://www.sciencedaily.com/releases/2014/01/140116085105.htm

Quantum might have something to do with it.

“what am i missing?”

Well, to put it another way: “If it takes a day and half for a hen and a half, to lay an egg and a half, how many pancakes does it take to shingle a dog house?”

42…

So… this is an announcement from CES, so take with the appropriate pillar of salt.

That said, from what I gather, if this is real, it puts practical implementations of Shor’s algorithm easily 2-3 years closer than I thought was expected. Is that a fair estimate?

If so, then from where I sit that means that practical attacks on RSA and EC will be feasible now *before* NIST even approves the first post-quantum public key cryptosystems.

This can’t be good.

Wouldn’t be surprised if the NSA has something a tad more advanced.

I would be surprised. Cuz bitcoin is the canary in that particular coal mine. But the bird is still singing.

Is it?

Nah, we’re still at least a decade away. It takes thousands of logical qubits (and even more gates) to run Shor’s algorithm, and those qubits need to be error-corrected to be useful for this case, so increase the number of physical qubits by one or two orders of magnitude, so we’re talking 100,000 to 1,000,000 physical qubits, perhaps.
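The back-of-envelope version of that estimate looks something like the sketch below. The specific logical-qubit counts (1,000 and 10,000) and overhead factors (10× and 100×) are illustrative stand-ins for "thousands" and "one or two orders of magnitude", not published resource figures.

```python
TANGLE_LAKE_QUBITS = 49  # physical qubits on Intel's test chip


def physical_qubits(logical_qubits: int, overhead: int) -> int:
    """Physical qubits needed if each logical qubit costs `overhead`."""
    return logical_qubits * overhead


# Hypothetical scenarios spanning the ranges mentioned in the comment.
for logical in (1_000, 10_000):
    for overhead in (10, 100):
        needed = physical_qubits(logical, overhead)
        ratio = needed / TANGLE_LAKE_QUBITS
        print(f"{logical:>6,} logical x {overhead:>3} overhead = "
              f"{needed:>9,} physical (~{ratio:,.0f}x a 49-qubit chip)")
```

Even the most optimistic of these made-up scenarios is a couple of hundred times larger than a 49-qubit chip.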

HOWEVER, someone could just be sucking up encrypted data over the Internet right now and storing it for the “Quantum Jubilee” when the first large-scale, encryption-breaking quantum computers are able to unlock the encrypted data.

So it’s really not that long from now, and we need to be thinking about this NOW because there are lots of things that we’ll still want to be secret 15 years from now.

I highly doubt the “sucking up data” part. Even a small, low-bandwidth uplink of ~10 Gbit/s works out to ~10 petabytes a year (at 50% average load, and assuming encrypted traffic is ~50% of that load).

So, unless there is a lot of filtering going on, it just would not work as a long-term plan.
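For anyone who wants to check the storage figure, here is a small sketch of the arithmetic. The link speed, load, and encrypted share are the numbers from the comment; the function name and the use of decimal petabytes (10^15 bytes) are assumptions.

```python
SECONDS_PER_YEAR = 365 * 24 * 3600


def stored_petabytes_per_year(link_gbit_s: float,
                              avg_load: float,
                              encrypted_share: float) -> float:
    """Petabytes of encrypted traffic captured from one link in a year."""
    bits = link_gbit_s * 1e9 * SECONDS_PER_YEAR * avg_load * encrypted_share
    return bits / 8 / 1e15  # bits -> bytes -> petabytes


# 10 Gbit/s link, 50% average load, 50% of the traffic encrypted
per_link = stored_petabytes_per_year(10, 0.5, 0.5)
print(f"{per_link:.1f} PB per year per 10 Gbit/s link")
```

That lands just under 10 PB per year for a single link, so the cost of hoarding scales directly with how many links are tapped and how little filtering is done.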

There are, indeed, a lot of things that we need to remain secret for more than a decade, but the overwhelming majority of encrypted traffic today world-wide has a useful lifetime more on the order of at *most* months. Just for example, it’s unlikely there will be much interest in the fact that I read this page and posted this comment ever again.

I thought we were up to 512 boxes of dead cats.

If that is a D-wave reference, then no they don’t have 512 boxes of dead cats, they have one single box with 512 dead cats piled up inside it.

D-Wave machines can only solve one type of problem called the lowest energy state determination.

Their machines are not capable of manipulating each qbit individually, instead they manipulate the state of all of them to set up the problem to solve, and let them find the lowest energy state of the system to provide the solution, which they read out as a singular result.

This is why they can have such a massive number of qbits, they don’t need to deal with them individually.
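To give a feel for what a "lowest energy state" problem looks like, here is a purely classical toy: a brute-force search over a three-spin Ising model. This is only a sketch of the problem class, not of how D-Wave hardware or its software works. The energy function is the standard Ising form, and the bias values `h` and couplings `J` are made up for illustration.

```python
from itertools import product


def ising_energy(spins, h, J):
    """Energy = sum(h_i * s_i) + sum(J_ij * s_i * s_j), spins are -1/+1."""
    energy = sum(h_i * s for h_i, s in zip(h, spins))
    energy += sum(c * spins[i] * spins[j] for (i, j), c in J.items())
    return energy


def ground_state(h, J):
    """Try every spin assignment and return the lowest-energy one."""
    candidates = product((-1, 1), repeat=len(h))
    return min(candidates, key=lambda s: ising_energy(s, h, J))


# Hypothetical problem: a chain where neighbours prefer opposite spins,
# plus a small bias pushing spin 0 toward -1.
h = [0.5, 0.0, 0.0]
J = {(0, 1): 1.0, (1, 2): 1.0}

best = ground_state(h, J)
print(best, ising_energy(best, h, J))
```

The brute-force loop doubles in cost with every added spin, which is the part an annealer is meant to sidestep by letting the physical system settle into its low-energy configuration.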

A horrible analogy would be magnetic hard drive platters. Current day drives cram a metric shitton of bits in a tiny physical space, but the drive can read and write each one individually (this would be the IBM/Intel/Google quantum model)

If you instead took a huge bar magnet to the platter, you could still read and write it but using a ton of those bits as a single entity (d-waves model)

This analogy breaks however due to the fact the d-wave can still exploit quantum features of those qbits to solve certain problems.

His first name Ian Brian, right? Not Brain, albeit fitting…

dyac*

Is, not Ian

What is that number 500 trillion based on? “In theory” we can have an infinite amount of states with one qbit. We just cant read em.

Ah never mind, it’s just 2^49 ≈ 5.6 × 10^14, sorry

It is important to understand what quantum computing is good for. Their strong point is not in performing logical operations, like current computers, but in finding patterns, such as finding the factors of large numbers. So, a low error rate 49 qbit quantum computer can theoretically factor a 49 bit number in a single step. That immediately makes decryption much faster. The more qbits, the faster the factorization. We are getting closer to the point where the best current encryption is breakable in some reasonable time period. Such pattern recognition could also be very important for AI.

Intel, you say? Would anyone like to speculate [pun intended] what sort of Spectre and Meltdown attacks are possible on this thing? Spectre and Meltdown already feel like weird $#!+ is happening in alternate universes because they’re not allowed in the real world, yet still detectable from the real world :-O

If you understand quantum computing, then you don’t really understand it.