If you ever needed proof that class-action lawsuits are a good deal only for the lawyers, look no further than the news that Tim Hortons will settle a data-tracking suit with a doughnut and a coffee. For those of you who are not in Canada or Canada-adjacent, “Timmy’s” is a chain of restaurants that is kind of the love child of a McDonald’s and a Dunkin’ Donuts shop. An investigation into the chain’s app a couple of years ago revealed that it was silently logging customers’ locations, even when they were not using the app, and even far, far away from the nearest Tim Hortons. The chain is proposing to settle with class members by offering a coupon good for one free hot beverage and one baked good, valued at a whopping $8.68 in total. The lawyers, on the other hand, will be pulling in $1.5 million plus taxes. There’s no word on whether they are taking that in cash or as 172,811 coffees and doughnuts, but we think we can guess.


Quantum Computing: The First Taste Is Free

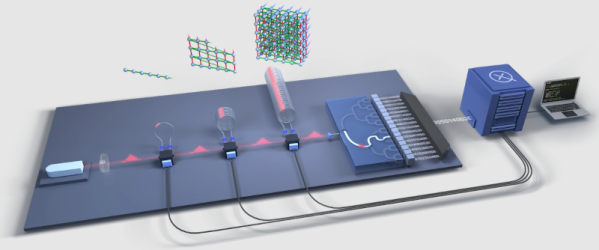

There are a few ways to access real quantum computers, often for free, over the Internet. However, most of these are previous-generation machines with limited capabilities: great for learning, perhaps, but not something you could do anything practical with. Xanadu has now announced what it claims is a computer capable of reaching quantum advantage that is free for anyone to use, within limits. Borealis, the computer in question, uses photonic states and can work with 216 squeezed-state qubits.

The company is selling time on the computer, but the free tier includes 5 million shots on Borealis and 10 million shots on an earlier series of Xanadu’s quantum computers. Beyond that, pay-as-you-go service on Borealis costs about $100 per million shots.

While a few million shots may sound like a lot, we noticed that the quickstart demo consumes 10,000 shots, and that’s presumably something simple. That still works out to about 500 runs on Borealis, which is not bad for free time on a state-of-the-art quantum computer. You’ll still want to debug with a simulator, though.
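If you want to budget your own jobs, the arithmetic is simple enough to script. This is a minimal Python sketch that assumes the figures quoted above (5 million free Borealis shots, roughly $100 per additional million) and treats the quickstart’s 10,000 shots as a typical job. The function names are our own, not part of any Xanadu tool, and actual pricing may differ.

```python
# Shot-budget arithmetic for the Borealis free tier.
# Assumptions taken from the article text, not from Xanadu's documentation:
# 5 million free Borealis shots and about $100 per extra million shots.

FREE_SHOTS = 5_000_000
PRICE_PER_MILLION_USD = 100.0


def free_runs(shots_per_run: int, free_shots: int = FREE_SHOTS) -> int:
    """Number of complete runs the free allotment covers."""
    return free_shots // shots_per_run


def paid_cost_usd(total_shots: int, free_shots: int = FREE_SHOTS) -> float:
    """Approximate pay-as-you-go cost for shots beyond the free allotment."""
    extra = max(0, total_shots - free_shots)
    return extra / 1_000_000 * PRICE_PER_MILLION_USD


if __name__ == "__main__":
    print(free_runs(10_000))              # runs of a 10,000-shot job
    print(paid_cost_usd(1_000 * 10_000))  # cost of 1,000 such runs
```

With 10,000-shot jobs, the free tier covers 500 runs; a thousand runs would cost roughly $500 for the extra 5 million shots.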

We presume the developers are Beatles fans, given that the software you use to access the machines is called PennyLane and Strawberry Fields. You control your jobs from Python, and there is a cloud simulator so you don’t have to spend your shots while debugging.

We won’t pretend to understand everything about squeezed-light qubits and the Borealis architecture. But you can get some general practice in our series on quantum computing. There are also a few lectures around, including one aimed at different levels of experience.


Noise: It Turns Out You Need It

We don’t know whether quantum physics proves the universe is truly a strange place or that we are living in a virtual reality simulation, but we know it turns a lot of common sense into garbage. Take noise, for example. Noise — as in random electrical noise — is bad, right? We spend a lot of time designing to minimize noise. Researchers in Austria, Germany, and Australia recently published a paper that shows that noise can actually improve the flow of energy. While the paper is behind a paywall, the Focus article is available and, of course, you can probably find a copy of the paper if you want to read the entire thing.

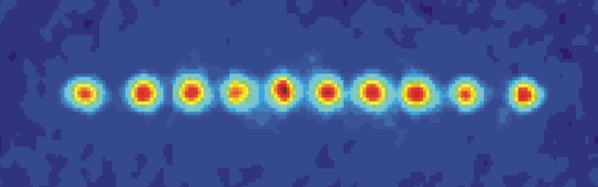

The paper, titled “Environment-Assisted Quantum Transport in a 10-qubit Network,” uses trapped calcium ions to study an effect suspected of being a key factor in high-efficiency energy transfer, such as the transfer observed in optical fibers and photosynthesis.
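To get a feel for how noise can help, here is a deliberately tiny toy model in Python. It is not the paper’s 10-ion experiment. We assume just two sites with mismatched energies, a “sink” that collects energy from the second site, a small loss rate everywhere, and a dephasing rate `gamma` that stands in for environmental noise. All parameter names and values are our own illustrative choices. Because the underlying master equation is linear, the sketch gets the fraction of energy reaching the sink by solving a 4x4 linear system instead of stepping through time.

```python
# Toy model of environment-assisted transport (NOT the paper's 10-qubit setup).
# Two sites with energy mismatch `delta` and coupling `j`; site 1 feeds a sink
# at rate `kappa`; both sites lose excitation at rate `loss`; `gamma` is pure
# dephasing noise. All parameter values are illustrative assumptions.


def solve(a, b):
    """Solve a small dense system a x = b by Gaussian elimination with pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        s = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - s) / m[r][r]
    return x


def transfer_efficiency(gamma, j=1.0, delta=10.0, kappa=1.0, loss=0.05):
    """Return (efficiency, lost) for an excitation that starts on site 0.

    v = (p0, p1, x, y): site populations plus the real and imaginary parts
    of the coherence rho_01. The master equation is dv/dt = M v and v -> 0
    as t -> infinity, so the time integral I of v satisfies (-M) I = v(0).
    Multiplying by the sink and loss rates gives the final yields.
    """
    g = kappa / 2 + loss + gamma  # total decay rate of the coherence
    minus_m = [
        [loss, 0.0, 0.0, 2 * j],           # dp0/dt = -loss*p0 - 2j*y
        [0.0, kappa + loss, 0.0, -2 * j],  # dp1/dt = -(kappa+loss)*p1 + 2j*y
        [0.0, 0.0, g, delta],              # dx/dt  = -g*x - delta*y
        [-j, j, -delta, g],                # dy/dt  = j*(p0-p1) + delta*x - g*y
    ]
    i0, i1, _, _ = solve(minus_m, [1.0, 0.0, 0.0, 0.0])
    return kappa * i1, loss * (i0 + i1)


if __name__ == "__main__":
    for gamma in (0.0, 10.0, 1000.0):
        eff, lost = transfer_efficiency(gamma)
        print(f"gamma={gamma:7.1f}  efficiency={eff:.3f}  lost={lost:.3f}")
```

With these defaults, the efficiency climbs from roughly 0.17 with no dephasing to about 0.6 at `gamma = 10`, then falls to around 0.04 at `gamma = 1000`. Too little noise leaves the mismatched sites out of tune. Too much freezes the excitation in place, an effect often called the quantum Zeno effect. The sweet spot in between is the kind of environment-assisted transport the paper studies.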

Quantum Computing For Computer Scientists

Quantum computing is coming, so a lot of people are trying to articulate why we want it and how it works. Most of the explanations are either hardcore physics talking about spin and entanglement, or so breezy and hand-wavy that they give you a little understanding but nothing you can apply. Microsoft Research has a video that attempts to hit the spot in the middle: practical information for people who currently work with traditional computers.

The video starts with the basics you’d get from most such videos: vector representation and operations. You have to get through about 17 minutes of that before it gets into qubits. If you glaze over at the math, listen to the “index array” explanations [Andrew] gives after the math and you’ll be happier.
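The “index array” view is easy to try for yourself. The following is our own minimal Python sketch, not code from the video. A two-qubit state is a list of four complex amplitudes, and each gate just shuffles and mixes entries of that list. The bit ordering (qubit 0 is the lowest bit of the index) and the helper names are assumptions for illustration.

```python
import math

# A 2-qubit state is an array of 4 complex amplitudes indexed by the basis
# state |q1 q0>. Bit q of the index is the value of qubit q (our convention).


def apply_1q(state, gate, q):
    """Apply a 2x2 gate (nested lists) to qubit q of a state vector."""
    out = list(state)
    for i in range(len(state)):
        if not (i >> q) & 1:  # visit each (bit q = 0, bit q = 1) pair once
            j = i | (1 << q)
            a0, a1 = state[i], state[j]
            out[i] = gate[0][0] * a0 + gate[0][1] * a1
            out[j] = gate[1][0] * a0 + gate[1][1] * a1
    return out


def apply_cnot(state, control, target):
    """Swap amplitude pairs so the target bit flips wherever the control bit is 1."""
    out = list(state)
    for i in range(len(state)):
        if (i >> control) & 1 and not (i >> target) & 1:
            j = i | (1 << target)
            out[i], out[j] = state[j], state[i]
    return out


H = [[1 / math.sqrt(2), 1 / math.sqrt(2)],
     [1 / math.sqrt(2), -1 / math.sqrt(2)]]

state = [1 + 0j, 0j, 0j, 0j]      # |00>
state = apply_1q(state, H, 0)     # (|00> + |01>) / sqrt(2)
state = apply_cnot(state, 0, 1)   # (|00> + |11>) / sqrt(2), a Bell state
probs = [abs(a) ** 2 for a in state]
print([round(p, 3) for p in probs])  # [0.5, 0.0, 0.0, 0.5]
```

No matrix multiplication appears anywhere: a gate on one qubit only ever mixes pairs of entries whose indices differ in that qubit’s bit.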


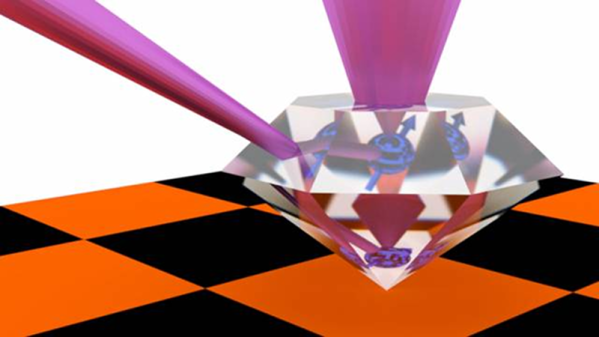

Flawed Synthetic Diamonds May Be Key For Quantum Computing

If you’ve followed any of our coverage of quantum computing, you probably know that the biggest challenge is getting quantum states to last very long, especially when moving them around. Researchers at Princeton may have solved this problem: they have demonstrated storing qubits in a lab-created diamond. If you want to learn even more, the actual publication is behind a paywall.

Generally, qubits are carried as photons and moved through optical fibers. However, they don’t last long in that form, and it is difficult to store photons with the correct quantum information. Impurities in diamond, though, may be able to transfer a photon’s state to an electron and back.


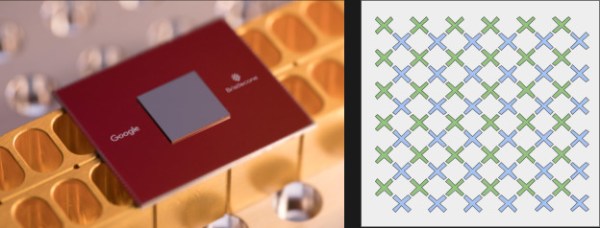

Google Ups The Ante In Quantum Computing

At the American Physical Society conference in early March, Google announced that its Bristlecone chip was in testing. This latest quantum computer chip ups the game from 9 qubits in Google’s previous test chip to 72, quite the leap. It also trounces IBM and Intel, which have 50- and 49-qubit devices, respectively. You can read more technical details on the Google Research Blog.

The number of qubits isn’t the whole story, though. Qubits that last longer are important, and low-noise qubits help because the higher the noise figure, the more redundant qubits you are likely to need to get a reliable answer. That works, but it leaves fewer qubits for working on your actual problem.
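For a rough feel of that trade-off, this Python sketch uses a classical analogy. It assumes each qubit suffers an independent bit flip with probability `p` and that one logical value is stored in `n` copies decoded by majority vote. Real quantum error-correcting codes are more complicated and need extra helper qubits, so treat the numbers as illustrative only. Bristlecone’s 72 qubits are the only figure taken from the text.

```python
from math import comb

# Classical repetition-code analogy (an assumption, not a real quantum code):
# each copy flips independently with probability p, and the logical value is
# recovered by majority vote over n copies (n odd).


def logical_error(p: float, n: int) -> float:
    """Probability that a majority of the n copies are wrong."""
    return sum(comb(n, k) * p**k * (1 - p) ** (n - k)
               for k in range(n // 2 + 1, n + 1))


def logical_qubits(physical: int, n: int) -> int:
    """How many n-copy logical values fit on a chip of `physical` qubits."""
    return physical // n


if __name__ == "__main__":
    for n in (1, 3, 5):
        print(f"n={n}: {logical_qubits(72, n)} logical values, "
              f"error rate {logical_error(0.01, n):.2e}")
```

With three copies, a 1% error rate drops to about 0.03%, but the 72-qubit chip now holds only 24 logical values.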

Quantum Weirdness In Your Browser

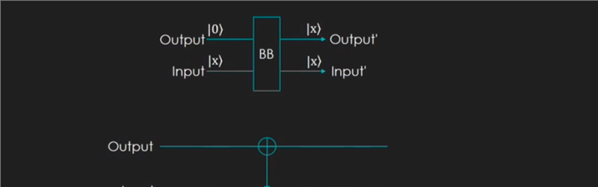

I’ll be brutally honest. When I set out to write this post, I was going to talk about IBM’s Q Experience, the website where you can run real code on some older IBM quantum computing hardware. I am going to get to that, I promise, but it will have to wait for another time. It turns out that quantum computing is mind-bending and, to make matters worse, there are a lot of oversimplifications floating around that make it even harder to understand than it ought to be. Because the IBM system matches up with real hardware, it has a lot more limitations than a simulator. Think of programming a microcontroller with no debugging versus using a software emulator. With the emulator, you can zoom into any level of detail, but with the bare micro you can toggle a line, use a scope, and hope things don’t go too far wrong.
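The difference between the two views is easy to show in code. This Python sketch hard-codes the amplitudes of a two-qubit Bell state (an assumption for illustration). The “simulator” function reads probabilities straight out of the state, while the “hardware” function only returns a histogram of sampled shots. The sampling here is ideal and noise-free, so real hardware would look messier still.

```python
import random
from collections import Counter

# Bell state (|00> + |11>) / sqrt(2), hard-coded for illustration.
AMPLITUDES = {"00": 2 ** -0.5, "01": 0.0, "10": 0.0, "11": 2 ** -0.5}


def simulator_view(amplitudes):
    """Exact probabilities: possible only because we can inspect the state."""
    return {basis: abs(a) ** 2 for basis, a in amplitudes.items()}


def hardware_view(amplitudes, shots, seed=0):
    """Ideal, noise-free shot sampling: all you get back is a histogram."""
    rng = random.Random(seed)
    labels = list(amplitudes)
    weights = [abs(amplitudes[b]) ** 2 for b in labels]
    return Counter(rng.choices(labels, weights=weights, k=shots))


if __name__ == "__main__":
    print(simulator_view(AMPLITUDES))
    print(hardware_view(AMPLITUDES, shots=1000))
```

The simulator tells you the answer exactly; the hardware view only lets you estimate it, and the estimate gets better only as you spend more shots.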

So before we get to the real quantum hardware, I am going to show you a simulator called Quirk, written by [Craig Gidney]. He wrote it and promptly got a job with Google, who took over the project. Sort of. Even if you don’t like working in a browser, [Craig’s] simulator is easy to use, you don’t need an account, and a bookmark will save your work.

It isn’t the only available simulator, but as [Craig] immodestly (but correctly) points out, his simulator is much better than IBM’s. Starting with a simulator also avoids tripping over hardware limitations. For example, IBM’s devices are not fully connected; it is like a CPU where each register can only exchange data with certain other registers. In addition, real devices have to deal with noise and with quantum states that don’t last very long. If your algorithm takes too long, the quantum state decays before it finishes and your results are invalid. These aren’t issues on a simulator. You can find a list of other simulators, but I’m focusing on Quirk.
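To see why limited connectivity matters, here is a Python sketch using a made-up coupling map: five qubits in a line, not the layout of any real IBM device. Since a two-qubit gate can only act on neighbors, the sketch finds a shortest path with breadth-first search and counts the SWAP gates needed to bring two qubits together. Real compilers use smarter routing; this only illustrates the cost.

```python
from collections import deque

# Hypothetical coupling map: five qubits in a line (0-1-2-3-4).
# Not the layout of any real device.
COUPLING = {0: [1], 1: [0, 2], 2: [1, 3], 3: [2, 4], 4: [3]}


def shortest_path(coupling, start, goal):
    """Breadth-first search over the coupling graph; None if unreachable."""
    prev = {start: None}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        if node == goal:
            break
        for nxt in coupling[node]:
            if nxt not in prev:
                prev[nxt] = node
                queue.append(nxt)
    if goal not in prev:
        return None
    path = [goal]
    while prev[path[-1]] is not None:
        path.append(prev[path[-1]])
    return path[::-1]


def swaps_needed(coupling, a, b):
    """SWAPs needed to make qubits a and b adjacent along a shortest path."""
    path = shortest_path(coupling, a, b)
    if path is None:
        raise ValueError("qubits are not connected")
    return max(0, len(path) - 2)


if __name__ == "__main__":
    for a, b in [(0, 1), (1, 3), (0, 4)]:
        print(f"CNOT({a},{b}) needs {swaps_needed(COUPLING, a, b)} SWAP(s)")
```

On this line, a CNOT between qubits 0 and 4 needs three SWAPs first, and every extra gate is more time for noise to creep in.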

What Quantum Computing Is

As I mentioned, there is a lot of misinformation about quantum computing (QC) floating around. I think part of it revolves around the word computing. If you are old enough to remember analog computers, QC is much more like that: you build “circuits” to create results. There’s also a lot of difficult math, mostly linear algebra, that I’m going to try to avoid as much as possible. If you can dig into the math, it is worth your time to do so. However, just like you can design a resonant circuit without solving differential equations about inductors, I think you can do QC without some of the bigger math by just using the results. We’ll see how well that holds up in practice.