For the last thirty or so years, the demoscene community has been stretching what is possible on computer systems with carefully crafted assembly and weird graphical tricks. Even more remarkable is hand-crafted assembly pushing the boundaries of what a microcontroller can do, especially a small one. In what is probably the most impressive demo we’ve seen on this particular chip, [AtomicZombie] is bouncing Boing balls on an ATtiny85. It’s a fine bit of assembly work, and the video is some of the best stuff we’ve ever seen on a microcontroller this small.

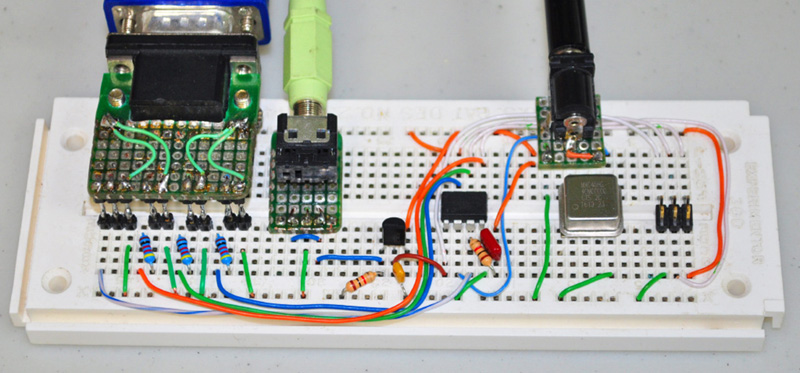

First, the hardware. This is just about the simplest circuit you can build with an ATtiny85. There’s an ISP header, a VGA port with a few resistors, a 1/8″ audio jack driven by a transistor, and most importantly, a 40MHz crystal. Yes, this ATtiny is running at twice the official 20MHz maximum, but it works.

The firmware for this build is entirely assembly, but surprisingly there isn’t much of it, and even less if you exclude the hundred or so lines of definitions for the Boing balls.

The resulting code spits out VGA at 204×240 resolution and sixty frames per second. The sprites use eight colors with alpha (transparency), and there’s four-channel sound. This is, as far as we’re aware, the limit of what an ATtiny can do, and an excellent example of what you can do if you buckle down and write some really tight assembly.

Now I don’t feel bad piddling around with NTSC again… It’s ridiculous how much power so many chips have, and how it takes years to really understand their full potential.

The hardware and software industries are doing the “add more memory, processor power, whatever” dance, so that the new version of (insert your OS or software here) works better, or only works, with those new minimum resources, roughly following Moore’s law. Most programmers got lazy and do not think about optimization, restraining resource usage (mainly RAM memory), and worst of all, the code is not tested properly.

Optimization isn’t portable, and using chip-specific tricks to make your code work with the bare minimum resources means spending 10x more resources in development for 0x benefit in sales.

Demo tricks like this oversell the capabilities of computing machines. We all know the tiny85 can’t do anything else than bounce those balls, and even that it is doing by clever shortcuts that don’t translate to other similar tasks.

I bet you’re fun at parties.

You can always spot the people who used to own an Amiga, by how upset they get when someone points out that fancy demos don’t extrapolate to real world usefulness.

“Racing the beam” was a neat hack to get the most out of the Atari 2600’s minimal hardware: with no frame buffer, the CPU had to set up each scanline just before the TV’s electron beam drew it. But the 2600 was also the most difficult machine on the market to program. It forced programmers to rely on actual bugs and glitches in the hardware to get what they wanted, so the available software was very limited by what the machine could actually do, and it was trapped on that hardware, since the same tricks would not work on any other machine.

For example:

http://www.digitpress.com/library/interviews/interview_rob_fulop.html

“Rob Fulop: You would have loved some of our last resort byte saving tricks, when we were trying to crunch the code to fit into 4k. By the end, we were DESPERATE. One of my favorites, was changing a sound (or graphic) table to begin with hex code $60, which would allow that byte to do double duty .. as a RTS at the end of a subroutine, and as the first byte in the sound data table.”
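For anyone who hasn’t met this trick: on the 6502, $60 is the opcode for RTS, so if a routine’s last instruction sits directly in front of a table whose first entry is $60, that one byte serves both purposes. A minimal sketch of the layout in Python; the routine and table bytes are hypothetical, made up for illustration, not taken from Fulop’s code:

```python
# Illustration with HYPOTHETICAL bytes: the final RTS of a routine doubles
# as the first byte of the data table placed right after it.
RTS = 0x60  # 6502 opcode for RTS (return from subroutine)

# Hypothetical routine: LDA #$05 ; STA $19 ; RTS
routine = [0xA9, 0x05, 0x85, 0x19, RTS]
# Hypothetical sound table, deliberately arranged to start with $60
sound_table = [0x60, 0x48, 0x30, 0x18]


def overlap_layout(code, table):
    """Place `table` directly after `code` in ROM.

    If the last code byte equals the first table byte, the two share a
    single byte. Returns (rom_image, table_offset).
    """
    if code and table and code[-1] == table[0]:
        return code + table[1:], len(code) - 1
    return code + table, len(code)


rom, table_offset = overlap_layout(routine, sound_table)
print(len(rom), table_offset)  # prints: 8 4 (one byte saved versus 9)
```

The saving is a single byte, which says a lot about how tight a 4K cartridge was.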

You know you’re in dangerous waters when your data is your code is your data.

Sometimes portability isn’t the issue, e.g. spacecraft, satellites, etc., where wasting expensive resources isn’t an option. Nor is Java’s “write once, run anywhere” a desired goal there, e.g. on consoles.

Re: your comment,

“You know you’re in dangerous waters when your data is your code is your data.”

How do you like LISP? haha

Luke said: “using chip-specific tricks to make your code work with the bare minimum resources means spending 10x more resources in development for 0x benefit in sales.”

Bullshit. Clearly you’ve never designed anything for production. “Chip-specific tricks” can make the difference between a $0.85/CPU BoM and an $8.50/CPU BoM. Without exception, doing in software what previously took another $0.10, $1.00 or $10.00 of hardware is *ALWAYS* money/time well spent.

“We all know the tiny85 can’t do anything else than bounce those balls,”

The fact that you’re not at all impressed only demonstrates how little you know about what’s happening here.

“even that it is doing by clever shortcuts that don’t translate to other similar tasks.”

Wut? There are likely a million clever ways to reduce a BoM through software. Who gives a rat’s ass if these tricks don’t cover them all? There’s a DIFFERENT shortcut for those problems.

Exactly. As someone who also develops hardware using microcontrollers, I can tell you there’s a BIG saving to be made if you can swap to a cheaper/smaller part just by optimising the code. When you can also (as in this case) optimise the use of external components, it can be the difference between making a profit or a loss.

“Luke”, however, likely has no experience in this, and probably never will.

Firstly, the code consists of a rendering core with sprite engine, and although it’s running here on an ATTiny85, it IS easily portable to other AVR microcontrollers. The fact you’re unaware of this shows you probably don’t know what you’re talking about. Secondly, it can do a LOT more than just “bounce those balls”. Along with the 4 channel sound, it has a very capable sprite engine, and the guy is working on adding an IR receiver so that a remote can be used as a joystick input. Like all the other killjoys that crawl out from under their rocks in threads like this, I’m guessing you’ve never really accomplished anything of worth. Of course, you can prove me wrong – show me something more impressive than this, that YOU have done… I’ll wait…

“Most programmers got lazy and do not think about optimization, restraining resource usage (mainly RAM memory)”

I recall an editorial in Byte decades ago lamenting exactly this same problem.

While wasting computational resources is offensive to people who appreciate optimum utility, fast implementation of new features and flashy stuff usually wins out, since vendors have to sell an actual product in short production cycles, and putting together robust, optimized code for all platforms takes a lot of resources for very little customer-visible return. It’s cheaper to bodge code together and tell the user their platform is inferior, and the hardware industry doesn’t mind the push to keep buying new stuff.

The whole “Wintel” thing, not to mention the “good enough” philosophy. Some may say that’s all coming to the Makerspace.

The hard reality is that some hardware is just too slow for the application to be useful. For example, trying to get a video editing suite to work fast on a netbook is futile, because the same hacks won’t give you an equal speed advantage on a desktop PC – it is already fast enough.

And while the netbook is altogether too inconvenient for video processing in the first place, why optimize for something that will never have any actual use?

One of the rules of systemantics is that highly efficient “tight” systems are actually dangerous. For example, when your program’s memory footprint is squeezed to absolute minimum, the probability of a buffer overflow or memory leak issue causing a serious crash or a security hole will increase.

@Luke said: “For example, trying to get a video editing suite to work fast on a netbook is futile”

And yet my middle-school daughter edits video in the browser-based OS on her school-provided, sub-$300 netbook.

>the same hacks won’t give you an equal speed advantage on a desktop PC

Key weasel word: “same”

No one said this is some genius, one-size-fits-all way to make an ATTiny85 rival a 7th gen i7. Your comparison of lesser computing platforms to a desktop PC is a straw man fallacy.

DIFFERENT hacks CAN give a suitable experience, even on anemic processors.

“And while the netbook is altogether too inconvenient for video processing in the first place, why optimize for something that will never have any actual use?”

My god the Dunning-Kruger is strong with you. I bet you haven’t actually spent any reasonable time with a modern netbook, have you? CHILDREN are doing what you’re claiming isn’t possible.

“One of the rules of systemantics is that highly efficient “tight” systems are actually dangerous.”

“Rules”. Are they written in stone? This smacks of absolutist bullshit I see peddled by educators who haven’t kept up with technology and have little real-world experience.

“when your program’s memory footprint is squeezed to absolute minimum, the probability of a buffer overflow or memory leak issue causing a serious crash or a security hole will increase.”

What a steaming pile of horse shit. The *AMOUNT* of memory doesn’t increase the probability of a buffer overflow or memory leak. The probability stays the EXACT same for the same code. The difference is, you’re more likely to manifest an already existing flaw. The flaw is there regardless of whether you have 512 bytes of RAM, or 512 Gigs.

Conversely, increasing memory and CPU resources to counter inexperienced or lazy programming is a band-aid that only obscures the problem of poorly debugged code. The problem is there regardless of target. The choice of processor isn’t the problem. The lack of quality control is.

Shit, I’ve just realized something: the industry’s shift toward (online) ads also drives shorter product life cycles and enshittification, by requiring a new fancy feature or UI redesign to grab attention. Change for the sake of attention, which in turn requires more ads. What monster did Google raise…

Charles, do you want to work on a combined project? I think the two of us together could come up with something pretty amazing with composite output.

What platform were you thinking? I JUST started playing with trying to do NTSC out with nosdk8266 running at 173 MHz.

Specifically because theoretically you can output audio in ADDITION to the chroma and luma information if you crank up the clock.

Heck… Theoretically at 173 MHz, you could even transmit 8VSB and some realllyyy crazy ATSC stuff :-p But that is kinda reaching for the sky, and wayyy beyond my ability to troubleshoot with my dinky RTL-SDRs

Eager to see this new stuff. nosdk8266 and your various I2S code were very inspiring and useful for a bunch of personal projects, thanks! With them, a NodeMCU board and a couple of logic ICs were enough to get some nice RGB video output, which is indeed far less tricky than doing composite or broadcast like you, but was a lot of fun for a (quite old) newbie like me who had done almost no programming before :) https://youtu.be/rbVDYLhc94Y

Nicolho, you gotta do a proper video on that. PAL is awesome.

Oh, I am very much AVR-based, but I’m starting to get back into discrete electronics a bit. Send me a PM and I’ll show you some of my more interesting AVR stuff.

Cool, do you have a place where we can discuss NTSC generation? Having done a VGA game console, I want to dabble with exactly that (NTSC on either an ESP8266 or a cheap 100MHz STM32…). I have a bunch of (simple) questions for now, like how you get pixels of different luma that are narrower than the chroma carrier…

Hop on my Discord, go to cnlohr-projects. https://discordapp.com/invite/7QbCAGS

The short answer is that you can’t.

The long answer is that your results will vary depending on the input type and circuitry.

If you use an RF-modulated signal, the demodulation stage will filter out the higher frequencies and the results will be very poor.

If you use a composite input, then your results will vary depending on the quality/bandwidth of the composite decoding stage, and that will vary between different hardware, as you are working in an area that is outside of the specification.

To some degree you can improve quality by playing with phase and amplitude, which is a step beyond what most video generation techniques do.

The old IBM CGA standard was like this. The early CGA cards had both composite and RGB outputs and the colors were different on each because of phase issues.

I always enjoyed doing NTSC as well, often using gate propagation delays to create the color burst phase shift.

Here is one of my older NTSC projects that uses nothing but an XMega, with all the NTSC generated fully in software…

https://www.youtube.com/watch?v=CXFOTpM2Jn4&t=46s

Overclocked to 57.272 MHz in order to get 16 colors.
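The 57.272 MHz figure is 16 times the NTSC color subcarrier of 315/88 MHz (about 3.579545 MHz). A plausible reading, and it is only an assumption about how Brad’s code works, is that each subcarrier cycle then spans 16 CPU clocks, so a pixel pattern can start at any of 16 phase offsets 22.5° apart, each decoding as a different hue. The arithmetic as a small Python sketch:

```python
# NTSC color subcarrier: exactly 315/88 MHz (about 3.579545 MHz)
NTSC_SUBCARRIER_HZ = 315e6 / 88


def cpu_clock_for_phases(phases):
    """CPU clock at which one subcarrier cycle spans exactly `phases` clocks."""
    return phases * NTSC_SUBCARRIER_HZ


def phase_step_degrees(phases):
    """Hue step between adjacent clock-phase offsets (assumed hue model)."""
    return 360.0 / phases


for n in (4, 16):
    print(n, round(cpu_clock_for_phases(n) / 1e6, 3), phase_step_degrees(n))
# 4 14.318 90.0
# 16 57.273 22.5
```

The 4× case is the familiar 14.31818 MHz crystal; at 16× there are 16 distinct phase offsets, which lines up with the 16 colors mentioned.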

XMega can easily clock to 68MHz, so it’s not pushing too hard!

Brad

This brings back fond memories of the 80’s and 90’s era demo scene.

Cool demo! Do you have some project notes? Besides, what’s your resolution? VGA, RGB (thanks, EU SCART) and component are way simpler to generate (and better quality), but they seem to be on the way out, while composite is (alas) the one interface that is still available, well, apart from HDMI.

I haven’t seen the code for this but there’s some cleverness here. Clocking out pixels/sound is no big deal when you’ve got 40MHz to play with, I’m more interested in how it copes where the balls overlap each other.

There could be some sort of preprocessing in the vertical blank period but with only 512 bytes of RAM to play with you can’t store much information per line of video. Maybe it’s in the horizontal blank but I doubt it because that’s where the sound will be done, there won’t be enough CPU left over.

I’ll have to think about this one.

I can’t say for sure just from that video but I get the feeling that the vertical movement of the balls is cleverly synchronized so there’s never more than 2 balls on a single line of video. That would help a lot.
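Whether the demo really keeps the balls staggered like that is speculation, but the constraint is easy to check. A minimal Python sketch that counts how many sprites cover each scanline for a given set of ball positions; the 48-line ball height and 240-line screen in the examples are assumptions:

```python
def max_sprites_per_line(sprite_tops, sprite_height, screen_lines):
    """Return the largest number of sprites covering any single scanline.

    sprite_tops: top scanline of each sprite (may be partly off-screen).
    """
    counts = [0] * screen_lines
    for top in sprite_tops:
        # Clip the sprite's vertical extent to the visible screen
        for line in range(max(0, top), min(screen_lines, top + sprite_height)):
            counts[line] += 1
    return max(counts, default=0)


# Assumed 48-line balls on a 240-line screen
print(max_sprites_per_line([0, 40, 80], 48, 240))  # staggered: 2
print(max_sprites_per_line([0, 10, 20], 48, 240))  # bunched up: 3
```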

Also the “sound” isn’t the background music you hear, it’s the noises you hear after he touches the volume knob.

Looks like the code only draws every other line, and the next line is calculated while drawing a black line. One line of graphics is drawn to RAM buffer at a time, and the later drawn sprite just overwrites the prior ones. The ball sprites are 8 pre-rendered images of the rotation sequence, and the same sprite is shown in different colors.
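The line-buffer approach described above can be sketched roughly like this. It’s a Python model, not AtomicZombie’s assembly: the pixel codes, the 8-color palette indices and the 204-pixel width are assumptions. Sprites are composited into a fresh buffer in list order, so a later sprite simply overwrites an earlier one, and one set of pre-rendered frames is reused in any color by substituting the per-sprite color for “tint” pixels. A 204-entry buffer at one byte per pixel fits in the ATtiny85’s 512 bytes of RAM.

```python
# Hypothetical encoding for the pre-rendered ball frames:
TRANSPARENT = 0  # pixel not drawn (whatever is underneath shows through)
TINT = 1         # pixel takes the per-sprite color
HIGHLIGHT = 2    # pixel is always drawn white

BLACK, WHITE = 0, 7  # palette indices in an assumed 8-color palette
LINE_WIDTH = 204


def render_line(y, sprites, frames, width=LINE_WIDTH, background=BLACK):
    """Compose one logical scanline into a fresh line buffer.

    sprites: list of (x, y_top, frame_index, color); drawn in list order, so
    later sprites overwrite earlier ones (painter's algorithm).
    frames: list of pre-rendered frames, each a list of rows of pixel codes.
    """
    buf = [background] * width
    for sx, sy, frame_index, color in sprites:
        frame = frames[frame_index % len(frames)]
        row = y - sy
        if not 0 <= row < len(frame):
            continue  # this sprite does not touch scanline y
        for col, px in enumerate(frame[row]):
            x = sx + col
            if px == TRANSPARENT or not 0 <= x < width:
                continue
            buf[x] = color if px == TINT else WHITE
    return buf
```

On the hardware, a buffer like this would be filled while the black line is being output and streamed to the VGA pins on the following line, e.g. `render_line(vga_line // 2, sprites, frames)` for each drawn line.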

Wow!

Makes me wonder how portable this would be to the ATTiny861 … the 861 is just like the 85, but has more pins, so in theory, you’d have room for reading sensor inputs.

After hours of debugging, just when you pat yourself on the back for implementing an SPI slave interface on an ARM Cortex you see this. Respect. Wow.

That’s just about where I’m at right now. Got a crummy OLED screen the size of a thumbnail wired up to a nano via I2c and making some graphics. Drawing pixel bimbos and tiny spaceships. Acting like I’m hot shit or something. But for shame, this is absolutely ludicrous. I love what he’s squeezed out of that minuscule doodad.

Here’s one of mine:

https://www.youtube.com/watch?v=i2tqXP8jvhY

Now how about using seven of these chips to do a 640×480 display, with some pixel power left over for???

1 chip: 240×204 = 48960 pixels

4 chips: 480×408 = 195840 pixels, 111360 short of VGA’s 640×480 = 307200

7 chips: 342720 pixels, 35520 more than VGA.

Are you serious there?

You can’t just add up pixels by adding chips, you know?

Tell that to 3Dfx and their Scan Line Interleaving system.

As long as you’re adding up analog signals, one chip can remain quiet while the other is spitting out the rest of the scanline.

Even without using two cards, that’s basically what they were doing on the cards, if I remember correctly.

My god, where to start… I’ll try to put it simply: you can’t multiply the pixels by adding more ATTiny85s. The limiting factor is the CLOCK SPEED. More ATTiny85s will still be limited by the CLOCK SPEED. The one that’s being used is already pushing as many pixels as it can at 40MHz. You could try offsetting 2 devices by half a clock, running an 80MHz oscillator and alternating the output between 2 chips, but the inter-chip communication would become more of an overhead each time you added more. You’d need at least a 3rd chip to control the 2 Tinys, and adding more would become ridiculously expensive and over-complicated when you would obviously just use a different MCU instead. Probably one with DMA, actually. The whole point of this project was to show what could be done on a single ATTiny85.

To be fair, he is running the ATTiny at 40MHz, double the specified maximum of the chip.

Without any cooling. I wonder if it could be pushed farther.

Maybe? The key is the die temperature: since this is running at room temperature, there is a lot of margin available. The die is surely running very hot, which is why this uses a clock and not a crystal; the crystal oscillator would have issues running at 40MHz. At some point physics takes over, the margin in the internal circuitry runs out, and things like drive strength and capacitance can’t keep up.

There is zero temperature increase from overclocking any ATTiny, or XMega.

I have done extensive testing for many years.

It needs to run at 40MHz because VGA is so fast. He is outputting one pixel every 5 clocks. That is the metric to look at: pixels per clock. You can’t do much better than 5 clk/pixel without hardware help on an AVR. The best you can do is 3, and that is just LD/OUT.
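To see why 40MHz and 5 clocks per pixel land so close to the 204×240 mode, here is the cycle budget against standard 640×480 at 60Hz VGA timing (25.175MHz pixel clock, 800 clocks per line, 640 of them visible). The sketch assumes, following the comment above about every other line being black, that each logical row occupies two VGA scanlines. It gives about 1271 CPU cycles per scanline and 203 pixels across, within a pixel of the 204 in the writeup; the exact count depends on how much of the line the demo treats as visible.

```python
# Standard 640x480 @ 60 Hz VGA timing
VGA_PIXEL_CLOCK_HZ = 25.175e6
H_TOTAL = 800     # pixel clocks per scanline, including blanking and sync
H_VISIBLE = 640   # pixel clocks in the visible part of a scanline
V_VISIBLE = 480   # visible scanlines per frame


def cycle_budget(cpu_hz, clocks_per_pixel, line_repeat=2):
    """Return (cycles per scanline, visible cycles, pixels across, pixels down)."""
    cycles_per_line = cpu_hz * H_TOTAL / VGA_PIXEL_CLOCK_HZ
    visible_cycles = cpu_hz * H_VISIBLE / VGA_PIXEL_CLOCK_HZ
    pixels_across = int(visible_cycles // clocks_per_pixel)
    # Assumption: each logical row occupies `line_repeat` VGA scanlines
    pixels_down = V_VISIBLE // line_repeat
    return cycles_per_line, visible_cycles, pixels_across, pixels_down


total, visible, across, down = cycle_budget(40e6, 5)
print(round(total), round(visible), across, down)  # 1271 1017 203 240
```

Dropping to the 3-clock LD/OUT minimum would give `cycle_budget(40e6, 3)[2] == 338` pixels across, with no cycles left for sprites or sound during the visible part of the line.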

Yep. I’ve written a few demos (including some on Tiny85) so I have a good idea of how many clock cycles are needed for video signals.

I was watching this and thinking “not possible” until I saw the “40MHz” at the end. The real surprise here is that a Tiny85 can run at 40MHz (twice its maximum rated speed).

I wonder what voltage he’s running at.

5 volts.

I’m on my knees… And happy to see there are still people interested in writing tight code.

Some of us still do. :-)

These days I’m happy when people don’t use a virtual machine.

There is another cool demo for an AVR chip by lft, the 1st place winner at Breakpoint 2008: https://youtu.be/sNCqrylNY-0

Linus is one of those guys that drives me to try harder!

His music routines and talent are next level.

Brad

Have you already seen lft’s Bit Banger?

Yes, I always keep up on his projects.

I hope to do something like his “Chip-o-Phone” to make music for my Quark-85 Demos one day.

Just need endless free time!

A through-hole decoupling capacitor on a breadboard running at 40MHz, unbelievable!

How about 100 decoupling capacitors on a breadboard running at 40MHz?…

https://www.youtube.com/watch?v=HqSYwKYwtOo

Don’t underestimate what one can make on a breadboard!

Brad

But at these frequencies the capacitor behaves more like an inductor, plus there’s the inductance of the wires. It’s strange to me that it works, but I rarely use breadboards.
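The point about inductance holds in principle: a real capacitor has a self-resonant frequency f = 1/(2π√(LC)), above which its lead and wiring inductance dominates. A quick Python sketch with assumed values (100nF and about 10nH of lead plus breadboard inductance; illustrative numbers, not measurements of Brad’s boards):

```python
import math


def self_resonant_frequency(c_farads, l_henries):
    """Frequency at which capacitive and inductive reactance cancel."""
    return 1.0 / (2.0 * math.pi * math.sqrt(l_henries * c_farads))


def reactance(f_hz, c_farads, l_henries):
    """Net reactance of an ideal series L-C model; positive means inductive."""
    w = 2.0 * math.pi * f_hz
    return w * l_henries - 1.0 / (w * c_farads)


C = 100e-9  # assumed 100 nF decoupling capacitor
L = 10e-9   # assumed ~10 nH of lead plus breadboard inductance

print(round(self_resonant_frequency(C, L) / 1e6, 2))  # 5.03 (MHz)
print(round(reactance(40e6, C, L), 2))                # 2.47 (ohms, inductive)
```

So at 40MHz such a capacitor is indeed inductive, but it still presents only a few ohms of impedance, which is far from ideal without being useless.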

This is very cool. I once did an NTSC overlay using a PIC. We needed our ham radio ID to be on a camera going up in a high-altitude balloon. I could put nearly any metric I wanted on it from some sensors, and I used the font from an Apple II. But this is way cooler. This could be set up to run as a “graphics card” for another AVR or PIC, using serial/I2C/SPI to send drawing primitives.

I don’t think it’s possible to use it as a “graphics card” for other chips.

The ATtiny is busy generating the video signal, and timing is critical here.

Maybe by turning off the display while it updates?

And even then, the RAM is so small that it would be close to impossible to store anything decent/usable in there.

It’s a nice demo, but I don’t think it can be used for anything else.

He might draw retrace lines as in my TTL computer and run the application during those.

Thanks for posting my Quark-85 Project.

This version of the code is just the beginning.

I have since added selectable waveforms to the 4-voice audio, text, lines, and even an RLE graphics decoder.
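Brad doesn’t describe how the selectable waveforms work, so this is only a common way to do it, not his code: four wavetable voices driven by 16-bit phase accumulators (direct digital synthesis), mixed down to one 8-bit output. Assumptions: one sample per VGA scanline (about 31.5kHz, in line with the earlier guess that sound is handled in the horizontal blank), 256-entry 8-bit tables, and the particular waveform set.

```python
# Assumed sample rate: one audio sample per 640x480 VGA scanline (~31469 Hz)
SAMPLE_RATE = 25.175e6 / 800
TABLE_SIZE = 256

# Three selectable 8-bit waveforms (assumed set), 256 entries each
SQUARE = [255 if i < 128 else 0 for i in range(TABLE_SIZE)]
SAW = list(range(TABLE_SIZE))
TRIANGLE = [2 * i if i < 128 else 2 * (255 - i) for i in range(TABLE_SIZE)]


def phase_increment(freq_hz, sample_rate=SAMPLE_RATE):
    """16-bit phase-accumulator step for a given output frequency."""
    return round(freq_hz * 65536 / sample_rate)


class Voice:
    def __init__(self, waveform, freq_hz):
        self.waveform = waveform              # selectable table
        self.step = phase_increment(freq_hz)
        self.phase = 0                        # 16-bit accumulator

    def next_sample(self):
        self.phase = (self.phase + self.step) & 0xFFFF
        return self.waveform[self.phase >> 8]  # top 8 bits index the table


def mix(voices):
    """Sum four 8-bit voices and divide by 4 to get back to 8 bits."""
    return sum(v.next_sample() for v in voices) >> 2


voices = [Voice(SQUARE, 220), Voice(SAW, 330), Voice(TRIANGLE, 440), Voice(SAW, 550)]
samples = [mix(voices) for _ in range(1000)]
```

Swapping a voice’s `waveform` table is all it takes to change its timbre, which is why wavetables are a popular choice when cycles are scarce.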

I am also trying to decode a TV remote on the fly to add joystick capabilities.

Having only 4 IO pins makes it difficult, but that’s why I chose this chip.

Hope this insanity helps promote what can be done with a little bare metal programming.

I often use what I learn on these fun projects in the real world… minus the overclocking!

Cheers!

Radical Brad

Wait! You can’t do that! Breadboards are limited to 1MHz.

To break the 1MHz breadboard barrier, I sealed my entire house and evacuated it to 1 Torr. I then submersed the breadboard into 50 gallons of liquid nitrogen.

This is the only way a breadboard can ever exceed 1MHz.

Brad

I hope your bed is dry? ;)

If you can only work at 1MHz on a breadboard, you’re doing something wrong.

You can get upwards of 10MHz with good layout, good decoupling and short wires.

I am getting 40MHz on that massive 48-panel breadboard by adapting to the problem rather than trying to get around it. Some of my high-frequency wires span over 20 inches and carry nanosecond-critical timing. Registering data, using gate delays, matching wire lengths, and planning chip placement all help.

The board in the photo now has almost 150 ICs on it, and it runs at 40, 25, and 10 MHz all the way through. It displays perfect 640×480 video with 4096 colors, and includes a 20 MHz bandwidth, alpha-aware bit blitter.

The breadboard is made by chaining 48 breadboards together.

All parts on the board are 7400 series logic and 10ns SRAM.

I laugh when I hear that “few MHz” breadboard claim!

I can’t remember the last time I did a board less than 20 MHz.

Even did a few RF boards in the 100-200MHz range.

Brad

I have seen many of your projects and they are extremely impressive. I even have a DigiRule.

Your breadboard wiring is very neat and very well laid out as far as clock distribution, phase and propagation delays are concerned, and you very much push the boundaries of what can be done on a breadboard.

For almost anyone else, attempting upwards of 10MHz will probably be met with disappointment unless they have a sound knowledge of analogue principles.

Though having a higher clock driving something like an MCU, shift register or counter can work well with shorter wires.

On longer wires and at higher frequencies, what starts as a square wave at one end may well be closer to a sine wave at the other end. This may still drive a TTL chip, especially a Schmitt-triggered input, but it will introduce a lot of phase delay, which can have unpredictable results and is difficult to debug.

Keep up the good work!

Thanks for your comments.

Yes, I do believe that careful planning and neatness does count for something on a breadboard.

The only contradiction has been this ongoing project…

http://sleepingelephant.com/ipw-web/bulletin/bb/viewtopic.php?f=11&t=8734&sid=7d07ab8727945367542106df2db6d463&start=150#p99426

For the first time, I have broken the 640×480 barrier, and did it with this wiring mess. I always make a mess when initially testing, my thinking is that if it works like this, then it will work much better with shorter, carefully laid wires.

That board is only 25 MHz, but it does pump 36 Address lines and 24 Data lines at that speed, and is sub-nanosecond critical.

I guess my point is… don’t be afraid to try anything on a breadboard!

Brad

Today I learned what “Racing the Beam” means.

Of course on a Propeller you can probably do this with 4 or maybe even 9 monitors all running at 640×480, and let the balls go from one screen to another. But yes, the Propeller has 40 pins and this microcontroller only has 8, so color me impressed!

===Jac

The largest hurdle I found was the 512 Bytes of RAM… less than the number of bytes in your mouse pointer graphic!

The ATTiny85 also has only 4 usable IO pins, not 8. There is a 5th pin, but it can only be used as input.

Other than that, it is a mini supercomputer!

Brad

Get a high-voltage programmer (serial for the ATtiny85?) and set that last pin free!

I can’t even think of what madness you’d be up to with one more pin available. (Can’t help with the RAM.)

You read my mind!…

https://www.avrfreaks.net/comment/2421051#comment-2421051

Brad

Each cog has 512 longwords (not bytes) and the hub has an additional 32KB.

But yes, agreed.