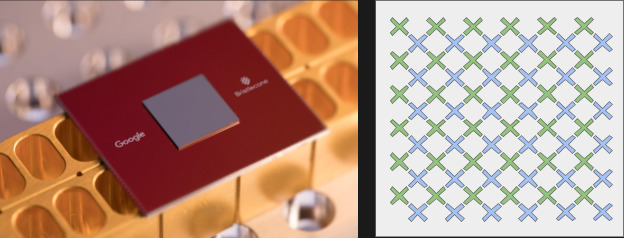

At the American Physical Society conference in early March, Google announced their Bristlecone chip was in testing. This is their latest quantum computer chip which ups the game from 9 qubits in their previous test chip to 72 — quite the leap. This also trounces IBM and Intel who have 50- and 49-qubit devices. You can read more technical details on the Google Research Blog.

The number of qubits isn’t the whole story, though. Qubits that stay coherent longer matter, and so does low noise: the higher the noise, the more redundant qubits you need to get a reliable answer. That’s fine, but it leaves fewer qubits for working on your problem.

The previous Google device had a low error rate, and the new chip uses the same topology. Google hopes to demonstrate similarly low error rates on it, too. You can see a graphical representation of the layout on the right-hand side of the graphic at the top of this post.

This is the latest in the race to reach what is known as quantum supremacy. Quantum computing simulators exist that compute state information for all possible states of a multi-qubit system (something a quantum computer does naturally). That works, but at about 50 qubits, the computation and memory load is too great for modern conventional computers. That means a successful quantum computer in that range could solve certain kinds of problems that modern computers can’t, and everyone wants to be the first to demonstrate this.
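A quick back-of-the-envelope calculation shows why simulators hit a wall around 50 qubits. This is a minimal sketch, assuming a naive state-vector simulator that stores every amplitude as a 16-byte double-precision complex number; cleverer simulators can sometimes do better on particular circuits.

```python
# Back-of-the-envelope memory cost of a naive state-vector simulator.
# Assumption: every amplitude is a double-precision complex number (16 bytes).
BYTES_PER_AMPLITUDE = 16


def statevector_bytes(n_qubits: int) -> int:
    """An n-qubit state has 2**n complex amplitudes, one per basis state."""
    return (2 ** n_qubits) * BYTES_PER_AMPLITUDE


def human(nbytes: int) -> str:
    """Format a byte count with binary (1024-based) units."""
    units = ["B", "KiB", "MiB", "GiB", "TiB", "PiB", "EiB", "ZiB"]
    value = float(nbytes)
    for unit in units[:-1]:
        if value < 1024:
            return f"{value:.0f} {unit}"
        value /= 1024
    return f"{value:.0f} {units[-1]}"


for n in (9, 30, 50, 72):
    print(f"{n:>2} qubits: {human(statevector_bytes(n))}")
# Expected: 9 -> 8 KiB, 30 -> 16 GiB, 50 -> 16 PiB, 72 -> 64 ZiB
```

Each extra qubit doubles the memory, so 30 qubits fit on a workstation while 50 qubits already need petabytes.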

Speaking of simulations, you can experiment with quantum computing in your browser. Even Microsoft is in the game, with a simulator they claim is more capable than others.

I’m not saying that quantum computing is bullshit in its entirety… but it seems that the more qubits one adds to a system, the more unreliability is introduced. It is my admittedly limited understanding that this unreliability lies in the chaotic nature of quantum physics itself, and is not simply an engineering problem to be solved by stabilizing these pesky atoms.

Let me ask a simple question: how many qubits are necessary to attempt to decrypt SHA256, and at what error rate?

About three-fiddy.

Depends how soon you want it done.

You could do it on a 4004 if you don’t mind waiting a wee while.

“a wee while” = exactly how many universe deaths?

That’s a meaningless question, because there are series and parallel quantum machines.

These chips, as far as I know, operate on “quantum annealing”. You load the domains up with magnetic or electric fields, and then drop the temperature down to where they become superconducting, at which point the energy tensions you introduced into the system re-arrange themselves into superpositions, and when you bring the temperature back up you get a new arrangement that depends on the probabilities of the wavefunctions. You repeat that over and over, and you get statistical patterns that correspond to an answer to some question you were posing to the system.

Decrypting SHA would need you to put the system in some sort of tension where the lowest energy state is the correct decrypted message.
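The “lowest energy state is the answer” idea behind annealing can be illustrated classically. This is a brute-force sketch over a made-up three-spin Ising problem (the biases `h` and couplings `J` are arbitrary, hypothetical values); it says nothing about how the Google or IBM chips work or how SHA could be encoded.

```python
from itertools import product

# Hypothetical 3-spin Ising problem: each spin is +1 or -1.
# h holds per-spin biases, J holds pairwise couplings (arbitrary values).
h = {0: 0.5, 1: -1.0, 2: 0.2}
J = {(0, 1): -1.0, (1, 2): 1.0, (0, 2): 0.5}


def energy(spins):
    """Ising energy E(s) = sum_i h_i s_i + sum_(i,j) J_ij s_i s_j."""
    e = sum(h[i] * spins[i] for i in h)
    e += sum(J[i, j] * spins[i] * spins[j] for (i, j) in J)
    return e


# Enumerate all 2**3 spin configurations and keep the lowest-energy one.
# The cost grows exponentially with the number of spins; an annealer is
# meant to relax toward this ground state physically instead.
ground = min(product((-1, 1), repeat=3), key=energy)
print(ground, energy(ground))
```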

Kind of reminds me of the field of DNA computing and the attempts at solving the Traveling Salesman problem.

The chips Google and IBM produce are most certainly not quantum annealers but “real” gate-based qubits; after all, quantum annealers with several thousand qubits are already available as commercial products (see D-Wave).

Not even close. Qubits don’t exist above a few millikelvin. That’s about 0.002 K above absolute zero. The quantum state is read by another, non-quantum circuit at a slightly higher temperature, and the data is then carried out to room temperature via wires.

Cycling the temperature would require the better part of a day. Possibly two for each computation.

The compute element that controls the quantum domain runs on software, not some magical or manual manipulation of magnetic fields. In fact, magnetic fields disturb the quantum effect and render the chip useless.

Quantum error correction is a thing:

https://en.wikipedia.org/wiki/Quantum_error_correction#The_Shor_code

You can perform error correction every so often, though obviously this requires more gates/bits. If the error rate of each gate is low enough, you can in theory build a circuit of any size.

The idea that errors can be corrected to compensate for the noise added when scaling is called the threshold theorem: if the error rate is below the threshold, it is possible to compensate, but it requires more qubits.

However, that is only the noise-correction part of the problem; then there is the problem of interconnecting the qubits. I’ve read that it’s possible to build a quantum computer in a modular manner, so that should also be solvable, but it requires even more qubits.
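The threshold behaviour can be seen in a toy model. This is a minimal sketch, assuming the simplest classical analogue: each bit is stored as three copies and read by majority vote, each copy flips independently with probability `p`, and the encoding can be applied recursively. It ignores phase errors, which real quantum codes such as the Shor code must also handle, so its 50% threshold is far more forgiving than anything real hardware gets.

```python
def majority_vote_error(p: float) -> float:
    """Failure probability of a 3-copy majority vote when each copy flips
    independently with probability p: exactly two flips (3 ways) or all three."""
    return 3 * p**2 * (1 - p) + p**3


def concatenated_error(p: float, levels: int) -> float:
    """Apply the 3-copy encoding recursively: each level's output error rate
    becomes the next level's input error rate."""
    for _ in range(levels):
        p = majority_vote_error(p)
    return p


# Compare a per-bit error rate below the toy threshold (0.1) with one above it (0.6).
for p in (0.1, 0.6):
    rates = [round(concatenated_error(p, k), 6) for k in range(4)]
    print(p, rates)
```

Below 0.5, each extra level of encoding (each tripling of the bit count) drives the error down; above it, encoding only makes things worse. That trade of more bits for lower error is the threshold theorem in miniature.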

Bad question: SHA256 is a hash algorithm, not an encryption algorithm.

BUT the general question of “how many qubits do we need to decrypt our current encryption algorithms?” is a good one:

RSA 2048-bit – 4098 qubits

Elliptic curve 256-bit (128-bit security) – 2330 qubits

So we’re still quite a way off, especially since these counts are logical (error-corrected) qubits, and your stability point is also important.

So how many for RSA 8192 bits?

For RSA it’s about twice the number of key bits (plus a few), whatever the key size. So we’re talking around 16k qubits.
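The rule of thumb can be written down directly. This is a minimal sketch, assuming the two formulas below, chosen because they reproduce the 4098 (RSA-2048) and 2330 (256-bit curve) figures quoted above. They count logical, error-corrected qubits, so the number of physical qubits on a real chip would be much larger.

```python
import math


def rsa_logical_qubits(n_bits: int) -> int:
    # Assumption: 2n + 2 logical qubits for Shor's algorithm on an
    # n-bit RSA modulus; reproduces 4098 for RSA-2048.
    return 2 * n_bits + 2


def ecc_logical_qubits(n_bits: int) -> int:
    # Assumption: 9n + 2*ceil(log2 n) + 10 logical qubits for an
    # n-bit prime-field elliptic curve; reproduces 2330 for n = 256.
    return 9 * n_bits + 2 * math.ceil(math.log2(n_bits)) + 10


print(rsa_logical_qubits(2048))  # 4098
print(rsa_logical_qubits(8192))  # 16386
print(ecc_logical_qubits(256))   # 2330
```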

Thanks!

There is no way to “decrypt” a hash like SHA256: many different original texts correspond to a single hash. It is one-way.

As for quantum computers themselves, there is no known reason they should not work in the future, and theoretically one could find some tricks to keep coherence much longer… but it will take some time.
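The many-to-one point about SHA256 follows from a counting argument. This sketch uses Python’s standard `hashlib` to show that the digest length is fixed no matter how long the input is; the sample inputs are arbitrary.

```python
import hashlib

# SHA-256 always produces a 32-byte (256-bit) digest, whatever the input size.
inputs = [b"", b"hello", b"x" * 1_000_000]
for data in inputs:
    digest = hashlib.sha256(data).digest()
    print(len(data), len(digest))

# Pigeonhole: there are only 2**256 possible digests, but already 2**264
# distinct 33-byte messages, i.e. 2**8 = 256 messages per digest on average.
# So a digest cannot identify a unique original text to "decrypt" back to.
```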

“That means a successful quantum computer in that range could solve certain kinds of problems that modern computers can’t, and everyone wants to be the first to demonstrate this”

Not with the example given, at least. It doesn’t matter whether a quantum computer can “simulate” (read: run) a quantum machine faster than a conventional computer; what matters is whether the quantum machine solves a useful problem faster than a POCS* can.

(* Plain Old Computer System)

It’ll still be a while before we have quantum cards to plug into our machines.

When the casinos get quantum cards, will it be easier or harder to cheat at Blackjack?

It will be in a superposition of both easier and harder, until you try it.

If casino security comes to tell you your business is no longer welcome, it was easier.

Where is Apple with their 20 qubit offering that is covered in glass and costs six times as much?

They are just finishing up the marketing materials and responsive webpage for it now.

When will they run Linux?

It won’t. Well, actually, it will run some form of Unix/Linux/Minix, but Google will call it “proprietary” and name it “Quandroid”.

My limited understanding of the subject was that the advantage of quantum computers was their ability to analyze patterns. The story I read stated that quantum computers would have advantages in face matching and picture comparison. Human brains are designed to recognize patterns, and most of our ability to learn comes from this.

I think of a quantum computer as a digital computer with an integrating analog system. Instead of loading in a digital code, you load in a 2D waveform and somehow get a statistical answer on how well it matches the answer you’re looking for. I don’t think decrypting an exact key is the advantage of a quantum computer. Think of encryption algorithms as waveforms: I would guess the quantum computer’s advantage would be in discovering the encryption method (the waveform) from a series of encrypted transmissions. Once they have the encryption method, spitting out the decrypted data would be done by the digital processors using the encryption waveform.

The similarity between human brains processing patterns and quantum computers’ advantage in pattern analysis means they will also be plugged into the big AI computing battle. The first AI to really work could require something like this to learn at an accelerated rate.

Possibly the future Isaac Asimov positronic brain.

And just because $ony likes to do things differently, they will have a negatronic brain.

A ‘negatron’ is just an electron.

Will they be able to correct your English?

But can you run Doom on it yet?

It will be a superposition of Doom and Crysis, and it will/won’t run until you try it.

As it is beyond our conventional understanding of the Time-Space Continuum, you have already run it in a different universe of the multiverse.

“This is their latest quantum computer chip which ups the game from 9 qubits in their previous test chip to 72 — quite the leap.”

One might even say, a

I’ll show myself out

This just in: using HTML tags in a comment will simply blot that part out. Haha.

One might say a “quantum leap”.

lol bazinga!

Zimbabwe!

Waiting for the day when Musk gets ahold of one of these, drops it in a strong Brownian motion producer (say a nice hot cup of tea), and we can finally go check out those plans in Alpha Centauri these guys with bouncy antennas told me about in the middle of the Nevada desert.

Beware of leopard.

I liked the original without the “obvious” bits