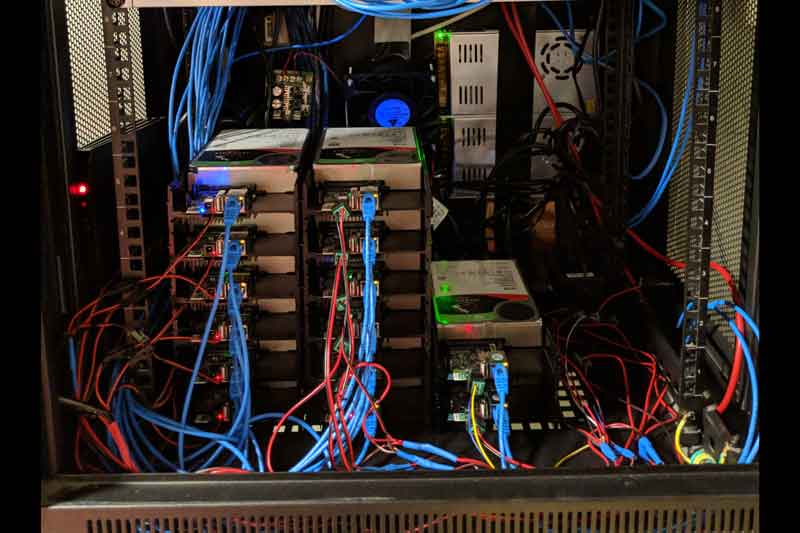

Most of us accumulate stuff, like drawers full of old cables and hard drives full of data. Reddit user [BaxterPad] doesn’t worry about such things, though, as he built an impressive Network Attached Storage (NAS) system that can hold over 200TB of data. That’s remarkable enough, but the real artistry is in how he did it: he built the system from Odroid HC2 single-board computers running GlusterFS, combining great redundancy with low power usage.

The Odroid HC2 is a neat little single-board computer that offers a single SATA interface and runs Linux. [BaxterPad] acquired sixteen of these and installed a decent-sized hard drive on each. He then installed GlusterFS, a distributed network file system that can automatically spread the data over these drives, making sure that each bit of data is stored in multiple locations. It presents this data to the user as a single drive, though, so they don’t need to worry about where a particular bit of data is stored. If any of the drives or HC2 systems fails, the NAS system will keep on working without a hiccup.
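To make the idea concrete, here is a toy model of a distributed-replicated layout, assuming a replicated volume that keeps two copies of every file (the article does not say which Gluster volume type is used). Gluster groups bricks into replica sets in the order they are listed when the volume is created; the sketch mimics that, then picks a set for each file by hashing its name. Gluster’s real placement uses its own hash function and per-directory hash ranges, so SHA-256 modulo the number of sets is only a stand-in, and the `hc2-NN` hostnames are hypothetical.

```python
import hashlib

def replica_sets(nodes, replica=2):
    """Group nodes into replica sets, consecutive nodes forming one set."""
    if len(nodes) % replica:
        raise ValueError("node count must be a multiple of the replica count")
    return [nodes[i:i + replica] for i in range(0, len(nodes), replica)]

def place(filename, sets):
    """Pick the replica set that stores a file (stand-in hash, not Gluster's)."""
    digest = hashlib.sha256(filename.encode("utf-8")).digest()
    return sets[int.from_bytes(digest[:4], "big") % len(sets)]

nodes = [f"hc2-{i:02d}" for i in range(16)]  # hypothetical hostnames
sets = replica_sets(nodes)
print(len(sets))  # 8
print(sets[0])    # ['hc2-00', 'hc2-01']
print(place("photos/2018/img001.jpg", sets))  # whichever two-node set the hash selects
```

Every file lives on both nodes of its set, which is why a single failed HC2 leaves all data readable in this model.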

A system like this isn’t new: software like FreeNAS and UnRAID allows you to easily build a computer that spreads data over multiple drives and keeps running if one of them fails. The difference here is that this system can survive the failure of one or more of the computers, as long as the copies of each piece of data are not all on the machines that failed. If the computer running your FreeNAS server dies, your data isn’t accessible until you get it back up and running. If one or more of the Odroid HC2 computers in this system dies, it will keep on going. It’s also easier to expand: just buy another HC2, slap in a hard drive, run a couple of commands, and it will be seamlessly added to the available storage.
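Surviving the loss of more than one computer comes with a caveat. Assuming a replicated volume in which each file is copied to every node of a small replica set (an assumption; the article does not specify the volume type), a file stays readable as long as at least one node in its set is up. The cluster therefore tolerates many failures spread across sets but loses data if every node of one set goes down. A minimal check, with hypothetical node names:

```python
def readable(replica_set, failed):
    """A file survives while at least one node holding a copy is still up."""
    return any(node not in failed for node in replica_set)

def lost_sets(sets, failed):
    """Replica sets whose every node has failed; their files are unavailable."""
    return [s for s in sets if not readable(s, failed)]

# Hypothetical two-way replica sets.
sets = [["hc2-00", "hc2-01"], ["hc2-02", "hc2-03"], ["hc2-04", "hc2-05"]]
print(lost_sets(sets, {"hc2-00", "hc2-02", "hc2-05"}))  # []
print(lost_sets(sets, {"hc2-00", "hc2-01"}))            # [['hc2-00', 'hc2-01']]
```

Three failed nodes cost nothing when they sit in different sets; two failed nodes in the same set make part of the data unavailable.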

[BaxterPad] also points out that this system doesn’t use much power: each HC2 consumes about 12 W, and the entire system (including a rackmounted PC running VMware) takes less than 250 W. That’s a lot less than a typical high-end server system.
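Taking the quoted figures at face value, the arithmetic is simple. The sketch multiplies out the per-node figure and converts the system total into yearly energy; it assumes the 12 W figure applies to every node continuously, which is likely pessimistic if that number is a peak rather than an average.

```python
NODES = 16
WATTS_PER_NODE = 12.0   # "about 12 W" per HC2, as quoted
SYSTEM_MAX_W = 250.0    # whole system incl. the VMware host, as quoted
HOURS_PER_YEAR = 24 * 365

nodes_w = NODES * WATTS_PER_NODE                      # draw of the storage nodes alone
other_w = SYSTEM_MAX_W - nodes_w                      # left over for the PC and the rest
kwh_per_year = SYSTEM_MAX_W * HOURS_PER_YEAR / 1000   # upper bound, running 24/7

print(nodes_w, other_w, kwh_per_year)  # 192.0 58.0 2190.0
```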

GlusterFS rocks.

Might use it when I put together a NAS with a few scrounged PCs

I was trying to add armv7 nodes to my ceph cluster (much the same as gluster but I’ve used it before so familiarity++) however recent releases only have packages for armv8 and so far it’s been resistant to my attempts to compile it. I mention this because the Odroid HC2 boards are armv7.

Although it’s not hard to “fix the problem” I really wish the HC2 had PoE built into it.

OK, there’s an external adapter (only $5), but built in would be better.

There is a discussion about this on the Reddit thread. They concluded that adding PoE would increase the cost fairly significantly and would not offer much benefit. For this sort of use, I agree. Powering a NAS over Ethernet doesn’t make a lot of sense, as you are adding too many links and single points of failure. It would definitely be useful for other stuff, though.

Not really. A typical wall wart is not an efficient power supply at all. Since we are talking about a NAS here, using PoE does not create additional single points of failure. Each NAS device requires a network connection to be operational in the first place, which already makes the Ethernet connection a single point of failure; PoE running over the same connection changes nothing. The centralized PoE supply in the Ethernet switch is definitely more power efficient and most likely more reliable than a typical wall wart supply.

Looks like they are using two 12 V 30 A supplies to run all the nodes.

Exactly, it’s not some run-of-the-mill stack of wall warts.
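A quick check of those supplies, assuming each is a 12 V unit rated for 30 A and each node draws the roughly 12 W quoted in the article. This is steady-state only: hard drive spin-up surges are not modeled, and the thread does not say whether the two supplies are wired to share the load redundantly.

```python
SUPPLY_V = 12.0
SUPPLY_A = 30.0
SUPPLIES = 2
NODES = 16
NODE_W = 12.0  # per-node figure quoted in the article

capacity_w = SUPPLY_V * SUPPLY_A * SUPPLIES  # combined rating of both supplies
load_a = NODES * NODE_W / SUPPLY_V           # total node current at 12 V
print(capacity_w, load_a)                    # 720.0 16.0
print(load_a <= SUPPLY_A)                    # True: one supply covers the steady load on paper
```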

Now imagine the conversion losses and supporting hardware of going from mains AC to about 50 V, carried over what is probably cheap Cat5/6 (too many people skimp there), and then further down to 12 V and 5 V.

Compare that with going straight from mains AC to the desired regulated DC with a properly specced switch-mode supply.

I’ll bet my ass the difference is noticeable, so much so that adding PoE would be far less practical than including UPS circuitry supporting either lead-acid or lithium batteries for scenarios where uptime is crucial.

Not to mention, I doubt the target demographic has their houses rigged up with Gigabit PoE, or intends to build a cluster after getting their hands on an inexpensive (when compared to a “vanilla” one) Gigabit PoE switch.
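The efficiency argument in this comment comes down to multiplying stage efficiencies. The figures below are hypothetical placeholders, not measurements of any real switch, cable, or supply. The point is only that every extra conversion stage multiplies in another loss, so the outcome depends entirely on the real per-stage numbers.

```python
from math import prod

def chain_efficiency(stages):
    """Overall efficiency of conversion stages connected in series."""
    return prod(stages)

LOAD_W = 192.0  # 16 nodes x ~12 W, from the article

# Hypothetical per-stage efficiencies, not measured values.
paths = {
    "PoE (AC -> ~50 V -> cable -> 12 V)": [0.90, 0.97, 0.90],
    "direct (AC -> 12 V switch-mode)": [0.90],
}

for name, stages in paths.items():
    eff = chain_efficiency(stages)
    print(f"{name}: {eff:.1%} efficient, ~{LOAD_W / eff:.0f} W from the wall")
```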

I’m not sure it adds significant cost, but that depends on your view of what counts as significant.

That said, it would be enough to give us a header. The RPi guys finally caved in and did that.

Something off the shelf rather than in-house, such as modules from Silvertel, which are used by hundreds if not thousands of OEMs as drop-in PoE solutions.

As for powering a server over PoE not being sensible, I’d suggest that far more mission-critical devices are being powered over PoE commercially than a redundant file storage cluster that can happily lose a few nodes and survive.

IP cams, Access control, Intruder systems, and so on.

A decent-quality 24-port Gbit Class 4 PoE managed switch can be had for under $300.

It’s not enough to add a header, the magnetics should also be capable of handling PoE.

Cost versus efficiency would obliterate PoE as a choice.

It makes sense for remote IP cameras, VoIP phones, or part of an alarm/home automation system, but not for something like a low-power NAS.

For a cluster setup, a power rail supplied by a switch-mode power supply with remote feedback from the rail is far more efficient and cheaper than a gigabit PoE switch, or a bunch of injectors plus a normal gigabit switch.

Well, if you already have a PoE-capable switch running your phones and cameras, then it would be pretty cost-effective to use it to power your NAS devices as well. Your switch-mode power supply idea works if the NAS devices are all in the same area. Since each NAS device requires an Ethernet connection anyway, it makes sense that PoE might be desired.

Just as long as one sticks within PoE+ limits.
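To put numbers on those limits: IEEE 802.3af guarantees 12.95 W at the powered device, and 802.3at (PoE+) guarantees 25.5 W. The sketch compares the article’s roughly 12 W per HC2 against both, with an optional safety margin. The margin is a hypothetical parameter, and drive spin-up can briefly exceed the steady-state figure. The switch’s total PoE budget would also need to cover all 16 nodes, about 192 W at that figure.

```python
# Minimum power guaranteed at the powered device (PD), per IEEE 802.3 standard.
PD_BUDGET_W = {
    "802.3af (PoE)": 12.95,
    "802.3at (PoE+)": 25.5,
}

def fits(device_w, standard, margin=0.0):
    """True if the device draw plus a fractional safety margin fits the PD budget."""
    return device_w * (1 + margin) <= PD_BUDGET_W[standard]

HC2_W = 12.0  # steady-state figure quoted in the article
for std in PD_BUDGET_W:
    print(std, fits(HC2_W, std), fits(HC2_W, std, margin=0.2))
# 802.3af (PoE) True False
# 802.3at (PoE+) True True
```

With a 20% margin, plain 802.3af no longer fits, which is why PoE+ is the safer target.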

Well, since it is your own system and you know the power needs and limits, use DIY passive PoE rather than standard PoE with its handshaking chips. Just put PoE power inserters after your network switch, like these https://andahammer.com/index.php?route=product/product&path=72&product_id=70 and these https://andahammer.com/index.php?route=product/product&path=72&product_id=71 and, if you need a lot of 12 V and can handle some fan noise, there is this https://www.ebay.com/itm/HP-Proliant-DL580-DL785-Server-1200W-Power-Supply-Unit-438202-001-440785-001/113042428149?hash=item1a51da90f5:g:5BQAAOSwj2Za14lh and you can use a $1 splitter at each device.
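Before running low-voltage passive PoE over long cables, it is worth estimating the voltage drop, since nothing negotiates or regulates it. The sketch assumes solid-copper 24 AWG conductors at roughly 0.084 ohm per metre, one pair per polarity (two conductors in parallel), and estimates current at the nominal supply voltage; cheaper cable will do worse. The 30 m run and 12 W load are illustrative.

```python
OHMS_PER_M = 0.0842  # approx. resistance of one 24 AWG copper conductor (assumption)

def passive_poe_drop(load_w, supply_v, length_m, wires_per_leg=2, ohms_per_m=OHMS_PER_M):
    """Approximate round-trip voltage drop for a passive-PoE run.

    Current is estimated at the nominal supply voltage (ignores the extra
    current drawn as the voltage sags). Each leg (+ and -) uses wires_per_leg
    conductors in parallel, e.g. one spare pair per leg.
    """
    current_a = load_w / supply_v
    leg_ohms = ohms_per_m * length_m / wires_per_leg
    return current_a * 2 * leg_ohms  # out on one leg, back on the other

for volts in (12, 24, 48):
    drop = passive_poe_drop(12.0, volts, 30)
    print(f"{volts} V over 30 m: ~{drop:.2f} V drop ({drop / volts:.1%} of supply)")
```

Raising the supply voltage cuts the current, and with it the drop, which is why standard PoE runs at around 50 V.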

Depends on the application.

If the HC2 came with PoE, I already have an application for it: a clustered storage system specifically for IP cameras. Being able to have drives distributed around the premises as a secondary, redundant storage option is quite tempting.

The method used by the industry today is microSD cards, which no one seems to understand are a service item in a constant-write scenario.
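The service-item point is easy to quantify once a card’s rated write endurance is known. Both numbers below are hypothetical (a 4 Mbit/s camera stream and a 50 TB endurance rating; many cards publish no rating at all), and the sketch ignores write amplification, which would only shorten the result.

```python
def days_to_endurance(endurance_tb, bitrate_mbps):
    """Days of continuous recording before the rated write endurance is used up."""
    bytes_per_day = bitrate_mbps * 1e6 / 8 * 86_400
    return endurance_tb * 1e12 / bytes_per_day

# Hypothetical camera stream and card rating.
days = days_to_endurance(endurance_tb=50, bitrate_mbps=4)
print(f"~{days:.0f} days (~{days / 365:.1f} years)")
```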

Could neaten up a bit on the wiring.

I would be very interested in seeing what happens when one of the two power supplies drops out. I can’t tell if they are wired redundant or not.

OMG! That is A LOT of cabling and poor power management. You could more easily get that done with something like this… https://www.kickstarter.com/projects/1585716594/bottledwind-portable-cloudtm

Besides being spam in this thread, it is overpriced and low on space. It seems to be just a case for a bunch of Raspberry Pis, the same as has been pictured in a lot of places.

Overpriced? It is actually priced $50 over the market value of ALL the parts, considering the power management boards. And you want something small, not the same size as one of your towers (see picture above).

Overpriced? That is actually the same MARKET PRICE as all the parts. That’s the same price you would pay if you built it yourself! How is that overpriced??? I think you are confused because it uses power management boards, which avoid the vast amount of cabling and improve I/O.

It also allows it to be small (instead of being the size of a tower)

You would spend that much on parts alone to build that. I think the power management boards, which reduce the cabling and improve I/O, are confusing you about the cost. And being SMALL is not a disadvantage… I don’t like hauling around towers when the power of a tower can fit in my hand.

Typical useless PEE garbage: looks nice, slower than a single laptop at a third of the price.

This might be the first ARM based cluster that actually makes sense posted on HaD.

This actually beats my home server of bare X220 motherboards on total cost and convenience :o

Actually, have you checked out the ‘portable cloud’ Kickstarter… https://www.kickstarter.com/projects/1585716594/bottledwind-portable-cloudtm

No, I don’t. The garbage you linked looks like a typical home-shopping scam.

One of the main problems with this approach is that shifting data between disks is slow, as it’s going at network speed instead of SATA speed, and gigabit networks are pretty slow given current hard disk speeds…

When I was setting up my data store a while ago, I went for more powerful controllers (small, low-power motherboards), put 8 disks on each, and then connected them together in a network… Within each node it is then much faster to move stuff around…

Networks, and even hard disks, are too slow for the amount of data we are now storing. It takes me literally days to copy one node to another at gigabit speeds.

Okay, but remember that you don’t want one node to hold multiple copies of the same data, so all communication *should* be over the network.

Distributed filesystems reduce the risk of total failure by duplicating resources and being aware of failure domains. That means if you have some data on machine 1, machine 2 should be the one to store the second copy of that data as it’s a whole other machine with a whole other set of components. The downside is that if a disk fails in machine 2 it will always have to talk to machine 1 over the network to make extra copies of the at-risk data. If you want to keep communication off the network for speed reasons you’ll be increasing the risk of data loss by putting all your copies in one failure domain.
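The failure-domain rule can be stated in a few lines. This is a toy policy, not the placement algorithm of Ceph, Gluster, or any other filesystem: machines sit on a ring, an object’s name picks a starting machine, and copies go to the next machines in order, so no two copies ever share a machine. The machine names are hypothetical. The downside described above follows directly: rebuilding a lost copy means reading from another machine, over the network.

```python
import hashlib

def place(obj, machines, copies=2):
    """Choose `copies` distinct machines for an object (toy ring policy)."""
    if copies > len(machines):
        raise ValueError("need at least as many machines as copies")
    digest = hashlib.sha256(obj.encode("utf-8")).digest()
    start = int.from_bytes(digest[:4], "big") % len(machines)
    # Consecutive positions on the ring are distinct while copies <= len(machines).
    return [machines[(start + i) % len(machines)] for i in range(copies)]

def same_failure_domain(placement):
    """True if two copies share a machine, so one machine failure loses both."""
    return len(set(placement)) < len(placement)

machines = ["node1", "node2", "node3"]  # hypothetical, e.g. 8 disks behind each
p = place("backup.tar", machines)
print(same_failure_domain(p))                   # False
print(same_failure_domain(["node1", "node1"]))  # True
```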

The real solution to a slow network is to get a faster network. 10 Gbit Ethernet is fairly cheap these days, and 40 Gbit InfiniBand is even cheaper.
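The multi-day copy mentioned earlier in the thread, and the gain from a faster link, can be estimated as below. The 80 TB node (8 disks of 10 TB) and the 90% link efficiency are hypothetical, and the sketch ignores disk throughput, which may well become the bottleneck at 10 Gbit and above.

```python
def transfer_days(terabytes, link_gbps, efficiency=0.9):
    """Days to copy `terabytes` over a link delivering `efficiency` of its line rate."""
    bytes_per_s = link_gbps * 1e9 / 8 * efficiency
    return terabytes * 1e12 / bytes_per_s / 86_400

NODE_TB = 8 * 10  # hypothetical node: 8 disks x 10 TB, all full
for gbps in (1, 10, 40):
    print(f"{gbps:>2} Gbit/s: {transfer_days(NODE_TB, gbps):.1f} days")
```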

That’s a nice setup, but “Single file reads are capped at the performance of 1 node, so ~910 Mbit read/write” makes it suitable only for GlusterFS testing; even for home use, it’s way slower than any RAID-based alternative.