It’s now possible not only to see people through walls but also to see how they’re moving and, if they’re walking, to tell who they are. We finally have the body scanner that Schwarzenegger walked behind in the original Total Recall movie.

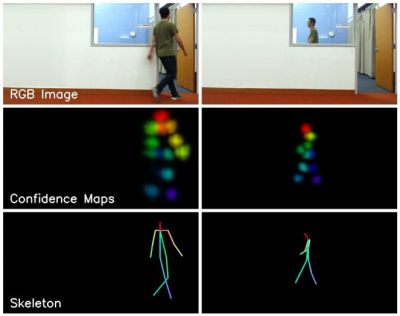

This is the work of a group at the MIT Computer Science and Artificial Intelligence Laboratory (CSAIL). The seeing-through-the-wall part is done using an RF transmitter and receiving antennas, which isn’t very new. Our own [Gregory L. Charvat] built an impressive phased array radar in his garage which clearly showed movement of complex shapes behind a wall. What is new is the use of neural networks to better decipher what’s received on those antennas. The neural networks spit out pose estimations of where people’s heads, shoulders, elbows, and other body parts are, and a little further processing turns that into skeletal figures.
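As a rough illustration of that "little further processing" step, here is a minimal Python sketch that joins named keypoints into line segments. The keypoint names, the `LIMBS` list, and the sample coordinates are all made up for illustration; the paper's actual keypoint set and post-processing may differ.

```python
from typing import Dict, List, Tuple

Point = Tuple[float, float]

# Hypothetical limb list: pairs of keypoint names to join with a line.
# The real system's keypoint set is not reproduced here.
LIMBS: List[Tuple[str, str]] = [
    ("head", "neck"),
    ("neck", "left_shoulder"),
    ("left_shoulder", "left_elbow"),
    ("neck", "right_shoulder"),
    ("right_shoulder", "right_elbow"),
]


def skeleton_segments(keypoints: Dict[str, Point]) -> List[Tuple[Point, Point]]:
    """Return one line segment per limb whose two endpoints were both detected."""
    return [
        (keypoints[a], keypoints[b])
        for a, b in LIMBS
        if a in keypoints and b in keypoints
    ]


# Made-up pose estimate with the right arm missing (e.g. occluded).
pose = {
    "head": (0.0, 1.8),
    "neck": (0.0, 1.6),
    "left_shoulder": (-0.2, 1.5),
    "left_elbow": (-0.3, 1.2),
}
segments = skeleton_segments(pose)
```

Limbs with a missing endpoint are simply skipped, so a partly detected person still yields a partial stick figure.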

They evaluated its accuracy in a number of ways, all of which are detailed in their paper. The most interesting, or perhaps scariest, way was to see whether it could tell who the skeletal figures were, using the fact that each person walks with their own style. They first trained another neural network to recognize the styles of different people. They then passed the pose estimation output to this style-recognizing neural network, and it correctly identified the people with 83% accuracy both when they were visible and when they were behind walls. This means they not only have a good idea of what a person is doing, but also of who the person is.

Check out the video below to see some pretty impressive side-by-side comparisons of live action and skeletal versions doing all sorts of things under various conditions. It looks like the science fiction future in Total Recall has gotten one step closer. Now if we could just colonize Mars.

It’s not using WiFi, it’s only using the WiFi frequency ranges. I quote:

« Our RF-based pose estimation relies on transmitting a low power RF signal and receiving its reflections. To separate RF reflections from different objects, it is common to use techniques like FMCW (Frequency Modulated Continuous Wave) »

So at no point are they actually using WiFi…
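For anyone wondering how FMCW separates reflections by distance, here is a minimal Python sketch of the textbook relationship for a linear sweep: a reflection from farther away comes back later, so it differs more in frequency from what is currently being transmitted. The bandwidth, sweep time, and target range below are made-up example values, not figures from the paper.

```python
C = 299_792_458.0  # speed of light in m/s


def beat_frequency(range_m: float, bandwidth_hz: float, sweep_s: float) -> float:
    """Beat frequency for a reflector at range_m with a linear sweep."""
    slope = bandwidth_hz / sweep_s   # how fast the transmit frequency ramps, Hz/s
    delay = 2.0 * range_m / C        # round-trip time of the reflection
    return slope * delay


def range_from_beat(beat_hz: float, bandwidth_hz: float, sweep_s: float) -> float:
    """Invert beat_frequency: recover range from a measured beat frequency."""
    return C * beat_hz * sweep_s / (2.0 * bandwidth_hz)


def range_resolution(bandwidth_hz: float) -> float:
    """Smallest range separation the sweep can distinguish."""
    return C / (2.0 * bandwidth_hz)


# Hypothetical example: 1 GHz sweep over 1 ms, person standing 3 m away.
EXAMPLE_BANDWIDTH_HZ = 1e9
EXAMPLE_SWEEP_S = 1e-3
fb = beat_frequency(3.0, EXAMPLE_BANDWIDTH_HZ, EXAMPLE_SWEEP_S)
recovered = range_from_beat(fb, EXAMPLE_BANDWIDTH_HZ, EXAMPLE_SWEEP_S)
```

With those example numbers the beat frequency is roughly 20 kHz and the range resolution about 15 cm, which is why a wide sweep matters more than raw transmit power for telling nearby reflectors apart.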

I am going to go out on a limb and say that if they are using WiFi frequencies, they specifically targeted that range in order to eventually build a passive device. To start with and prove their concept, though, they are using FMCW, which is just a frequency-swept carrier wave with no other signal on it.

Their next trick will be tweaking the algorithm to make it work with the existing background WiFi signal

That is a great idea. I hope somebody does try that.

I don’t think it’s so great of an assumption though.

WiFi is in the range it is (in the US) because that is an ISM band. It is a range of frequencies that was set aside by the FCC for non-licensed use. Can you imagine home routers ever having gained popularity if users had to purchase a license from the FCC to use them? That’s why routers are on those frequencies. That is likely the same reason the researchers chose them too. Only certain, limited ranges of frequencies are legally available for unlicensed use and the WiFi bands happen to be the ones that also have the proper physics making such a device practical.

WiFi bands were the only logical choice!

As for other countries, most have similar rules with similar, even overlapping frequencies.

For once I hope this technology is super-closed source.

Oh? Who exactly would you like to see this technology restricted to?

Do you think that only good guys can do science?

I wish to believe that pursuing a discipline, such as martial arts or science, makes one a good person, eventually. Bad guys just don’t have enough humility to succeed.

It’s good to understand that while wishing to believe whatever one wants is ok, actual belief should always be backed by evidence. Otherwise you are just lying to yourself.

When will the Cobra Kai Dojo ever learn? I guess only when both the good guy and bad guy sustain life altering injuries.

I’d love to believe that too, but it’s wrong.

Medical scientists still cite Nazi studies.

No. If there is something someone can find out about me, I want to have access to that, study it and learn how to counter it, how to learn to walk and move like someone else.

Just decorate your walls with aluminium foil. Problem solved

Won’t be an effective Faraday shield unless you ground all of the foil. Actually, tight-linked chicken wire fencing that is soldered at the base and then attached to a grounding rod would work better. The POTUS uses one for his privacy when talking on his phone in the field. Well, at least POTUS 44 did. #45 not so much, as he may not have been exposed to one yet, and he doesn’t seem to be aware of OpSec procedures.

Oh, yah, so only one group of people get those abilities. Sounds great!

The only thing worse I think than everyone having the ability to violate everyone else’s privacy is exactly what you are suggesting, one limited group having such an ability but nobody else.

Sure, and that group is the one developing the actual project, and moving forward with it. Sounds bad to give out this “ability” for script kiddies to use, and not even contribute.

I just read the paper and can think of at least two ways to totally block a future passive version of this from working, or at the very least jack up the price of getting it to work so much to effectively make it useless. But since you do not want people to know how the active system works, maybe they should not know how to stick a spanner in the works either. Could be profit in keeping that super-closed source.

Praytell!

Compared to what info is already being collected about us in real-time, this is pretty tame. The time to fight for privacy was like ten years ago. It’s dead and deep in the ground by now.

Gadgets already report your exact location 24/7, this thing just gives away what pose you’re physically making with your limbs.

So far, but with a higher frequency, say 802.11ad/aj/ay at 60GHz (5mm/0.2″ wavelength), it should be able to read facial expressions and maybe even lip positions. The ultimate target, a totally passive system, would still be able to totally sidestep the Fourth Amendment (since you are just looking at publicly available information). The Stasi back in the 1950s would positively drool over the modern American spying capacity.

WHY WOULD ANY OF YOU THINK IT’S A GOOD IDEA TO HAVE THIS USED ON FREE AMERICANS?

YOU HAVE TO BE CRAZY TO NOT SEE THE REAL TRUTH THAT IS HAPPENING NOW… OUR HOMES SHOULD BE OUR SAFE PLACE… BUT MOST OF YOU ARE LETTING IT ALL HANG OUT AND DON’T EVEN KNOW IT!!

Too lazy to look it up, but there is already a commercial product that is far superior to this home made version. If memory serves, the device used millimeter wave frequencies. It was developed for law enforcement/military applications.

I thought millimeter waves were only good for a few centimeters distance. They are great for scanning over someone’s clothes to find hidden objects or for creepy airport security to leer at the exact curves of your, your significant other’s, your young child’s or your grandparents’ private parts.

I didn’t think they could be used for looking several feet away to see into a different room.

I’m a fire fighter. I wonder if this technology could be used to keep tabs on fire fighters working inside a structure fire. Other data, such as thermal data on the fire itself could be combined to give directions to us while fighting the fire, i.e. where to go, or even locate victims. It seems like applications could be endless.

Possibly. The issue with this version of AI, I would believe, is that it would initially be trained only on the initial environment. I would encourage you or the author to reach out to the person who put the system together to see if they could partner with another organization (such as WPI.edu fire protection department) to work on these problems… perhaps with your department making the initial connection?

That sounds like a very worthwhile avenue of research, though radiation from the fire itself might make the answer “no.” You might want to send this article to some national level group with the resources to fund research.

I don’t think fires give off Wi-Fi bandwidth waves? Would likely alter things though. Could that be accounted for? Where would you even get the training data for this exactly?

Hmm, I wouldn’t know exactly, the last time I looked at blackbody curves was in Astronomy and we didn’t talk too long about microwave. I was looking at the other commenters, and it sounds like this system uses weaker signals than wifi, which is encouraging — they have space to increase the power and it’s likely already operating around signals stronger than its own.

IIRC, firefighters practice both with burn rooms (structures made with a heat-resistant frame and heat containment system, then outfitted with typical flammable furniture, etc.) and they also burn structures. Those activities should get enough data to fine-tune the network fairly quickly.

I’ve just looked at the paper abstract and I see that the net isn’t actually trained around walls! That makes me curious how it would do around walls with lots of wires in them. They’re using a camera to train though, which might make gathering data in a fire difficult or impossible. But, the net might be ready to go with its current weights, or they might just amp up the power and retrain.

I could be wrong, but I suspect that a problem would be that fire is a plasma. Plasma is an ionised gas and is electrically conductive, so it would attenuate RF, just like metal shielding would. And a fire inside a building is also going to behave like a turbulent fluid, so under those circumstances it may not be possible to passively track people inside a building. I suppose ultimately it depends on the level of attenuation created by a fire compared to a human body.

This is an old idea. There’s Range-R, which the military and FBI have access to, and IIRC there was a wifi backscatter technique from the late 2000s that gave a loose map of areas of the room that were occupied.

Total Recall? You’re passing up the other great Schwarzenegger movie, the 1996 classic ‘Eraser’ with railgun sniper rifles and scopes that see through walls.

Leithoa – Cool!

“L-3 CyTerra – RANGE-R is a handheld sensor capable of sending Radar signals through the walls and locating people inside buildings. It is powered by 4 AA batteries and weighs less than 1.5 lbs.

The basic RANGE-R concept of operations is shown in Figure 1. The sensor is held against the wall and activated via a pair of buttons (one hand operation). A series of Radar pulses is transmitted through the wall. The pulses are reflected from objects inside the structure. These returns are analyzed as Doppler radar returns, allowing detection of any moving objects in the structure. The sensitivity of the RANGE-R is sufficient to detect people breathing, making it difficult for individuals to hide from RANGE-R. The range to a target is displayed on the user-friendly graphic display. RANGE-R covers a conical field of view of 160 degrees, sufficient to cover an entire room (or small building) in a single scan. The entire scan/detect sequence takes only a few seconds.”

http://www.range-r.com/tech/sensor.png

(figure 1)

The actual L-3 CyTerra Range-R unit (suggested retail $6,000 USD):

http://www.range-r.com/images/ranger-30br-nocoin-450px.jpg

Once the blobby images are received back from the radar and digitized, it’s over to the neural network side of things.

Very reminiscent of existing image-based systems doing a similar job, like OpenPose but with the ability to see through walls.

Very little is given in the paper about the physical layer radar, but we know it’s an FMCW system with an array of antennas, at ~6 GHz (5cm specified in the paper), and TX power of 0 dBm EIRP (since it’s “1000 times less powerful than WiFi”, which is typically a regulatory maximum of +30dBm EIRP depending on country.)

That seems like a very low transmission power – but then again you’re not expecting it to have massive range.
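The arithmetic behind those two figures can be checked with a short Python sketch. It only assumes the numbers quoted above (a 5 cm wavelength and "1000 times less powerful" than a +30 dBm reference); the function names are just illustrative.

```python
import math

C = 299_792_458.0  # speed of light in m/s


def frequency_hz(wavelength_m: float) -> float:
    """Carrier frequency corresponding to a free-space wavelength."""
    return C / wavelength_m


def scaled_dbm(reference_dbm: float, power_ratio: float) -> float:
    """Power in dBm after scaling a reference power by a linear ratio."""
    return reference_dbm + 10.0 * math.log10(power_ratio)


def dbm_to_milliwatts(dbm: float) -> float:
    """Convert dBm to milliwatts (0 dBm is 1 mW by definition)."""
    return 10.0 ** (dbm / 10.0)


carrier = frequency_hz(0.05)                 # 5 cm wavelength
tx_dbm = scaled_dbm(30.0, 1.0 / 1000.0)      # 1000x below +30 dBm
tx_mw = dbm_to_milliwatts(tx_dbm)
```

A 5 cm wavelength works out to just under 6 GHz, and a factor of 1000 is 30 dB, so +30 dBm drops to about 0 dBm, i.e. roughly 1 mW.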

I think the reason to use Wi-Fi bands (2.4 and 5 GHz) is because they are ALREADY “mostly” there in the target environment and they can penetrate walls too. They act like “backscatter” does, only you don’t need to generate the signal yourself, and nothing in nature generates it either; only the local routers, PCs, tablets, smartphones, etc. do. The neural net can interpret the colorful blobs as actual human shapes and do it in near real-time. I think the US Navy is already doing this but with towed array passive sonar versus radar. I think they can see the enemy walking around in their submarine. Or may be able to see hidden subs in thermals by using a distributed sonar backscatter – I think the backscatter part is called LFAS. The towed array is called SURTASS.

And then the navy crashes into giant ships clearly visible, over and over.

Or subs and ships into each other for that matter.

But they are on the cusp of not doing that, using carbon nano-tubes or buckyballs I’m sure. :)

Sure, we can have the greatest technology for collision avoidance but not the personnel to use it properly. It’s called operator error. All of the collisions you mentioned were due to personnel error, not technology failure. https://en.wikipedia.org/wiki/Ehime_Maru_and_USS_Greeneville_collision

Scientific fraud. See my website.