When we first heard of Nim, we thought of the game. In this case, though, Nim is a programming language. Sure, we need another programming language, right? But Nim is a bit different. Not only is it cross-platform, but instead of targeting assembly language or machine code, it targets other languages. So a Nim program can wind up compiled by a C compiler, interpreted as JavaScript, or even compiled as Objective-C. On top of that, it generates very efficient code with (at least potentially) low overhead. Check out [Steve Kellock’s] quick introduction to the language.

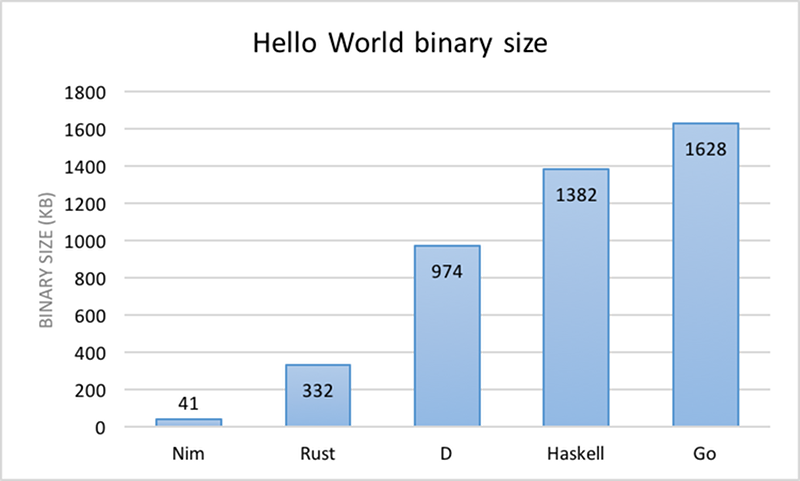

The fact that it can target different compiler backends means it can support your PC, your Mac, or your Raspberry Pi. Thanks to the JavaScript option, it can even target your browser. If you read [Steve’s] post, you'll see he shows how a simple Hello World program can wind up at under 50K. Of course, that’s nothing the C compiler can’t do, which makes sense because the C compiler actually generates the finished executable. It is a bit harder, though, to strip out all the overhead yourself.

In addition, Nim offers a lot of modern features and package management. There is garbage collection, but you can opt out of it and also choose how and when it runs. There’s a fairly robust library for most things, including wrappers for OS-specific code and an external GUI library.
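One way to keep particular data away from the garbage collector is manual allocation. The sketch below assumes a recent Nim release; `Point` is a hypothetical type, while `create` and `dealloc` are standard `system` procs for untraced memory. Memory obtained this way is not tracked by the GC, so freeing it is your job. Turning the collector off entirely, or picking a different one, is done with compiler options (`--mm:` in newer releases, `--gc:` in older ones), which are not shown here.

```nim
type Point = object   # hypothetical example type
  x, y: int

# create(T) returns a zero-initialised `ptr T` that the GC does not trace.
let p = create(Point)
p.x = 3               # field access dereferences the pointer implicitly
p.y = 4
doAssert p.x + p.y == 7
dealloc(p)            # untraced memory must be freed by hand
```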

The syntax is straightforward and borrows a lot from Python (including the indentation sensitivity, about which we have mixed feelings). Here’s an example from the main website:

```nim
import strformat

type
  Person = object
    name*: string  # Field is exported using `*`.
    age: Natural   # Natural type ensures the age is non-negative.

var people = [
  Person(name: "John", age: 45),
  Person(name: "Kate", age: 30)
]

for person in people:
  # Type-safe string interpolation.
  echo(fmt"{person.name} is {person.age} years old")
```
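A footnote on the `Natural` field: in Nim's standard library, `Natural` is the range `0 .. high(int)`, so an age of zero is accepted, while `Positive` is the type that starts at 1. A quick check of those bounds, assuming a reasonably recent Nim compiler:

```nim
# Natural admits zero; Positive excludes it. Both top out at high(int).
doAssert low(Natural) == 0
doAssert low(Positive) == 1
doAssert high(Natural) == high(int)
```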

If you think compiling to C is cheating, don't forget that's how C++ started, too. The language itself doesn't impose any dependencies on your executables unless, of course, you explicitly use a library. Granted, we are more likely to want to build our own language, but it probably wouldn't have so many features. By the way, Nim is one of the many choices on the "try it out" website we covered before.
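Because the C backend emits ordinary C, calling straight into C code is cheap. Here is a minimal sketch of Nim's `importc` pragma, assuming the C (or C++) backend; `cStrlen` is simply a name chosen for illustration, and none of this applies to the JavaScript backend, which has no `<string.h>`.

```nim
# Bind the C library's strlen under a Nim name; the compiler emits a
# direct call and #includes <string.h> in the generated C file.
proc cStrlen(s: cstring): csize_t {.importc: "strlen", header: "<string.h>".}

doAssert cStrlen("Nim") == csize_t(3)
```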

Mrs. Frisby would be proud!

;-)

“Brisby”

Fisby in the book, Brisby in the movie.

Or Frisby in the book, when typed correctly.

The “H” is missing.

An answer in search of a problem.

I can show you a bare-metal hello world program in C (and assembly) that compiles to a few hundred bytes, and that includes a simple UART driver, so I have no idea what these inflated figures are all about. And just now, JUST NOW, I coded up hello world in C and compiled it on my Linux system. The ELF file is 8168 bytes, so these figures are inflated and ridiculous.

Steve mentions the 8.5K number (although it is hidden in a title). Note that the other comparisons in the chart are not C, and I even said “this is nothing the C compiler can’t do.” The point, though, is that the C compiler doesn’t give you the feature set. Me personally? I’m not going to trade in C for this. But I’m also not using Rust, Go, etc. It is still interesting, and the original post wasn’t trying to compare it to C for size.

OK then, you and I can stick with C and be happy. It would be interesting to know what Go does to end up with 1.6M!! It must statically link an entire runtime, or a whole interpreter, or a whole something or other. Just one more reason to “say NO to GO!” I have also heard disappointing things about Rust on other fronts. I do like a new language now and then, but for language features that twist my mind in new ways. I’m not expecting anything to replace C for getting work done.

strip bin_exe_file

Blame the writer of the article: a small Hello World size is hardly the essence of Nim, nor is the syntax for writing a for loop in it.

But a compiled language with a modern type system, one that incorporates the pragmatic elements of functional languages and has a very promising approach to metaprogramming? Without giving up speed compared to C? I think Nim is the first language that ticks all the boxes to be a serious contender for the niche C++ currently occupies.
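To give a flavor of that metaprogramming, here is a small sketch: a template, which the compiler expands inline at each call site, and an ordinary proc that runs at compile time because its result is bound to a `const`. The names `twice` and `fib` are illustrative, not library functions; full macros, which manipulate the syntax tree directly, go well beyond this.

```nim
# A template is substituted inline; `body` is the untyped block the caller passes.
template twice(body: untyped) =
  body
  body

var counter = 0
twice:
  inc counter
doAssert counter == 2

# Binding the result to a `const` forces evaluation in the compiler's VM,
# so fib20 is already a constant in the generated C code.
proc fib(n: int): int =
  result = if n < 2: n else: fib(n - 1) + fib(n - 2)

const fib20 = fib(20)
doAssert fib20 == 6765
```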

It’s much worse. Using GCC 7.3.0, a minimal Hello World compiles to 8296 bytes, but that’s unstripped. Stripped, it’s just 6120 bytes, and with a small number of GCC and linker options I got it down to 4536 bytes in two minutes. A minimal C++ Hello World (using iostream) is 8768 bytes, and 5184 bytes after the same tiny optimizations.

No idea what you have to do to end up with a 41K binary after translating the source to C. Yesterday I wrote an image processing tool which actually does something useful and links against an external library (libpng); it’s 364 lines of code and 18336 bytes unoptimized. The boilerplate Nim generates for a Hello World must be huge. And I don’t even want to know what Go does to end up at 1,6 MB.

Shouldn’t it just be a single system call and a few bytes for the string constant? Where do all those *kilobytes* of junk come from?

It is a system call when (dynamically) linking to an OS library. However, that requires other features that add overhead and tend to increase binary size. For example, to load a library dynamically, you need a static interface to the OS that provides at least the API for loading a library, then the static code to ask for the library to be loaded, and then the interface to the library itself. It can be kept small in some cases, but generally not. Sticking to the console means less overhead for that interface; however, it does require linking in the basic communication library. There are multiple such libraries; some are smaller or more efficient than others, but they tend to offer fewer features. You can go more barebones (and thus more architecture-specific) and use the BIOS, but then you have to know what features that system offers, how to interface to it, and sometimes how to work around bugs in that particular system's BIOS or equivalent. In such tailored cases, though, yes, you can shave off a significant number of bytes.

http://timelessname.com/elfbin/ has an interesting read on minimizing a usable Hello World binary in Linux. The result is 142 bytes.

And https://www.muppetlabs.com/~breadbox/software/tiny/teensy.html gets it down to 45 bytes; however, it too uses ‘dirty’ tricks to abuse the executable format.

Not sure I follow you. Syscalls can always be made without any additional libraries, and you can tell the OS to load a dynamic library through a section in the ELF header.
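For comparison, a Nim program can bypass `echo` and hand bytes directly to the OS's `write` call. This sketch assumes a POSIX system (Linux, macOS, BSD) and Nim's standard `posix` module; note that it still goes through libc's thin `write(2)` wrapper rather than issuing a raw syscall instruction, so on its own it does not remove the runtime overhead being discussed.

```nim
import posix

var msg = "Hello, world!\n"
# posix.write wraps write(2) from <unistd.h>; file descriptor 1 is stdout.
# It returns the number of bytes written (a short write is possible in general).
let written = posix.write(1.cint, addr msg[0], msg.len)
doAssert written == msg.len
```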

I’m a simple guy…

DS = segment holding the string, DX = offset to the $-terminated string, AH = 09H, and DOS interrupt 21H prints the string.

Followed by AH = 00H and another 21H interrupt call to end the program.

… want to know what Go does to end up at 1,6 MB. … NSA needs you

Interesting, https://github.com/VPashkov/awesome-nim#embedded

A hello world compiled from C source on an Atari ST could be below 100 bytes (I forget the exact size). This is probably still possible on today’s systems if you know your compiler well enough.

Do we still know our tools well?

Probably not!

Do we need to?

There are situations where we need to, which tend to be called embedded, but for a lot of computing, which is more valuable: programmer time, or storage/RAM space?

Yes, we do! Get off my lawn! http://tonsky.me/blog/disenchantment/

This is an age-old problem. I never understood why our coding department knew so very little about implementation. It got worse in the late 90s with a round of new hires who seemed to have come from an environment where they just baffled ’em with bullshit to keep their jobs and drag projects out for months (most likely county or state contracts). They were gone within a year. The next batch of hires did a bit better, having actually worked with SoCs and embedded systems without a lot of resources or a server in Palo Alto doing the heavy lifting. I am not sure who to blame for the current model, in which everything is just a frontend for a data scraper. Rather than properly plan and implement an algorithm to compute and predict, they would rather make sure it requires a 4G connection to scrape data that the customers have to pay for, and it definitely has to have a social network presence to be a “real” product, smdh. Thankfully, I am a consultant for the hardware division, so I can do my own ‘social networking’ with the living humans who control the purse strings on the project to pooh-pooh that garbage. Oh well. 2008, IoT, Cheetoheads, and the great need to datamine every second of a consumer’s use have tarnished bare-metal coding and optimization. It is cheaper and easier to plug in a more capable processor/memory than to pay a team to optimize the code and harden security/privacy. I could see how Nim would be interesting to the coding world, though.

As soon as I read “indentation sensitivity,” I didn’t bother following the link. That’s a deal-breaker for me; I have no interest in using a language that is going to change processing depending on how many spaces I have before a line.

I really haven’t understood why Python has become popular, as PHP is a much better scripting language and can be used for everything from small scripts up to big systems…

Yes indeed, the mystery of Python's popularity. Personally, I will take Ruby any day in that arena. But there are some nice Python libraries. So far, though, I am getting along fine just ignoring Python. Now Haskell, there is a reason to change your attitude about white space sensitivity. Don’t expect to get any real work done using Haskell, but it is a lot of fun to play with. One of the Haskell slogans is “avoid success at all costs,” which in itself is enough to get me interested. You have to admire the honesty right up front.

All those libraries are the reason for its popularity. Most Python code just glues together modules written in some other language.

Honestly, that turns me off more than anything else. It was funny. A few years ago I had a team working for me doing software development on a hardware project. They loved Python. I saw that you could now get Python with braces (probably this link: https://python-with-braces.appspot.com/) and I sent it to them. They responded, “Are you crazy? We like the indentation.” I could never get an articulate reason why. I mean, sure, some C programmers are sloppy indenters (I’m guilty), but you CAN indent, and you CAN run cb on anything to get it indented however you like. All it takes is one tab/space fiasco and your code is going to go down in flames, I’d imagine.

Nice. If I ever have to do stuff with Python, I’ll use the PWB. I hate, hate the indentation crap. I have changed a Python program or two, and if I hadn’t had some sort of memory about the indentation thing, I’d have been scratching my head for who knows how long. It did bite me when I edited the script, but it didn’t take that long to fix.

Although now that the Python rulers have lost the plot, I won’t be doing any of my stuff with Python.

I hate the indentation crap too. I cannot believe that any reasonable, sane, modern person would *PURPOSELY* go out of their way to INTENTIONALLY CREATE a language where indentation was a requirement. Freakin ludicrous.

Maybe someone should create a language where parentheses drive one crazy?

Yes, I kind of agree with that one too, but at least there IS a realistic point to them.

“A language where parentheses drive one crazy?” That was done a long time ago; see Lisp.

I’m not saying it wasn’t done before; I’m just saying that it is a DUMB idea which should have been relegated to the ashcan of history rather than being resurrected to menace the world again. ;-)

I have only briefly looked at Python but I saw the weird indentation in the code and wondered what the deal was with it. Never knew it was a requirement. I am a C coder personally, and indentations would piss me off because you are going to end up debugging a problem by counting empty spaces. That’s just wasteful for time management. Really bothers me. By the way did anyone find the extra space in my short paragraph here? That would break a Python program sadly.

@Al: All it takes is one ; fiasco and your compiler is going to spill out incomprehensible error messages. Or using “=” instead of “==”, which is even subtler. Or…. It’s just a matter of _which_ pitfalls you’re used to avoiding, no?

C requires the use of “;” _and_ braces to delimit blocks and commands. On top of this, every C coding style guide that I’ve ever read requires consistent indentation and spacing.

Python requires the indentation.

@Elliot – Not for nothing, but you can actually SEE a semicolon and braces. Indentation is simply white space.

@JWhitten, if you can’t perceive the whitespace you might be better advised to find another occupation rather than another programming language.

I have only briefly looked at C but I saw weird brackets in the code and wondered what the deal was with it. Never knew it was a requirement. I am a Python coder personally, and brackets would piss me off because you are going to end up debugging a problem by counting closed brackets. That’s just wasteful for time management. Really bothers me. By the way did anyone try to compile this short paragraph? That would break a C compiler sadly.

@Elliot, we are out of reply space so I’m replying above. I would argue that WordPress and many other well-known systems don’t gobble up my semicolons and equal signs. Moreover, semicolons and equal signs are readable. You can’t look at a printout and tell a tab from a space, or either of them from a non-printing nbsp character.

I know you know as well as I do how badly WordPress mangles our code. In C, I can pull that code out, run it through cb or whatever other thing I want to get it in the indent style I want and it works if I do or even if I don’t. If I mess up a brace or a semicolon, I will be able to see that.

Now I’m sure in my emacs or your vi I can replace spaces with some magic dot or something, but that seems like not a great answer. If I were objecting to how functions were defined or something I’d take your point. But invisible characters should not be significant and that shouldn’t be a personal preference.

Due to its ubiquity, Python is rapidly moving towards the top of my “must learn” pile. I’ve resisted this long in large part because I too dislike the idea of whitespace affecting function.

Does it always get updated whenever upstream does? Is it compatible with existing libraries? I would only learn Python to be easily compatible with all the Python stuff that is out there. If I wanted to be fighting to make things work all the time I could just use some sort of Python to sane language bridge and do my coding in the sane language.

Troll harder, n00b.

I am happiest with semicolons and braces, but I’ve been doing more with Python, and I don’t hate the indents.

I’m finding that Python, with its semi-strict typing, is more suited to some lower-level stuff than PHP (e.g., streams), and it has many good libraries with examples. It seems I can usually get something working quite fast in Python.

When I started reading this, I thought about Vala. That’s also a language that compiles to C. It’s not designed to compile to JavaScript though.

Vala is a very good design. The potential of GObject introspection is exceptional to bind different languages. https://wiki.gnome.org/Projects/Vala/LibraryWritingBindings

Hey Al, I’m not sure where you sit in the whole ‘CompSci’ world, but as a hardware engineer who only dabbles in the software world when embedded firmware is needed in a uC, I find it hard to see why all these languages exist, as one can find any number of articles picking a select list of metrics and extolling the virtues of “their language of choice” and why it’s amazing.

It could make an interesting discussion to just go through where each language shines best and when it could be used, as I’ve often found myself in debates of “Verilog vs VHDL” or “C vs C++ vs C# vs …”, when it generally comes down to the intended application, existing code, or even what is available (and irrespective of the language, bad code is bad code; it’s rarely solved by changing the syntax).

So while I can appreciate that Haskell, Go, D, Nim, etc are programming languages, I’ve yet to see any sort of overview of them and where they shine (outside of being talked at by a CompSci major who may not appreciate what constitutes a high-level perspective or impartiality).

Okay, [Steve Kellock] highlights the portability of Nim but also notes that Nim doesn’t have any big-business backers (which can lead to limited/patchy support and potentially a short life so personal investment may be a dead-end).

But how about the others? Why did Rust develop?

From my outside perspective they often strike me as either falling into a “computer science navel gazing” or “not invented here” category.

[Steve Kellock’s] quick introduction to the language. His writing style is awful.

Holy shit, I can’t believe how emotional people are over Python’s indentation requirements. It is juvenile to reject an entire language and its ecosystem out of hand due to indentation. (Mom! You can’t make me eat it! I don’t eat anything that is green!)

98% of the code I’ve written in my life has been in languages that don’t care about indentation, yet in every single case, you’d be a fool not to use consistent indentation. The only way that Python is different is that it requires indentation. In return, you avoid all the curly braces, begin/end pairs, and semicolon clutter.

If you want to complain about Python, complain about its native speed.

All serious coding guidelines require proper indentation. A modern IDE could easily display Python code with visual indications of where blocks begin and end; they might even be brace characters. Where some see clutter, others see helpful visual indicators that make code easier to read.

Might be the difference between *requiring* a “modern IDE” and any old text editor doing the job.

This.

Alas, “the only winning move is not to play.”

According to [https://medium.com/practo-engineering/execute-python-code-at-the-speed-of-c-extending-python-93e081b53f04], the only way to speed up Python is to use C libraries from it.

But the beauty of Python is not its “Marmite”-like spacing (in my case, what bothers me is not that it’s compulsory, but that you can use EITHER spaces OR tabs), but the fact that it will run more or less the same on every system that can execute Python code.

But yes, a more optimized interpreter would be great.

This is quite impressive. I really don’t like Python though I did run it once inside a GM862 GPS. It only had Python 1.5.2 IIRC and didn’t include support for exception tracebacks but we were able to *add support* by uploading the required code. It compiled (slowly) itself.

See my reply to Elliot for more about that. It isn’t the indentation per se. It is the lack of visibility of the spaces and the well-known penchant some tools have for gobbling spaces or converting them to something else.

Now my real big complaint about Python is that every time I install something pythonesque that doesn’t belong to the distro I’m using it insists on some package that breaks some other python program on my system and I have to pick. Or use some virtual-like thing to have multiple python systems at once.

Even in the C community where we do tend to indent, everyone does it a little bit differently and the compiler doesn’t care at all.

There are several factors that contribute to the size of your executable:

linking type (dynamic, static)

memory model (large, medium, small) (on x86_64 large model your) the symbols

debug information (symbols)

etc.

Besides that, your runtime environment (i.e., the OS) is one of the most important factors. Just check out the 256-byte demos or the 4K intros: http://www.pouet.net/prodlist.php

Also, the OS's executable file format, such as ELF or PE, determines all sorts of things.

By the way, on our OS, Threos ( https://threos.io ), a simple hello world with the large model on x86_64 (we need the large model because we relocate every program at load time) and dynamic linking takes 4372 bytes without any tweaking or optimization (we use the ELF format).
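To see how these factors play out for a given build, it helps to measure rather than guess. A minimal sketch using Nim's `std/os` module: the program reports the on-disk size of its own executable, so you can rebuild it with different options (for example `-d:release`, `--opt:size`, or a stripped build) and compare. The figures you get will depend on your OS, toolchain, and Nim version.

```nim
import std/os

# getAppFilename() is the path of the running executable, so this
# program prints its own size on disk in bytes.
let exe = getAppFilename()
let size = getFileSize(exe)
echo exe, ": ", size, " bytes"
```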

How does its feature set compare to modern C++? The introduction of C++11 changed things immensely, and once concepts, coroutines, and modules hit in the near future, C++ will have the near-complete feature set of virtually any high level programming language, at a fraction of the runtime cost!

If you find Nim cool, take a look at Haxe [1], Vala [2], and Genie [3]. Vala is my favorite one :)

[1] https://haxe.org/documentation/introduction/compiler-targets.html

[2] https://wiki.gnome.org/Projects/Vala

[3] https://wiki.gnome.org/Projects/Genie

… and Zig language and Jai language look promising too. The future will tell which will replace C (and C++). Hopefully one of them will.

Nim –> WASM … https://www.spiria.com/en/blog/web-applications/webassembly-nim

Zig seems to match Nim’s size when built using `-Drelease-small=true`. I tried a simple “hello world” binary and got a size of 53k (on Debian 9.6, Zig v0.4.0): https://abacusnoirform.blog/2019/05/21/1621/