The hottest new trend in photography is manipulating Depth of Field, or DOF. It’s how you get those wonderful portraits with the subject in focus and the background ever so artfully blurred out. In years past, it was achieved with intelligent use of lenses and settings on an SLR film camera, but now, it’s all in the software.

For the Pixel 2 smartphone, Google used some clever phase-detection autofocus (PDAF) tricks to compute depth data in images, and used this to decide which parts of images to blur. Distant areas would be blurred more, while the subject in the foreground would be left sharp.

This was good, but for the Pixel 3, further development was in order. A 3D-printed phone case was developed to hold five phones in one giant brick. The idea was to take five photos of the same scene at the same time, from slightly different perspectives. This was then used to generate depth data which was fed into a neural network. This neural network was trained on how the individual photos relate to the real-world depth of the scene.

Once trained, the neural network could then be used to generate more realistic depth data from photos taken with a single camera. Now, machine learning is being used to help your phone decide which parts of an image to blur to make your beautiful subjects pop out from the background.
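To make the "blur more where the depth differs more" idea concrete, here is a minimal sketch in plain Python. It is not Google's pipeline: the function name `blur_by_depth`, the `max_radius` parameter, and the naive box blur are all assumptions chosen for illustration, and the depth map is simply handed in rather than estimated by PDAF or a neural network.

```python
def blur_by_depth(image, depth, focus_depth, max_radius=3):
    """Box-blur each pixel with a radius that grows with its distance from the focal plane.

    image and depth are equally sized 2-D lists (grayscale values and depth values).
    This is an illustrative toy, not the method used on any real phone.
    """
    height, width = len(image), len(image[0])
    # Largest depth difference in the scene, used to normalise blur radii.
    max_diff = max(abs(d - focus_depth) for row in depth for d in row) or 1.0
    out = [[0.0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            # Radius 0 at the focal plane, max_radius at the farthest depth.
            radius = round(max_radius * abs(depth[y][x] - focus_depth) / max_diff)
            total, count = 0.0, 0
            for yy in range(max(0, y - radius), min(height, y + radius + 1)):
                for xx in range(max(0, x - radius), min(width, x + radius + 1)):
                    total += image[yy][xx]
                    count += 1
            out[y][x] = total / count
    return out


# Hypothetical one-row scene: a near subject (depth 1) on the left, background (depth 5) on the right.
image = [[0, 100, 0, 100, 0, 100]]
depth = [[1, 1, 1, 5, 5, 5]]
result = blur_by_depth(image, depth, focus_depth=1, max_radius=1)
```

In this toy scene the three subject pixels come back unchanged, while the background pixels are averaged with their neighbours. A real implementation would use a better blur kernel and take care not to smear the subject into the background at depth edges.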

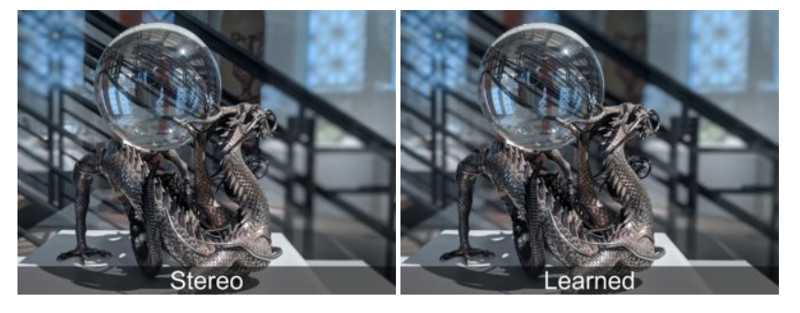

Comparison images show significant improvement of the “learned” depth data versus just the stereo-PDAF generated depth data. It’s yet another shot fired in the smartphone camera arms race, which shows no signs of abating. We just wonder when the Geiger counter mods are going to ship from factory.

[via AndroidPolice]

Why would anyone want parts of an image to be blurry? A sign of a professional image is that it is sharp throughout. Years ago some cheap amateur cameras would make images with fuzzy areas. That was a major distinction between blurry amateur photography and crisp professional photography, which is distinguished by being sharp from the nearest point to the furthest point in the image. Bokeh is a sign of inept photography.

If you’re dryly documenting? Perhaps. As soon as there’s a creative or artistic element involved, that goes out of the window. Why do theaters have spot lighting when the technology exists to light the entire stage blindingly? Focus, or lack of it, is a tool you can utilize to tell the story you’re trying to tell. A completely sharp scene can be very busy. By tuning out the background you can direct the attention of the viewer towards your subject.

Indeed. One of the things I do is create backgrounds for visual productions. Often these must be placed within a couple of feet of the subjects. What I have learned to do (and think I’ve found some success with) is to blur the image printed on the backgrounds to create the illusion of depth. Depth of field is a fundamental limitation of optics, including human eyes, and human visual perception uses clues like out-of-focus backgrounds to build a model of the scene in our minds. So to answer stereoscope3d’s question, I make images professionally, and that’s one reason I would want some items to be blurry.

That is NOT professional. It is the mark of the rank amateur. If you were professional, you would know how to make your images sharp.

Take my advice, then. NEVER, EVER, EVER try to take photographs of anything under a microscope. The phenomenon of things being out of focus with nothing you can do about it is so real it would drive anyone who requires everything to be in focus insane. Only the narrowest portion of the image is in focus in that context.

I’d argue the reverse. Anyone who claims that depth of field isn’t part of the professional’s bag of tricks is likely a rank amateur. If the claim is made adamantly and without nuance or substantiation, doubly so. Be wary of those dealing in absolutes.

And doubling-down on ridiculous claims is the mark of a troll.

Then, by your own definition, you must be a troll.

Though, as a not even half defense, more like a quarter of a defense – there was that movement many years ago, when Ansel Adams and friends rebelled against the popular soft and dreamy photography of the day. They called themselves group f64 or something. Small aperture to make crisp sharp images of landscapes etc.

I seriously hope you’re being sarcastic…

Absolutely not! Fuzzy areas on photographs are a terrible waste of pixels, as well as being ugly and causing eyestrain. Only bad photographs done by unskilled photographers have bokeh! Some people use the excuse that they want to convey depth. It doesn’t. If they want depth instead of distorted 2D, they should shoot in 3D. Bokeh does not add any depth at all. If you are not skilled enough to make your pictures sharp, you should not be making pictures. Period.

The only technology that is in focus at all ranges is a pinhole camera which, although interesting, is impractical to use. Parts of an image being out of focus is the norm for all photographs unless 100% of the image is objects so far away that the ratio of distance/aperture is practically zero like with astronomical photos. In the typical context a skilled photographer chooses what objects are in best focus at the expense of others. It’s one of the decisions photographers make, along with choosing the frame of the image and just when to take the picture, that makes photography an art. (ISO and exposure settings too.) Video games and CGI images often violate the norm by making all distances show up equally in focus but that is what makes such images unnatural. Old guys like me (older than Unix Time) have not-so-flexible eye lenses and live the life of things being out of focus. We can put on glasses so what we are reading is in focus, but have no chance of reading small text on the TV across the room that way. Or I could put on a different set and the TV is clear while my book is unreadably fuzzy. I have to choose, or go with bifocals. The fuzziness of things being out of focus is normal and a useful tool in photographic art, not a sign of weakness or ineptitude.

I got my ratio backwards I see. :-) Oops.

Photographs are a kind of measurement. Because of this, they cannot be perfectly precise. The Heisenberg Uncertainty Principle defines the absolute limit there, but usually our instrument’s limitations come into play first. At different field depths a focused image is more or less precise, with its best being at whatever it’s focused at and with lesser precision as one deviates from that distance. In fact, something infinitesimally close to the lens is infinitely out of focus, like puppy noses in the camera. There is no way even the most skilled photographer could take a focused picture of a cold wet puppy nose up against the camera lens and simultaneously show the print pattern on the wallpaper across the room. Blurriness in photography is often absolutely unavoidable and it’s a good thing. You don’t know the disgust I experienced the first time I had to look at Mick Jagger in 1080p. (so far I’ve been able to avoid seeing him in 4K. 640×480 is bad enough although I think I could handle 64×48 :-) )

I do hope everyone here at Hackaday will forgive me for waxing eloquent about this photo-fuzziness issue. I understand fuzziness, thoroughly. EVERYTHING in the universe is a little bit fuzzy and consequently out of focus no matter how much we turn the focus knob. You can thank the Author of the universe for making it that way and the likes of Heisenberg and Planck for pointing it out. Fighting fuzziness is like fighting the second law of thermodynamics. An image can only get worse the more you do to it and it doesn’t start out perfect either. You can stack images and do processing to pull the maximum detail out of your data but you end up having to earn every pixel and run the risk of creating fictitious content if you go too far. Best to accept that resistance is futile and embrace the warm fuzziness. It’s not half bad, especially with cookies and milk. (Impressionistic art can be beautiful too….Ahhh!!! Monet!!!)

sorry but most of what you say is bogus:

first of all you cannot generalize photography as a whole. when you work in science or medicine you often work with thin sections around 2-6 µm thick. this is more or less a single plane, which is relatively easy to capture completely sharp with the right equipment.

secondly sharp is a matter of will. do you really want to have everything sharp or do you want to go more for the artsy stuff? (hint hint the artsy stuff is easier: don’t say it’s blurry, just call it an artistic effect) if you want to make it sharp you just need the right tools and enough patience. stacking software has come a very long way and is used routinely in science. high quality objectives are almost distortion free (the microscope type, not the standard stuff unfortunately). the tools definitely exist, it’s just up to you if you have the money and time for it.

You need to realize that other than your first sentence, I am in agreement with you fully. Yes if a microscope slide is thin enough, all of what is on it is, for the most part, in focus and the natural blurriness of the optical image is finer than the pixel size. Every time I’ve ever looked through a microscope, it was an experience in 3d exploration moving about laterally in two dimensions and adjusting focus on different levels to discover things closer or farther away. That’s why I warned stereoscope3d never to look into a microscope. Telescopes are OK as long as you don’t point them at the sun. :-) It is true that EVERYTHING is fuzzy to some degree. Heisenberg demonstrated a distinct limit in the combined precision of position and momentum of measuring anything. It was initially thought of as a measurement limitation, but has later been accepted as a fundamental limit of things in this universe, so everything is fuzzy in both momentum and position to some degree. (The universe is finite in its level of detail) As long as that scale is smaller than your pixel size you’re just fine and wouldn’t know the difference.

Stacking is a great tool especially in astronomy, but there are limits to what you can do there also and you DO run the risk of generating fiction while doing statistics like that. Statistics may say the average household in a region had 3 1/2 children but if you go to that region you won’t find any households with 3 1/2 children. If stacking pictures has a rhythm and it correlates somehow with variance in the light source you could get nonsensical results. If something turns on and off with a square-wave pattern, it would add up to look like it’s constant and 1/2 as bright as its actual maximum. Statistics are powerful tools for pulling information out of data, but they are also capable of producing fiction. Stacking pictures is a statistical process so you have to be at least initially a bit suspicious. 300W street lights are on average about 150W bright but we never see them operating at that brightness except maybe for short transition periods while warming up if they are mercury vapor type.
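The square-wave point is easy to check with a few lines of arithmetic. The following is a minimal sketch, assuming the simplest possible stacking (a plain per-pixel mean); the helper name `stack_mean` and the single-pixel "frames" are made up for illustration, and real stacking software also aligns and weights frames.

```python
def stack_mean(frames):
    """Average a list of equally sized frames pixel by pixel (simple mean stacking)."""
    n = len(frames)
    return [sum(pixels) / n for pixels in zip(*frames)]


# Hypothetical single-pixel light source that alternates between full brightness and off.
frames = [[300.0] if i % 2 == 0 else [0.0] for i in range(10)]
stacked = stack_mean(frames)
print(stacked)
```

Every individual frame shows either 300 or 0, yet the stacked result is a steady 150, a brightness the source never actually had.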

Tell that to the team at JPL who “fixed” the original Hubble Space Telescope images in software.

BrightBlueJim says: Tell that to the team at JPL who “fixed” the original Hubble Space Telescope images in software.

ummm, you’ve got your history wrong. It took a skilled NASA bloke many hours of tedious and somewhat dangerous taking apart, switching out parts, and putting back together to fix Hubble. Spherical vs Parabolic Mirrors….Sheesh!!

No, I don’t. During the time between deploying HST and actually fixing it, the interim solution (so that there could be SOME use made of the telescope) was for the wizards at JPL to process the images to within a pixel of their lives. The results weren’t all that good, and nobody really knows how much fiction was generated, but that’s what they did.

From Wikipedia: “Nonetheless, during the first three years of the Hubble mission, before the optical corrections, the telescope still carried out a large number of productive observations of less demanding targets. The error was well characterized and stable, enabling astronomers to partially compensate for the defective mirror by using sophisticated image processing techniques such as deconvolution.”

I see the wisdom in that and knew about it. Not as good as actually fixing it, but it’s up there and it’s running and the people on the ground wanted pictures so you may as well make the best of it and that’s what they did. :-) It really is an art pulling the most data out of a bunch of combined images without “creating” data too much. :-) I would argue that any time you aggregate data together into a statistic you always introduce a kind of “dark side” to the data that may or may not byte you (sorry, couldn’t help the pun) at some instant. I wouldn’t be surprised if there was a mathematician out there who managed to prove it. :-) That’s why direct uncombined, preferably linear, measurements are so precious, to the point of obsessive pursuit. (my precious….) Ain’t science cool? Me thinks so. :-)

a real professional here in the comments!!

facepalm.

Uhm… no. Just no.

You like your steaks well done and with ketchup, don’t you?

Well done, neat, [no A-1 sauce, no salt, no catsup]

Inept photography is not knowing how or when to use bokeh. Bokeh is used to separate the subject, unclutter the background or as an artistic statement, among others.

A properly calculated DoF will get in focus the front and rear of the subject, just the front or a range or in other cases from the frontal focal point to infinity.

While you definitely do not want or need bokeh on every single picture (documentation, journalism, historical…) it has its place.

Now if you just dislike it then that is fine, it’s your opinion. But the use of bokeh is not a sign of being an inept photographer (when used properly).

Don’t panic, this can be solved. Read a paper about the human eye and after understanding that go search for a good explanation on how cameras and lenses work.

Obvious troll is obvious

I know you – you’re that guy from the Department of Redundancy Department.

Unless he is from the UK, or Canada,

then it is the Ministry of Redundancy Ministry!

On second thought, in Canada it might be called Redundancy Canada Redundancy…

Trolls used to be clever. What happened to the good old times?

Because that’s how your eyes work. It shows focus. The big thing this may be useful for is video. One of the problems now with high-FPS video is that it looks WRONG. In real life, things look blurry when they’re moving around. You can’t focus on everything. Being able to select what should be focused in video could help this, along with some creative camera work.

Portrait photography is quite often done with the subject in sharp focus with the background blurred to a degree, or even completely out of focus. This is NOT a sign of a “rank amateur” but rather evidence of someone who knows how to take an interesting photo that draws the viewer’s eyes to where the photographer wants. Obviously, you are not a professional photographer, so perhaps you might want to go read a few books on the subject and/or study under a true professional before you go spouting nonsense about which you know nothing.

Not nearly as obvious he’s not a professional photographer, as it is that he’s a troll.

“New” trend? What? At least write “smartphone photography” and not “photography” then.

Light field cameras do this much better. https://en.wikipedia.org/wiki/Light-field_camera. Lytro pioneered this concept using a camera that had a whole bunch of lenses and sensors in a single camera, allowing you to choose depth of field and point of focus later, but Lytro has since gone out of business.

There is something wonderful about a three letter acronym with the same number of syllables as the phrase it is acronyming. POV comes to mind. Movie makers like to use it because it, well, it isn’t quicker than saying Point of View. But it sounds cooler. Much like Stimpy with his tricorder that “makes the coolest noises”. Likewise DOF, unless you are the kind of tone-deaf nerd who pronounces acronyms like words.

It’s quicker to read/write though.

By definition an acronym is pronounced like a single word (SCUBA), where an initialization has each letter spoken individually (FBI). So the “tone-deaf nerds who pronounce acronyms like words” are correct and you are the guy who doesn’t know the difference between an acronym and an initialization.

You probably meant initialism, initialization is something to do with software and reticulating splines I think.

I like the way the 3d printed penta-phone-holder puts all the lenses in the same plane with its perpendicular upward a bit and to the right. That should help the computer figure out what to do with the five images. :-) The concept should work with other phones too. Nexus 5s can be cheap nowadays, especially if their screens are cracked. I suppose the Pixel 3s wouldn’t have to be in beautiful condition either as long as they are stable and their cameras still work well. :-)

This one is new to me. I was waiting for the 4K and higher video cameras to come out. I was thinking better optical measuring systems can be made including depth measurements with the better resolution sensors… especially with more than one. Also… stitching the photos to deblur as has been demonstrated on HaD seems my first thought. Depends on the effect required for the subject.

In regards to the Geiger Counter… man… would be sweet to have a RadScout type system that can detail isotopes/elements and give a quantitative value. Same goes with data logging other ionizing and non-ionizing full spectrum range EMF events. Looks like the referenced systems are basically pixel counters with a scintillation material. https://en.wikipedia.org/wiki/Scintillator

For the price of 5 Pixels, one can hire a bunch of freshmen and get anything in focus and in stereo.

For the price of 5 of the cheapest Pixel 3s (on sale), not including cases and the 3D printed frame, you could buy a DSLR and a high-end portrait lens. The Canon EOS 6D Mark II is $1300 new from B&H, and the Canon EF 70-200mm f/2.8L IS II USM, widely regarded as one of Canon’s best (and most versatile) portrait lenses in part because of its superb bokeh and narrow depth of field, is $1400 new with free shipping.

Instead of reinventing the hardware wheel, it’d be interesting to see the software on the Light L16 Camera which can be found for $1600 and already has 16 sensors.

No one is suggesting that this is the best way to get those images. This was a neat tool used by Google to design and train the software to achieve the effect with a single camera. So for everyone except Google, we’re comparing a 700-800 dollar phone against your 2700 dollar Canon setup or the 1600 dollar L16.