In an earlier installment of Linux Fu, I mentioned how you can use inotifywait to efficiently watch for file system changes. The comments had a lot of alternative ways to do the same job, which is great. But there was one very easy-to-use tool that didn’t show up, so I wanted to talk about it. That tool is entr. It isn’t as versatile, but it is easy to use and covers a lot of common use cases where you want some action to occur when a file changes.

The program is dead simple. It reads a list of file names on its standard input. It will then run a command and repeat it any time the input files change. There are a handful of options we’ll talk about in a bit, but it is really that simple. For example, try this after you install entr with your package manager.

- Open two shell windows

- In one window, open your favorite editor to create an empty file named /tmp/foo and save it

- In the second window issue the command:

echo "/tmp/foo" | entr wc /tmp/foo

- Back in the first window (or your GUI editor) make some changes to the file and save it while observing the second window

If you can’t find entr, you can download it from the website.

Frequently, you’ll feed the output from find or a similar command to entr.
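As a rough sketch of that pattern, the snippet below wraps find in a small helper and pipes the result to entr. The helper name list_sources, the src directory, and the make command are placeholders for illustration only. The entr call is guarded so nothing happens if entr is missing, there is no terminal, or src does not exist.

```shell
# list_sources DIR: print every .c and .h file under DIR, one per line.
# (Hypothetical helper name; adjust the patterns for your project.)
list_sources() {
    find "$1" -type f \( -name '*.c' -o -name '*.h' \) | sort
}

# Only start watching when entr is installed, we are on a terminal,
# and the (assumed) src directory exists.
if command -v entr >/dev/null 2>&1 && [ -t 1 ] && [ -d src ]; then
    list_sources src | entr make
fi
```

Keeping the file list in a function makes it easy to check what entr will be watching before you start it: just run list_sources src on its own.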

What It Isn’t

I had mentioned incron before as a way to attach actions to file changes. It also makes things easier, although perhaps not as easy as entr. As the name suggests, incron does for filesystem changes what cron does for time. That is, it causes some action to occur any time the specified file system changes happen. That survives a reboot or anything else short of you canceling it. This is very different from entr. With entr, the command runs like a normal application. As long as it is running, changes in the target files will trigger the specified action. When you stop the program, that’s the end of it. Usually, you’ll stop with a Control+C or a “q” character.

Speaking of the keyboard, you can also press space to trigger the action manually, as though a file changed. So, unlike incron, entr is an interactive tool. You’ll want it running in the foreground.

Options

There are several command line options:

- -c – Clear screen before executing command

- -d – Track the directories of the input files and exit if a new file is added; files whose names start with “.” are ignored

- -p – Do not execute the command until an event occurs

- -r – Kill previously run command before executing command

- -s – Use shell on first argument

The /_ placeholder gets the name of the first file that caused a trigger, although that doesn’t seem to work properly with -s. For example:

find /tmp/t/data -name '*.txt' | entr cp /_ /tmp/archive

When one of the .txt files changes, it will copy to /tmp/archive.

The -d option has a peculiarity. With it you can use a directory name or a file name and the program will watch that directory along with any files. The file changes behave as normal. However, any new files in the directory will cause entr to stop. This lets you write things in a loop like this:

while true; do ls -d src/*.[ch] | entr -d make; done

The loop ensures that entr is always looking at the right list of files. Deleting one of the files will also cause an error exit. The ls command provides all the .c and .h files in the src directory, and entr is smart enough to infer the directory from them, so you don’t need to name it explicitly.
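One wrinkle with a bare while true loop is that it restarts entr no matter why it exited. A possible refinement, sketched below, is to restart only when the watcher reports that a file was added. This assumes the exit status 2 that the entr man page documents for that case, so check your version. The names watch_loop and WATCHER are made up for this example.

```shell
# WATCHER is the command that does the watching. It defaults to
# "entr -d make"; overriding it is handy for experiments.
: "${WATCHER:=entr -d make}"

# watch_loop LIST_CMD: rerun "LIST_CMD | WATCHER" for as long as the
# watcher exits with status 2 (assumed to mean "a file was added to a
# watched directory"). Any other status ends the loop and is returned.
watch_loop() {
    while :; do
        if sh -c "$1" | $WATCHER; then
            status=0
        else
            status=$?
        fi
        [ "$status" -eq 2 ] || return "$status"
    done
}

# Example (not run automatically):
#   watch_loop 'ls -d src/*.[ch]'
```

With this shape, quitting entr with “q” or Control+C should end the loop instead of immediately starting another watcher.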

Missing Changes

The -r option is good if you are running a program that persists — for example, you might use kdiff3 to show the differences between a recently changed file and an original copy. This option causes entr to kill the program before starting a new one. Without this flag, it is possible, too, for entr to miss a file change. For example, make a file called foo and try this:

echo foo | entr -ps 'echo change; sleep 20'

In another shell, change foo. You’ll see the change message print on the original shell. If you wait 20 seconds you’ll see something like “bash returned exit code 0.” Now change foo again, but before you see the bash message, change it again. Once the 20 second timer expires, entr will go back to waiting and the new change will not cause a trigger!

Exit entr and start it again with the options -psr instead of -ps. Now do the same test again. You’ll see that the change registers and the original bash script never completes. However, if there are no changes for 20 seconds, the last script will exit normally.
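To make the difference concrete, here is a plain-shell illustration of the idea behind -r: stop whatever the last trigger started, then start a fresh copy. This is not how entr is implemented. It is only a sketch of the behavior described above, and run_fresh and job_pid are invented names.

```shell
# PID of the job started by the most recent run_fresh call, if any.
job_pid=""

# run_fresh CMD...: stop the previous job if it is still alive, then
# start CMD in the background and remember its PID.
run_fresh() {
    if [ -n "$job_pid" ] && kill -0 "$job_pid" 2>/dev/null; then
        kill "$job_pid"
        # Reap the old job; its non-zero status is expected here.
        wait "$job_pid" 2>/dev/null || true
    fi
    "$@" &
    job_pid=$!
}
```

Calling run_fresh sleep 20 twice in a row leaves only the second sleep running, which mirrors what you see with -psr: the first script never gets to finish.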

Examples

There are plenty of examples in the tool’s man page. For example:

find src/ | entr -s 'make | head -n 20'

This gets a list of all files in the source tree (including subdirectories) and when any change, you run make. The head command only shows the top 20 lines of output.

A lot of editors make automatic backups of files, but if yours doesn’t, it would be pretty simple to make an auto archive with entr. Honestly, though, use git or something if you want real version control:

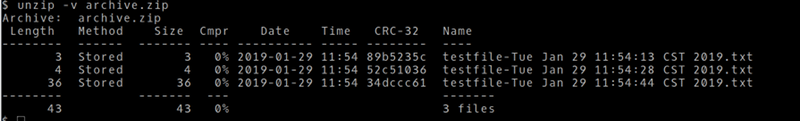

echo testfile.txt | entr -s 'AFN=/tmp/testfile-$(date).txt; cp testfile.txt "$AFN"; zip -j archive.zip "$AFN"'

This stores each version of testfile.txt in archive.zip along with a timestamp on the file name:

It was tempting to use /_ in this script, but it doesn’t seem to work with the -s option very well.
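One way around that, sketched here under a few assumptions, is to move the copy step into a small script so entr can hand it /_ as an ordinary argument without -s. The function name archive_version and the naming scheme are invented for this example. It uses a compact date format so the file names contain no spaces.

```shell
# archive_version FILE DEST_DIR: copy FILE into DEST_DIR under a name that
# carries a sortable timestamp, then print the path of the copy.
# (Hypothetical helper; the naming scheme is an assumption.)
archive_version() {
    src=$1
    dest_dir=$2
    stamp=$(date +%Y%m%d-%H%M%S)
    mkdir -p "$dest_dir"
    target="$dest_dir/$(basename "$src").$stamp"
    cp -- "$src" "$target" && printf '%s\n' "$target"
}

# When run as a script with two arguments, archive the named file.
if [ "$#" -eq 2 ]; then
    archive_version "$1" "$2"
fi
```

Saved as an executable script, say ./archive-version.sh (a made-up name), it could be driven with something like echo testfile.txt | entr ./archive-version.sh /_ /tmp/versions. Note that two saves within the same second would overwrite each other with this naming scheme.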

Embrace Change

I doubt I’ll use entr as much as I use incron. However, for little one-off projects, it is pretty handy and I could see making some use of it in that case. As usual, for any given task there are many ways to accomplish it. Having entr in your toolbox can’t hurt.

There are still other ways. For example, last time, someone mentioned in the comments that systemd can take a “path unit” that can trigger when a file or directory appears or changes. This still uses inotify internally, so it is really just another wrapper. Still, if you like systemd, it is a consistent way to set up something similar to incron but under the tentacles of systemd.
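For comparison, a path unit setup might look something like the pair of files written by the sketch below. The unit names (foo-watch.path and foo-watch.service), the watched path, and the wc command are all invented for illustration. PathModified= and Unit= are directives from the systemd.path documentation. The script only writes the files to a scratch directory; it does not install anything.

```shell
# Write two example unit files into UNIT_DIR (a fresh temporary directory
# unless you set UNIT_DIR yourself). All names and paths are made up.
UNIT_DIR=${UNIT_DIR:-$(mktemp -d)}
mkdir -p "$UNIT_DIR"

# The path unit: fire foo-watch.service when /tmp/foo is modified.
cat > "$UNIT_DIR/foo-watch.path" <<'EOF'
[Unit]
Description=Watch /tmp/foo for changes

[Path]
PathModified=/tmp/foo
Unit=foo-watch.service

[Install]
WantedBy=multi-user.target
EOF

# The service unit: the action to run on each trigger.
cat > "$UNIT_DIR/foo-watch.service" <<'EOF'
[Unit]
Description=Count the words in /tmp/foo

[Service]
Type=oneshot
ExecStart=/usr/bin/wc /tmp/foo
EOF

echo "Example units written to $UNIT_DIR"
```

To try them for real, you would typically copy both files to /etc/systemd/system and run systemctl enable --now foo-watch.path as root. Unlike entr, the watch then persists across reboots and the output lands in the journal, not on your terminal.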

Yet another crude hack, made necessary by the poor design decision to base Linux on POSIX. Between stuff like this, the nightmare called “systemd” and the terrible issues with asynchronous I/O, Linux is looking more and more like the old grey mare that needs to be retired, except nobody has bothered to make anything better.

How is the ability to watch files for being changed a hack and a bad design decision?

How is systemd a nightmare? (especially with the ton of issues it actually solved)

How is asynchronous I/O bad, unless you hate performance?

I don’t think you’ll get real answers out of him. I think we found the real “old grey mare.”

At least with the async I/O stuff, there are some real issues with the API design that prevent a number of useful patterns from being used, but they’re also still being worked on to allow for better and easier use. The other ones he’s completely wrong about.

https://lwn.net/Articles/776703/ has a trove of good information about it, and I’m really looking forward to what comes next with it.

Yet another Linux elitist that has a hard time letting go of where we were so that we can get to where we want to be. I have a feeling that you still drive an Edsel and refuse to use a remote control for the black and white console television in your living room, it just adds unnecessary complexity to an already perfect platform after all. Who needs color or convenience when you have 8-bits of grey and two semi-functional legs that are perfectly capable of getting you from point to point to point.

I’m really surprised that you’re able to get on the internet without having a stroke though. Are you using a 300 baud modem or maybe one of those new-fangled 1200 baud modems?

Should I continue berating you with the ridiculousness that you discharge like a sickness or just leave you alone to wallow in your own self-pity?

My only complaint about this is that the old black and white TVs didn’t have 8 bits of grey, they had a theoretically infinite number of grey values given the completely analog nature of both the signal and reproduction methods. Obviously it wouldn’t actually be able to produce that many due to amplifier noise and other things causing it to be unable to accurately pick a specific value like that.

What is wrong with systemd? Can someone explain it to me? I’ve heard this “systemd is bad” repeated often but the details as to why are murky. Thanks.

Brief:

The Unix idea is a collection of small tools that you can chain together to get the job done. Old init was a bunch of scripts that did what they said, and were easy to read — but you had to read them when the shizz hit the fan. So you had to be able to read/modify shell scripts, which is a basic Unix skill.

Systemd is _one_ program that hopes to do everything. (So it’s in conflict with the Unix ideal.) It’s invented its own ways of doing things, creating dependency structures that are particular to systemd, meaning that systemd is an additional thing that you have to learn in and of itself. The single-tool-to-do-everything reportedly makes it a ton easier to administer large installations / multiple computers, and the dependency system _does_ boot up a laptop super quick.

So basically you have old-schoolers who are good with scripting for whom old-school init worked just fine, and you have new-schoolers who are used to a single control panel for everything or who administer large systems and don’t mind jumping through all the systemd hoops to get it done.

I’m honestly in the first camp, so I hope I haven’t painted the systemd side too broadly.

This is pretty spot on, and I’m in camp 2. I’ve done the init route, it works as long as the libraries and packages that your script requires are installed and your init script is checking things out. Systemd allows you to configure your application as a full service with dependencies and requirements before your application is even started. No fuss, no muss.

Having said that, I do think that it’s a bit ridiculous that they want to use it for “everything”. Once you have a single point of dependency for any kind of hierarchical tree, you have a fragile system. That’s one of the positives of using Linux over Windows as a platform, because of the decentralized system architecture that Linux provides(or rather provided). I’m not going to get on the Windows hate train either, Microsoft does some impressive things as well that are far better than Linux could provide.

I think it’s important for any engineer to look at the pros & cons of each platform(and distro) before making a jump to it as a solution.

Isn’t that the problem though? The major distributions are jumping on without asking their engineer users the pros and cons? A non-systemd solution requires more work to obtain, and marginalizes its users to one-off distributions?

I appreciate the clear explanation. I personally tend toward the init side. As Father Mulcahy might have said “Ah! Modularity!”

At its core, systemd is designed to bring up software to run. For that it does dependency management, as well as triggers.

For this, there is generally the “systemd” way: you need to do it the way systemd decided, or it won’t work at all. In the old days of bringing up your system, it was simply about 200+ shell scripts that were called doing their thing.

I welcome the change to systemd in this area, for the simple reason that those shell scripts provided a ton of flexibility, but also a ton of different ways things can fail. Or even, some software had startup scripts that acted differently than others (simple example: some required a “start” parameter to actually start things, some had “start” as the default as well. Some provided a “restart”, but not all of them).

Systemd also provides logging. And this is where it actually gets quite a few complaints. Instead of plain text log files, systemd does its logs in binary form, and thus requires special tools to read them. That is a bit of a switch; however, it does provide good ways to filter logs.

Good for some, bad if you have all kinds of tools/scripts that look at plain text log files.

(Also, stuff started with systemd gets its stderr output logged by default, which is pretty awesome, as that saves work on the application developer side to ensure that things are always sent to the logger.)

But the largest failure there (IMHO), is that they introduced this logging before it was ready, and it’s still not ready. There is no facility to send your logs to a remote server. You’ll have to use some ugly workarounds to get that working.

So those are the main 2 things of systemd.

And finally, some people complain because they think systemd is trying to do everything. But the reality is that it has a bunch of optional parts that you can use, and are a bit more optimized. (Example here is login prompts)

And there are misconceptions, like “systemd-mount” isn’t a replacement for the mount tool, it’s a wrapper that systemd uses to use the mount tool.

I agree with Elliot. The Unix ideal is to have lots of little tools that you build bigger tools out of (the original Minecraft). However, systemd has had some issues. It tries to do everything. It has historically poorly coordinated with other things in many people’s opinion. It makes it hard to find/view/manipulate logs without using its tools. It is pervasive so it is hard to remove if you don’t want to use it for some things it does.

However, from a zoom out perspective, it isn’t just systemd. Baloo comes to mind and I’m sure there are others. My personal opinion is this has been caused by experienced Windows/Mac (historical) developers jumping to Linux. There, the mindset is often, “The program is smarter than the user so I’ll do everything and not give a lot of visibility because I always work!” Which is great until things don’t work. Then it is much harder to coax what is wrong out of the system.

But it seems like it is where we are, so there’s no real point in fighting about it anymore.

A couple of things were/are wrong with it actually.

If you ignore the stuff that is not about the code, such as that Lennart is an ***hole to work with, there are still a couple of technical problems with it. The biggest one at the time was that it was forced into production too soon. I have done Linux QA for the last few years and I can assure you that it has been a “fun” experience; it was really easy to crash it with stress testing back then, etc. This has been mostly dealt with after the first few years.

It’s also a huge codebase with a dbus interface, so there is a large surface that is almost impossible to get a proper security review for.

It’s causing nightmares to the BSD camp, because it actually provides interfaces that important projects are starting to use, but systemd never aspired to be portable in the first place.

So there are actually technical reasons for the opposition but my guess is that most of the opposition was just because it was a huge change in the base system and that it means that everyone would have to learn something new.

I wish more people knew about the kernel VFS’ inotify solution, and the suite of userspace tools built around it. They hook into the VFS, instead of grossly and inefficiently doing all sorts of dentry/lstat calls. They don’t always work on every filesystem, because some — namely remote — don’t always notify clients, or in a cluster, if the node itself has been updated with a lock (i.e., essentially only work for modifications of files on the same node).

Outside of inotify, be very careful running some of these commands (e.g., find and loops with entr) on a cluster or network file system. I regularly have to educate individuals on what should never be done on such, as dentry and *stat calls do not get pulled from memory, or are only run once. We’re talking a performance hit due to going from hundreds of nanoseconds to milliseconds (4 orders of magnitude).

As always, scripters need to know what they are doing.

> In the second window issue the command: echo "/tmp/foo" | entr wc /tmp/foo

That last “/tmp/foo” looks superfluous.

btw, in a modern bash you can get rid of the echo:

entr wc <<< /tmp/foo

It’s not the way it’s piped that makes it look redundant. It’s because you’re performing an action “wc foo” on the same file that you’re watching (“echo foo”).

So if you wanted to check the lines in foo when bar changes: echo "/tmp/bar" | entr wc /tmp/foo

The entr command is just more flexible, which means that you have to do something redundant-looking when you want the simplest case.

You could use /_ but I had not discussed it yet so I spelled it out. Also, I was confused about your comment until I saw the editor’s red pen removed the example I had with the <<< syntax.

If you are running python, the watchdog package (https://pythonhosted.org/watchdog/) is an excellent, easy-to-use and cross-platform library for monitoring file system events.

Does “common use cases” = common case? Or you should use a case or enclosure? Or you should use more than one case? Is it the same as “use-cases”?

Yes, I’m waging a personal war against “use case”. First the hyphen, then the World!

https://en.oxforddictionaries.com/definition/use_case

Good-luck with that.

Oxford “living dictionary”. We can prune it when it gets unruly.