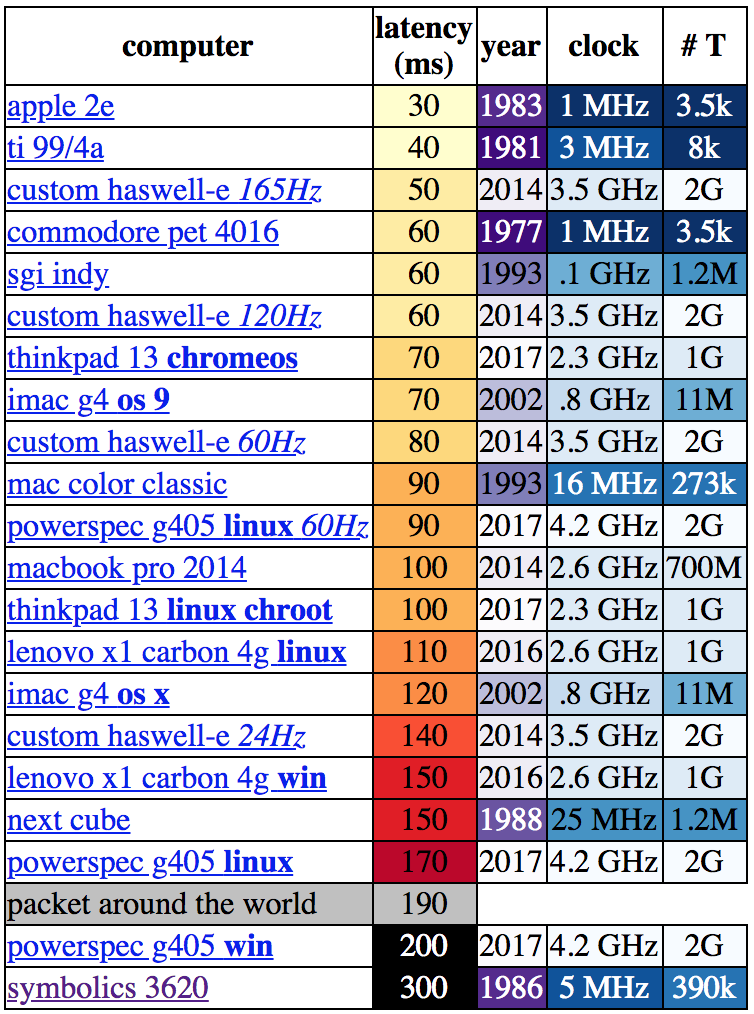

Ever get that funny feeling that things aren’t quite what they used to be? Not in the way that a new washing machine has more plastic parts than one 40 years its senior. More like “my laptop can churn through hundreds of gigaflops, but when I scroll it doesn’t feel great.” That perception of smoothness might be based on a couple of factors, including system latency. A couple of years ago [danluu] had that feeling too and measured the latency of “devices I’ve run into in the past few months” (based on this list, he lives a more interesting life than we do). It turns out his hunch was objectively correct. What he wrote is a wonderful deep dive into how and why a wide variety of devices work, and into the hardware and software contributors to latency.

Let’s be clear about what “latency” means in this context. [danluu] was checking the time between a user input and some response on screen. For desktop systems he measured a keystroke, for mobile devices scrolling a browser. If you’re here on Hackaday (or maybe at a Vintage Computer Festival) the cause of the apparent contradiction at the top of the charts might be obvious.

Q: Why are some older systems faster than devices built decades later? A: The older systems just didn’t do much! Instead of complex multi-tasking operating systems doing hundreds of things at once, the CPU’s entire attention was bent on whatever user process was running. There are obvious practical drawbacks here but it certainly reduces context switching!

In some sense this complexity that [danluu] describes is at the core of how we solve problems with programming. Writing code is all about abstraction. While it’s true that any program could be written directly in machine code and customized to an individual machine’s hardware configuration, it would be pretty inconvenient for both developer and user. So over time layers of sugar have been added on top to hide raw hardware behind nicer interfaces written in higher-level programming languages.

And instead of writing every program to target exact hardware configurations there is a kernel to handle the lowest layers, then layers adding hotplug systems, power management, pluggable module and driver infrastructure, and more. When considering solutions to a programming problem the approach is always recursive: you can solve the problem, or add a layer of abstraction and reframe it. Enough layers of the latter make the former trivial. But it’s abstractions all the way down.

[danluu]’s observation is that we’re just now starting to curve back around and hit low latency again, but this time by brute force! Modern solutions to latency largely look like increasingly exotic display technologies and complex optimizations which reach from UI draw functions all the way down to the silicon, not removing software and system infrastructure. It turns out the benefits of software complexity in terms of user experience and ease of development are worth it most of the time.

For a very tangible illustration of latency as applied to touchscreen devices, check out the Microsoft Research video after the break (linked to in [danluu]’s piece).

…and/or the possibility of sloppy optimisation of development code over time. Layer upon layer of bloatware bloated rubbish.

Those who can, develop. Those who can’t, slag others off in HaD comments.

Linus is going to be so butt-hurt then. ;-)

But you can learn to develop software, it’s more enriching than refining HaD comments…

++++

Nonsense.

Exactly. Cake with so many layers that a truck is needed to move it :)

THIS so much!

Being a software developer and having to deal with slow software that you didn’t write is sort of a personal hell. There’s no excuse for poor performance, at least from a technical perspective. (From personal experience, it’s usually management saying “we want it cheap and now, so don’t worry about fast!”).

Approaching from the other side, “perfect as the enemy of good”. Nothing would get released because there’s always one more thing that could be done, be the goal optimization or just one…more…feature.

That feature thing seems to be the biggest issue. So much focus on features no one asked for that there is no time to fix performance problems and bugs, especially when management wants a new release every year (or every quarter!). We’re at the point where most people and companies would happily buy a new release just for bug fixes and performance enhancements, but they are already paying a separate fee for those (and buying upgrades as well)…how do you sell the new release as a bug fix when you are also charging a maintenance fee for bug fixes? Plus, those leading in features want to stay in the lead and those trailing want to catch up. So you end up getting new release version 2019 that still has bugs from version 2001, and which still uses Java, Flash, and 3 different versions of VB runtime because no one has had time to rewrite those components…and the only thing the average user gets out of it vs the 2001 release that ran faster (even on 2001 hardware) is that it opens files made in the 2019 release. My industry is full of it. Creo is at least a decade behind and while every new release gets features the competition had 10-15 years ago, every new release is also even more buggy and slow. SolidWorks leads the pack but works so hard to stay there that the performance drop from 2017 to 2018 was so bad that a lot of companies rolled back and just keep a couple of 2019 machines around to open vendor files and down-convert them. CATIA does it right…it’s stable and fast and it doesn’t get annual releases…but the price is too high to get much traction in the marketplace, even if the time saved by engineers would easily pay the difference. So basically, everyone is to blame at this point.

Adobe has done this a lot. Customers are lucky if Adobe releases ONE patch/update on any version of any of their products. Any bugs still left, tough. Buy the next high priced version when it’s available.

Honestly I don’t get it. Working in the industry with dozens of Creo seats, we basically just pay to use it per year. We don’t want new features, just bug fix and support (and obviously licence). It’s so much effort (read time and money) to switch to another system at that scale of industry that there’s no way we’re gonna leave to Catia or Solidworks, even if everyone desperately wants to. So why can’t they (Creo) focus their energy on optimization rather than bling bling shiny features? Easier to keep a customer than getting a new one in that case.

All deserving of the grace hopper award for wastefulness. 1ms to be worn around the neck at all times.

The moment he showed 1ms lag in that video, I wanted to see what 2ms looked like.

When you look at the compiled code, the many layers of abstraction often vanish. The layers are there for the humans. In interpreted code, it often compresses into an indirect call or reference to a pointer. The Pythonish systems have bounds checking and argument parsing and guessing to do that isn’t the fault of the layers of abstraction. For example, if you look at Linux drivers, which are rather complicated to write, it is very likely to be mainly configuration stuff. The actual run-time code is often very small and fast.

Wow, I’ve long believed that old Apple 2 from the 1980s was the fastest computer I’ve ever owned. It had a 3 MHz speedup card, which really felt awesome with software designed for 1 MHz (except games that played too fast).

The IIgs was especially fast. I would bet everything it does is faster than a modern PC. Boot. Load AppleWorks. Write a letter. Save and quit. All faster on an Apple than any modern Windows machine.

No. I still have my GS (and a 2e) and while it is fun, it is painful, especially in GS/OS.

1) 60Hz hurts

2) Finder window redraws that you can watch

3) Booting from the 40MB HD with 3MB of RAM takes a few minutes. (booting from a RAM disk is pretty quick).

4) AppleWorks is pretty fast, but you are not presented with a blank document. So getting to the document, typing, saving, printing on the 80cps dot matrix printer… these take a while.

But once it is running it is responsive! So latency is good.

I have one with a 10MHz accelerator. I added a card that emulates drives with an SD or CF-II. Boot is basically a high pitched beep – done! I run KIX (A UNIX-like shell on ProDOS) and an 8 bit frame grabber. It is a hoot. (And the sculpted KB on a IIe Platinum is very nice). A 6 core 4GHz Intel with Ubuntu and 32G of RAM is as responsive and as others have said, does a lot more. I would be waiting weeks or months for the IIe to do what the Linux box does in a few seconds of ripping through text files and spitting out compiled C++.

Framework over framework, we build applications that weigh 50MB and just draw two boxes …

Just look at Android apps or Node.js. It’s getting stupid.

The difference is that back in the 80s you’d have had to stop after you’d drawn your two boxes. Now that’s just the beginning.

Shannon, I think the 1980’s demo scene would respectfully disagree.

Nope, that’s all just boxes.

Obviously I was trying to make a point. The frameworks that [imval] is complaining about make the development of new software much much easier. Demo scene isn’t a thing on modern machines because you’d just use a library to open a video player.

Bulls**t!!! I had an Atari ST and an Amiga back then and they had pretty responsive GUI environments with just 1 MEG of RAM. Of course they weren’t running garbage bloatware like Java. Just plain old compiled C code, no fancy, bloated libraries that ran into the hundreds of megabytes.

I coded GUI apps that had a disk footprint in the tens of KB in C and Modula-2 at the time.

No need to swear, young man.

I’m sure you had lovely GUIs in a megabyte of RAM. You can do the same today if you like. However, what we’re talking about here are the software libraries on modern multi-tasking systems.

Spoiler alert!

The letters under the ** are “hi” in that order. Ooops.

A little reminder, the Amiga had a fully preemptive multitasking OS, the kind you expect today. 1 MB was a bit tight, but as soon as you had 4 MB RAM, you were golden.

Make the learning curve steep enough to code in (e.g. Amiga) and you’ll get code from top notch programmers.

Conversely when you make something so easy that the cleaning crew can code in, you get garbage.

BTW some of my Win32 GUI C programs are around 100K and do not require additional run time components. They run on win2K to win10.

I all but gave up on learning Android programming when my “Hello World” app was 1Mb in size…

That’s a minimum size app; your hello world app certainly had an icon, or icons in multiple sizes, and certainly the AppCompat framework.

I make a webcast player with material design, an icon, REST queries, listings, ads, a JSON lib, the AppCompat lib and an audio player, and the APK is under 2MB: 1.99MB for the debug version and 1.67MB for the signed release APK.

You just have to be careful what you have as dependencies!

Thanks for the response, I’m quite the Luddite when it comes to installing apps on my phone or tablet. I just don’t want to load any more spyware.

My Samsung Galaxy came with so much “Swampscum” and Verizon bloatware that I can’t delete and won’t use because they ask for permission to access Contacts, and other files I feel they don’t need to access in the primary use of the apps.

You can’t remove those apps unless you’re rooted, but if you use something like “Adhell3”, you can disable all the bloatware you want and use it as an adblocker without needing to root your Samsung Galaxy device. I have about 50 apps disabled on my Note 8 using Adhell. Check it out!

Instead of unpacking it and researching the reasons why it’s that way? To be fair, this attitude won’t get you far in any new area, not just Android.

Counterpoint: I enjoy no longer having to manually tell my computer games what IRQ to use for sound, or rebooting in extended memory mode vs expanded memory mode depending on the needs of the program I’m trying to run. Both achievements are only made possible by big overarching frameworks that can hide those sorts of details.

Can you imagine the smartphone ecosystem if all apps needed to be recompiled for every different hardware configuration?

Oh yes indeed :-)

Oh, thanks for bringing that up. Now I’m going to have nightmares about running out of IRQs again…

Boxes like Amigas never had that nonsense in the first place…

The TI-99/4 and 4A didn’t have that problem either. Peripherals contained their own Device Service Routine. The console operating system knew absolutely nothing about any hardware not built into the console, aside from ‘dumb’ devices like joysticks or a cassette recorder.

Connecting ‘sidecar’ devices or plugging in the Peripheral Expansion Box and cards into the PEB automatically extended the console OS with their functions. To use the new hardware, a program only had to ‘know’ the function calls. That made all peripheral hardware directly available to BASIC and other languages without needing to update the programming language.

For any software that wasn’t programmed to use a specific peripheral, that hardware was simply ignored by that program. DSRs just sit there waiting for a program to call them to action.

In other words, Texas Instruments perfected Plug n Play before the IBM PC existed.

If only IBM had done something like that so that expansion cards would automatically, without user intervention, add extensions seamlessly to the system firmware in a way that was completely transparent to software. They tried something like it with MCA but every MCA device required booting the PS/2 with a special configuration floppy to be able to set it up. On a TI it Just Worked by plugging in and hitting the power button.

Heh, yeah, what smartphones were before the iphone.

I had a windows mobile 6.5 smartphone and I remember having to edit the registry settings to change icon colors.

And I thought it was ridiculous that I had to regedit my phone more than my computer.

“Manifested obsolescence through software that does more than ever before.”

FTFY

Does it really? Sounds like one of those articles of faith that keeps the jobs flowing. After all if one has done everything, what’s left?

I believe so. Everything I regularly use does, at least. Much of it is behind the scenes things that you don’t remember that you used to have to do, like saving files, most of my editors these days just remember the work I’ve done so that even if they or my machine crash they’ve still got everything. On that note also: I experience far fewer stability issues with my software these days as well.

Good for you, not what I’ve experienced though.

Yeah, I just take it for granted now that I can write into an unsaved file, kill the machine, and the content will be there when I boot it again.

I get grumpy now when I re-open a file and the undo buffer has been cleared.

We’re running machines with processors magnitudes faster than those of yesteryear. Around the turn of the millennium we were looking at about 300 MHz, 32-bit, single-core processors to get our work done. So in that timeframe our computers have gotten 10 times faster, and yet so much slower.

You can chalk it up to new features but I don’t think there are enough new features in most software to warrant the sluggish operation.

Plus, “responsive and fast” could be added to the list of features customers want.

Just because it is doing more doesn’t mean it does more.

Of course nothing to do with all your data/actions being sent to google/facebook/wherever!

Nothing to see here, peon. Move along. It is all for your benefit, and government knows best.

I’ve been saying for a long time that the faster the processor, the longer it takes to boot up.

That’s the perfect reason to add a video player to your bootloader!

No more boring booting, just watch an episode of your favourite SciFi until your mobile has booted! \o/

One jokes, but there’s already an expired patent on loading screen mini-games (US 5718632 A).

There was a game on the original PlayStation that had something akin to a Space Invaders mini-game for a loading screen. I can’t for the life of me remember what game it was but I thought it was a fantastic idea and I kind of wish some of the more modern games would offer something like that. Something lightweight that won’t significantly impact the load times, just for something to do because some of these games take a while to load.

You played Galaxian while the Playstation loaded the entire game of Ridge Racer off the disc so you didn’t have to sit through any loading screens once the game started.

I have a Ryzen 2700X at 4 GHz with a Samsung 960 EVO and RAM at 3200. Windows 10 takes about 30 seconds to get to the password screen from a completely powered-off machine, and is fully operational 40 seconds after I press the power button. It’s even faster if I enable “fast boot” in my BIOS, but then I need to jump the motherboard if I ever want to get back into the BIOS menu, because fast boot is so fast you don’t have time to press the needed key. With fast boot on it takes about 25 seconds to get my PC operational. Thing is, the processor isn’t the only thing involved in the boot process. RAM, HDD speed and bloatware will affect the boot speed much more than the processor alone.

I’m thinking most of those machines are garbage. Yours is “modern”. Display is also a big part of latency (input lag). Gamers have been striving for lower input lag and response times. Business computers and laptops don’t bother with any of that. Going from a computer like yours with 144hz display at 1ms response time to a Thinkpad laptop is day and night. Old displays look like a stuttering mess.

I have an Amiga 1200 that boots from a Compact Flash card. It goes from power off to Desktop ready in under 10 seconds. It does that with a 68030 @ 28 MHz and a total of 6 MB RAM. Remember, Amiga OS has a GUI and is a multitasking OS, so there are a few things to do and load during boot.

There is no real excuse why a system like yours, that is much faster in EVERY respect, should take 4 times as long to boot.

No excuse except you don’t have USB, ethernet, wifi, networking file sharing/print servers, OS-level spell-checking, indexed file searching that can handle terabytes of data, 32 bit graphics at a resolution your Amiga can only dream of, with graphical UI effects that are more sophisticated than what your Amiga could generate with raytracing software and a week or two’s worth of time. Seriously, the login screen for Windows 10 involves a couple of graphics effects that are run so fast by modern hardware we completely take them for granted…

You’re like that guy in a 1950’s car who brags about how fast his car goes on 100HP compared to a 250HP luxury sedan from present day. No AC, you’d die in a crash over 25mph, the ride at 50mph is deafening, it gets half the gas mileage, the handling and brakes are atrocious, etc. Meanwhile the luxury sedan can do 80mph on a 90 degree day and keep the interior quiet enough to carry a conversation at normal speaking volume, and a pleasant 69 degrees…and get 30mpg doing all that.

As I’ve been shouting into the void between co-workers’ ears for a long time – only write code when you’ve exhausted the possibilities for avoiding writing code.

https://en.wikipedia.org/wiki/X_Window_System#Principles resonates.

One of my favourite things is deleting code :-)

There’s nothing quite like reducing thousands of lines of nonsense to something you can read through on one screen without scrolling.

I second this. Deleting huge chunks of unnecessary code is always a highlight! And if that code was to support a feature no one actually used that I get to remove? Even better!

It certainly doesn’t help that everything is trying to communicate with a computer on the other end of the world. Checking for updates… Sending diagnostic info… Waiting for approval… Updating always at the moment you try to do something (while it could have done that during the period it was “sleeping”). And let us not forget constantly checking allllllll files for possible viruses. Ahhh progress.

The assumption of an “always on…” connection has changed the world, and not always for the better.

This is jumping to wrong conclusions based on flawed measurements. I suspect most of those data points are dominated by screen input lag + screen refresh. Any modern PC or laptop can have a latency of input to on-screen reaction of less than 10 ms (proof in any competently conducted test of gaming monitors). Perhaps certain applications are burdened with UI latency, but this is absolutely not due to some common tendency in modern computers.

Hence the invention of statistics for teasing out the message in the noise. Just needs a much larger, more comprehensive sample size.

Also, all PC-like systems were tested with Linux, which, depending on the configuration, gives user input a pretty low priority and prefers to handle more performance-related I/O first (like disk I/O).

“Perhaps certain applications are burdened with UI latency, but this is absolutely not due to some common tendency in modern computers.”

That’s what statistics are for. Pulling the “common tendency” out of the noise that’s “it’s not happening, it couldn’t be happening, it makes us look bad if it is happening”.

Input latency is a particular issue on Android. It’s partially masked by increased hardware speed. Pixel/Nexus phones stay snappy but modern budget phones are abysmal. Part of it is the input buffer and handling multi-touch, part of it is increased multi-tasking / context switching, some of it is lazy UI development paired with clunky UI libraries (hopefully Flutter with its declarative UIs will fix this).

Bluetooth controller latency on Android is particularly noticeable when you try to emulate old games. The muscle memory you developed years ago on SNES or Genesis suddenly doesn’t work. There’s a measurable difference in iOS and Android Bluetooth input latency.

Modern games on consoles can have this issue as well. Splatoon 1&2 have gyro aiming, Splat1 has ~50ms input latency and Splat2 has ~100ms input latency. The difference is VERY noticeable. It destroyed my snap shots until a later patch reduced the latency.

This doesn’t explain why my 4th gen X1 carbon is often slower than my T450s. Both have identical OS and software installed. Both have the same amount of RAM and SSD sizes.

How Windows 10 can open Word 2000 instantly but needs seconds for a PNG.

Perhaps it’s because Win 10 noticed that you loaded W2000 before and pre-loaded it while you weren’t looking and hid it from the performance monitor display so you would not know.

I worked to create an automation app that waited for a requester to be created before continuing, but the software just blew by that section. It turned out the software I was automating created all the requesters and gave them negative coordinates to keep them off screen, changing their location to make them snappier to appear. Of course this meant that start-up lagged as hundreds of them were created and positioned out of view.

I think there is a major “not made by my generation” problem. Abstraction was a wonderful thing at one point. We really shouldn’t be doing most tasks in Assembler or even C. Every 15 years or so we get a new framework that gets bolted on top of the old one and is supposed to make everything SOOO much easier. Instead programming is actually harder because once you get past the simple tutorial apps the framework usually just isn’t meant to do whatever you need it to do! So execution AND development are both slowed down, frustrated for no real gain. And if anyone tries to point this out they just get labeled as a greybeard that is afraid of change.

One must, of course, choose a framework appropriate to the problem domain and to the suggested solution to the problem. If you begin your project correctly that’s the sort of thing that should be evaluated.

This is basic economics at work — the programmers will get lazier and lazier (virtuous programmers that they are) and speed up their development by heaping on inefficiencies until the result is so obnoxiously slow that you, the customer, are actually willing to ditch the product in favor of a competitor who put more effort into optimization.

This finds an equilibrium when all competing software is just slow enough to be annoying, but not quite infuriating. Increase the speed of the underlying hardware, and the inefficiency equilibrium just shifts a little more.

Of course, some customers value efficiency more highly than others, and end up making choices that diverge widely from the majority of the market. :wq

I hear Google spends a lot of machine time fuzzing Chrome to find bugs. Maybe what we need is a cryptocurrency that derives its value from fuzzing code to increase software efficiency for everyone.

At the price of battery life/charging time and of course new hardware price to be forked out. You/we all pay for those out of our pockets!

Quite true. The more “modern” the software is the more bloated it is and the more useless features it has that 99.9% of the user community will never use.

But some coder thought it would be cool.

Yep indeed, just look at the proliferation of clean and distraction free writing apps with minimalist everything including paragraph type/font selection…..just write.

We had those for a long time, back then people called them ‘editor’.

Except that 9/10 times it’s not the “coder” making that decision, it’s sales and marketing insisting that “everyone” is asking for it. In my 20 years of professional development experience, most of the major issues of feature creep can be attributed directly to the typical sales commission rewarding people for saying “yes” when they should be saying “no.”

The Gigatron responds in the same frame to the game controller, so 15ms-30ms, depending on the point at which you touch. But hook up a PS/2 keyboard, and you already get at least a full frame of delay and are in Apple-2 territory or slower.
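A rough way to see where a range like that can come from is a simple frame-based model. The sketch below is only an illustration under stated assumptions, not a description of the Gigatron's actual timing: it assumes a 60 Hz display, input polled once per frame, and a result that becomes visible a whole number of frames after the poll. The function name and the `pipeline_frames` parameter are made up for this example.

```python
FRAME_MS = 1000 / 60  # assumed 60 Hz display, about 16.7 ms per frame


def latency_range_ms(pipeline_frames, frame_ms=FRAME_MS):
    """Best and worst case input-to-screen latency in this toy model.

    Assumption: input is polled once per frame, and the result shows up
    `pipeline_frames` frames after the poll. An input that lands just
    before the poll waits almost nothing; one that lands just after the
    poll waits nearly a full extra frame.
    """
    best = pipeline_frames * frame_ms
    worst = (pipeline_frames + 1) * frame_ms
    return best, worst


for label, frames in [("shown one frame after the poll", 1),
                      ("one extra frame of buffering", 2)]:
    best, worst = latency_range_ms(frames)
    print(f"{label}: {best:.1f} ms to {worst:.1f} ms")
```

In this model each extra frame of buffering between input and display adds one frame period to both ends of the range, which is why a single added frame of delay is enough to change how a system feels.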

My solution is to do almost everything I can at the command line, and I still find fvwm the best desktop environment for productivity. Personally, I’ve found that the more elaborate interfaces contribute nothing to productivity.

Lots of people dislike / curse / do not use IDEs like Eclipse because of their sluggishness and slow start speeds.

Some time ago I (again) tried to use CodeBlocks and for about 10s it showed loading of modules for a bunch of different languages and what not that I have no intention of ever using, while it could easily be made smart enough to recognize that I’m writing some C++ code and only load the modules needed for that.

After opening a web browser it needs another 6 seconds to load the first web page which is just a few kB of custom html and a few small PNG’s which reside on my own HDD.

For 20 years I’ve been annoyed by programmers not giving a single second of thought to the responsiveness of the programs they write. Most of those programmers probably have a relatively fast PC and they seem to assume everybody has the same, or that Moore’s law will fix it for them, or for their users.

Then there are the inherently slow interpreted languages, which are fine for simple scripts, but get abused for writing complete and complex applications. There are many of those, but I’ll just give one example: Meld, which is written in Python, and is quite a sluggish program.

That Microsoft video also looks silly.

Difference in latency between 1ms and 10ms on a screen with a refresh rate of (presumably) 60Hz or so?

But I admit I’m not a hardcore gamer, they probably know better what latency does.
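One way to reason about why small latencies can still be visible on a touchscreen: while a finger drags an object, the object trails the finger by roughly the finger's speed multiplied by the latency. The sketch below is just that arithmetic. The finger speed is an assumed, illustrative value, not a figure taken from the video, and the function name is made up for this example.

```python
def trailing_distance_mm(finger_speed_mm_per_s, latency_ms):
    """Distance a dragged object lags behind the finger: speed x latency."""
    return finger_speed_mm_per_s * latency_ms / 1000.0


FINGER_SPEED_MM_PER_S = 500.0  # assumed drag speed, purely illustrative

for latency_ms in (1, 2, 10, 50, 100):
    gap = trailing_distance_mm(FINGER_SPEED_MM_PER_S, latency_ms)
    print(f"{latency_ms:>3} ms latency -> object trails by {gap:.1f} mm")
```

Under that assumption the gap grows linearly with latency, so a tenfold change in latency is a tenfold change in how far the object visibly lags. Whether a given display can actually present the difference depends on its refresh rate, which this sketch does not model.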

SketchUp is an example of an allegedly interactive 3D sketching program in single-threaded Python that is sluggish as hell.

A fellow named Nikita had an interesting blog entry on the subject.

http://tonsky.me/blog/disenchantment/

If you ever used the application Borland Delphi (Object Pascal) … That was how an IDE should work! Loved working in that IDE for visual programming. Now for my c/c++, python, etc…. I mostly just use Geany or nano in Linux, or notepad++ in Windows land.

You can use Lazarus project, free IDE based on freepascal, almost identical to Delphi and mostly compatible with Delphi code and components. Great piece of software if you need to code some quick and dirty Windows/Linux application but capable of much more.

Back in 1984, one Bill Basham wrote a program called Diversi-Dial.

It was less than 64K, controlled 7 300 baud modems, and ran on an Apple //e.

As a programmer myself, I considered it quite a feat. Nowadays, people use compilers and interpreters to get their program written. As another poster said, when Hello World is 1+ MB, you can see how bloated it has become.

Not everyone has the fastest whiz-bang latest computer so I do understand having to write for multiple hardware configurations.

What gets me though is Windows 8-10. No one asked for them. Windows 7 is still a solid operating system that does what most people need. Microsoft recently started sending out updates about how it was ending security updates for Windows 7. The sky is falling! The sky is falling! The world is doomed! I never relied on Microsoft products for security. I browse the weather channel page, a few tech sites, get my mail, play Star Trek Online and Rail Simulator.

I also mess around with VB. Windows 7 allows me to do all this without fancy tiles, smart apps etc.

Recently I read that Microsoft now has a version of Windows desktop you can run in the cloud. I wouldn’t trust it. It’s a slow march to software as a service. You don’t pay, you can’t use the OS. I see that coming down the road, it’s a matter of time. As for me, I’ll stick with my Windows 7. I’m happy.

Yes but MS is going to play hardball with nagware, and security updates (like no one has learned from XP what happens).

When Microsoft sent the nagware to “up” grade from 7 to 10, I was pissed. Tried to delete the file gwt.exe or whatever it was called, didn’t have permissions. Had to force “ownership” of the C: drive and all sub directories and files to myself, then I was finally able to delete that sucker.

Of course, Windows really didn’t like me changing the folders’ ownership properties and became unstable so I had to nuke it anyway…

Woz wrote his long floating point for BASIC and fit it in 256 bytes. I know modern compilers are very efficient and can often produce faster code than humans because it does not have to be readable. But for the life of me, I don’t know why some programs are so huge. I’m sure there must be good reasons, but ???

I ran Windows 2000 for the longest time, but it eventually got to being a problem in college because to access the online courses I needed a “modern” browser and there simply weren’t new releases of Firefox, Chrome or whatever that supported 2000. Nothing wrong with the computer or OS per se, it just became impossible to get the software I needed to get the job done.

Win 7 is solid and I for one have no intention of “up”grading to 10 any time soon, but at some point developers are going to stop writing software for 7 and a change will be forced. Let’s just hope by then there’s a decent replacement available.

So, I’ve been doing software development for over 36 years, first as a hobby, then my profession and the past few years I realized they are both the same thing. Back in the old days, on a DOS operating system, one was single-threaded and a BASIC program, interpreted, would take a few seconds to a few minutes to execute depending on complexity. Of course we were talking about processors operating at 10 megahertz or so and RAM in the 32KB or 64KB or 128KB (now we’re flying). Also, we were talking the original Intel 8 bit 8088 processors.

About 34 years ago, I graduated to IBM mainframes with 32 bit architecture and 1 gig of ram using E-MVS (extended multiple virtual storage). I think we had 128K disk-packs the size of large file cabinets with a robotic tape cartridge silo for data backups. Reel-to-reel was still there, but it was a 3rd Tier choice for storage due to the manual intervention in mounting and dismounting tapes, but it could hold volumes of data second to none. With the time-sharing option (TSO), which was partitions in the box itself, i.e. 2 dev systems, 1 test and 1 prod VM, we were screaming with our water-chilled machines. The chillers were housed in buildings the size of a 3 car garage.

Then, in 1995, everything changed and a revolution took place with Windows 95. A gorgeous, intuitive interface and a true operating system instead of the facade that was Windows 3.1 still running DOS 7.0 in the background. Now we were styling and we could develop with Visual Basic, remember that? What are we doing these days, Visual C++. We’re a long way from the Beaches of Normandy. What are we up to these days, Windows 10? Yeah and the next version is probably going to be in the cloud.

Nowadays, I have an Android Smartphone with an Octacore (4 CPUS and 4 GPUS just for graphics) Qualcomm RISC processor. I have 4 Gigs of Ram and I enhanced the built in 32 GB with an additional 64 GB Samsung mini-SD card. This Android phone is more powerful, has more dynamic memory (RAM) and more non-volatile storage than the mainframes I worked with 30+ years ago. Also, it is connected 24 x 7 to the internet so I can access anything anywhere as long as I have a stable connection. We did not have the internet 30 years ago. Also, my 1000+ songs are on my Android smartphone, with multiple different playlists. We didn’t have that either and with bluetooth, I can play it in my car, my RV, my boombox and my high-fidelity home stereo system.

Speaking of the cloud, that appears to be where everything is headed. Let those server farms supporting the cloud architecture do all the heavy lifting. Your smartphone just has to support the UI and the wireless transport to and from the cloud. I will probably help my employer move into the cloud before I retire, which will also be in the next year or two. We’ve come a long way and it’s been one heck of a ride and most enjoyable all the way.

I’ve noticed that in the Era of Windows (1990 Windows 3.0 to present day) that many programmers continue to create software like they’re writing a program for DOS.

They do all kinds of things that are not needed, duplicating functions provided by the Windows API. What things? Custom file dialogs and other utilitarian things. Just. Stop That. Need to open or save a file? Use the @#%#%@% standard dialog box and make your program leaner. (Never mind that Microsoft Office is the worst offender when it comes to not using Windows Standard Functions. Same for Adobe.)

This is one of the major reasons a GUI OS exists, so individual programs don’t have to each have their own support for printers, don’t each have to do their own thing for accessing video features above basic text. Windows provides a huge abstraction layer so programmers don’t have to work as hard – yet many of them do, making more work for themselves and bloating their software.

Cross-platform software is especially guilty of this. It often has unnecessary stuff that provides a uniform OS interaction that’s not standard on *any* OS it can be compiled for. Wouldn’t it be far simpler to (for example) write the code so that when compiling for Linux it branches to standard Linux file open/save function calls, and when compiling for Windows it uses standard Windows functions? Thus the user experience becomes easier because the cross-platform program interacts with each OS in the way the *users of the OS* are used to from most of the other software they use. Many have been the times I’ve run into cross-platform software bugs that simply wouldn’t exist if the programmers hadn’t insisted on making all the users use *their* invented way of doing something rather than using the API functions of each OS.
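For what it’s worth, the branching described above can be sketched in a few lines. This is a runtime analogue in Python of the compile-time branch, not anyone’s actual code: the function name `pick_file_dialog` and the table are made up for illustration, the strings only name each platform’s native dialog facility (nothing is actually opened), and Linux has no single standard chooser, so the GTK entry is just one plausible choice.

```python
import sys

# Labels for each platform's native "open file" facility. Nothing here is
# called; the table only shows where a real program would branch.
# The Linux entry is an assumption: the toolkit depends on the desktop.
NATIVE_FILE_DIALOGS = {
    "win32": "Win32 common dialog (GetOpenFileName / IFileOpenDialog)",
    "darwin": "AppKit NSOpenPanel",
    "linux": "desktop toolkit chooser (e.g. GtkFileChooserDialog)",
}

FALLBACK = "application's own fallback dialog"


def pick_file_dialog(platform=None):
    """Return the label of the native dialog for a sys.platform-style name."""
    if platform is None:
        platform = sys.platform
    for prefix, backend in NATIVE_FILE_DIALOGS.items():
        if platform.startswith(prefix):
            return backend
    # Only an unknown platform gets the home-grown dialog.
    return FALLBACK


if __name__ == "__main__":
    print(f"{sys.platform}: {pick_file_dialog()}")
```

The point of the table is that the custom dialog becomes the exception rather than the rule: it is only reached on a platform the program knows nothing about.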

Yes. Yes! YEEEEESSSSSSS!!!!!!

It’s not just me.

It’s not like we are asking for the device/program to do the task instantaneously. But it would be nice to have it actually acknowledge our input without noticeable lag. Typical scenario:

Push button.

Nothing happens.

Assume you fat-fingered it.

Push button again.

Nothing happens.

Glare at device. Wonder if it is hung or crashed. Shake it. Decide to give it one more chance.

Push button.

Action happens 3x in a row. Slowly.

Curse.

I have devices that can perform multiple billions of operations per second, yet cannot respond to a simple click fast enough to feel like it is not in slow-motion. If enough time passes between user input and some (hell, ANY) response, that the user can wonder if the input was accepted and try it again, then it’s wrong. You don’t have to make things actually work faster to make people happier, you just have to respond faster. And it’s not even that fast, by computer standards. Humans are glacial compared to processor speeds. And yet, every new device seems slower than the last, and every software update slows it down further.
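A rough sketch of “respond faster without working faster”, in Python. Everything here is made up for illustration: the `Button` class, the `slow_action` stand-in and its 0.2 second delay are assumptions, not any real toolkit’s API. The press handler answers immediately and hands the slow work to a worker thread; presses that arrive while the work is running are acknowledged but not queued, so the action does not fire three times in a row.

```python
import queue
import threading
import time


def slow_action(label):
    """Stand-in for the real work; the 0.2 s delay is an arbitrary assumption."""
    time.sleep(0.2)
    return f"{label} done"


class Button:
    """Hypothetical button that acknowledges input before doing the work."""

    def __init__(self):
        self.jobs = queue.Queue()
        self.results = []
        self.busy = threading.Event()
        threading.Thread(target=self._worker, daemon=True).start()

    def _worker(self):
        # The slow part runs here, off the thread that handles input.
        while True:
            label = self.jobs.get()
            self.results.append(slow_action(label))
            self.busy.clear()
            self.jobs.task_done()

    def press(self, label):
        """Give feedback right away; never block the caller on the slow work."""
        if self.busy.is_set():
            return "busy, still working on the last press"
        self.busy.set()
        self.jobs.put(label)
        return "got it, working..."


if __name__ == "__main__":
    button = Button()
    print(button.press("save"))   # answered immediately
    print(button.press("save"))   # answered immediately, not queued again
    button.jobs.join()            # wait for the background work to finish
    print(button.results)
```

The work still takes just as long; the only change is that the user is told straight away that the press registered.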

And it’s all down to sloppy programming. I know. I have spent most of my career optimizing software from above, in and below the OS level. It’s crap piled on crap piled on crap until everything crawls. I *HATE* bad software. I *DESPISE* slow software. We’ve been writing code long enough to know better. Hoping that running crappy, slow software on faster hardware will fix the problem is like hoping feces tastes better if you spread it on a firmer piece of toast. As a programmer, every second of a user’s time that you waste should weigh on your soul like it was shortening your own life. But it seems these days that performance, like security, is an afterthought, if anything.

I don’t see this getting any better without some sort of major change in the way we do things.

“Computers should wait for people, not the other way around.”

Part of the reason you have that sequence is that we’ve been conditioned through decades of computer unreliability to expect it around every corner.

I’m not entirely sure it is just what you mention. I think there is some slowing down going on in the OSes, maybe through updates or some such mechanism. Certainly I have observed such in the Apple stuff. Once a new OS and hardware have been released, updates often result in a slow system. And there was that proven case of Apple slowing down iOS devices due to “ageing batteries”… yup.

Computer programmers should write code to save people time and not the other way round, i.e. don’t write it in crappy inefficient languages and frameworks to save development time.

“Speaking of the cloud, that appears to be where everything is headed.” … But … is it good? Just because everyone (like lemmings) ‘seems’ to be… should we? Can we withstand the ‘net’ disappearing some day due to, say, a war that cuts out our ‘network’? Lots of applications/data should stay ‘in-house’ in my opinion. The ‘reason’ that everyone is doing it doesn’t make it the ‘right’ thing to do. Even cell-phones… Can we live without access to a cellphone? I know I can. Can you?

As for slow down…. Boot time does seem slower …the faster the system… Just is. More complex setup I suppose. Luckily booting isn’t part of my daily routine. I only boot when I have to, which can be months apart. So not a big deal. That said, I use Linux on all my home computers. Once booted the system(s) just fly. Even the little Raspberry Pis with SSH (I don’t use GUIs with these small devices). I don’t go for bling, so I use a fast minimal GUI on my desktops. By using SSDs, lots of RAM, Gigabit networking, a middle-of-the-road graphics card and a current processor I don’t see ‘slow downs’ (other than internet related, which I have no control over).

As a fresh college grad programmer from the real-time world (Z-80, 68XXX, etc.) starting in the 80s with RTOSs, I understand ‘speed’ and the need for optimized code, threading, fast user response, etc… That carries through even to the programs I write/support for desktops. My brain is always looking for ways to save a few cycles as I write C code… Even in slow languages like Python. I don’t see that mindset today. In fact, reading posts, say on the RPi, they bemoan not having enough memory (>1GB) and the processor being ‘slow’ at 1.2GHz…. Sheesh, come on guys…. That’s swimming in memory and plenty fast! I remember doing a ‘lot’ with just 4MHz… 8MHz, and 32K, and thought 256K was a ‘lot’ of memory at the time!

Interesting article, thanks Brian!

The answer is to run older software on modern hardware… I still run Windows 7 64-bit, Office 2003, etc., on reasonably modern hardware (high end 2 years ago).

Windows boots in about 5 seconds. Most things happen instantly…

So why go to the latest bloatware???

“the answer is to run older software on modern hardware…”

Run NeXTStep with some nice packages in a VM.

A higher-clocked (faster) CPU needs a longer pipeline (shorter pipeline stages), and this increases interrupt latency.

More layers at every level (hardware and software) increase latency.

Synchronization between hardware elements (core to core, core and memory, GPU and core, hardware peripheral and core, etc.) increases latency. (On older Macs with PowerPC, the CPU’s integer and FPU units can exchange data only through memory (cache).)

Memory-mapped I/O needs to be non-cached (or bus snooping is needed), and non-cached memory accesses slow down the CPU.

(On x86 the in/out instructions have a latency of a few hundred cycles.)

Even bad hardware design (like the RPi’s USB-attached network design, which only allows around 30% of the bandwidth) leads to higher latency.

Larger CPU register files (64-bit registers) and SIMD execution units (AVX-512 has 32 512-bit registers, which is 2048 bytes to save per context) add to the latency.

If your OS saves every register on a context switch (like Linux), then on an AVX-512 capable x86 CPU a context switch means at least a 2048-byte save and at least a 2048-byte restore, and that’s not counting the paging and MMU latency.
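The arithmetic behind the 2048-byte figure, as a quick Python check. It covers only the 32 vector registers mentioned above, plus the 16 general-purpose registers of x86-64 for comparison; the opmask registers and other saved state are left out, and whether a given kernel really saves all of it on every switch is the claim above, not something this snippet shows.

```python
# Register-state arithmetic for an x86-64 core with AVX-512.
ZMM_REGISTERS = 32   # zmm0..zmm31
ZMM_BITS = 512
GP_REGISTERS = 16    # rax..r15
GP_BITS = 64


def state_bytes(count, bits):
    """Bytes needed to hold `count` registers of `bits` bits each."""
    return count * bits // 8


zmm_bytes = state_bytes(ZMM_REGISTERS, ZMM_BITS)
gp_bytes = state_bytes(GP_REGISTERS, GP_BITS)

print(f"AVX-512 vector registers:  {zmm_bytes} bytes")
print(f"general-purpose registers: {gp_bytes} bytes")
print(f"vector save + restore:     {2 * zmm_bytes} bytes per switch")
```

So the vector state alone is 16 times the size of the general-purpose register file, which is why it dominates the cost of a full save and restore.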

The OS design can hurt performance too. For example, if every program on your OS has its own virtual memory space (every executable is compiled to start at the same address, as on Linux, Windows, macOS, etc.), and the CPU has a VIVT (virtually indexed, virtually tagged) L1 instruction cache (as some ARM CPUs do), then on a context switch the kernel must flush the L1 instruction cache! That is a huge performance hit: a single task context switch can take 100 usec.

[Our OS’s (Threos) context switch on the RPi 2 takes around 0.2 usec; other OSes on the same hardware take at least 10 usec.]

With lots of cores, the granularity of the OS’s locks can be a pain. Shared OS resources (task/thread list, MMU pages, etc.) must be locked.

Most OS shared libraries are not shared in memory. Code duplication in memory.

[In our OS we really share the shared library codes in memory between tasks (mmu protected), minimal memory footprint duplication. => smaller memory footprint => cache friendly]

And finally as everybody observed application software (and libraries) are becoming really large. Large program => large memory footprint, and this also leads to higher latency (program loading, execution etc).

Back when Windows 95 was the hot new thing (IIRC wasn’t even 95a yet) I bought a new PC for my folks from tiger direct. From poking the power button to fully loaded and ready to go was 45 seconds. That was to where the hard drive quit thrashing, not just to having the desktop appear.

I haven’t timed my fancy AMD six core box where the CPU has more CPU cache than the old Win 95 machine had RAM, but I know it’s considerably slower to start.

Of course both are handily beaten by most 1980’s micros where boot time was flip power switch *BEEP*. A Commodore 64, TI-99/4A and many others made a contemporary Mac or PC appear glacial.

Here’s a post that seems relevant to this topic: https://games.greggman.com/game/imgui-future/

Personally, I sometimes have issues with text I’m typing taking way too long to actually register, mostly with search engine search bars or in-browser programs like Discord. This obviously isn’t a problem with the hardware itself, but with software designed to give such conveniences as auto-complete suggestions for every letter I type, even when the rest of the sentence I’m writing is already waiting in the keyboard buffer. Just waiting a fraction of a second after the last key press to start looking for spellcheck-autocomplete suggestions seems like it would eliminate most cases of this problem. But then I’d have to wait each time I want autocomplete instead of… every time I type a character in a field with autocomplete.
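The “wait a fraction of a second after the last key press” idea is usually called debouncing. A minimal sketch in Python, assuming a made-up `Debouncer` class and a 50 ms delay picked only for the demo: every keystroke cancels the pending lookup and schedules a new one, so only the text left after the typist pauses gets looked up.

```python
import threading
import time


class Debouncer:
    """Run `callback` only once input has been quiet for `delay` seconds."""

    def __init__(self, delay, callback):
        self.delay = delay
        self.callback = callback
        self._timer = None
        self._lock = threading.Lock()

    def trigger(self, text):
        with self._lock:
            # A newer keystroke makes the pending lookup obsolete.
            if self._timer is not None:
                self._timer.cancel()
            self._timer = threading.Timer(self.delay, self.callback, args=(text,))
            self._timer.daemon = True
            self._timer.start()


if __name__ == "__main__":
    lookups = []                                # what an autocomplete backend would see
    debounced = Debouncer(0.05, lookups.append)
    for typed in ["h", "ha", "hac", "hack"]:    # four quick keystrokes
        debounced.trigger(typed)
    time.sleep(0.3)                             # typist pauses
    print(lookups)
```

The trade-off is the one already mentioned: suggestions show up one delay later, in exchange for not doing a lookup on every single character.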

I’ll just have to actually get myself to do some work and find out for myself if I can do any better. Maybe the now-richer history of computing will let us cross-reference design ideas to get what we want from higher-level abstractions using fewer layers?

SketchUp is supposedly an interactive 3D drawing program, BUT they have decided to write it *single-threaded*, and in Python no less. If you have anything complex, it becomes a slide show on a machine that runs AAA 3D games smoothly with a few orders of magnitude more complex modelling and rendering.

I can understand if it were still a proof of concept internal demo or open source with a tiny group of people working on it but that’s not the case. It is a case of lazy development.

My windose computer in the 90s used about 15% cpu and about 40% memory just to idle (and these would fluctuate wildly for no apparent reason, with countless exe processes opening and closing in task-manager). My current windose computer at work does the same and I imagine this will be the situation 20 years from now.

The iPad Pro with its 120Hz screen and its zillion pixels looks pretty smooth to my eyes when I move the Pencil.

I like the iOS approach, two tasks max at a time, corresponding to my human limitation, and I feel more productive.

if only it had an accessible file-system :)

Microsoft’s solution to the ‘abstraction’ induced latency issue…..

1. Devote a core to the display.

2. Increase the OS interface interrupt by 100x

3. Devote a core to the touchscreen

4. Devote a core to trying to predict where the finger will be.

5. Ignore the lag it induces in other applications.

Now that Moore’s law has stopped, I really hope programmers will get forced to stop using clock cycles as a clutch.

*crutch