“Never Twice the Same Color” may be an apt pejorative, but supporting analog color TV in the 1950s without abandoning a huge installed base of black-and-white receivers was not an option, and at the end of the day the National Television System Committee did an admirable job working within the constraints they were given.

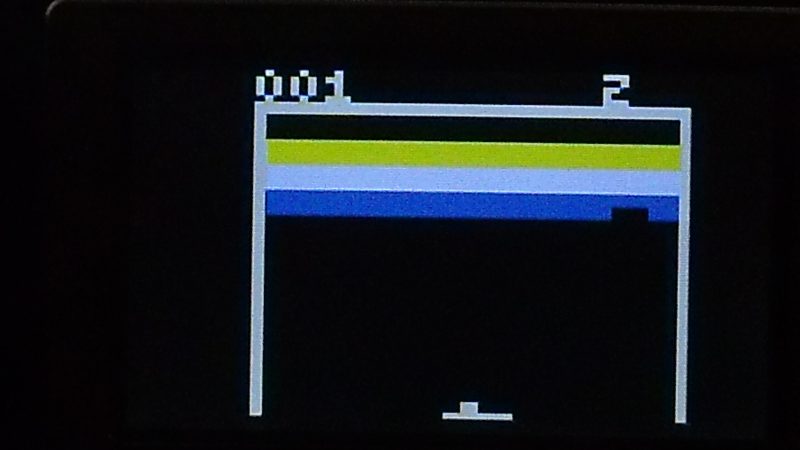

As a result of the compromises needed, NTSC analog signals are not the easiest to work with, especially when you’re trying to generate them with a microcontroller. This PIC-based Breakout-style game manages it handily, though, and with a minimal complement of external components. [Jacques] undertook this build as an homage to both the classic Breakout arcade game and the color standard that would drive the home version of the game. In addition to the PIC12F1572 and a crystal oscillator, only a few components are needed to generate the chroma and luminance signals as well as horizontal and vertical sync. The game itself is fairly true to the original, although a bit twitchy and unforgiving judging by the gameplay video below. [Jacques] has put all the code and schematics up on GitHub for those who wish to revive the analog glory days.
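
To get a feel for what the PIC has to hit on every scanline, here is a rough NTSC horizontal timing budget. It is a sketch using approximate nominal figures (1.5 µs front porch, 4.7 µs sync, 4.7 µs back porch holding a 9-cycle colorburst), not values taken from [Jacques]’s code, and `line_budget()` is a hypothetical helper.

```python
# Approximate nominal NTSC horizontal timing (assumed figures, not from the project).
F_SC = 315e6 / 88               # color subcarrier, Hz (about 3.579545 MHz)
LINE_US = 227.5 / F_SC * 1e6    # one line = 227.5 subcarrier cycles (about 63.556 us)

FRONT_PORCH_US = 1.5
HSYNC_US = 4.7
BACK_PORCH_US = 4.7             # contains the breezeway and the colorburst
BURST_CYCLES = 9


def line_budget():
    """Split one scanline into blanking, active picture, and burst duration (us)."""
    blanking = FRONT_PORCH_US + HSYNC_US + BACK_PORCH_US
    active = LINE_US - blanking
    burst_us = BURST_CYCLES / F_SC * 1e6
    return {"line": LINE_US, "blanking": blanking, "active": active, "burst": burst_us}


if __name__ == "__main__":
    for name, us in line_budget().items():
        print(f"{name:9s} {us:7.3f} us")
```

Roughly 52.7 µs of each 63.6 µs line is left for the picture; the rest is sync, porches, and burst.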

Think NTSC is weird compared to PAL? You’re right, and it’s even weirder than you might know. [Matt] at Stand Up Maths talked about it a while back, and it turns out that a framerate of 29.97 fps actually makes sense when you think it through.

You have upped your game, sir. Nice work!

Brad

“a bit twitchy and unforgiving judging by the gameplay video below”

That is because of the poor-quality trimmer potentiometer I used to control the paddle. With a good-quality potentiometer it will be more playable.

The paddle controller is a hack in itself. I used a trimmer potentiometer to which I glued a push pin (with its point removed). But it was not easy to handle because of its shape, so I topped it with an epoxy buildup.

I had the same issue on my AVR ATtiny85 Video Game Hack as well. One solution was to take 256 ADC samples while the frame was drawing, and then average them during vertical blanking. This acted as a smoothing filter on the ADC. Without that, the reading would wander and jump all over!

Brad
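
The averaging trick Brad describes can be modeled in a few lines. This is a Python sketch of the idea, not his AVR code: `PaddleFilter`, `sample()`, and `vblank()` are hypothetical names. On an 8-bit part the same thing would be an integer accumulator plus a shift by 8, since 256 is a power of two.

```python
class PaddleFilter:
    """Accumulate raw ADC readings during active video, then average them
    once per frame during vertical blanking (a simple boxcar filter)."""

    SAMPLES = 256  # sample count from the comment above

    def __init__(self):
        self.total = 0
        self.count = 0
        self.position = 0

    def sample(self, adc_value):
        # Called while the frame is being drawn; extra samples are ignored.
        if self.count < self.SAMPLES:
            self.total += adc_value
            self.count += 1

    def vblank(self):
        # Called once per frame. With exactly 256 samples this equals total >> 8.
        if self.count:
            self.position = self.total // self.count
        self.total = 0
        self.count = 0
        return self.position  # holds the last value if no samples arrived
```

A jittery input alternating between 500 and 520 settles to a steady 510 once per frame instead of jumping every sample.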

Paddle pots were a bit of an issue back in the day too… even on the spendier systems they’d get twitchy after a couple of months of use. People tried many things, like tightening the wiper springs and regularly hosing the pots out with contact cleaner.

There were design tricks for paddles.

When a worn pot’s wiper lifts, it goes high-Z, so the size of the glitch depends on the ratio between the normal impedance and that high impedance. Starting with a 500k pot lessens the effect of the fault.

And wiring the pot as a rheostat, with a separate series resistor, fixes the maximum impedance.
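
Both tricks can be checked with a small resistance model. This is a sketch under stated assumptions: the pot is wired as a rheostat with its wiper tied to one end terminal, a dirty wiper is modeled as extra contact resistance (`math.inf` for a fully lifted wiper), and the function names are hypothetical. Because the wiper only shunts the unused part of the track, a dropout can raise the resistance to the full track value but never to an open circuit, and a given contact-resistance glitch is a smaller fraction of full scale on a higher-value pot.

```python
import math


def rheostat_ohms(track_ohms, position, contact_ohms):
    """Pot wired as a rheostat (wiper tied to one end terminal).

    position: 0.0 to 1.0 along the track from the free end terminal.
    contact_ohms: wiper contact resistance; math.inf models a lifted wiper.
    """
    used = position * track_ohms
    unused = (1.0 - position) * track_ohms
    if math.isinf(contact_ohms):
        shunt = unused                    # wiper gone: full track in circuit
    elif unused + contact_ohms == 0:
        shunt = 0.0
    else:
        shunt = unused * contact_ohms / (unused + contact_ohms)  # parallel path
    return used + shunt


def paddle_ohms(series_ohms, track_ohms, position, contact_ohms=0.0):
    """Separate series resistor plus rheostat: bounded by series_ohms + track_ohms."""
    return series_ohms + rheostat_ohms(track_ohms, position, contact_ohms)


def full_scale_error(track_ohms, position, contact_ohms):
    """Reading error from a dirty wiper, as a fraction of the track value."""
    clean = rheostat_ohms(track_ohms, position, 0.0)
    dirty = rheostat_ohms(track_ohms, position, contact_ohms)
    return (dirty - clean) / track_ohms
```

With a 5k contact glitch at mid-travel, a 10k pot reads 25% of full scale off, while a 500k pot is off by under 1%.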

I recall experimenting with a resistance ladder made of scavenged resistors and a row of foam-tinfoil switches along the bottom of the screen. A far more direct interface.
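
Reading such a switch strip back is just quantizing one ADC value. The sketch below assumes a hypothetical layout: `taps` equal resistors between Vref and ground, each switch connecting one tap to the ADC pin, and a pull-down so an idle strip reads near zero. Loading of the ladder by the pull-down is ignored, and `decode_ladder()` is a made-up name.

```python
def decode_ladder(adc_counts, adc_max=1023, taps=16):
    """Map an ADC reading to a switch index 1..taps, or None if nothing is pressed.

    Assumes switch k puts roughly k/taps of full scale on the ADC pin.
    """
    step = adc_max / taps
    index = round(adc_counts / step)   # nearest tap voltage
    if index == 0:
        return None                    # pull-down only: no switch pressed
    return min(index, taps)
```

Snapping to the nearest tap gives each switch half a step of noise margin on either side.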

As for the paddle itself, I also added a couple of push switches to it, one for fully high and one for fully low, with the default being wherever the paddle happened to be. Then another two to offset slightly left or right of the paddle position, and another to force dead center.
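
The override switches boil down to a small priority function. The comment does not say which switch wins when several are held, so the order here (high, low, center, then the nudges) is an assumption, as are the button names, the 8-bit full scale, and the `nudge` size.

```python
def paddle_position(pot, buttons, full_scale=255, nudge=8):
    """Combine the pot reading with override switches.

    buttons: set drawn from {"high", "low", "center", "left", "right"}.
    The priority order is hypothetical.
    """
    if "high" in buttons:
        return full_scale
    if "low" in buttons:
        return 0
    if "center" in buttons:
        return full_scale // 2
    pos = pot                      # default: wherever the paddle happens to be
    if "left" in buttons:
        pos -= nudge
    if "right" in buttons:
        pos += nudge
    return max(0, min(full_scale, pos))
```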

A great effort with a 4 cycles/instruction chip.
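
The instruction budget is tight. This sketch assumes a crystal at four times the subcarrier (14.31818 MHz), which the article does not state for this build; with a 4-clock instruction cycle that gives exactly one instruction per subcarrier cycle. `instructions_per_line()` is a hypothetical helper.

```python
F_SC = 315e6 / 88             # NTSC color subcarrier, about 3.579545 MHz
CLOCKS_PER_INSTRUCTION = 4    # PIC: one instruction cycle = 4 oscillator clocks


def instructions_per_line(f_osc_hz):
    """Single-cycle instructions available during one NTSC scanline."""
    instruction_rate = f_osc_hz / CLOCKS_PER_INSTRUCTION
    line_seconds = 227.5 / F_SC   # an NTSC line is 227.5 subcarrier cycles
    return instruction_rate * line_seconds


# Assumed 4x-subcarrier crystal: one instruction per subcarrier cycle.
budget = instructions_per_line(4 * F_SC)
```

The half cycle is why the subcarrier phase inverts from one line to the next; a loop counting whole instructions would have to alternate 227- and 228-instruction lines or run slightly off the nominal line rate.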

I wonder if there would be a way to offload the phase selection to an external chip so a slower µC could be used or a higher resolution generated.

Two things come to mind …

The dreaded PLL chips

Or one of the many QAM (quadrature amplitude modulation) chips.
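
QAM is exactly how chroma rides on the subcarrier: two color-difference values modulate a cosine and a sine at the same frequency, which is the same as one sinusoid whose amplitude is saturation and whose phase is hue. The sketch uses generic U/V axes; NTSC proper uses I/Q axes rotated 33° from these, which is omitted here. Function names are hypothetical.

```python
import math


def qam_chroma(u, v, samples_per_cycle=4, cycles=1):
    """Sample u*cos(theta) + v*sin(theta) over whole subcarrier cycles."""
    out = []
    for n in range(samples_per_cycle * cycles):
        theta = 2 * math.pi * n / samples_per_cycle
        out.append(u * math.cos(theta) + v * math.sin(theta))
    return out


def to_amplitude_phase(u, v):
    """Same signal as A*cos(theta - phi): A is saturation, phi (degrees) is hue."""
    return math.hypot(u, v), math.degrees(math.atan2(v, u))
```

At four samples per cycle the output is just `u, v, -u, -v`, which hints at why clocking a chip at four times the subcarrier makes chroma generation so cheap.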

If you’re interested, have a look at how the composite NTSC output of the original IBM CGA card worked. They did some bit switching to alter phase, and consequently the NTSC colors were different from the CGA colors.

They were faced with the same challenge of generating multiple phases from a low clock frequency.

Their solution was quite novel.

What they can do is just generate the colorburst, then use higher-resolution pixels to produce artifact color.

(Assuming it can do higher-resolution pixels.)
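
Artifact color falls out of the pixel pattern’s component at the subcarrier frequency. This sketch assumes four 1-bit pixels per subcarrier cycle and takes one bin of a discrete Fourier transform: the amplitude is how much color the TV sees, the phase picks the hue, and the mean is the luma. The phase is relative to the first pixel, not calibrated against the colorburst, and `artifact_color()` is a hypothetical name.

```python
import cmath
import math


def artifact_color(pattern):
    """Chroma produced by one subcarrier cycle of 1-bit pixels, repeated.

    Returns (amplitude, phase_degrees, luma).
    """
    n = len(pattern)
    # DFT bin 1 = the subcarrier frequency when len(pattern) pixels span one cycle.
    fundamental = sum(p * cmath.exp(-2j * math.pi * k / n)
                      for k, p in enumerate(pattern)) * 2 / n
    luma = sum(pattern) / n
    return abs(fundamental), math.degrees(cmath.phase(fundamental)), luma
```

Shifting a single lit pixel one position rotates the hue by 90°, so four patterns give four hues; `[1, 0, 1, 0]` and `[1, 1, 1, 1]` have no subcarrier component and come out gray and white.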

On a more general note, NTSC can be abused all over the place.

Round the frame rate up to 30 Hz and omit interlace to get 240p… anything close will display on almost everything. Consistency is the big goal; everything else can be hacked.
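
The arithmetic behind 240p is simple: interlaced NTSC has 262.5 lines per field, and dropping the half line (262 lines) makes every field land on the same scanlines. The sketch below compares field rates; `field_rate()` is a hypothetical helper, and 15750 Hz is the old monochrome line rate.

```python
F_H = 4.5e6 / 286   # NTSC color line rate, about 15734.27 Hz


def field_rate(lines_per_field, line_rate=F_H):
    """Vertical (field) rate in Hz for a given number of lines per field."""
    return line_rate / lines_per_field
```

`field_rate(262.5)` is the familiar 59.94 Hz, `field_rate(262)` is about 60.05 Hz, and `field_rate(262.5, 15750)` is exactly 60 Hz; all are close enough for most sets to lock.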

That’s the wall you run into.

As you increase horizontal resolution, you reach a point where one pixel lasts less than one cycle of the color subcarrier. Altering the phase (color) then becomes quite difficult without high-precision circuitry.
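
Where that wall sits can be estimated by counting subcarrier cycles in the visible part of a line. The sketch assumes about 52.6 µs of active video; the helper names are hypothetical.

```python
F_SC = 315e6 / 88      # NTSC color subcarrier, Hz
ACTIVE_US = 52.6       # approximate active line time (assumption)


def subcarrier_cycles_per_line(active_us=ACTIVE_US):
    """Subcarrier cycles that fit in the visible part of one line."""
    return active_us * 1e-6 * F_SC


def pixels_per_cycle(h_pixels, active_us=ACTIVE_US):
    """More than 1.0 means a pixel is shorter than one subcarrier cycle."""
    return h_pixels / subcarrier_cycles_per_line(active_us)
```

That works out to roughly 188 cycles, so around 160 horizontal pixels each pixel still spans most of a cycle, while at 320 pixels each pixel is only about half a cycle long.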

That is why I mentioned the IBM CGA card with NTSC output. It uses a pulse-position hack, because controlling the phase angle directly was far more complex.

PAL and SECAM aren’t that much more complex, and they always deliver the same colour.

Conditions that would lead NTSC to have wrong color would lead PAL to have no color, not correct color. Desaturation is a good failure mode, but let’s not describe PAL’s phase reversal and vertical chroma filter as magically capable of recovering good signal from a nonlinear phase distortion.
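
The trade-off described above can be shown with complex numbers, treating chroma as `U + jV`. The sketch assumes the same phase error on two adjacent lines and an ideal delay-line decoder; function names are hypothetical. NTSC rotates the vector, shifting hue. PAL inverts V on alternate lines, re-inverts it in the receiver, and averages, so the two errors cancel in hue and the result is scaled by cos(error): desaturation, not a hue shift.

```python
import cmath
import math


def ntsc_decode(chroma, phase_error_deg):
    """NTSC: a phase error rotates the chroma vector, shifting hue."""
    return chroma * cmath.exp(1j * math.radians(phase_error_deg))


def pal_decode(chroma, phase_error_deg):
    """PAL delay-line decoding of two adjacent lines with the same phase error."""
    err = cmath.exp(1j * math.radians(phase_error_deg))
    line_a = chroma * err                              # normal line
    line_b = (chroma.conjugate() * err).conjugate()    # V-inverted line, flipped back
    return (line_a + line_b) / 2                       # = chroma * cos(error)
```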

SECAM’s FM modulation of chroma sounds like a total pain to deal with, and every computer from the era agreed: most things I’ve found that worked in France emitted some form of component video, not SECAM.

You’re thinking about signal level and linear phase distortion. That is not the problem that PAL was designed to fix.

PAL was introduced in the VHF era.

VHF terrestrial signals are often distorted by copies of the signal that bounce off flat surfaces like the ground or buildings and arrive over a slightly longer path. This introduces multipath interference and, because the path lengths differ only slightly, small phase distortions.

It was this VHF transmission path problem that PAL was designed to fix.
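
A simplified model shows why a weak echo produces a small phase error. It treats the echo as an attenuated, delayed copy of the composite signal added to the direct path, and looks only at the phase at the PAL subcarrier; real RF multipath also depends on the RF carrier phase and the receiver’s detector, which this ignores. `echo_phase_error_deg()` is a hypothetical helper.

```python
import cmath
import math

F_SC_PAL = 4.43361875e6   # PAL color subcarrier, Hz
C = 299_792_458.0         # speed of light, m/s


def echo_phase_error_deg(extra_path_m, echo_amplitude, f=F_SC_PAL):
    """Phase error at frequency f from direct signal + one delayed echo.

    echo_amplitude is relative to the direct signal (0 < a < 1).
    """
    delay = extra_path_m / C
    combined = 1 + echo_amplitude * cmath.exp(-2j * math.pi * f * delay)
    return math.degrees(cmath.phase(combined))
```

However the path difference varies, an echo of relative amplitude `a` can shift the phase by at most asin(a), about 11.5° for `a = 0.2`: small, but on NTSC that goes straight into hue.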

If you analyse the on-paper specs, PAL doesn’t seem so good.

However, the errors within PAL are less perceptible to the human eye.

Nice. The [Great Wozniak] needed 44 chips to do this in the ’70s, possibly only in monochrome. How times have changed.

I read the story of Woz and Jobs building the first Breakout machine. Or rather, Woz building it and Jobs totally ripping the dude off. Some things never change.

Woz was outputting analog RGB to drive an arcade monitor and not NTSC.

” supporting analog color TV in the 1950s without abandoning a huge installed base of black-and-white receivers was not an option”, What’chu talkin’ ’bout, Willis? The FCC adopted an INcompatible colour (yes, that’s spelled right) system in 1950, but fortunately they changed their mind.

The backward-compatible NTSC standard was adopted in 1953, after the failed non-compatible system was first tried out in 1950.

““Never Twice the Same Color” may be an apt pejorative, but supporting analog color TV in the 1950s without abandoning a huge installed base of black-and-white receivers was not an option, and at the end of the day the National Television Standards Committee did an admirable job working within the constraints they were given.”

Very admirable, considering the TV engineering book I had at the time. It covered mostly NTSC in glorious detail, plus some PAL and SECAM. HDTV had just started.

An interesting question to ask is, if tasked with designing the color TV standard, what would you have done differently keeping in mind the limitations of technology available back then? For me, the one that stands out would be to not use the oddball 59.94Hz rate and just keep it at 60Hz. Also add color calibration references in some of the VBI lines, initially to simplify manual color calibration but later for automatic color calibration.

59.94 came about because the horizontal frequency had to be detuned so that the 4.5 MHz sound intercarrier was an integer multiple of it, reducing crosstalk: 4.5 MHz ÷ 286 ≈ 15734 Hz. Perhaps it would have been better(??) to move the nominal audio center frequency to 4504.5 kHz instead, but I have to admit to being skeptical.
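
The whole chain of numbers follows from that one choice. This sketch adds one standard fact not stated in the comment: the subcarrier sits at 455/2 times the line rate (an odd multiple of half the line rate) so chroma interleaves with luma. It also checks the alternative of keeping 15750 Hz lines and moving the sound carrier.

```python
SOUND_INTERCARRIER = 4.5e6   # Hz, spacing between vision and sound carriers

f_h = SOUND_INTERCARRIER / 286   # color line rate, about 15734.27 Hz (was 15750 Hz)
f_sc = f_h * 455 / 2             # color subcarrier, about 3.579545 MHz
f_field = f_h / 262.5            # 525 lines split over 2 fields: 59.94 Hz
f_frame = f_field / 2            # 29.97 Hz

# The alternative raised above: keep 15750 Hz lines, move the sound carrier.
alt_sound = 15750 * 286          # 4,504,500 Hz = 4504.5 kHz
```

The 29.97 and 59.94 figures are exactly 30/1.001 and 60/1.001, which is where the familiar 1000/1001 factor comes from.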

Wonderful build. But one nit about the article text: NTSC stands for National Television System(s) Committee, not Standard(s) as we so often see.

Oops! Thanks for picking that up. I fixed it.

It’s easy to put down NTSC for not having the phase correction of PAL, but then you could fault PAL for not having the clarity of ATSC. When you finalize your standard many years later, you have the luxury of fixing problems that were found.

Both systems have their benefits and drawbacks. While the higher vertical resolution of most PAL systems, at 625 lines, was nice, its usual 50 Hz scan rate did give noticeable flicker at times. That was especially problematic for me when watching in low ambient light.

I was never involved in broadcasting PAL signals, but US NTSC was fairly easy to tweak and maintain. Anyone recall the old Indian Head test card for resolution?

Comparing PAL to ATSC is wrong, you need to compare ATSC to DVB instead.

PAL/SECAM vs NTSC is an academic debate. You aren’t going to find much non-NTSC equipment here in North America. Would a PAL TV set even work on 120 VAC, 60 Hz?

Hackaday isn’t just North American, so PAL is just as relevant as NTSC.