There was a time when microprocessors were slow and expensive devices that needed piles of support chips to run, so engineers came up with ingenious tricks using extra hardware preprocessing inputs to avoid having to create more code. It would be common to find a few logic gates, a comparator, or even the ubiquitous 555 timer doing a little bit of work to take some load away from the computer, and engineers learned to use these components as a matter of course.

The nice thing is that many of these great hardware hacks have been built into modern microcontrollers through the years. The problem is you have to know about them. Brett Smith’s newly published Hackaday Superconference talk, “Why Do It The Hard Way?”, aims to demystify the helpful hardware lurking in microcontrollers.

Join us below for a deeper dive and the embedded video of this talk. Supercon is the Ultimate Hardware con — don’t miss your chance to attend this year, November 15-17 in Pasadena, CA.

Coming of Age In a World of Fast Computing

Today’s hardware hackers often did not arrive in the world of microcontrollers after an apprenticeship in the bare-metal world of 8-bit microprocessors. Instead, they are more likely to be of the generation who learned to code on a PC or Mac and who came to hardware through boards such as the Arduino. They are good at coding, and for them computers have always been very fast indeed. What would have necessitated a hardware solution for those grizzled old engineers is, for today’s engineers, a problem that can be dealt with in software.

This is fine, until you find yourself using a platform with limited resources such as a low-cost microcontroller. Suddenly clock cycles matter just as they did years ago, and a few lines of code or a function call can make enough of a difference to ruin a project.

What to Look For in Microcontroller Features

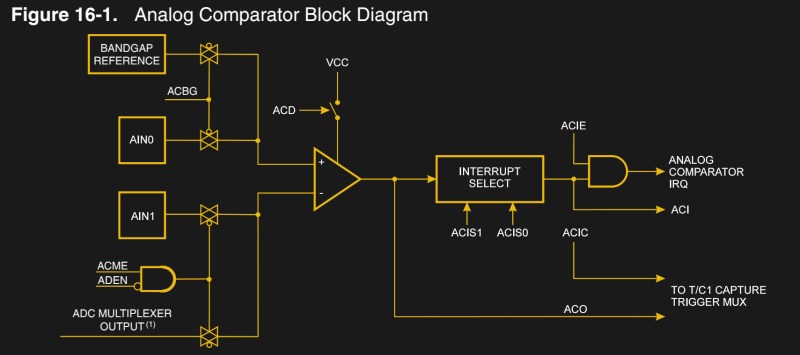

At this point those extra bits of hardware might come in extremely useful, but you don’t see much in the way of 74 logic or other chips surrounding a typical ATtiny or PIC. This is because many microcontrollers come with components such as comparators, timers, or basic combinational logic built-in, there to be enabled through software. These are not immediately apparent to someone who came to microcontrollers through software alone, and hence the point of Brett’s talk. Why do it the hard way when you can often pick a microcontroller that already contains the hardware to preprocess your inputs without extra code?

The example he starts with sets the tone: a simple analog input that should trigger once a certain level is reached. Using an analog read function followed by a compare does the job, but at the expense of those valuable clock cycles. The trick is in the discovery of the microcontroller’s built-in comparators that can do the job for free.

Brett exhorts us to study the data sheets for popular chips, and even takes us through a few examples. Features such as pin-change interrupts or inbuilt quadrature decoders are mind-blowing if you were unaware that they were on board. Along the way he touches on entertaining annoyances such as different vendors’ names for the same features, to give you a head start on those data sheets.
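To get a feel for what a hardware quadrature decoder takes off your hands, here is a minimal sketch of the same job done in software with a lookup table. This is not from the talk; the names `quad_decoder_t`, `quad_init`, and `quad_update` are hypothetical, and the sign convention (which direction counts as positive) is an assumption. Every call to `quad_update` is work the CPU would have to do on each edge, which a built-in decoder peripheral would do with no code running at all.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Transition table indexed by (previous_state << 2) | current_state,
 * where each state is the 2-bit value (A << 1) | B.
 * +1 = one step in one direction, -1 = one step in the other,
 *  0 = no change, or an invalid transition where both bits changed. */
static const int8_t QUAD_STEP[16] = {
     0, +1, -1,  0,
    -1,  0,  0, +1,
    +1,  0,  0, -1,
     0, -1, +1,  0
};

typedef struct {
    uint8_t prev;      /* last sampled 2-bit state */
    int32_t position;  /* accumulated step count */
} quad_decoder_t;

/* Record the starting state of the two encoder lines. */
void quad_init(quad_decoder_t *d, uint8_t a, uint8_t b)
{
    assert(d != NULL);
    d->prev = (uint8_t)(((a & 1u) << 1) | (b & 1u));
    d->position = 0;
}

/* Feed one new sample of the A and B lines and update the count. */
void quad_update(quad_decoder_t *d, uint8_t a, uint8_t b)
{
    assert(d != NULL);
    uint8_t cur = (uint8_t)(((a & 1u) << 1) | (b & 1u));
    d->position += QUAD_STEP[(d->prev << 2) | cur];
    d->prev = cur;
}
```

On a real part you would call something like `quad_update` from a pin-change interrupt, or skip it entirely by pointing a timer's encoder mode at the two pins, if the chip you picked has one.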

In addition to the talk below you can check out Brett’s own blog post on the topic. He’s also made his slides available as a .pptx file, if you are interested.

tl;dr: read the datasheet! :P

True, although there’s also the temptation to turn every problem into a nail, if one isn’t careful. Right tool for the job applies as much to internal hardware as to external.

It’s kind of a pain to go through the datasheet and implement support for the hardware though. It would be nice if someone could write a few chip-specific libraries, but overall a software solution can often be prototyped faster and even be more portable.

The Microchip MPLAB IDE for their PIC family has a pretty good selection of configurator tools for the built-in PIC peripherals, which will generate an appropriate code snippet that you can then plop into your program.

It’s not perfect, but it works pretty well for casual use.

You don’t even need to read the datasheet. These sorts of features and peripherals normally make it to the device summary.

This talk is really great, though. Yes, it’s “read the datasheet”, but at a more fundamental level, it’s “understand the datasheet”. And that’s something that’s hard for people who are new to microcontrollers, especially those who are approaching them from a software background. There are a lot of new concepts hidden in the silicon.

Projects like Arduino and MicroPython are a double-edged sword. On one hand, they introduce a lot of new people to the scene as gently as possible. But on the other hand, by hiding/abstracting away the microcontrollers’ peripheral hardware, they make it harder to discover the good stuff.

So in a way, this talk is a more politely phrased “here’s all the useful things that you’re missing out on when you use Arduino”. And frankly, that’s my biggest beef with the “higher level” languages: they don’t make enough use of the ICs’ hardware peripherals.

He doesn’t cover as much in this (short) talk as you might want. An expanded version of “here’s a set of common hardware peripherals and what they’re good for” would be a great service to the new blood. IMO.

Was about to say that…

…and the application notes. And the reference manual. And the available libraries’ documentation. A couple hundred pages. No biggie.

“Why do it the hard way when you can often pick a microcontroller that already contains the hardware to preprocess your inputs without extra code?”

But…but…uphill both ways, through the snow.

…in 100 degree temperatures at 100 percent humidity!

Typo

“The problem is you know to know about them.”

I’m assuming it’s “you have to know about them”

No man, its you know, to know. You know?

No

Sometimes you don’t know what you don’t know, or as Donald Rumsfeld famously stated, “there are also unknown unknowns”.

Phillip G. Armour dubbed it “2nd order ignorance” in an article anyone in software development should read: http://www.corvusintl.com/CACM002-5OI.htm

The problem is finding the micro that has the mix of hardware support you need. There is a bewildering zoo of them, each with manuals that can be hundreds of pages long, and if you find that they left out something you really need too bad so sad you are screwn, try the next one.

Which is why I fell in love with the Parallax Propeller. For all its limitations (it’s gotten a bit old), all the pins are the same, all the cores are the same, and all the built-in automation like PLLs and counter-timers is the same for each core. You can do anything that someone has written a driver for on as many as 8 cores. Need twelve serial ports? No problem. Three fast SPI channels? No problem. Simultaneous VGA and NTSC video? Easy. Two or three VGA outputs? Do-able.

And most of the chip’s limitations will be blown away by the P2 later this year. Yes it’s more expensive than a PIC or AVR but the time you save finding and sourcing the right chip with the particular hardware you need more than pays for the prop on a one-off or limited run project.

Prop is pretty cool but is held back by 32k RAM total for program and data (Von Neumann) and to create bit bang peripherals that work anywhere near as fast as hardware versions you need to write in assembly.

This is compounded by a non pipelined 4 clock per instruction limit whereas even fairly old AVRs pipeline for near MIPS parity with the frequency.

This is why P2 is really exciting, even though very late. It will have 512K, 2 clocks/instruction at 160 MHz, and fast execution from Hub RAM supported natively. I have one of the early eval boards and the respun silicon to fix the prototype bugs is nearly ready to ship. Drivers in assembly never really bothered me because you usually don’t write your own — you pluck one from the OBEX written by someone else.

True, which is why there are companies that take stuff like that and repackage it in a more user-friendly manner. Easier to do a search.

ST has the STM32Cube family, which helps select capabilities (ports, ADC/DACs, environments, etc.) of their 32 bit ARM processors.

https://www.st.com/content/st_com/en/stm32cube-ecosystem.html

I am sorry to inform you that bit banding is going the way of the dodo, the newer ARMs don’t support it. Also don’t get your hopes up, it doesn’t work with the DMA (yes, I wanted to do something crazy to avoid using the core).

Why wouldn’t new ARM processors support it? It’s not for ARM to decide, but for the implementer. The STM32 Cortex-M4 family does have bit banging, and many other vendors do (with different implementations or names). I don’t know why you would want to use DMA with it, though. Are you trying to hack some audio/video streaming with it? DMA has no precise timing, it wouldn’t work. Anyway, the intended use of bit banging is to avoid the complex locking/unlocking operations needed to modify a port bit (e.g. turn on bit 7 of the 32-bit port A) when you have multiple threads or interrupts using that port. If you don’t use a method like that, you need to: disable interrupts (but check if they were disabled already), read the 32 bit value to a register, modify the bit in the register, write the 32 bit value back, re-enable interrupts (if they were enabled). And don’t make me talk about nested interrupts!
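For reference, the feature described above (properly called bit-banding) works by giving every bit in a 1 MB region its own word-sized alias address, so a single store replaces the whole disable/read/modify/write/re-enable sequence. Below is a small sketch of the address arithmetic for the peripheral region, assuming a Cortex-M3/M4 part that actually implements the optional bit-band feature. The helper name `bitband_periph_alias` is made up for illustration, and the register address in the usage note is a hypothetical example, not taken from any particular datasheet.

```c
#include <assert.h>
#include <stdint.h>

/* Cortex-M3/M4 peripheral bit-band region and its alias region. */
#define BITBAND_PERIPH_BASE   0x40000000u
#define BITBAND_PERIPH_ALIAS  0x42000000u
#define BITBAND_REGION_SIZE   0x00100000u   /* 1 MB of bit-addressable space */

/* Return the alias address for one bit of a word-aligned 32-bit register.
 * Each bit maps to its own 32-bit word in the alias region:
 *   alias = alias_base + byte_offset * 32 + bit * 4                      */
uint32_t bitband_periph_alias(uint32_t reg_addr, unsigned bit)
{
    assert(reg_addr >= BITBAND_PERIPH_BASE);
    assert(reg_addr - BITBAND_PERIPH_BASE < BITBAND_REGION_SIZE);
    assert((reg_addr & 3u) == 0u);  /* word-aligned register */
    assert(bit < 32u);

    uint32_t byte_offset = reg_addr - BITBAND_PERIPH_BASE;
    return BITBAND_PERIPH_ALIAS + byte_offset * 32u + bit * 4u;
}
```

For a hypothetical port register at `0x40020014`, bit 7 would land at alias `0x4240029C`. On the target, turning that bit on would then be a single write along the lines of `*(volatile uint32_t *)0x4240029C = 1;`, with no locking around it.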

I don’t see bit-banding in CM7, CM23, or CM33. (Wikipedia says it’s “optional” on M7 and CM23, but I don’t see it in the arm infocenter documents at all for those), and I don’t think I’ve seen it in any actual CM0 or 0+ chips. Freescale has something better (for less memory), and nearly every chip has implemented a more powerful set/clear/etc bits within their “port” peripherals…

It’s a shame that compiler gurus didn’t jump on bit-banding to provide a true single-bit type, what with them being individually addressable. :-(

In fact, I see that bit banging (which was a third party feature) is starting to appear in the proper ARM specification, so it’s more likely to show up more frequently in the future rather than disappear. Any vendor can implement it whether it shows up in the ARM specification or not. It’s not really tied down to ARM specifically. But be aware that it can have different names, like I2C is called TWI in AVR processors.

I’m not sure what you’re talking about. Reference to this “proper ARM spec”? “bit banging” usually refers to a SW technique rather than a HW feature. The ability to do atomic operations on single pins or memory bits (which HELPS with bit-banging) was built in to many 8bit microcontrollers’ instruction sets. To help displace those chips, ARM added bit-banding to the initial cortex-M CPUs, which was very general in its functionality, but only for single bits. It apparently turned out that people preferred more powerful (multi-bit) and less general (IO pins only) capabilities that could be more easily put in the GPIO peripherals, few people used bit-banding, and now bit-banding is going away.

I meant “banding”, not “banging”. Where did you read that bit-banding is going away? As I’ve said, if ST wants to implement bit-banding in any of their processors (ARM or not), they can. There’s nothing ARM can do about it. What may be phasing out from ARM (I speculate, here) is its inclusion in the ARM specification. That would make sense, as it’s not much of a “core” feature. ARM has a history of leaving the choice of peripherals to the manufacturers, and this inclusion was not in the spirit of things.

“bit banding” is fundamentally different from having set/clear-bit registers in the peripherals, by being “standardized”, by being part of the “core”, and by the mechanism by which bits are addressed. The set/clear registers are common, useful, mostly sufficient, and frequently better than bit-banding (with the ability to set/clear multiple bits at once.) But ARM’s “bit-banding” seems to be going away (especially for RAM access.) Apparently it’s not very compatible with either cache or faster memory bus technology: https://community.arm.com/developer/ip-products/processors/f/cortex-m-forum/10266/why-cortex-m7-doesn-t-support-bit-banding
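To make the set/clear-register idea concrete, here is a host-side model of what such a register does to a 16-bit output port. It is loosely patterned on the STM32 GPIO `BSRR` layout (low half sets bits, high half clears them, set wins on a conflict); that layout is an assumption for this sketch, other vendors arrange theirs differently, and `bsrr_apply` is a made-up name, not a driver function.

```c
#include <stdint.h>

/* Model of one write to a combined set/reset register.
 *   bits 15..0  of the written value: 1 = set that output bit
 *   bits 31..16 of the written value: 1 = clear that output bit
 * Bits written as 0 leave the output untouched, so no read-modify-write
 * (and therefore no interrupt locking) is needed on the CPU side.       */
uint16_t bsrr_apply(uint16_t odr, uint32_t bsrr_write)
{
    uint16_t set_mask   = (uint16_t)(bsrr_write & 0xFFFFu);
    uint16_t reset_mask = (uint16_t)(bsrr_write >> 16);

    /* Clear first, then set, so a bit named in both masks ends up set. */
    return (uint16_t)((odr & (uint16_t)~reset_mask) | set_mask);
}
```

A single write of `(0x0001u << 16) | 0x0100u` clears bit 0 and sets bit 8 in one go, which is the multi-bit ability that bit-banding cannot offer.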

OK, I was not aware that bit-banding was also being used for SRAM. It makes sense that it will cause cache implementation difficulties. As for I/O mapping, it shouldn’t be so problematic.

Reading the datasheet in detail and doing everything with direct register access (which can still be done in MicroPython BTW) is quickly becoming a bit of a lost art, along with many techniques embedded engineers used in the past to eke out every ‘bit’ (no pun intended) of performance from their underpowered 8-bit MCUs with tiny amounts of RAM and Flash/EEPROM/EPROM etc. In many cases engineers would resort to things like bit shifts instead of multiplying/dividing by power-of-two constants, or optimized implementations of certain functions/algorithms etc.
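As a quick illustration of the shift trick mentioned above, here is a minimal sketch for unsigned values. The function names are made up for the example. Modern optimizing compilers generally make this substitution on their own, so today it is more of a readability choice than a speed-up, and it only holds as written for unsigned operands (signed division rounds toward zero, which a plain right shift of a negative number does not reproduce).

```c
#include <stdint.h>

/* Multiply by 8 (2^3) using a left shift. */
uint32_t mul_by_8(uint32_t x)
{
    return x << 3;
}

/* Divide by 4 (2^2) using a right shift; the remainder is discarded,
 * exactly as with unsigned integer division. */
uint32_t div_by_4(uint32_t x)
{
    return x >> 2;
}

/* A typical use: scale a 10-bit ADC reading (0..1023) down to 8 bits
 * (0..255) without a divide instruction. */
uint8_t adc10_to_8(uint16_t reading)
{
    return (uint8_t)((reading & 0x03FFu) >> 2);
}
```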

This has all become redundant now as microcontrollers are much faster & use of hardware vendor libraries is becoming the accepted norm to minimize development time. No one wants to read the datasheets & reference manuals. Usually reading these is a last resort.

The other thing to consider is that in the past one only needed to learn how to use one or two MCUs (with their own architectures, peripherals, instruction sets etc.) over say a decade…. Today there are so many MCUs out and more being released at a ridiculous rate. An embedded engineer ends up having to learn so many MCUs over a small period of time and then throw away the MCU-specific knowledge of older MCUs as she/he ends up having to learn newer, faster architectures etc. Remembering all of the intricacies of every microcontroller can be challenging, even if one has read the datasheets/reference manuals many times.

I used to get so excited about programming ‘old school’ Microcontrollers (8051s, AVRs, MC68HC11s, PICs) using the ‘old school’ way in both C and assembly. Those were the good days. Today while the tech has tremendously improved, the embedded development process has turned into a real shit show.

Or maybe I’m just getting old and weary. Either way I’m glad I don’t have to do embedded development anymore.

Today a single hardware project can contain two, three, or more MCUs, and SPI, UART, and I2C are key to moving data between them. Deciding exactly which MCU suits a project is a nightmare: many vendors, and thousands of datasheets in small print. Whether to use built-in hardware features or software libraries often depends on the MCU price. A couple of MCUs won’t break the bank, but 10,000 pieces could break a startup project.

You have your “abstractions” of very common functionality (like Arduino) that are “easy”, but don’t do anything with the special HW features of a particular chip. Or you have your “complete” libraries like ASF or STMCube that are supposed to do everything, but whose documentation tends to be at least as confusing as the datasheets (and less complete, and more prone to errors.) Or you can read the 1000+ pages of datasheet. (ok, it’s not that bad. Once you become familiar with the “styles” of special peripherals that are available, you can search for that sort of thing specifically. Like PWM using timers. With complementary outputs. And configurable dead time. And you don’t need to read the USB or Ethernet sections of the datasheet to see how those work…)

This is one of the things I enjoy about breakouts. If you buy the average breakout from Adafruit etc. it comes with a software library, but those libraries often don’t support all of the functions of the chip. Environment sensors often have interrupt options which you could manually do in software, or you could work your own library to get the chip to handle that for you if the interrupt pin is exposed. The HT16K33 breakouts usually only act as LED drivers, but the chip has a button matrix controller function as well. Some LED drivers have page memory too, which a lot of the breakout libraries don’t cover, and some even have audio modulation.

Turns out that in the “make it easily accessible for newbies” world a lot of deeper stuff gets glossed over in the name of simplicity, but that stuff is quite fun to dig out and get working if you’ve the time.

If you want to know more than how to use digitalWrite or download Adafruit libraries, I’d suggest a free book about PIC micros. Don’t worry, much of it is also relevant for Atmel parts. It’s like a datasheet but written in a more user-friendly way, explaining every module of the 16F887, a versatile Microchip controller. Once you know what’s under the hood of the (any) chip, you’ll easily write your own code for accessing functions other libraries don’t expose.

Link : https://www.mikroe.com/ebooks/pic-microcontrollers-programming-in-c

I would recommend learning to use something which actually makes sense to use with C. Like something that doesn’t have only one useable register (remember, it’s not 1959 anymore to use something that tries to mimic PDP1). Even the simple 8051 makes more sense.

These days, it’s probably best to learn how to use ARM processors.

You don’t need to read hundreds of datasheets: reading a couple of good ones is enough. Checking any “top of the line” controller will give you a good idea of what is available out there. Analog comparators? Check. PWM? Check. Cryptography, DMA, USB, I²C, CAN, UART? Of course. You’ll find information about most of these peripherals in any major controller’s reference manual. If you don’t want to deal with complex buses, external DDR3 RAM, or anything like it, check a full-featured lower class controller. You don’t need to learn how to use them, of course; only to grasp their purpose and principle of operation. That’s useful information. The next time you need a controller for a project, you can look for one that has analog comparator, or an internal reference, or an up/down 16-bit timer with external trigger that you know you will need. Or easily check whether the one you have at hand has anything of the like.

In what sense are these features “hidden”?

Near the end of the talk Brett implied that these features are ignored by Software Engineers. I’d argue that these peripherals separate the professionals from the enthusiasts.

HaD.io has an optical chronograph in which an IO pin is polled as fast as the 32-bit processor programmed with Python can manage. My colleague designed a similar product (a spinning tachometer version) 15 years ago with an 8-bit PIC using its built-in peripherals, which consumed 1/1000th the power, delivered 30x the performance, and cost 1/10th as much.

Peripherals are becoming the next HaD fad. After that will be analog hardware as HaD’s mostly software programmer readership progresses even further.

There was this movie I watched when I was a kid, Buck Rogers in the 25th Century (1979). In one scene the character (who traveled to the future from the 20th century) is winning lots of money in the casino. The security guards want to take him out, accusing him of cheating (“you’re wearing a computer”), but he argues: “this game is ridiculous: you just need to know how to count up to 25 to win!”. There you go, we’re in the 25th century and people don’t know how to use a hardware counter. Or count up to 25, for that matter.