Smart speakers have proliferated since their initial launch earlier this decade. The devices combine voice recognition and assistant functionality with a foreboding sense that paying corporations for the privilege of having your conversations eavesdropped upon could come back to bite you one day. For this reason, [Yihui] is attempting to build an open-source smart speaker from scratch.

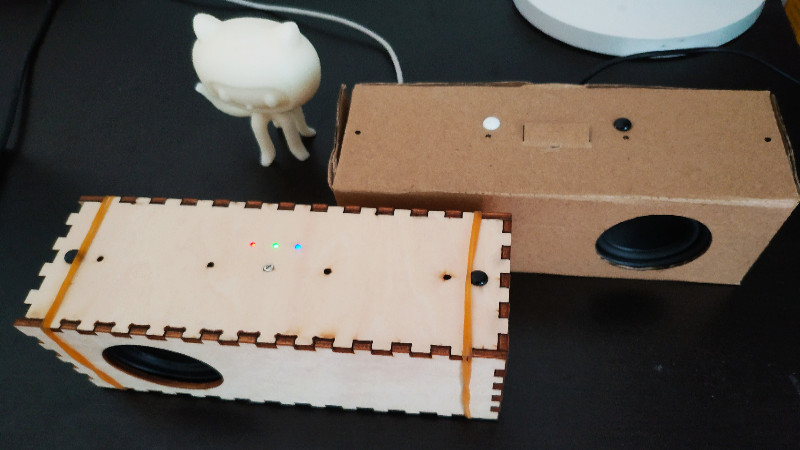

The initial prototype uses a Raspberry Pi 3B and a ReSpeaker microphone array. To bring costs down, development plans include replacing these components with a custom microphone array PCB and a NanoPi board, then implementing basic touch controls for interacting with the device.

There’s already been great progress, with the build showing off some nifty features. Particularly impressive is the ability to send WiFi settings to the device using sound, along with the implementation of both online and offline speech recognition. The offline mode is useful if your internet goes down but you still want your digital pal to turn out the lights at bedtime.

It’s not the first time we’ve seen a privacy-focused virtual assistant, and we hope it’s not the last. Video after the break.

How, pray tell, does “open source” have anything to do with what is essentially an always-on listening device? I’ve never seen so many people so eager to surrender their privacy in the name of “convenience”. That mindset, combined with legislation exploiting all avenues of fear mongering (including the embarrassing “students” at a certain Ivy League university enthusiastically signing a petition to abolish the 1st Amendment), the “Patriot Act”, efforts to demonize law-abiding gun owners, etc. – all point to a very prescient prediction of future life, courtesy of a Mr Orwell.

“Open source” means you can audit and change the source code. “Always-on listening” does not mean continuously sending audio to the cloud: the audio is processed locally until a keyword is detected. You can also make it work offline, which should raise no privacy problem.
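To make the wake-word idea concrete, here is a minimal sketch of that gating logic. It is not taken from [Yihui]'s project: the function name `frames_after_wake_word`, the `capture_frames` parameter, and the use of plain strings in place of audio frames are all assumptions made for illustration, and the detector is a hypothetical callable you would supply yourself.

```python
from typing import Callable, Iterable, Iterator


def frames_after_wake_word(
    frames: Iterable[str],
    is_wake_word: Callable[[str], bool],
    capture_frames: int = 3,
) -> Iterator[str]:
    """Yield only the frames that directly follow a wake-word detection.

    Hypothetical illustration: strings stand in for audio frames, and
    `is_wake_word` stands in for a local keyword detector.
    """
    remaining = 0  # frames still to forward for the current command
    for frame in frames:
        if remaining > 0:
            remaining -= 1
            yield frame  # forwarded, e.g. to a speech recognizer
        elif is_wake_word(frame):
            # Open the gate; the wake-word frame itself is not forwarded.
            remaining = capture_frames
        # Any other frame is discarded locally and never leaves the device.


if __name__ == "__main__":
    stream = ["chatter", "hey speaker", "turn", "off", "lights", "more chatter"]
    forwarded = list(frames_after_wake_word(stream, lambda f: f == "hey speaker"))
    print(forwarded)
```

With this toy stream, only the three frames after "hey speaker" pass through the gate; everything else is dropped before any recognizer, online or offline, sees it.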

If the device were fully local, with no remote corporate backend, and didn’t log anything recorded (except perhaps if YOU CHOOSE to turn on logging of things said just after the “wake word” and wish to store them in a file encrypted with a key only you know for your own later perusal), there’s nothing anti-freedom/anti-privacy left about it. And such a thing can definitely be open-sourced; nothing incompatible there. At that point the only objection becomes the fact that such devices are still a vastly inferior, less reliable, more buggy way to enter queries and access information than keyboard/command line/mouse and GUI/touchscreen/… methods.

You don’t need to go that far – at least for Google Assistant, you can do the voice-to-text yourself and send the text directly if you want. At that point you know everything that’s being sent, and the Google Assistant portion is essentially just a smart command line.

That being said, the biggest problem with the Google Assistant approach is that the library isn’t anywhere *near* as capable as the actual system, since it’s unable to do a ton of things because of the limits on what Google is willing to provide openly.

I’ll need to take a look at this. Between the death of the Chromecast Audio, the Muzo Cobblestone’s terrible audio output, and wonky support for my ancient Squeezebox Touch, I could use something with stereo output for whole-house audio.

Totally off-topic, but I designed that octocat model back in 2011! Here is the original OpenSCAD source if anyone wants to play with it: http://kliment.kapsi.fi/octocat/ (a server migration in 2017 messed up the timestamps, sorry about that). See also: the horrible print quality we put up with at the time.