What the Flock? It’s probably just some quirk of The Almighty Algorithm, but ever since we featured a story on Flock’s crime-fighting drones last week, we’ve been flooded with other stories about the company, some of which aren’t very flattering. The first thing that we were pushed was this handy interactive map of the company’s network of automatic license plate readers. We had no idea how extensive the network was, and while our location is relatively free from these devices, at least ones operated on behalf of state, county, or local law enforcement, we did learn to our dismay that our local Lowe’s saw fit to install three of these cameras on the entrances to their parking lot. Not wishing to have our comings and goings documented, we’ll be taking our home improvement dollars elsewhere for now.

alexa: 89 Articles

Hackaday Links: July 28, 2024

What is this dystopia coming to when one of the world’s largest tech companies can’t find a way to sufficiently monetize a nearly endless stream of personal data coming from its army of high-tech privacy-invading robots? To the surprise of almost nobody, Amazon is rolling out a paid tier to their Alexa service in an attempt to backfill the $25 billion hole the smart devices helped dig over the last few years. The business model was supposed to be simple: insinuate an always-on listening device into customers’ lives to make it as easy as possible for them to instantly gratify their need for the widgets and whatsits that Amazon is uniquely poised to deliver, collecting as much metadata along the way as possible; multiple revenue streams — what could go wrong? Apparently a lot, because the only thing people didn’t do with Alexa was order stuff. Now Amazon is reportedly seeking an additional $10 a month for the improved AI version of Alexa, which will be on top of the ever-expanding Amazon Prime membership fee, currently at an eye-watering $139 per year. Whether customers bite or not remains to be seen, but we think there might be a glut of Echo devices on the second-hand market in the near future. We hate to say we told you so, but — ah, who are we kidding? We love to say we told you so.

Oral-B Hopes You Didn’t Use Your $230 Alexa-Enabled Toothbrush

With companies desperate to keep adding more and more seemingly random features to their products, Oral-B made the logical decision to add Alexa integration to its Oral-B Guide electric toothbrush. Taking it one step beyond just Bluetooth in the toothbrush part, the Guide’s charging base also acted as an Alexa-enabled smart speaker, finally adding the bathroom to the modern, all-connected smart home. Naturally Oral-B killed off the required Oral-B Connect smartphone app earlier this year, leaving Guide owners stranded in the wilderness without any directions. Some of the basics of this shutdown are covered in a recent Ars Technica article.

Amidst the outrage, it’s perhaps good to take a bit more of a nuanced view, as despite various claims, Oral-B did not brick the toothbrush. What owners of this originally USD$230 device are losing is the ability to set up the charging base as an Alexa smart speaker, while the toothbrush is effectively just an Oral-B Genius-series toothbrush with Bluetooth and associated Oral-B app. If you still want to have a waterproof smart speaker listening in while in the bathroom, you’ll have to look elsewhere, it seems. Meanwhile, existing customers can contact Oral-B support for assistance. The lucky few who still have the Connect app installed had better hope it doesn’t disconnect, as reconnecting it to the smart speaker seems to be impossible, likely due to services shut down by Oral-B together with the old “oralbconnect.com” domain name.

We recently looked at a WiFi-enabled toothbrush as well, which just shows how far manufacturers of these devices are prepared to go, whether they intend to support it in any meaningful fashion or not.

The Future Looks Bleak For Alexa Skill Development

While the average Hackaday reader is arguably less likely than most to install a megacorp’s listening device in their home, we know there’s at least some of you out there that have an Amazon hockey puck or two sitting on a shelf. The fact is, they offer some compelling possibilities for DIY automation, even if you do have to jump through a few uncomfortable hoops to bend them to your will.

That being said, we’re willing to bet very few readers have bothered installing more than a few Alexa Skills. But that’s not a judgment based on any kind of nerd stereotype — it’s just that nobody seems to care about them. A fact that’s evidenced by the recent revelation that even Amazon looks to be losing interest in the program. In a post on LinkedIn, Skill developer [Mark Tucker] shared an email he received from the mothership explaining they were ending the AWS Promotional Credits for Alexa (APCA) program on June 30th.


Smart Assistants Need To Get Smarter

Science fiction has regularly portrayed smart computer assistants in a fanciful way. HAL from 2001: A Space Odyssey and J.A.R.V.I.S. from the contemporary Iron Man films are both great examples. They’re erudite, wise, and capable of doing just about any reasonable task that is asked of them, short of opening the pod bay doors.

Cut back to reality, and you’ll only be disappointed at how useless most voice assistants are. It’s been twelve long years since Siri burst onto the scene, with Alexa and Google Assistant following years later. Despite years on the market, their capabilities remain limited and uninspiring. It’s time for voice assistants to level up.

Why Did The Home Assistant Future Not Quite Work The Way It Was Supposed To?

The future, as seen in the popular culture of half a century or more ago, was usually depicted as quite rosy. Technology would have rendered every possible convenience at our fingertips, and we’d all live in futuristic automated homes — no doubt while wearing silver clothing and dreaming about our next vacation on Mars.

Of course, it’s not quite worked out this way. A family from 1965 whisked here in a time machine would miss a few things such as a printed newspaper, the landline telephone, or receiving a handwritten letter; they would probably marvel at the possibilities of the Internet, but they’d recognise most of the familiar things around us. We still sit on a sofa in front of a television for relaxation even if the TV is now a large LCD that plays a streaming service, we still drive cars to the supermarket, and we still cook our food much the way they did. George Jetson has not yet even entered the building.

The Future is Here, and it Responds to “Alexa”

There’s one aspect of the Jetsons future that has begun to happen though. It’s not the futuristic automation of projects such as Disneyland’s Monsanto House of the Future, but instead it’s our current stuttering home automation efforts. We’re not having domestic robots in pinnies hand us rolled-up newspapers, but we’re installing smart lightbulbs and thermostats, and we’re voice-controlling them through a variety of home hub devices. The future is here, and it responds to “Alexa”.

But for all the success that Alexa and other devices like it have had in conquering the living rooms of gadget fans, they’ve done a poor job of generating a profit. It was supposed to be a gateway into Amazon services alongside their Fire devices, a convenient household companion that would help find all those little things for sale on Amazon’s website, and of course, enable you to buy them. Then, Alexa was supposed to move beyond your Echo and into other devices, as your appliances could come pre-equipped with Alexa-on-a-chip. Your microwave oven would no longer have a dial on the front; instead you would talk to it, it would recognise the food you’d bought from Amazon, and order more for you.

Instead of all that, Alexa has become an interface for connected home hardware, a way to turn on the light, view your Ring doorbell on models with screens, catch the weather forecast, and listen to music. It’s a novelty timepiece with that pod bay doors joke built-in, and worse than that for the retailer, it remains by its very nature unseen. Amazon have got their shopping cart into your living room, but you’re not using it and it hardly reminds you that it’s part of the Amazon empire at all.

But it wasn’t supposed to be that way. The idea was that you might look up from your work and say “Alexa, order me a six-pack of beer!”, and while it might not come immediately, your six-pack would duly arrive. It was supposed to be a friendly gateway to commerce on the website that has everything, and now they can’t even persuade enough people to give it a celebrity voice for a few bucks.

The Gadget You Love to Hate

In the first few days after the Echo’s UK launch, a member of my hackerspace installed his in the space. He soon became exasperated as members learned that “Alexa, add butt plug to my wish list” would do just that. But it was in that joke we could see the problem with the whole idea of Alexa as an interface for commerce. He had locked down all purchasing options, but as it turns out, many people in San Diego hadn’t done the same thing. As the stories rolled in of kids spending hundreds of their parents’ hard-earned on toys, it would be a foolhardy owner who would leave purchasing enabled. Worse still, while the public remained largely in ignorance, the potential of the device for data gathering and unauthorized access hadn’t evaded researchers. It’s fair to say that our community has loved the idea of a device like the Echo, but many of us wouldn’t let one into our own homes under any circumstances.

So Alexa hasn’t been a success, but conversely it’s been a huge sales success in itself. The devices have sold like hot cakes, but since they’ve been sold at close to cost, they haven’t been the commercial bonanza Amazon might have hoped for. But what can be learned from this, other than that the world isn’t ready for a voice activated shopping trolley?

Sadly, it seems that for most Alexa users a device piping their actions back to a large company’s data centres is not enough of a concern. It’s an easy prediction that Alexa and other services like it will continue to evolve, with inevitable AI pixie dust sprinkled on them. A bet could be on the killer app being not a personal assistant but a virtual friend with some connections across a group of people, perhaps a family or a group of friends. In due course we’ll also see locally hosted and open source equivalents appearing on yet-to-be-released hardware that will condense what takes a data centre of today’s GPUs into a single board computer. It’s not often that our community rejoices in being late to a technological party, but I for one want an Alexa equivalent that I control rather than one that invades my privacy for a third party.

Squeezing Secrets Out Of An Amazon Echo Dot

As we have seen time and time again, not every device stores our sensitive data in a respectful manner. Some of them send our personal data out to third parties, even! Today’s case is not a mythical one, however — it’s a jellybean Amazon Echo Dot, and [Daniel B] shows how to make it spill your WiFi secrets with a bit of a hardware nudge.

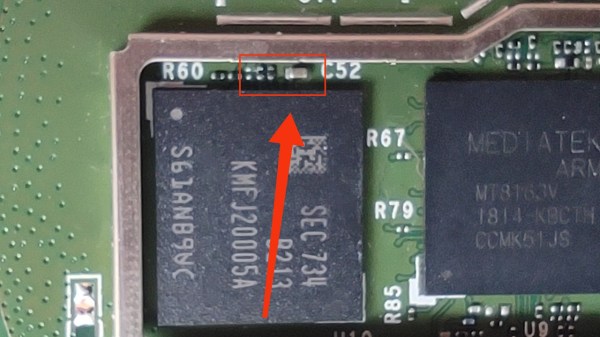

There have been exploits for Amazon devices with the same CPU, so to save time, [Daniel] started by porting an old Amazon Fire exploit to the Echo Dot. This exploit requires tactically applying a piece of tin foil to a capacitor on the flash chip power rail, and it forces the Echo to surrender the contents of its entire filesystem, ripe for analysis. Immediately, [Daniel] found out that the Echo keeps your WiFi passwords in plain text, as well as API keys to some of the Amazon-tied services.
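To get a feel for what "plain text" means once a filesystem has been dumped, here is a minimal Python sketch that walks an extracted directory tree and looks for quoted WiFi passphrases. It is not [Daniel]'s tooling: it assumes the credentials sit in wpa_supplicant-style `network={ ... }` blocks, which is common on Linux-based devices but which the write-up above does not confirm for the Echo Dot. The function names and the dump path are hypothetical.

```python
import re
from pathlib import Path

# Assumption: credentials are stored in wpa_supplicant-style blocks such as
#   network={
#       ssid="HomeNet"
#       psk="hunter2"
#   }
# The article does not say which file or format the Echo Dot actually uses.
NETWORK_BLOCK = re.compile(r"network\s*=\s*\{(.*?)\}", re.DOTALL)
# Only quoted values are matched: in this format a quoted psk is a plain-text
# passphrase, while an unquoted 64-digit hex value is a pre-hashed key.
FIELD = re.compile(r'\b(ssid|psk)\s*=\s*"([^"]*)"')


def find_plaintext_wifi(text):
    """Return (ssid, psk) pairs for every network block with a quoted psk."""
    found = []
    for block in NETWORK_BLOCK.findall(text):
        fields = dict(FIELD.findall(block))
        if "psk" in fields:
            found.append((fields.get("ssid", ""), fields["psk"]))
    return found


def scan_dump(root):
    """Walk an extracted filesystem tree and map file paths to any hits."""
    hits = {}
    for path in Path(root).rglob("*"):
        if not path.is_file():
            continue
        try:
            text = path.read_text(errors="ignore")
        except OSError:
            continue  # unreadable file, skip it
        pairs = find_plaintext_wifi(text)
        if pairs:
            hits[str(path)] = pairs
    return hits
```

Pointing `scan_dump()` at a directory holding the extracted filesystem (say, a hypothetical `./echo_dump`) would return a dictionary of file paths and the SSID/passphrase pairs found in each. If the device stores its credentials in some other format, the two regular expressions are the only part that would need to change.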

Found an old Echo Dot at a garage sale or on eBay? There might just be a WiFi password and a few API keys ripe for the taking, and who knows what other kinds of data it might hold. From Amazon service authentication keys to voice recognition models and maybe even voice recordings, it sounds like getting an Echo to spill your secrets isn’t all that hard.

We’ve seen an Echo hijacked into an always-on microphone before, also through physical access in the same vein, so perhaps we all should take care to keep our Echoes in a secure spot. Luckily, adding a hardware mute switch to Amazon’s popular surveillance device isn’t all that hard. Though that won’t keep your burned out smart bulbs from leaking your WiFi credentials.