Tesla have always aimed to position themselves as part automaker, part tech company. Their unique offering is that their vehicles feature cutting-edge technology not available from their market rivals. The company has long touted its “full self-driving” technology, and regular software updates have progressively unlocked new functionality in their cars over the years.

The latest “V10” update brought a new feature to the fore – known as Smart Summon. Allowing the driver to summon their car remotely from across a car park, this feature promises to be of great help on rainy days and when carrying heavy loads. Of course, the gulf between promises and reality can sometimes be a yawning chasm.

How Does It Work?

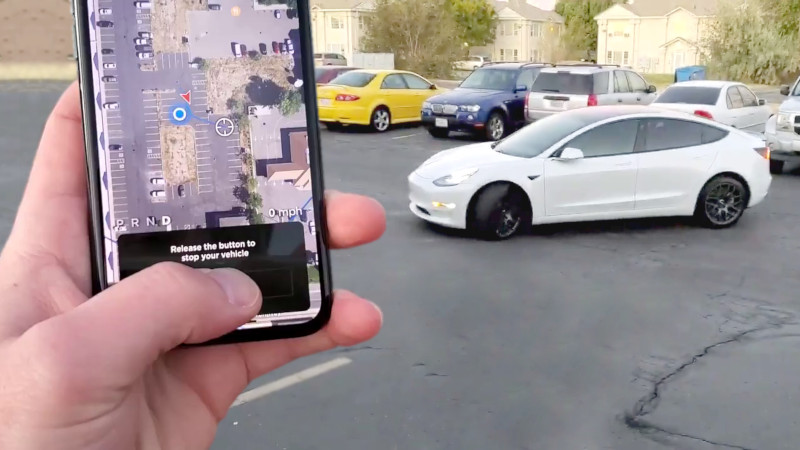

Smart Summon is activated through the Tesla smartphone app. Users are instructed to check the vehicle’s surroundings and ensure they have line of sight to the vehicle when using the feature. This is combined with a 200 foot (61 m) hard limit, meaning that Smart Summon won’t deliver your car from the back end of a crowded mall carpark. Instead, it’s more suited to smaller parking areas with clear sightlines.

Once activated, the car will back out of its parking space, and begin to crawl towards the user. As the user holds down the button, the car moves, and will stop instantly when let go. Using its suite of sensors to detect pedestrians and other obstacles, the vehicle is touted to be able to navigate the average parking environment and pick up its owners with ease.
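The hold-to-move behaviour described above is essentially a dead-man's switch combined with a range check. A minimal sketch of that gating logic follows. It is illustrative only and is not Tesla's implementation: the function name, the `obstacle_detected` flag, and treating the 200 foot limit as inclusive are all assumptions.

```python
# Hypothetical sketch of a hold-to-move ("dead-man's switch") gate.
# Nothing here reflects Tesla's actual software; names are invented.

SUMMON_RANGE_FT = 200.0  # hard limit quoted in the article (about 61 m)


def may_move(button_held: bool, distance_ft: float, obstacle_detected: bool) -> bool:
    """Return True only while every condition for moving is satisfied.

    The car is allowed to creep forward only if the user is still holding
    the button, the user is within range, and no obstacle is reported.
    """
    within_range = distance_ft <= SUMMON_RANGE_FT  # inclusive limit is an assumption
    return button_held and within_range and not obstacle_detected


if __name__ == "__main__":
    print(may_move(True, 150.0, False))   # button held, in range, clear path
    print(may_move(False, 150.0, False))  # button released: car must stop
    print(may_move(True, 250.0, False))   # beyond the range limit
```

The point of the design is that releasing the button is always sufficient to stop the car, whatever the sensors report.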

No Plan Survives First Contact With The Enemy

With updates rolled out over the air, Tesla owners jumped at the chance to try out this new functionality. Almost immediately, a cavalcade of videos of the technology began appearing online. Many of these show that things rarely work as well in the field as they do in the lab.

As any driver knows, body language and communication are key to navigating a busy parking area. Whether it’s a polite nod, an instructional wave, or simply direct eye contact, humans have become well-rehearsed at self-managing the flow of traffic in parking areas. When several cars are trying to navigate the area at once, a confused human can negotiate with others to take turns to exit the jam. Unfortunately, a driverless car lacks all of these abilities.

A great example is this drone video of a Model 3 owner attempting a Smart Summon in a small linear carpark. Conditions are close to ideal – a sunny day, with little traffic, and a handful of well-behaved pedestrians. In the first attempt, the hesitation of the vehicle is readily apparent. After backing out of the space, the car simply remains motionless, as two human drivers are also attempting to navigate the area. After backing up further, the Model 3 again begins to inch forward, with seemingly little ability to choose between driving on the left or the right. Spotting the increasing frustration of the other road users, the owner is forced to walk to the car and take over. In a second attempt, the car is again flummoxed by an approaching car, and simply grinds to a halt, unable to continue. Communication between autonomous vehicles and humans is an active topic of research, and likely one that will need to be solved sooner rather than later to truly advance this technology.

Pulling straight out of a wide garage onto an empty driveway is a corner case they haven’t quite mastered yet.

Other drivers have had worse experiences. One owner had their Tesla drive straight into the wall of their garage, an embarrassing mistake even most learner drivers wouldn’t make. Another had a scary near miss, when the Tesla seemingly failed to understand its lack of right of way. The human operator can be seen to recognise an SUV approaching at speed from the vehicle’s left, but the Tesla fails to yield, only stopping at the very last minute. It’s likely that the Smart Summon software doesn’t have the ability to understand right of way in parking environments, where signage is minimal and it’s largely left up to human intuition to figure out.

This is one reason why the line of sight requirement is key – had the user let go of the button when first noticing the approaching vehicle, the incident would have been avoided entirely. Much like other self-driving technologies, it’s not always clear how much responsibility still lies with the human in the loop, which can have dire results. And more to the point, how much responsibility should the user have, when he or she can’t know what the car is going to decide to do?

More amusingly, an Arizona man was caught chasing down a Tesla Model 3 in Phoenix, seeing the vehicle rolling through the carpark without a driver behind the wheel. While the embarrassing incident ended without injury, it goes to show that until familiarity with this technology spreads, there’s scope for misunderstandings to cause problems.

It’s Not All Bad, Though

Some users have had more luck with the feature. While it’s primarily intended to summon the car to the user’s GPS location, it can also be used to direct the car to a point within a 200 foot radius. In this video, a Tesla can be seen successfully navigating around a sparsely populated carpark, albeit with some trepidation. The vehicle appears to have difficulty initially understanding the structure of the area, first attempting a direct route before properly making its way around the curbed grass area. The progress is more akin to a basic line-following robot than an advanced robotic vehicle. However, it does successfully avoid running down its owner, who attempts walking in front of the moving vehicle to test its collision avoidance abilities. If you value your limbs, probably don’t try this at home.

Wanting to explore a variety of straightforward and oddball situations, [DirtyTesla] decided to give the tech a rundown himself. The first run in a quiet carpark is successful, albeit with the car weaving, reversing unnecessarily, and ignoring a stop sign. Later runs are more confident, with the car clearly choosing the correct lane to drive in, and stopping to check for cross traffic. Testing on a gravel driveway was also positive, with the car properly recognising the grass boundaries and driving around them. That is, until the fourth attempt, when the car gently runs off the road and comes to a stop in the weeds. Further tests show that dark conditions and heavy rain aren’t a show stopper for the system, but it’s still definitely imperfect in operation.

Reality Check

Fundamentally, there are plenty of examples out there that suggest this technology isn’t ready for prime-time. Unlike other driver-in-the-loop aids, like parallel parking assists, it appears that users put a lot more confidence in the ability of Smart Summon to detect obstacles on its own, leading to many near misses and collisions.

If all it takes is a user holding a button down to drive a 4,000-pound vehicle into a wall, perhaps this isn’t the way to go. It draws parallels to users falling asleep on the highway when using Tesla’s Autopilot – drivers are putting ultimate trust in a system that is, at best, only capable when used in combination with a human’s careful oversight. But even then, how is the user supposed to know what the car sees? Tesla’s tools seem to have a way of lulling users into a false sense of confidence, only to be betrayed almost instantly to the delight of YouTube viewers around the world.

While it’s impossible to make anything truly foolproof, it would appear that Tesla has a ways to go to get Smart Summon up to scratch. Combine this with the fact that in 90% of videos, it would have been far quicker for an able-bodied driver to simply walk to the vehicle and drive themselves, and it definitely appears to be more of a gimmick than a useful feature. If it can be improved, and limitations such as line-of-sight and distance can be negated, it will quickly become a must-have item on luxury vehicles. That may yet be some years away, however. Watch this space, as it’s unlikely other automakers will rest for long!

I bet this feature exists to collect data Tesla can use to improve object detection, path planning, and right of way heuristics from many users in real-world conditions but with lower stakes (low speed, no driver in the car).

Exactly this. If you can navigate a parking lot you can navigate anything on the road. And people are probably less likely to be falling asleep here vs on a highway. Honestly although I wouldn’t personally use it other than as a novelty I have to say it’s a good idea.

Or, they take the easy way out and write a whole bunch of special rules and exceptions to deal with a parking lot situation, which makes it “good enough” to navigate through 99.999% of the cases in this special environment – and the rest is up to the lawyers to deny any responsibility.

https://i.imgur.com/bYMDzPF.mp4

– Interesting… The first comment chain was removed, which wasn’t exactly in support of Tesla, including backing by Elliot regarding safety stats being kept secret. Censorship sponsored by Tesla?

That’s HaD for you. They go back to old articles and “sanitize” them to remove comments and discussion the editors don’t like, but what they can’t remove right away without being too obvious. This has happened maaaaany times before – the more controversial or one-sided the opinions pushed in the article, the heavier the moderation.

They leave some debate, but only the weak and indirect arguments to the contrary, so it looks like there’s some back and forth going on, but that’s just for window dressing. The idea seems to be to bend backwards for what the editors consider to be “trolls” and then simply erase them out of history after a while, and leave only the narrative that the editors agree with.

Well we were going off topic and mainly discussing Autopilot rather than Smart summon.

But it’s the same system at work.

No, it’s not. The Smart Summon and Autopilot systems are very different. The only thing they have in common is that they used the same sensors, but the way the sensor input is used by each system is like night and day.

“The only thing they have in common is that they used the same sensors”

So you’re saying that Tesla chose to use an inferior AI for the purpose of navigating around a parking lot, when they could have used the superior AI that actually drives the car on the highway?

Or, are you saying that the AI they use for navigating on the highway isn’t good enough to drive the car around a parking lot, so they had to implement a more advanced version for the purpose, and it’s still failing miserably?

Of course, Autopilot isn’t good enough to navigate in a parking lot without a driver. The problems of highway driving, parking lots, driving on city streets are all different in their own right and need a more specific AI to solve. To try and say that – if Autopilot is good enough on the highway and is so smart – it should be easily able to navigate a parking lot is naive. Trying to create a general “solve everything” AI system would be an easy way to failure because it would be way too risky and unreliable, so for now the systems remain separate.

” The problems of highway driving, parking lots, driving on city streets are all different in their own right and need a more specific AI to solve. ”

All that points to the fact that the AI is too narrow in scope to deal with any of them in a truly effective manner. It’s just playing special cases and exceptions.

A person is not a specific AI, yet they do just fine in every one of those scenarios.

Yes, a person would do fine in each of those scenarios. A person is pretty good at many tasks and can apply knowledge generally to a wide variety of tasks, while AI is VERY good at specific tasks, but it is quite hard to generalize the knowledge and do other tasks. That’s why each of the problems is being solved independently. You keep comparing AI to a person which is why you are still giving your same arguments that Autopilot/Smart Summon is unsuitable. I can agree Smart summon is not great, but don’t clump Autopilot with it since it is a different system solving a different problem.

““solve everything” AI system would be an easy way to failure”

On the contrary. What’s missing is the common factor to all the scenarios: understanding of your surroundings and circumstances, and the objects that exist regardless of circumstances.

As a real person driving a car, just because you’re driving on the highway, you can’t ignore things that wouldn’t normally be present on a highway – such as a person pushing a shopping cart. Yet this is exactly what the AI does because it can’t “solve everything”.

This is what happened with the Uber case where it ran over a person. It wasn’t expecting pedestrians outside of designated areas, so it simply ignored the person. It did recognize that there is an object in the way, but since it did not understand what it was, it simply ignored it.

> understanding of your surroundings and circumstances, and the objects that exist regardless of circumstances

Immediately going to that is way too hard. Obviously that is the end goal FSD (Full self driving) Tesla is trying to achieve. But Autopilot and Smart summon are not that.

As for the Uber case, I’m not familiar with it, but systems don’t just ignore things if the sensor registers it accurately. It just may not know how to behave or deal with it correctly. With Tesla, the system can be trained which is the whole point of the fleet of Tesla vehicles gathering data.

Lol, yeah, ‘we were off topic’ with the removed autopilot/AI/misdirection of ‘autopilot’ intent chain that was deleted, but the big “When a Tesla blocks me from going where I need to go, the owner will wish they had paid for the self-changing-4-flat-tires feature.” comment chain remains…. Noticed other selectively well-articulated comments pruned as well. Obviously not off-topic comments being removed. Nice work on this one Luke.

Had a good laugh at “If you isolate all safety records to highway use only Teslas would be far safer, still.”… Interesting, since last I knew even the NTSB can’t manage to get Tesla’s safety records, at least anything realistic.

>”Immediately going to that is way too hard. ”

Yeah? Too bad.

Autonomous driving is like pole vaulting. If you don’t pass the bar, you don’t qualify for the next round. If you don’t even have a pole, you shouldn’t even be on the field.

The things that the AI most sorely lacks are: true object recognition and object permanence. Basically, the understanding that there exists a world with objects in it, and those objects exist even when they’re not seen. The next step is then the understanding that objects are objects even if they don’t perfectly match their descriptions. That’s a big jump from any AI we have today, and it seems nobody has any idea how to make one.

The main contents, for my part at least, for the comment chain that I started was:

1) The Tesla self-driving software really is that bad. It’s just that when it’s being used on the highway, there are fewer things to consider and to go wrong and they come in infrequently, so it appears to be performing very well. In reality, it really is that clueless: the sensors have real troubles picking up anything around the car, and the AI simply does not “understand” most of the information it receives.

People simply anthropomorphize the AI and create a false belief that there’s some thinking going on in the computer, creating the illusion of ability – and the illusion of safety.

2) The AI is relying on existing commercial image recognition software based on statistical inference, because that’s the most reliable thing there is at the moment, but which has a fundamental problem: it can only “see” things that it’s told to look for. It’s doing list comparisons of known objects and simply ignores whatever is not on the list. The alternative of using ANNs has the exact same problem: they only respond to things you train them to recognize.

This means the Tesla engineers are playing whack-a-mole with reality and having to add in special cases and exceptions for every hazard they want to watch out for. This is an exercise in futility – they can never cover enough cases to be truly comparable to a real intelligence driving the car.

There’s also the issue that existing CV software is only about 90% accurate at best. If you push the sensitivity any higher, you start to get so many false positives that it becomes useless – it’s like hitting a wall because the false positives increase exponentially. It means you have about 1/10 chance the computer simply doesn’t recognize what’s right in front of it, and that’s terrible.

3) Tesla isn’t “pushing the envelope” with the technology – to the point that it would justify using their customers as beta testers. They’re mainly implementing solutions that already exist and can be bought on the market. They don’t really have any original R&D going on, and the problems in things like computer vision and AI are common to the whole field – nobody has the solution to make a car drive itself safely.

4) The safety record of Tesla cars isn’t comparable to the average rate of accidents on the road, because the Tesla cars are not average cars: they’re new and in perfect nick, and the autopilot is only officially available in situations where the least amount of accidents happen in the first place. The Tesla autopilot is playing easy mode with training wheels on – and the fact that they still manage to kill so many people should be taken as a bad sign.

I’ll try to address your points:

1) Highway driving isn’t very complicated and that’s why even a smart system like “Autopilot” akin to airline autopilot can be used to assist the driver.

2) NNs are fine for autopilot since there are a lot fewer cases of highway use (again not extremely complicated). For fully autonomous self driving on all roads (a long ways away still), I agree, there are many more factors needed to be trained and many more unexpected scenarios. We can see how much smart summon is struggling in parking lots, but again Autopilot is not the same system.

3) Yeah, maybe they aren’t pushing the envelope, so? And yeah, other companies like Google/Waymo are also working on autonomous driving in the city (most difficult). Tesla is trying to gather data from the whole fleet of vehicles – that is something original they have.

4) If you isolate all safety records to highway use only Teslas would be far safer, still.

1) “Highway driving isn’t very complicated”

That’s true for most of the time, but that’s not an excuse in the least. What you’re doing is just betting that nothing out of the ordinary happens.

2) ” there are many more factors needed to be trained and many more unexpected scenarios”

You can’t solve this by adding exceptions to exceptions. There’s an infinity of permutations and corner cases and it’s simply not possible to check them all. You have to go one step further and have a real intelligence instead of a trained checklist.

3) “Yeah, maybe they aren’t pushing the envelope, so?”

False advertising misleading consumers. No justification to using people as guinea pigs for what is a fundamentally flawed system that is known to result in death and damage. All the others are just as guilty.

>” Tesla is trying to gather data from the whole fleet of vehicles – that is something original they have.”

Yet if there’s nothing they can do with it, that’s no excuse either.

>”assist the driver.”

One of the comments censored out of the previous chain concerned exactly this:

The whole purpose of the Tesla autopilot is not to be an assist to the driver, but a replacement of the driver. If the driver can’t take his eyes off the road, then the system has no point – the effort spent monitoring the AI is just as much if not greater than just paying attention to the road in the first place.

Tesla is washing their hands of the responsibility by saying that you’re supposed to keep your hands on the wheel at all times, but really they have nothing against people understanding the autopilot to be driving for you, since that IS the selling point. That’s why they call it “autopilot” in the first place instead of a “lane assist” or something else.

Umm, no. That’s your misunderstanding and trying to villainize Tesla. Autopilot is akin to airline autopilot which does not do the flying for a pilot. It assists the pilot/driver to do the more straightforward tasks that a machine is excellent at doing (better than a human). The pilot still needs to know what he’s doing, he should be able to land in case of system failure and such. He monitors the system to ensure nothing out of the ordinary is happening. In the original comment thread you called it “autodrive” which shows you’re not exactly sure what Tesla feature you’re criticizing. The feature you are referring to is Tesla’s “Full Self Driving” feature which is not available yet. The “Autopilot” system does much more than “lane assist”, but also is nowhere near “Full Self Driving” which is still many years away.

>” Autopilot is akin to airline autopilot which does not do the flying for a pilot.”

Yes it does – under limited conditions. That’s the whole point of it. It keeps the set parameters, such as the altitude, heading, and in certain situations does other actions like landing. This is equivalent to saying to the Tesla car “follow this road until I say otherwise”.

An auto-pilot lets you take your hands off the wheel entirely, so you can avoid the burden and stress of continuous attention to the actual task of controlling the vehicle. You are no longer in the loop.

A lane assist on the other hand nudges you back onto your lane when you veer off, but you were still actively driving in the first place. The Tesla “autopilot” is a lane-assist that pretends to be an auto-pilot – a safety tool promoted to the status of a self-driving robot – although they say you should keep your hands on the wheel… But really, you’re not driving – it is driving for you.

>”straight forward tasks that a machine is excellent at doing (better than a human)”

That’s the rub.

The Tesla autopilot is NOT better at going down a highway than a human. It has a fundamental lack of awareness about its surroundings, and the lack of understanding about what it does happen to become aware of, that makes it patently UNSAFE to ride with.

The ONLY thing it is better at compared to a human is not getting bored and losing attention, but that’s a cheap consolation when your Tesla has driven you under a semi-trailer, or into a highway separator.

>”straight forward tasks that a machine is excellent at doing (better than a human)”

This doesn’t even come close to applying to driving, on a highway or otherwise. Only thing a machine may be better at is being attentive. As Luke said, there are corner cases upon corner cases, exceptions upon exceptions. Was that a sheet of 3/4″ foam, or 3/4″ plywood that flew off the truck in front of me? – Does it warrant an evasive maneuver? How risky of an evasive maneuver is warranted? Is it a reasonable choice to take the ditch to avoid this [floating plastic bag, tennis ball, basket ball, cinder block, baby, grandma, bike, etc]? I recognize a school bus object appeared in front of me – safe to swerve into an oncoming traffic lane to avoid it? – Just the tip of the iceberg… risk analysis of evasive maneuvers, let alone ethics of if you get into what equates to minimizing loss of life or injury situations are not in the realm of what they’re toying with. Personally, nor do I think they should be. I don’t think developers should be developing algorithms to ‘take the elderly person over the kid if no clear path available’ type logic – and you better hope that was a ‘kid’ and not a ‘deer’ object identified as one of the two bad choices available if you made a choice like that… Oiy.

Re: my “not in the realm of what they’re toying with” – That was presumptuous, although it seems most likely. And if they are coming up with analytics to put a varying value on human lives to evaluate a situation, that seems like a dark path… And you might want to hope you’re not the only person in your car in a bad situation (lets put you into that concrete barrier to save lives in that full minivan that just pulled out in front of us).

-Just throwing another out – that deer running across the field looking to possibly intersect our path – a human knows the rough maximum speed of a deer (or insert many other animals here), how quickly it can change its course, and if/when it should become a concern (yes, assuming the human saw the deer/animal in time, which arguably happens more often than not). Where to even begin with an AI – if you’re even monitoring peripheral concerns (unlikely as of now) – is that actually a deer, or a horse, or cow, or other? – A deer running towards the road vs a horse running in a fenced pasture towards the road are hugely different concerns… And if/when we’re deciding to brake, is that tractor/trailer rig tailgating us, or open road behind us, being factored into our maneuver?

The amount of factors we weigh in something we wouldn’t even consider a ‘situation’ is crazy – we may not make the right choice 100% of the time, but pretty dang often. And at least when we don’t, it was due to human error. We know humans make bad choices occasionally, and can identify with that, and at least have a chance of coping with the outcome. The same doesn’t go for machines. To those stating ‘it’s as good as the average person’ – even if that was true, if you think that should be the bar, I think you should replace your calculator app with an application that gives you an answer ‘as good as the average person’ to whatever equation you give it…

>” if they are coming up with analytics to put a varying value on human lives ”

That’s a very far “if”. Like in the Uber case, it was ultimately determined that the car saw the person it ran over, but it didn’t see them as a person because people don’t go on the driveway. Since they were not positively identified as anything, the car decided to do nothing about it.

They had only programmed/trained in the detection for people on the sidewalk as avoidable objects in case they might step onto the road, but not for people who are already on the road. That’s how the computer vision works. It doesn’t inherently separate objects from their background. To the CV algorithm it’s all just pixels and the statistical analysis reveals whether there’s a person, a cat, a dog, a coffee mug… etc. among those pixels. Still, the computer does not see the object – just the result of the correlation check.

You can’t just ask it “is there something in the way?” – because that would mean checking an impossibly long list of statistical correlations for anything and everything the computer is trained to recognize. It’s not a very long list in reality, because the cars aren’t hauling a supercomputer with them, and because statistically speaking, if you test for 8000 different objects you’re very likely to have a false positive for at least one, so the car would start to hallucinate cats and umbrellas and flying pencils everywhere and it just couldn’t drive.

If your object fails to register on that checklist, then the AI simply has no information to react to, so it doesn’t react.

>”It just may not know how to behave or deal with it correctly.”

Which is the same as ignoring it. If the car doesn’t register the object correctly (doesn’t understand what it is), and it can’t find a rule to deal with the object (default case is empty), then it simply does nothing. What else could it do? The alternative is to default to emergency braking, which means the car probably wouldn’t go anywhere because it doesn’t understand most objects and would constantly default to braking.

This is what makes the Autopilot so scary. It recognizes and understands so little of its surroundings that if it were to err on the side of safety, it wouldn’t drive an inch. Instead, it defaults to just driving on – and hoping for the best.

>”With Tesla, the system can be trained which is the whole point of the fleet of Tesla vehicles gathering data.”

That’s not how it works. With every new special case and exception, you get a certain probability of a false positive. Suppose you have a 0.1% chance of falsely identifying a non-object such as a shadow as an object. If you have 1000 different cases to check, and the checks are independent, the probability that you get a false positive for at least one of them is about 63%. Checking for more cases increases your chances of getting it wrong.
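The 63% figure follows from 1 - (1 - p)^n. Here is a short sketch of that arithmetic, assuming every check is independent and has the same false positive rate (a simplification, since real detectors are unlikely to be fully independent). The function name is made up for illustration.

```python
def prob_any_false_positive(per_check_rate: float, num_checks: int) -> float:
    """Probability of at least one false positive across num_checks checks.

    Assumes the checks are independent and share the same false positive
    rate, which is a simplifying assumption and not a property of any
    real vision system.
    """
    prob_all_correct = (1.0 - per_check_rate) ** num_checks
    return 1.0 - prob_all_correct


if __name__ == "__main__":
    # The numbers from the comment: 0.1% per check, 1000 checks.
    p = prob_any_false_positive(0.001, 1000)
    print(f"{p:.1%}")  # prints 63.2%
```

Under the same assumptions the probability only grows as checks are added, which is the commenter's point about longer checklists.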

The value of “gathering data” is a moot point, because you can’t integrate that data into the system without causing the AI to hallucinate and react spuriously to things that don’t exist. The more cases you add, the less sensitive you have to make the detection to avoid causing weird behavior, so the system’s detection rate becomes worse and worse. This is a fundamental problem with any AI that does not actually understand what it’s looking at, and is simply trying to match statistical correlations.

This is the exact reason why Google / Waymo uses a Lidar instead of computer vision to navigate the car.

A Lidar will report an object if it’s there. It’s much easier for the AI then to predict that the object is going to intersect the car’s path based on its movement – again without ever knowing what the object actually is. Correct identification then becomes a bonus feature, so you know not to avoid a flying plastic bag.
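To illustrate predicting an intersection from movement alone, here is a rough sketch of a closest-point-of-approach check. It assumes the sensor yields a tracked object's position and velocity relative to the car, and that both keep moving in a straight line. The function names, the 2 m safety radius, and the 5 s horizon are invented for the example and do not describe Waymo's or anyone else's actual software.

```python
import math


def closest_approach(rel_pos, rel_vel):
    """Return (time_s, distance_m) of closest approach.

    rel_pos and rel_vel are (x, y) tuples giving the object's position (m)
    and velocity (m/s) relative to the car. Constant velocity is assumed.
    """
    px, py = rel_pos
    vx, vy = rel_vel
    speed_sq = vx * vx + vy * vy
    if speed_sq == 0.0:
        # No relative motion: the gap never changes.
        return 0.0, math.hypot(px, py)
    # Time minimising |rel_pos + rel_vel * t|, clamped so we never look into the past.
    t = max(0.0, -(px * vx + py * vy) / speed_sq)
    return t, math.hypot(px + vx * t, py + vy * t)


def on_collision_course(rel_pos, rel_vel, safety_radius_m=2.0, horizon_s=5.0):
    """Flag an object that will pass within safety_radius_m inside horizon_s."""
    t, d = closest_approach(rel_pos, rel_vel)
    return t <= horizon_s and d < safety_radius_m


if __name__ == "__main__":
    print(on_collision_course((20.0, 0.0), (-10.0, 0.0)))   # closing head-on
    print(on_collision_course((20.0, 10.0), (-10.0, 0.0)))  # passes 10 m to the side
    print(on_collision_course((20.0, 0.0), (10.0, 0.0)))    # moving away
```

Nothing in the check needs to know whether the object is a person, a cart, or a plastic bag, which is the property the comment is pointing at.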

Though of course, the lidar has its own issues, such as seeing puddles as holes in reality, or getting completely confused by rain and snow, or a wet leaf stuck on the scanner.

> “if it were to err on the side of safety, it wouldn’t drive an inch”. Exactly. Even with as little as it understands now, I seem to remember an article where emergency braking was disabled through an update while in autopilot because they were having too many false positives. That seems like a great/safe idea… And that was with as little as it already understands now… The more situations/objects you look for and can understand, the more false positives. Really the only adjustment from there is to downplay the sensitivity to allow you to move, and end up ignoring some (more and more as you add more things to look for) situations that are NOT false positives along the way. Pretty much what they had to do already, at least at one point.

– That is my rub, as at least from my view, this isn’t a technology we can enhance to make it viable, it’s just plain out not a solution. You can’t enhance a steam train into a 300MPH maglev train; at some point you just need a different technology to accomplish something. Steam power doesn’t make good spacecrafts either. This is just a game of ‘how much money can we make before we kill the wrong people with this, that PR can’t smooth over’. Unfortunately non-consenting public is part of this study, and will quite likely be the ‘wrong people’, rather than the driver.

– And for what it’s worth, I have nothing against Tesla in particular. Comments apply to really any public-road autonomous vehicle projects. Some of the things SpaceX is doing is amazing – nothing against Elon either. I also wouldn’t consider myself a Luddite by any means – I’ve trained and implemented production-use ML models – and while I wouldn’t consider myself an expert by any means, I do know enough to be familiar with workings. Even at my lowly level, I run into plenty of misconceptions of what these systems are capable of. Combine a general public misconception of what Machine Learning/AI really is, add in a PR/marketing department, and you’ve got a dangerous combination.

I bet this feature exists so that Musk can keep Tesla in the news and deflect customers unsatisfied that not only is Tesla not making good on its promise for LV but they don’t seem to be even close to it (being fair, no one else is all that close either).

When a Tesla blocks me from going where I need to go, the owner will wish they had paid for the self-changing-4-flat-tires feature.

Yes… violence is always the answer to every problem… how dare anything else be in your way… the road belongs to YOU.

In the famous words of A Pimp Named Slick Back “I mean scientifically speaking has not hitting a bitch achieved the desired results?”

And if that happens, you will wish you paid for better health insurance.

I hope your car has an “avoiding an arrest warrant for vandalism/destruction of property” feature. Let’s face it, it’s only because of the security of “anonymity” of the internet that your fingers are hypothetically writing fat checks that I’m sure you couldn’t/wouldn’t cash in real life (and if you did there’d be plenty of consequences you’d regret after the fact). So let’s drop the tough guy act, it’s not fooling anyone.

What if an F-150 blocks you from where you need to go? Do you slash that up, or is it part of your approved caveman tribe? What about a grandma pushing a shopping cart slowly?

The moment you enter a public area, you can have no expectation of not being impeded. The sooner you get over yourself, the happier you’ll be. Take a deep breath, enjoy the scenery, laugh at your misfortune, and carry on.

Exactly. If you get angry over almost nothing, it will first damage your health, then your community.

This comment thread is a Trainwreck

Heh. This was always going to be the case.

How are autonomous cars going to be safer? By driving like your Uncle Leonard on a Sunday. No speeding, proper distance between the vehicles, slow lane-changes, not running yellows… human drivers are gonna hate’em.

“Tesla have always aimed to position themselves as part automaker, part tech company.”

What is a tech company? Is it just a company that makes a phone app? That seems to be the cutoff. I’d think that a company that pioneers electric car manufacturing would be considered technology, but the bay area drains meaning out of everything and now tech just means phones and stuff.

Anyway. This stuff should be regulated. It’s insane that they’re allowed to do this and release it on the world. Extremely trivial and petty amounts of convenience is apparently all it takes for us to make extremely bad decisions.

I wish they’d concentrate on realizing their original goal of affordable and widespread electric cars instead of getting lost in this bougie hype meme crap

In modern experience, a ‘tech company’ means they ship unfinished products and promise to field-upgrade them later. Tesla is obviously following that model to a T.

No thanks on this and anything else like it. I’ve seen enough computer crashes in my day to leave me with a significant sense of distrust. As much as I like computers, I will always avoid putting my life and the life of other people directly into the hands of one. No Tesla toy car for me, thank you very much.

I wonder how long until Tesla’s lawyers demand this article be pulled. They don’t take kindly to negative press.

bet you’ve never flown on an airliner eh?

There’s a world of difference between a human piloted aircraft with electronics and software designed to help the aircraft fly and a shoddily made car and software that purports to automate driving.

Yes, the problems the cars are solving are much more difficult than the ones the aircraft encounter.

Shannon. Absolutely wrong.

Where you point a car the car goes. But with driving a ship or plane, where you point the vehicle is not always where it goes.

For a simple example, 20kts of wind hitting a plane from the right means the plane has to compensate by steering into the wind, to the right, simply to go straight.

It is called “crab angle”
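For anyone curious how big that angle is, a minimal sketch, assuming the 20 kt wind is a pure crosswind and picking a true airspeed of 120 kt (my number, not the commenter's). The function name is hypothetical.

```python
import math


def crab_angle_deg(crosswind_kt: float, true_airspeed_kt: float) -> float:
    """Heading offset into the wind needed to hold a straight ground track.

    Assumes a steady, pure crosswind; the sideways component of the
    aircraft's airspeed must cancel it, so sin(angle) = crosswind / airspeed.
    """
    if abs(crosswind_kt) >= true_airspeed_kt:
        raise ValueError("crosswind too strong to hold the track")
    return math.degrees(math.asin(crosswind_kt / true_airspeed_kt))


if __name__ == "__main__":
    # 20 kt crosswind from the thread, 120 kt airspeed assumed for illustration.
    print(round(crab_angle_deg(20.0, 120.0), 1))
```

With those numbers the nose points roughly ten degrees into the wind while the plane tracks straight over the ground.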

Ships are even worse because you have both current and wind that affect the Course Made Good (or the true direction the vessel is headed).

To further reinforce, that is also why there are far harder licensing requirements for both ships and planes.

Sword: and that’s why there’s kilometers wide safety corridor around, behind, and forward of an airplane or a ship on autopilot.

Cars on roads travel at speeds similar to oceangoing ships, but sometimes there’s less than three feet between the wing mirrors.

Not sure if you mean that in a sarcastic or factual manner. The problems the cars are solving are far harder.

Having spent thousands of hours on real autopilots and maybe 50 on Tesla’s ‘autopilot’, I will tell you they are two different animals in two very different environments.

An aircraft autopilot does a few things very well. It does some things better than a human (landing in zero visibility) and some things worse (autoland crosswind limits are lower than manual landing crosswind limits.) It’s great at maintaining altitude and following a programmed course.

But the aircraft autopilot lives in a completely different world. It follows a course via GPS, not sight. Off by 100 feet laterally? No worries, no one at your altitude is within 3 miles of you. Autopilot disconnects in cruise? No worries – take a moment to figure out why and reconnect it. The airplane should do fine on its own while you figure it out. You have space and you have time.

For landing, it will use precise radio beams that will put it within a couple feet of centerline on a runway that’s 150 or 200 feet wide – with two pilots monitoring it intently, ready to go around should the autopilot not perform adequately. Close to the ground, you don’t have much space and you don’t have much time, so you pay complete attention and are prepared to go around.

Through all of this the aircraft is told exactly what path to follow. It does not have to think. There’s no traffic three feet off our wingtip, there are no pedestrians and no ambiguous signs to read visually.

The Tesla ‘autopilot’ lives in a far more chaotic world. GPS is not accurate enough to keep you in your lane, sight must be used. But road markings get obscured, other cars drift out of their lanes, traffic cones block lanes, and deer run out in front of you. And it’s all close to you. An aircraft autopilot deals with none of these.

The Tesla autopilot is a good lane follower and the adaptive cruise control works (though it occasionally brakes for a crossing car that I can tell will be clear of our lane.) It’s a nice workload easer, and it might see a car stopping two cars in front of me that I can’t, but it’s nowhere close to replacing a human driver. The environment is just too chaotic.

Not to mention double and triple redundant systems as well as TWO qualified pilots in the control position.

But you know that in an Airbus whatever the pilot does is actually executed by a computer (and sometimes it isn’t). So back to the original point – you have to trust the computer with your life.

Aren’t the software and sensors the current issue with the Boeing 737 MAX? As things are right now, I would be very cautious about trusting a car’s autopilot software with my life.

Every car now is computer controlled. They have had fly by wire throttle for a long time, and many modern cars even have steering and brakes fly by wire without even a backup mechanical linkage. I can think offhand of more than one car company that has had throttle or brakes go to 100% with no user input due to software failure. One of them wouldn’t even let you turn it off during “unintended acceleration” because it had no mechanical shutoff key/switch and the push button decided not to work.

I hate it too, but you can’t pretend it’s only a Tesla thing. It’s almost every single car on the road for the last ten years. So stay completely off the road, because even if you don’t have one the majority of other vehicles do.

” They have had fly by wire throttle for a long time, and many modern cars even have steering and brakes fly by wire without even a backup mechanical linkage”

Source? I’ve never seen one, as AFAIK a mechanical linkage is still mandatory (even if braking without servo assistance is almost useless, and the same goes for non-assisted steering for 99.9% of drivers).

In general terms, the “greatness” is in the accessibility applications. Being able to un/park your vehicle from tight spots is great.

One early video showed a wheelchair-bound driver remote-unparking his Tesla (pre-Smart Summons, IIRC) out of his handicap spot where another driver had illegally-parked beside him, blocking his access.

The current map-based “valet parking fetch” trick most folks try feels like a gimmicky implementation. I wonder if they could pull off a “security detail/dog walk” mode, where the remote-driver has to have one hand on the car body at all times for anything more complex than un/parking?

I especially like the throwback to 1865 Locomotive Act law from the British Parliament here.

Select “steam locomotive” drive-tone and ‘punk to taste. ;-)

There is a huge difference between backing out of a space whilst you are stood next to it and navigating a car park with other people and cars from 200ft away.

When this feature first came out, it was the former, and that was OK. Tesla are not the only company doing this.

But driving the car à la James Bond in Tomorrow Never Dies? That’s a movie, not real life.

This company should be regulated out of existence, it is a threat to anybody who is around these stupid cars and stupid users. Even in the “successful” cases the car is either blocking other people’s use of the right of way by taking too long to do anything, or not respecting traffic signs (for example, not COMING TO A COMPLETE STOP at a STOP SIGN, or failing to yield to cars in a right-of-way road, etc.). This is GARBAGE. This company should stop fooling around. I hope they get an owner into a serious accident because of these stupid features and get sued out of existence.

You couldn’t regulate the company out of existence, all you’d do is regulate the emerging tech out of existence.

Ok zoomer

Kids, play nice!

Ya because screw progress! /S

Let me get this straight, one of the most successful companies in a long time is providing innovation on an unprecedented scale, and somehow needs to be stopped because you’re inconvenienced for an extra 2.5 seconds? Never mind that said company has, in a short amount of time, single-handedly changed the course of the entire auto and energy industries simultaneously. Something that hasn’t been done in so long that others are still unable to field anything like it after 16 years? Every argument you made

1) Not coming to a stop – there is a reason it’s called a “California stop” on the West Coast and it’s not because of Tesla

2) Not respecting rights of way – again not unique to the Tesla or its user group

3) Liability – it’s clear on every part of the app, car, manual etc that the USER/OWNER is fully responsible for its use and operation. The car could RUN YOU OVER and still the owner would be responsible, not Tesla. You could try to go after Tesla but the operator was the one with the finger on the button, pedal, etc. Sure, there is room to litigate, but you’re fighting a battle long lost.

Is there room for improvement? Sure, but you can’t do that in a bubble and Tesla is quick to innovate and update. Find another company doing the same thing. I would imagine you would have to look at SpaceX to see a similar innovation path that is shaking industry to its core. So get over your hope that someone gets hurt and stop deluding yourself that in some way Tesla is doing something bad. Look at any legacy energy company, they are doing far more harm than you realize. Flyash, Ash ponds, sulfur pyramids, Taylor Energy spill (2004- ongoing) etc. How about Firestone tires/tyres?

In the end your myopic view is so narrow and so close-minded that it’s hard to fathom how you’re able to function in life. Not trying to be mean but I just can’t understand the mindset that you would wish thousands of people out of a job and others getting hurt to validate your point, rather than making more useful suggestions, like maybe they need a playground to test their innovation out on first.

The problem is the arrogance of people using it and those like you trying to justify it.

Why should anyone have to wait an extra 2.5 seconds because you want to play with your new toys and inconvenience the people around you?

That’s called being rude, on your part. You are breaking a social convention so you should expect that others are going to get upset, but like too many people today you haven’t a thought for anyone else around you.

It’s no different to when the Top Gear blokes would drive a combine harvester through a small village and block the roads. Done for TV to show them being utterly obnoxious. But you didn’t get the joke, so you scream “hey progress”

Next time you find yourself getting into a lift with a bunch of other people, press all the buttons and see what they say. In reply tell them “hey, it’s only waiting a bit for a few extra stops, what’s the problem?”

That said the OP brings up an issue very poorly, which is not just limited to Tesla but all manufacturers of a product that has the ability to do something undesirable.

The Tesla argument is the same as that used by gun manufacturers – we are just making a tool, it’s not our responsibility how it’s used but we state you must use it in a law abiding manner.

I call bullshit. Tesla can issue all the warnings they want but they know how it’s going to be used – driving round car parks (or down the freeway whilst the driver sleeps) without a person behind the wheel – so they are directly responsible for that. They could quite easily stop the car from working in a car park, but they are taking the direct opposite approach and making it work, then claiming they are not responsible.

In a gun analogy, that would be like fitting the gun with a target scanner which only allowed it to fire when it detected a human in front. But requiring you to manually pull the trigger still. Then claiming it’s not their fault you are killing people with it.

You can sell whatever you want to anyone, provided you are honest about what you are supplying, they can use it as they please and the consequences are on them. It’s not complicated. And as for being obnoxious, as long as you are within the terms of use for the service you’re using (Road, car park, elevator), your ‘social conventions’ just show you to be a pest.

The issue here is being honest about what you’re supplying. Tesla’s marketing, nomenclature, and UX clearly oversells their tech.

I can respect your opinion. Even trying to lump me into the arrogance group. However, as the end user of a product it’s your responsibility to use it sensibly. If you want to get upset at anyone it’s the person pressing the button in the lift/elevator. Not the company making the buttons. It’s not Tesla’s fault that some of their customers are idiots. Idiots exist and in my opinion we should not try to save them from themselves. It used to be called personal responsibility. You don’t get rid of an entire manufacturer because a few users don’t know how to find a better time or place. Unless you’re saying that it’s totally OK to get rid of your employer for the same reason. But I figure you’re European, so over-regulation is your thing and so is the inability to properly differentiate between personal accountability (Tesla autonomous options) and manufacturer liability (Volkswagen emissions equipment). Might be why your unemployment rate is 2x the US. As is your tax rate. How do you like having nearly 40% of your effort going to someone else? I guess being educated can come across as arrogant, wasn’t intended. My entire point was the difference between someone using something wrong vs shuttering an entire business.

As for your gun analogy, that actually kinda exists in the form of Tracking Point rifles. And I assure you that the end user is held responsible, not the manufacturer.

>”It’s not Tesla’s fault that some of their customers are idiots. ”

When you sell something for what it’s not, the customers are not responsible for mistaking it for something it isn’t.

The Tesla autopilot is a lane-assist. It attempts to detect where the lane is, and then steer towards the middle of it. It’s basically like throwing a pinball down between two slats – the reason it goes down the middle is because it bounces off the walls.

This is commonly done as a safety device where the car attempts to detect when you’re in danger of going -outside- of the lane, but otherwise it lets you drive the car. The assumption is that you are responsible for keeping the car going the right way, and if you should fail, then there’s a fair chance that the computer catches your error – even if the computer is not nearly perfect. The computer can fail half the time, but it still has a 50/50 chance of catching you when you fail, and that’s much better than nothing.
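A back-of-envelope version of the "even a 50/50 backup helps" point. This is only a sketch: it assumes the driver's mistakes and the computer's misses are independent, and the 1% driver error rate is a number I made up.

```python
def uncaught_error_rate(driver_error_rate: float, assist_miss_rate: float) -> float:
    """Share of situations where the driver errs AND the backup misses it.

    Assumption (not from the thread): the two failures are independent.
    """
    return driver_error_rate * assist_miss_rate


if __name__ == "__main__":
    # Hypothetical driver who drifts out of lane in 1% of situations,
    # backed up by a lane-keeping aid that misses half of those drifts.
    without_assist = 0.01
    with_assist = uncaught_error_rate(without_assist, 0.5)
    print(without_assist, with_assist)
```

As a backup, a mediocre computer still halves the uncaught errors in this toy model; the argument changes completely once the computer is the primary driver rather than the safety net.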

Tesla is doing it so you can actually take your hands off the wheel and let the car roll down the lane, trusting that it never loses track of the road. This is a fool’s gamble, because the computer is far more likely to lose the lane than you are, because it is less aware of things, and it has far far less understanding of things than you have. The Tesla autopilot is simply awful at it, but it is not so awful that it would fail immediately because cars don’t go off the road that fast. Even if you’re only right 90% of the time, it doesn’t mean you’ll crash 10% of the time because the consequence of a false judgement (or lack of judgement as it were) is almost always not going to be catastrophic. You can be a pretty terrible driver and still drive on successfully for quite a while before the inevitable.

Tesla’s gamble is simply that the computer should get lucky enough and have fewer catastrophic errors than the person, given that the average person gets distracted and bored sometimes – and that the average person who makes the catastrophic error is often drunk, reckless, sleepy, old… which is a pretty low standard to have. Arguably, they aren’t even reaching that standard.

Or to put it in other words:

You have a person who is almost perfectly 100% correct in their understanding, except for the 10% of time when they’re not paying attention. Against that, you have a computer which is just 90% correct in its understanding, but it pays attention 100% of the time to compensate.

On the face of it, you’d expect both of them to make errors the same 10% of the time – so they’d be equal. But, being 90% correct is not the same thing as being 90% alert.

The lack of judgement gets you into all sorts of trouble, because the missing 10% includes all the tricky edge cases that WILL get you into trouble, whereas the missing 10% in attention is evenly spread among all the cases, so 90% of the time it’s not going to be a catastrophic omission.

Even if the computer was alert 1000% of the time – meaning that it had a shorter reaction time and made more measurements – it’s still going to fail that 10% of the time with the edge cases that it just doesn’t understand. It cannot compensate for its lack of comprehension with sheer speed and vigilance. It’s just too dumb to drive safely.
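The "90% correct is not 90% alert" argument can be written out as a toy calculation. Everything here is an illustrative assumption rather than measured data: that only tricky edge cases can end in catastrophe, that they make up 10% of situations, that human lapses are spread evenly, and that the computer's gaps sit entirely in the edge cases.

```python
def catastrophic_rate_uniform_lapses(lapse_rate: float, edge_case_share: float) -> float:
    """Attention lapses spread evenly: a lapse only matters if it lands on an edge case."""
    return lapse_rate * edge_case_share


def catastrophic_rate_concentrated_gaps(gap_rate: float, edge_case_share: float) -> float:
    """Comprehension gaps concentrated in edge cases: every gap is a potential catastrophe.

    The gap cannot cover more edge cases than exist, hence the min().
    """
    return min(gap_rate, edge_case_share)


if __name__ == "__main__":
    edge_cases = 0.10  # assumed share of situations that are tricky
    human = catastrophic_rate_uniform_lapses(0.10, edge_cases)
    computer = catastrophic_rate_concentrated_gaps(0.10, edge_cases)
    print(f"human: {human:.3f}, computer: {computer:.3f}")
```

Under these assumptions both drivers "miss" 10% of the time, yet the computer ends up in serious trouble about ten times as often, because its misses are exactly the cases that matter.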

Perhaps the current design of roads is not very conducive to automated vehicles. Unfortunately, roads are unlikely to change much in the foreseeable future as for the most part they are government-run, with no incentive for improvement, as election cycles are much shorter than infrastructure cycles.

Why should we shift the goalposts?

It’s good enough for people to drive on, and if the computer isn’t good enough to do the same then why should we spend extra resources to accommodate an inferior system?

…or maybe the idea that automated vehicles alone will solve all traffic issues is past due to be retired.

City design should be around people and mass transit, with personal vehicle traffic, automated or otherwise, kept to a minimum.

That’s the gospel, but why?

Personal vehicle traffic is what makes the economy work by allowing people to actually move around when they need to, where they need to. Owning your means of transportation means you have the power of choice over it, and so if you need to then you can take that job on the other side of the city, or find that cheaper product from a shop in the next town.

Public transportation serves political ends – it serves those people who want to play Sim City with other peoples’ lives. If public transportation is built according to the actual needs and desires of the people, it quickly becomes too costly and too ineffective to operate. At worst, these systems meet 80-90% of the actual need, and then most people are forced to own a vehicle anyways, which is worse than having either-or.

So you should be really careful when you say “should” about a matter concerning how other people do something.

Of course, that isn’t to say public transportation is useless. It’s just that it’s more or less a fixed infrastructure – a system – and the rest of the society has to adapt to it, rather than the infrastructure adapting to the society.

Any time you put a line down on the map, you expect the people to follow it, like the old colonial masters who put a straight line across the desert and said “You’re Syria, You’re Iraq”. Well, it was awfully convenient for the mapmakers, and really, who’s the more important people here?

Well, first of all personal vehicles make up too much of our economy; look at the hit whenever carmakers run into trouble (and need to be bailed out). This dependence is pretty unhealthy. As well as the attendant use of fossil fuels.

Second…it’s not sustainable. It’s not going to be possible for every world citizen to own and operate their own vehicle.

Third – cities designed around personal vehicles aren’t as ‘livable’ as those designed to accommodate other modes – walking, bikes/scooters/etc, taxis/Ubers, public transit. We are seeing a new generation of city dwellers who don’t own cars, and many who don’t even licence up. Zipcars and other rentals are available for when a vehicle is required.

Your view of public transportation seems very US-centric. It’s much more advanced and efficient in other parts of the world. And could NYC even function without the subway?

I’m not saying that there’s no longer a place for the personal vehicle, especially in rural or less-populated areas. Just that it should not be the centrepiece.

Nice to see the anti-Tesla trolls have found this article via their favorite search engines.

All of these comments are right, though. This shouldn’t be let out on the road when it clearly isn’t working.

Great idea, but HORRIBLE execution.

Nice to see the Tesla owners have found the thread to defend them, as well, by the way ;)

lol, no I don’t own a Tesla. Just don’t like to see all of the undeserved hatred toward them.

Basement dwelling trolls who belittle that which they can’t afford, and in many cases, can’t understand.

Unwarranted hype damages all by mis-directing funds and effort from those who truly deserve it to those who would simply sell you bunk and magic tricks.

Consider: an aspirin pill gets rid of the pain but an antibiotic gets rid of the disease. At the first glance, the aspirin seems more effective because it works faster, and the disease eventually clears by itself. Yet, if you were to compare actual effectiveness, you wouldn’t spend a dime on the aspirin pill because it’s not really bringing you any closer to the cure. Tesla’s self-driving cars are just like this: they’re not really solving the problem of autonomous driving, but because they seem to be doing something, people assume they are the cure instead of just a palliative.

Nice to see the Tesla fanbois defending their leader against any criticism at all, even if it’s warranted.

I still hold to the idea that “Tesla,” the company, is merely an Epic Troll by Edison’s descendants to discredit, further, his Nemesis.

To all the tesla haters:

You could address every other car company then.

Literally the previous blog post was about the claymore mines which were installed in hundreds of millions of cars. And I see 0 posts like “burn them to hell” there. Because it’s not Tesla, right?

To all the posts saying it’s “not ready for the roads!”: nothing is. Never. And that “This company should be regulated out of existence” comment is nice too.. Then every other company should be regulated out of existence too.

My father’s 2 year old VW diesel transporter randomly caught fire on the highway, and he almost died right there. Door locks failed, locking him inside. All VW vehicles should be recalled and VW put out of business.

I had the brakes fail in my Citroen BX which was 3 or 4 years old at that point. Fun times when you are going 100kph. All Citroens should be crushed and Citroen put out of business.

I had the electric door software glitch in my other Citroen. If you locked/unlocked it, one side locked and the other unlocked. A battery reset helped. Same.

I had the front suspension corrode and collapse at 30kph in a Mazda after 3 years. All Mazda cars should be recalled and Mazda put out of business.

Oh and what about the stuck accelerator pedals in the Toyotas? Which were stuck in software, not literally stuck, and multiple people died due to the unintended acceleration.

What about the Ford EcoBoost engines? Which literally kill themselves after 50k km.

BTW I used to work for a company which manufactured brake systems for heavy vehicles. I found a SW bug about which I said recall all of them ASAP. The bug was known to have occurred in more than 100 vehicles already, disabling the brake controller ECU completely and needing a replacement (long story why a SW bug needs a HW replacement…). Upper management just said fuck it and be quiet. Currently this is on the roads in almost 200k vehicles.

TLDR:

Trolls are loud. No car company is perfect.

https://en.wikipedia.org/wiki/Whataboutism

“Whataboutism, also known as whataboutery, is a variant of the tu quoque logical fallacy that attempts to discredit an opponent’s position by charging them with hypocrisy without directly refuting or disproving their argument. It is particularly associated with Soviet and Russian propaganda. When criticisms were leveled at the Soviet Union during the Cold War, the Soviet response would often be “What about…” followed by an event in the Western world.”

>”nothing is. Never.”

https://en.wikipedia.org/wiki/Nirvana_fallacy

Nobody wants them to be perfect. Just better.

>”Door locks failed, locking him inside. All VW vehicles should be recalled and VW put out of business.”

You should sue them. Ford Pinto had this exact same problem and they were punished for it. If this is a systemic flaw in the cars, instead of complaining about complaining, you actually should take them to court for it – you could save lives!

In your example of airbags, a recall was issued. No recalls have been issued for several issues related to teslas.

Upon reading the rest of your comment I realize that you are all for a lack of consequences for clearly selfish reasons. Your failure to become a whistle blower makes you complicit. You may very well have blood on your hands already, if not, you might soon.

>blood on your hands

Yea right. And you know absolutely nothing about how real life works, it seems.

By this logic everyone who ever got into proximity to people or things which have a chance to take lives are complicit. All those had the opportunity to become a “whistle blower” right?

>In your example of airbags, a recall was issued.

Yea, but this is a PR feat at this point to make them look better. They possibly knew about this problem since the beginning. The number of lives it has taken has now reached a point where the cost of a recall is acceptable. These are almost 25 year old vehicles at this point. Most of them are already in the scrapyard. At this point a recall won’t make the car manufacturer itself bankrupt. That’s why they do it.

Also you possibly know nothing about the contracts that a first party supplier signs with the car manufacturer.

First, the company would not let it slide without ruining my life. Would you be a “whistle blower” if there is a possibility to lose all your wealth, your mental health in the courtroom, all your time in the next few years, possible jail time, your opportunity to ever do what you like and to ever take a job in this field (which I actually like)?

Also there would have been a possibility that this ruins the company of almost 30k people.

Secondly, those machines make money; no one will take them off the road because of a chance that the primary brake system could fail. Ever heard of the business viewpoint? Each life has a value, which is not infinite as today’s social justice warriors put it. A death and/or property damage here and there is perfectly acceptable if that system prevents vastly more accidents than would happen if that system did not exist in the first place.

Fixing that bug took a team of 3 guys, including me, almost 2 years. Almost 100k lines of code (this was a single SW component out of around 50) were thrown out and redesigned, since this bug was in the design itself and could not be fixed. Ever heard of safety-critical software? It takes more than 3 minutes to patch it and test it to meet the regulations that these need to meet. In those 2 years, what would those 200k vehicles have done? Stand and rot?

They refused to do/plan a recall/SW update and let me backport this fix to the vehicles already on the road. Backporting and developing the tools to update this component would have taken another year but still… The next revision of the product contains this fix, but the older ones won’t ever receive it.

Also, where did I write that failure of the brake system controller means failure of the complete brake system itself? Don’t you know what a fail-operational system is? Or backups? Yes, there is a chance that the driver is an idiot and this failure causes a huge accident if the driver does not know what a retarder, engine brake or handbrake is and how to use it.

Meanwhile at Boeing planes are falling out of the sky for similar reasons.

You know about this potentially life-threatening issue, so it’s time to get out your moral compass and decide which way it points.

Clearly you’ve decided to take the money so you are complicit.

The rest of your rambling to justify your position therefore doesn’t really matter.

You’ve made your bed, just live with it and don’t try to justify it to anyone but yourself as it simply won’t work.

PS: I’m not saying you are wrong, it’s your life and choice. But don’t try to sound like a murderer does when caught and grasping for excuses. Just plead guilty.

>”how real life works”

Real life works how you make it work. If you choose to act like a cynical sociopath, the world is made that much worse for it.

Dude, you have terrible luck with catastrophic failure in cars. I’ve never heard of anyone with even one problem of the magnitude you describe. Maybe you just shouldn’t drive.

Why did you buy not one, but 2 Citroens? Fool me once…

Wow, just the thing for worthless lazy slobs that want to avoid walking 200 feet.

Gimmick for sure.

They need to hire Common and fix their AI, clearly.

I’m just waiting for the inevitable headlines: 10 year old kid takes car for a joyride from halfway across the world, wife kills husband on commute home from the comfort of her living room, self-driving car murders passengers, who’s to blame for the missing curly bracket… :D

And an article in today’s Daily Mail

https://www.dailymail.co.uk/sciencetech/article-7660019/Alarming-video-shows-driverless-Tesla-car-cruising-road-wrong-way-summoned.html

Had to remove my ad blocker to even get on the site, then I remembered why I have an ad blocker and quickly left that site. Why in your right mind would you even link to such an ad-filled website? Pretty sure if I stayed on there for another 10 seconds I was going to get my first ever epileptic attack. The video doesn’t show where the car came from when it was summoned or how it got on the wrong side of the road, but it does start with the car on the wrong side. The video is a combination of these 2 YouTube videos. Seems the video cut and switched orientation when the person filming turned his phone around. https://www.youtube.com/watch?v=dnioHfg1xbQ https://www.youtube.com/watch?v=XchnvhKwcvs

That’s a national paper website.

The local regional paper websites are like that on steroids.

The Daily Mail is a yellow paper. Calling it “national” gives it an air of authority it doesn’t deserve.

As yet I’ve not seen any tech that is free of failures. The closest is refrigeration, but vehicles are nowhere near that reliability. Now you want to add in the human error of a programmer, put that code in a computer which a single cosmic ray event can cause to fail, and put it in charge of a ton of metal and a large array of sensory electronics driving around the streets, and NOW sometimes without a human driver even being present?!

We still have the same formula. Human error + mechanical-technical failure. It’s just shifting the blame from the driver to the human error of the coder and the mechanical failures are still there, but we’ve managed to add electronic failure to the mix.

So yes, with good cause I still say this line of “progress” is likely a big mistake… but I also know we must still try “because it is there”.

> a single cosmic ray event can cause to fail

No, that’s not how things work. You may not notice, but almost everything around you is computer-driven. Your car, if it is 30 years old or less, is full of computers: ABS, ECU, ESC… People have pacemakers and ear implants; there are computers everywhere. And they are not killing people, locking accelerators or throwing planes from the sky… Well, the Boeing 737 MAX is falling from the sky, but you get the picture…

This line of progress is needed. I personally don’t like the “self-driven car” thing, but the car detecting danger and steering away or braking by itself is a good touch. Strapping an engine to a carriage was deemed dangerous too, having all those horseless carriages zipping around faster than any horse could, and who knows if the driver could safely drive that carriage at those speeds… 30 MPH? Are those guys crazy?

Tesla will never do this. No car manufacturer will. But I’d like to see them perform the tests that have been hypothesized about a self-driving car’s moral choices. Essentially an Asimov test.

Set up a scenario on a closed road with a mum pushing a pram across the road, a group of nuns on the sidewalk, a group of bikers in the next lane and a prison truck oncoming.

The car thinks it’s carrying a family.

Force an emergency stop because mum steps out in the road.

The car cannot possibly stop in time.

Let the car choose who it’s going to hit.

This is the road we are on. The steps that Tesla are taking now might be laughable, but the end goal is in sight.

If you, a human, were in that situation, what would you choose to do?

Now is it fair to question a machine making that choice?

Popcorn…

> If you a human were in that situation what would you choose to do?

You cannot *choose*, you *react*, and that makes all the difference.

You sit comfortably in your chair, with a detailed map of the area showing all distances, masses and speeds in one hand, and Wolfram Alpha in the other. Facial recognition shows you all the data on every person involved, so you can decide how to proceed and inflict the least possible damage on society as a whole. After calculating time and time again, with a team of expert researchers ranging from Physics to Sociology, you are ready. You get back to the car and look at the message on the dashboard: “REALITY PAUSED. RESUME?”

You take a last look at the paper with the decision, fasten your seat belt, and press “RESUME.”

Like that commercial: https://www.youtube.com/watch?v=B2rFTbvwteo

No, that’s not what happens. At most, you have a tenth of a second of usable time, but probably less. You don’t have time to look in all the mirrors, count how many people are on the left and on the right, or judge the speed and size of the vehicle behind you. You react, as fast as your reflexes can, and hope for the best.

A car cannot make moral choices; it is not possible for it to do so. And Asimov’s laws are good for fiction, but unenforceable in the real world. What the car has is data. It will decide based only on this data, not on any internal desire or inclination.

Your scenario has no good outcome. No matter the result, someone will be injured or killed. Like the thousands of traffic incidents we have every day worldwide.

Find a bunch of these, equip each one with a cell phone, paired to the next car, with remote control software to mash the button.

Then when the first person summons their car, the rest follow.

Cue the Benny Hill chase music.

How secure is the app & the car messaging system? Seems to open all sorts of possibilities.

Looking for a parking space – summon somebody else’s car so it unparks, then steal their spot.

“Mummy, this car followed me home, can we keep it?”

Seems like it opens up more interesting opportunities for vehicle theft?

Could you get it to drive itself onto a truck, or into a garage/other concealed area?

Or as a way to harass people. (Move their car into a less safe place so they wonder where it has gone, or use somebody’s car to obstruct traffic.)

How soon before we see this used in a movie chase scene?

(Or has it already been done?)

“Cue the Benny Hill chase music.”

I believe that music is named “Yakety Sax”

https://www.youtube.com/watch?v=ZnHmskwqCCQ

Really bad idea.

Figuring out permissible direction of travel in a parking lot is a hard problem.

In many cases the signs are at the entry to the parking area (e.g. arrows on the road, signs by the road, etc.).

So it has to remember what arrows you passed before you parked the car.

It also has to correctly interpret them (know which ones it should forget as you circled through the lot looking for a space) so it can know which way it is okay to go.

Or read arrows which have half worn away, or remember them from months or years ago when they were there (as a human who visits a place frequently would), or just observe the angle of the parking spaces to figure out which direction it has to go.

(It also cannot assume that the driver was following the correct travel directions when they parked.)

At the very least it needs a huge sign that pops up and says that it is a semi-autonomous vehicle. (Or maybe an inflatable pilot, like in “Airplane.”)

Should also have a warning sound. (So people know it may start moving.)

I hope parking lot owners start to prohibit this.

I wish parking lot owners would have some tech to point you to an empty stall, or back to where you parked your car.

Many parking lots have exactly what you’re talking about in the first part of your post. As for the second part, take a picture of your car.

Space is deep

Space is dark

It’s hard to find

Where you parked

….

Burma-Shave

You win the internet today.

Spot on. ‘AI’ isn’t magic, it’s math, with quite a bit of ‘probably this’ in the output. Nowhere near ready for primetime. Amazed there haven’t been any high-profile incidents yet. Curious what Tesla’s spent on PR damage control already; sooner or later they aren’t going to be able to buy someone out. Bad enough if owners want to endanger their own lives with ‘autopilot’, but it is the way the general public/pedestrians are part of this proof of concept alpha testing that really gets my goat. Some liken Autopilot to an airliner autopilot. I’d agree, in the sense that you should be trained and licensed to enable it, and know exactly what it is capable of, and its limitations. Not just let anyone who can plop down the cash ‘push the button’ and think it’s magic that the car seems to understand its surroundings and the rules of the road. So many misconceptions and dangers there…

Like you said, Autopilot is akin to an airliner autopilot. Drivers should pay more attention when it is active, and Tesla encourages that. They even have systems in place to force you to place a hand on the wheel if it detects you aren’t doing so. As for endangering their lives, I’m not quite sure I’d agree with that. Autopilot drives far safer than your average driver and will also react faster in almost all situations. Having Autopilot enabled and an attentive driver is actually safer for the general public, and this “alpha testing” as you call it is actually needed to gradually improve the system at a reasonable rate.

Your claims about safety are unproven. Until Tesla opens its data, we won’t ever know anything more than their marketing department wants us to know. But from what little we do know, it’s certainly not ‘far’ safer than a human driver. It may almost be on par with the average. (Whether that’s good enough… discuss. The sample of average humans includes some people who are intoxicated.)

The sample of average accidents involves quite a few people who are intoxicated or impaired, and cars that are compromised by being out of repair, having bad tires, etc.

Meanwhile, the Tesla data is restricted to a) highway use only, b) in new cars with good service history, c) the driver is supposed to take over at the first hint of trouble or else the car stops.

It’s simply a non-comparison to say that Autopilot is safer or less safe – the AI is still driving with training wheels on – although it is a bad indicator that it has managed to kill and maim people regardless.
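To put rough numbers on why the comparison doesn’t work, here’s a minimal sketch. Every rate and mileage share in it is made up for illustration; none of it is Tesla or government data. It only shows how a system that does worse than humans on the same roads can still look better in a headline figure if it only ever drives on the easy roads.

```python
# All figures below are invented for illustration only.

# Hypothetical human crash rates, in crashes per million miles, by road type.
HUMAN_RATE = {"highway": 1.0, "city": 4.0}
# Hypothetical share of human miles driven on each road type.
HUMAN_MILES_SHARE = {"highway": 0.4, "city": 0.6}

# Hypothetical assist system: highway use only, and 50% worse than humans there.
SYSTEM_RATE = {"highway": 1.5}
SYSTEM_MILES_SHARE = {"highway": 1.0}


def blended_rate(rate, share):
    """Overall crash rate: per-road rates weighted by the share of miles driven."""
    return sum(rate[road] * share[road] for road in share)


human_overall = blended_rate(HUMAN_RATE, HUMAN_MILES_SHARE)
system_overall = blended_rate(SYSTEM_RATE, SYSTEM_MILES_SHARE)

print(f"human overall:  {human_overall:.2f} crashes per million miles")
print(f"system overall: {system_overall:.2f} crashes per million miles")
print("system worse on highways:", SYSTEM_RATE["highway"] > HUMAN_RATE["highway"])
```

With these invented inputs the system’s overall number comes out lower than the human one even though it is worse on the only road type both drive on. A fair comparison would have to match road type, vehicle age and driver condition first.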

The only claim I am making is having a dedicated system for driving in a lane, maintaining speed and multiple cameras and sensors around your vehicle to avoid collisions working alongside the driver is safer than not having it. The driver can disengage the system at any moment, so I don’t see how it can be less safe unless the driver is not paying attention.

>” I don’t see how it can be less safe unless the driver is not paying attention”

Exactly because of that. The whole point of an auto-drive system is that the driver could spend less attention on actually driving the car. If you have to sit there squeezing the wheel with white knuckles in wait for the computer to f**k up, there’s no point in using it at all.

Why do you need a lane-keeping assistant if you can already keep your lane? Because you don’t want to spend the effort of keeping track of where you are relative to the road, and offload the task to a computer. Hence, when you are using the system, you are not paying attention to that aspect of driving because you assume the computer will take care of it for you. When the computer fails to meet the standard, it becomes less safe for you to trust the task for the computer rather than just drive yourself.

You see, there’s a fundamental difference between a system that’s designed to keep you on the road if you accidentally veer off your lane, and a system designed to drive for you.

One assumes the driver is in control, the other assumes the driver is not in control. Tesla is deliberately suggesting to you that their system is driving -for- you, but officially they’re saying it is a system that is meant to back you up. They’re officially saying it’s the first type of system to avoid litigation, but really they’re marketing it as the second type of system.

It’s kinda like printing “drink responsibly” on a bottle of cheap beer. It’s there just to pay lip service. We all know you’re making the cheapest beer on the market for people to get blind drunk on.

@Meem. You are making a mistake in logic. You say that adding additional, automated, safety features to the human driver by definition must make the system as a whole safer. However, you overlook the fact that by introducing those features you might change the behaviour of the human (i.e. they pay less attention, thinking the automation will keep them safe).

Yeah, I agree. That was the caveat – that the driver is attentive as Tesla recommends/requires. I’m mostly speaking from anecdotal evidence. When I’m using Autopilot, the vehicle is driven more safely: it keeps a safer distance with the vehicle ahead of me and can quickly react to changes in speed even of vehicles not immediately in front of me. Those are just some examples. To put the blame on the system instead of the driver who chooses not to pay attention is not logically sound either, though.

>” this “alpha testing” as you call it is actually needed to gradually improve the system at a reasonable rate.”

There is no “gradual improvement” with the type of AI that Tesla is using. Statistical inference, image recognition etc. only get you 90% of the way before you start to have too many false positives and the car starts to react to its own “hallucinations”. These AI systems are “brittle” – they fail catastrophically when you work them up to their limits.
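As a back-of-the-envelope illustration of why even rare false positives matter, here’s a small sketch. The per-frame error rate and the frame rate are assumptions picked for the example, not measurements of any real system, and it treats every frame as independent, which real video is not.

```python
# Hypothetical numbers, for illustration only.
FALSE_POSITIVE_PER_FRAME = 1e-5   # assumed chance of a phantom detection per frame
FRAMES_PER_SECOND = 30            # assumed camera frame rate
SECONDS_PER_HOUR = 3600


def prob_at_least_one(p_per_frame, n_frames):
    """Chance of one or more false positives in n independent frames."""
    return 1.0 - (1.0 - p_per_frame) ** n_frames


frames_in_an_hour = FRAMES_PER_SECOND * SECONDS_PER_HOUR
p_hour = prob_at_least_one(FALSE_POSITIVE_PER_FRAME, frames_in_an_hour)

print(f"frames in one hour: {frames_in_an_hour}")
print(f"chance of at least one false positive in an hour: {p_hour:.2f}")
```

Under these assumptions, a detector that is wrong only once in a hundred thousand frames still produces at least one phantom detection in roughly two out of three hours of driving. Real systems filter detections across frames, so the true figure depends heavily on details this sketch ignores.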

This is a problem that affects the entire field – Tesla is not advancing the field with their IMPLEMENTATION of technologies copied from others who are developing them – just like they aren’t advancing battery technology by commissioning Panasonic to build them a new factory that makes batteries according to a tried and tested formula developed by Panasonic. Tesla itself does very little fundamental R&D.

They’re just selling you the idea that by inventing the same wheel over and over again, you’re getting something new. That’s called “innovation” – in contrast to “inventing”. Indeed, Elon Musk’s engineering means buying widgets off the market and hiring people to make them fit together. This is just your standard Silicon Valley dot-com business model: you sell it before you have it, take the money and hire people to do it, and if it doesn’t work then you take your golden parachute and bail.

Also, there’s a fundamental difference between the way computer vision typically works and the way human vision and, more importantly, human cognition work.