We have all watched videos of concerts and events dating back to the 1950s, but probably never really wondered how this was done. After all, recording moving images on film had been done since the late 19th century. Surely this is how it continued to be done until the invention of CCD image sensors in the 1980s? Nope.

Although film was still commonly used into the 1980s, with movies and even entire television series such as Star Trek: The Next Generation being recorded on film, the main weakness of film is the need to move the physical film around. Imagine the live video feed from the Moon in 1969 if only film-based video recorders had been a thing.

Let’s look at the video camera tube: the almost forgotten technology that enabled the broadcasting industry.

It All Starts With Photons

The principle behind recording on film isn’t that much different from that of photography. The light intensity is recorded in one or more layers, depending on the type of film. Chromogenic (color) film for photography generally has three layers, for red, green and blue. Depending on the intensity of the light in that part of the spectrum, it will affect the corresponding layer more, which shows up when the film is developed. A very familiar type of film which uses this principle is Kodachrome.

While film was excellent for still photography and movie theaters, it did not fit with the concept of television. Simply put, film doesn’t broadcast. Live broadcasts were very popular on radio, and television would need to be able to distribute its moving images faster than spools of film could be shipped around the country, or the world.

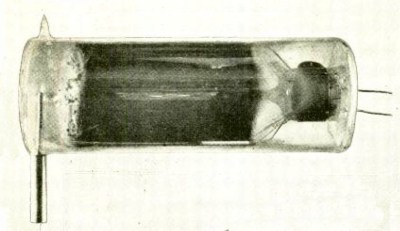

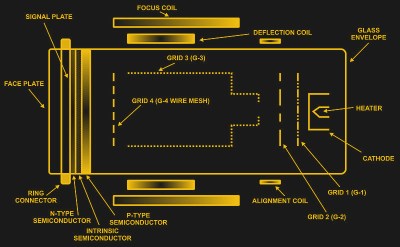

Considering the state of the art of electronics in the first decades of the 20th century, some form of cathode-ray tube was the obvious way to convert photons into an electric current that could be interpreted, broadcast, and conceivably stored. This idea for a so-called video camera tube became the focus of much research during these decades, leading to the invention of the image dissector in the 1920s.

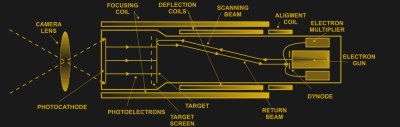

The image dissector used a lens to focus an image on a layer of photosensitive material (e.g. caesium oxide), which emits photoelectrons in proportion to the intensity of the light striking it. The photoelectrons from one small area at a time are then steered into an electron multiplier to obtain a reading for that section of the image.

Cranking Up the Brightness

Although image dissectors basically worked as intended, the low light sensitivity of the device resulted in poor images. Only with extreme illumination could one make out the scene, rendering it unusable for most scenes. This issue would not be fixed until the invention of the iconoscope, which used the concept of a charge storage plate.

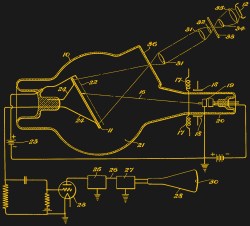

The iconoscope added a silver-based capacitor to the photosensitive layer, using mica as the insulating layer between small globules of silver covered with the photosensitive material and a layer of silver on the back of the mica plate. As a result, the silver globules would charge up with photoelectrons after which each of these globule ‘pixels’ could be individually scanned by the cathode ray. By scanning these charged elements, the resulting output signal was much improved compared to the image dissector, making it the first practical video camera upon its introduction in the early 1930s.

It still had a rather noisy output, however, with analysis by EMI showing that it had an efficiency of only around 5% because secondary electrons disrupted and neutralized the stored charges on the storage plate during scanning. The solution was to separate the charge storage from the photo-emission function, creating what is essentially a combination of an image dissector and iconoscope.
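To get a feel for why charge storage mattered so much, and what a 5% efficiency costs, here is a rough back-of-the-envelope sketch. It assumes an idealized model in which a non-storage tube such as the image dissector only collects photoelectrons from a picture element while that element is being scanned, whereas a storage tube accumulates charge over the whole frame. The picture-element count, frame rate, and photoelectron rate are illustrative assumptions, not figures from any real tube, and the model ignores the dissector's electron multiplier.

```python
# Idealized comparison of a non-storage tube (image dissector) and a
# storage tube (iconoscope). All numbers below are illustrative assumptions.

def electrons_without_storage(rate_per_element, frame_time, n_elements):
    """Photoelectrons collected from one picture element when it is only
    sampled during its own dwell time (frame_time / n_elements)."""
    dwell_time = frame_time / n_elements
    return rate_per_element * dwell_time


def electrons_with_storage(rate_per_element, frame_time, efficiency=1.0):
    """Photoelectrons accumulated on one picture element over a whole
    frame, scaled by a storage efficiency between 0 and 1."""
    return rate_per_element * frame_time * efficiency


if __name__ == "__main__":
    n_elements = 100_000   # assumed number of picture elements per frame
    frame_time = 1 / 25    # assumed frame period in seconds
    rate = 1e6             # assumed photoelectrons per second per element

    dissector = electrons_without_storage(rate, frame_time, n_elements)
    ideal = electrons_with_storage(rate, frame_time)
    real = electrons_with_storage(rate, frame_time, efficiency=0.05)

    print(f"no storage:         {dissector:.2f} electrons per element")
    print(f"ideal storage:      {ideal:.0f} electrons per element")
    print(f"storage at 5%:      {real:.0f} electrons per element")
    print(f"gain at 5% vs none: {real / dissector:.0f}x")
```

In this simplified model the ideal gain from storage equals the number of picture elements, so even a tube that wastes 95% of its stored charge still comes out far ahead of one with no storage at all.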

In this ‘image iconoscope’, or super-Emitron as it was also called, a photocathode would capture the photons from the image, with the resulting photoelectrons directed at a target that generates secondary electrons and amplifies the signal. The target plate in the UK’s super-Emitron is similar in construction to the charge storage plate of the iconoscope, with a low-velocity electron beam scanning the stored charges to prevent secondary electrons. The super-Emitron was first used by the BBC in 1937 for an outdoor broadcast: the wreath laying by the King on Armistice Day.

The image iconoscope’s target plate omits the granules of the super-Emitron, but is otherwise identical. It made its big debut during the 1936 Berlin Olympic Games, and subsequent commercialization by the German company Heimann led to the image iconoscope (‘Super-Ikonoskop’ in German) being the broadcast standard until the early 1960s. A challenge with the commercialization of the Super-Ikonoskop was that, during the 1936 Berlin Olympics, each tube would last only a day before its cathode wore out.

Commercialization

American broadcasters would soon switch from the iconoscope to the image orthicon. The image orthicon shared many properties with the image iconoscope and super-Emitron and would be used in American broadcasting from 1946 to 1968. It used the same low-velocity scanning beam to prevent secondary electrons that was previously used in the orthicon and an intermediate version of the Emitron (akin to the iconoscope), called the Cathode Potential Stabilized (CPS) Emitron.

Between the image iconoscope, super-Emitron, and image orthicon, television broadcasting had reached a point of quality and reliability that enabled its skyrocketing popularity during the 1950s, as more and more people bought a television set for watching TV at home, accompanied by an ever increasing amount of content, ranging from news to various types of entertainment. This, along with new uses in science and research, would drive the development of a new type of video camera tube: the vidicon.

The vidicon was developed during the 1950s as an improvement on the image orthicon. It used a photoconductor as the target, often using selenium for its photoconductivity, though Philips would use lead(II) oxide in its Plumbicon range of vidicon tubes. In this type of device, the charge induced by the photons in the semiconductor material would transfer to the other side, where it would be read out by a low-velocity scanning beam, not unlike in an image orthicon or image iconoscope.

Although cheaper to manufacture and more robust in use than non-vidicon video camera tubes, vidicons do suffer from lag, due to the time required for the charge to make its way through the photoconductive layer. They make up for this with better image quality in general and no halo effect from the ‘splashing’ of secondary electrons caused by points of extreme brightness in a scene.

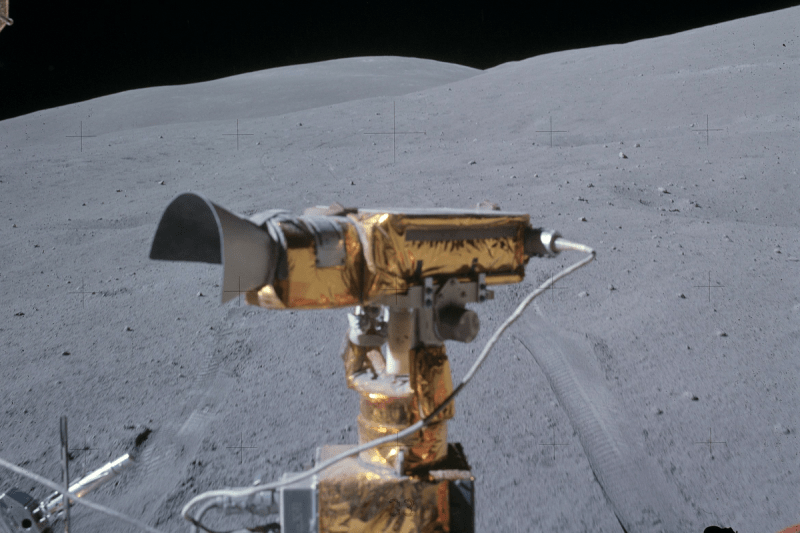

The video cameras that made it to the Moon during the US Apollo Moon landing program would be RCA-developed vidicon-based units, using a custom encoding, and eventually a color video camera. Though many American households still had black-and-white television sets at the time, Mission Control got a live color view of what their astronauts were doing on the Moon. Eventually color cameras and color televisions would become commonplace back on Earth as well.

To Add Color

Bringing color to both film and video cameras was an interesting challenge. After all, to record a black and white image, one only has to record the intensity of the photons at that point in time. To record the color information in the scene, one has to record the intensity of photons at particular wavelengths in the scene.

In the Kodachrome film, this was solved by having three layers, one for each color. In terrestrial video cameras, a dichroic prism split the incoming light into these three ranges, and each was recorded separately by its own tube. For the Apollo missions, the color cameras used a mechanical field-sequential color system that employed a spinning color wheel, capturing a specific color whenever its color filter was in place, using only a single tube.
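The field-sequential idea can be sketched in a few lines of code. The example below assumes a single tube that delivers successive monochrome fields, one per color filter, and merges each group of three into a color frame. The filter order, the data layout (nested lists of intensities), and the function names are all illustrative assumptions, not a description of the actual Apollo hardware or its ground-side converter.

```python
# Sketch of field-sequential colour reconstruction: a single tube captures
# successive monochrome fields through a rotating red/green/blue filter
# wheel, and three consecutive fields are merged into one colour frame.
# The filter order and data layout are assumptions for illustration.

FILTER_ORDER = ("R", "G", "B")  # assumed wheel order


def combine_fields(fields):
    """Merge three same-sized monochrome fields, captured in FILTER_ORDER,
    into one frame of (r, g, b) tuples."""
    if len(fields) != len(FILTER_ORDER):
        raise ValueError("expected one field per colour filter")
    red, green, blue = fields
    return [
        [(r, g, b) for r, g, b in zip(red_row, green_row, blue_row)]
        for red_row, green_row, blue_row in zip(red, green, blue)
    ]


def frames_from_stream(field_stream):
    """Group a flat sequence of fields into colour frames, dropping any
    incomplete group at the end."""
    step = len(FILTER_ORDER)
    usable = len(field_stream) - len(field_stream) % step
    return [
        combine_fields(field_stream[i:i + step])
        for i in range(0, usable, step)
    ]


if __name__ == "__main__":
    # Three tiny 2x2 fields, each lit in a different corner.
    red = [[255, 0], [0, 0]]
    green = [[0, 255], [0, 0]]
    blue = [[0, 0], [255, 0]]
    for row in combine_fields([red, green, blue]):
        print(row)
```

Because the three fields are captured one after another rather than simultaneously, anything that moves between fields ends up in slightly different places in each color channel, which is the usual trade-off of a sequential system against a three-tube prism camera.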

So Long and Thanks for All the Photons

Eventually a better technology comes along. In the case of the vidicon, this was the invention of first the charge-coupled device (CCD) sensor, and later the CMOS image sensor. These eliminated the need for the cathode ray tube, using silicon for the photosensitive layer.

But the CCD didn’t take over instantly. The early mass-produced CCD sensors of the early 1980s weren’t considered of sufficient quality to replace TV studio cameras, and were relegated to camcorders where the compact size and lower cost were more important. During the 1980s, CCDs would massively improve, and with the advent of CMOS sensors in the 1990s, the era of the video camera tube would quickly draw to a close, with just one company still manufacturing Plumbicon vidicon tubes.

Though largely forgotten, there is no denying that video camera tubes have left a lasting impression on today’s society and culture, enabling much of what we consider to be commonplace today.

Here’s something else on this sort of subject, regarding why some old film was HD or higher.

https://www.youtube.com/watch?v=rVpABCxiDaU

Useless Trivia…

The “Emmy Award” gets its name from the Emitron tube.

Thanks Steven. I quite like things like that. It will serve you well if you ever get on QI.

Meaning you knew that trivia or are you jealous not to have known?

From my side, thank you Steven. Full stop.

Transition from film to CMOS/CCD and magnetic tape is why some TV shows and made for TV movies will not see a 1080 or 4k rescan.

Star Trek TNG was shot on film and Paramount was able to take the original master, redo the CGI, and issue a Blu-ray version. They had planned to do the same with DS9 and Voyager but TNG didn’t sell as well as they had hoped so it’s been scrapped. Other shows like Babylon 5 and Farscape were done mostly to magnetic tapes so short of reshooting everything with 20-year-old props and actors who aged 20 years (if still alive), those shows will not get a nice upscale.

No one had the foresight to anticipate the jump to 1080 and 4k displays so soon after video cameras and tape recorders became cheap.

Funny that you mention Babylon 5, because the live action was recorded in HD. Unfortunately, all the SFX files are lost, so the HD versions are a mix.

Give technology the time to advance. Interpolation and software can already produce doubled resolution without jaggies on diagonals. Artificial intelligence will eventually allow intelligent sharpening and the faking of details. Once the algorithms are in place, if the studios don’t want to make an improved video, you can do it yourself at home.

Err I may be mis-remembering but I thought TOS was on film and TNG was video. Thus the upscaled look and 4:3 aspect. All the scenes in TNG look like they are framed in 4:3 instead of a wider format and cropped.

I’m only aware of one TNG episode that used CGI heavily. I think CGI was used for things like phasers and photon torpedoes. The rest was practical effects done on film, which was why it was relatively cheap to upgrade the series to HD. All they had to do was transfer them using a modern digital film transfer and recomposite them. The series that came later, like Voyager and DS9, did use more CGI, which is probably why they have not been upgraded.

There’s actually a modern day application of all of this technology. The photocathodes which convert the light into electrons are now used to generate the electron beams of some of the world’s most powerful particle accelerators. I’m a grad student doing research in this area and it’s always funny to look at the papers in my field from the ’60s and earlier because they are all about “picture tubes” and night-vision goggles.

Also, I’m assuming that the cathode mentioned in the first section is Cesium-Oxide activated Gallium Arsenide, not just Cesium oxide which I don’t think emits on its own. The Cesium is actually used to lower the energy requirement of emission from Gallium Arsenide (a semiconductor) which acts as the actual photocathode. It’s only a layer a few atoms thick of Cesium.

The “almost forgotten technology”? Gimme a break! Tube-based video cameras only went out of common use in the 1980’s – that’s less than 40 years ago. People’s memories aren’t that short.

The image of the moon camera raised some expectations about the contents of this article. Although it was interesting, I expected to read more about the moon cameras and how they did things on the moon and back on earth.

Some interesting info related to this article and the moon cameras: http://www.hawestv.com/mtv_FAQ/FAQ_moon_5.htm

more about the moon

https://www.provideocoalition.com/mechanical-television/

(the PDF at the end of the page contains a lot of interesting info!!!)

A very good book on lunar TV development:

“Live TV from the Moon”

http://www.cgpublishing.com/Books/9781926592169.html

I reckon people were using the better tube cameras into the late 90s. Some of them sat more comfortably on the shoulder, whereas most CCDs were only hand supported so the larger ones of those gave you arm ache. Until the true “palm sized” ones came along that were only as bulky as an SLR.

But I did almost forget I’ve got two vidicon cameras around somewhere, color and a mono.

I’ve got two Vidicon cameras and a recorder; I think the date on them is late 1982. They’re branded Magnavox but everything is Sony. The camera is shoulder size, about the same size as the later full size VHS and Beta “Shouldercam”, but it’s just the camera – the recorder is a Betamax unit with a shoulder strap that’s separate from the camera. Together they weigh a good 20 lbs and the camera will streak and ghost with points of light, but actually produces a decent quality picture.

At one point I had a modular betamax that came unplugged from the tuner/timer and PSU and could plug into a battery pack and camera. I found a camera I could plug into it, but never had a battery pack. Belts all crumbled in that one, and it was past the time belt kits were in distribution.

Sounds like a Sony SL-2000 or the same thing rebranded. I lugged one of those all around Europe in 1984 for a documentary.

The median age in the USA is 38 years. Most Americans were born after the tube-based cameras died out. Fewer still were old enough to remember these cameras, and even fewer still were in a profession where they could actually work with them.

This makes people holding first-hand memory of these cameras a small, small minority among Americans, even smaller on a global scale. That’s close enough for “almost forgotten”.

Pshaw, what are ppl gonna forget next, the milk being delivered???

People delivering milk?!?…

Yeah this is a bit of a tough concept so bear with me… Every area had its own mini-Amazon, but they only sold dairy products and dropped them off fresh every morning to subscribers.

We had a bread and donut delivery man who was willing to drive up our half-mile long farm lane to see if we needed anything.

I remember both those tube-based cameras, and even delivered milk. (First house while growing up had that. ) And I even used the first Panasonic grade portable camera and video recorder, with the local Cable TV gang. Trivia blurb, the video recorder was invented in the US by Ampex, about the time the idea of reel to reel audio was also running.

My family and I had a b/w TV camera with a tube and a UHF box for a long time. In fact, CCD is still new to me. I’m way below the 38yrs thing also. Years ago, I used that Vidicon (?) camera to capture shots of the moon at night, with an ISA-based TV capture card and a PC running Win9x. That being said, I don’t remember the milk thing. Was long before my time, when glass bottles were still used, I suppose.. 73 from Germany.

Back in the days of my first career as a broadcast technician, handling image orthicon tubes was serious business.

Can’t recall the numbers now, but very expensive. At the end of life they were sent back to RCA to be refurbished. Much cheaper than new.

Has there been any other type vacuum tube that could be refurbished and reused?

CRTs definitely were. There are some videos on YouTube.

TV CRTs were refurbished.

https://www.youtube.com/watch?v=W3G7b-DcOO4

I think large, multi megawatt broadcasting tubes could also sometimes be refurbished?

This is starting to sound like an article from People Magazine about aging movie stars who want to get pregnant.

Ha! Thanks, I nearly spit my beer out.

What on God’s gray earth is a “film-based video recorder”???

Using Kodachrome as the exemplar of color film is just wrong. As wonderful as it was it has been discontinued for years. It required non-standard processing… standard processing used for other color film would deliver black and white images.

Here are SOME of the recent TV shows shot on film:

The Walking Dead (2010– ) …

Breaking Bad (2008–2013) …

24 (2001–2010) …

The Sopranos (1999–2007) …

The Wire (2002–2008) …

Band of Brothers (2001)

I think there was actually some film based video recording thing. Done opto electronically like the audio tracks on the side of movie film. But it used something like 8mm or so strip just for the mono video. I think the point was that it was full line resolution as if it was right off the high end outside broadcast cameras, whereas the magnetic tape they had then only got half the resolution or something.

I took that phrase “film-based video recording” to mean kinescope. The cameras were video cams; they were live-switched, and the resulting live broadcast was recorded to film by a special film camera recording a monitor. I don’t believe 8mm was ever used, but 16mm film was common for this (especially news). Not sure if 35mm was used much. A lot cheaper then than originating on film and editing the film in post. A lot of old TV shows like The Honeymooners were kinescoped. It doesn’t look very good but at least it isn’t entirely lost. I believe Twilight Zone also had some kinescoped episodes.

Right that was the more widely used version for sure. Not certain if the one I’m describing was deployed much or whether it was a gee whizz what’s new article about something that never took off.

Most of those old kinescoped shows had dark halos around bright or moving objects. Some of them, such as The Honeymooners, have been put through digital processing to clean up the old films and get rid of most of those halos.

Our early Moon probes shot their pictures on film that was quickly developed onboard then scanned in narrow horizontal strips for transmission as video stills back to Earth. The cameras of the day were too low resolution and couldn’t handle the brightness of the sunlight reflected off the Moon. Some early Earth orbiting satellites did the same thing, while the Corona ‘birds’ dropped their capsules with the developed film so the high resolution images could be studied.

The author probably used Kodachrome as an example because it was a pretty image. No wonder Paul Simon wrote a song about it.

Gorgeous as Kodachrome was, it did have weird processing and was super, super contrasty; it looked good as an original but duplicated poorly. The examples you list are all shot on color negative film, which would then be scanned in frame by frame on something like the modern successor to the Rank Cintel, and then the post production done digitally from that point on.

Is that better than modern digital cinematography? Depends on who you ask. Directors like Chris Nolan and Quentin Tarantino insist it is and still use color negative film on their projects, and even distribute on actual projected positive film prints to a few select theaters.

Out of interest, the Iconoscope was also a WWII device for projecting aerial reconnaissance photographs onto a table that allowed production of maps and diagrams as this fascinating film shows: https://www.youtube.com/watch?v=ZphtLn4xcEY

Let us not forget a guy named Farnsworth who actually invented TV first, that boob Zworykin eventually got stuck, and they paid Farnsworth for using the patents.

Oh, and for any of you (I’m looking at you folks from Hack A Day directly): there’s an excellent museum on the subject, keeping the computers and other things, and even the visiting machines and people, company at Fort Evans, also known as InfoAge, at the VCF East.

I think the explanation misses one of the key and most interesting parts: how do you “read” the charge on the tube’s storage surface with a scanning beam? Wikipedia explains:

“When the electron beam scans the plate again, any residual charge in the granules resists refilling by the beam. The beam energy is set so that any charge resisted by the granules is reflected back into the tube, where it is collected by the collector ring, a ring of metal placed around the screen. The charge collected by the collector ring varies in relation to the charge stored in that location. This signal is then amplified and inverted, and then represents a positive video signal.”

Correct. I remember that in what I’ve read over the past good many years regarding TV image gathering prior to the development of the CCD device. In fact there’s a positively sterling capsule history of them in the book “First Light” regarding telescopes and what happens when they first see stars. Especially since the big eye at Palomar was using CCD devices originally designed for the first camera on Hubble.

CCD will always be the superior digital image sensor technology. When a CCD image is zoomed in, you have sharp edged pixels all the way, no matter how large they’re expanded without using interpolation.

CMOS images, no matter how high the resolution, are fuzzy when zoomed way in. Sure you can make massive images with huge amounts of pixels, but zoom in to try and see the tiny details and you get fuzz. They’re like a Class D audio amplifier in a way. Zoom in on a waveform output by one and it’ll look smooth, until you get close enough and it’s all fuzzy. Ultrasonically fuzzy that blurs out when it hits the analog speakers but still fuzzy.

That deep down fuzz in CMOS sensors also sort of echoes film grain. Movies shot on film with a larger grain can be difficult to digitize to a high compression codec because the grain creates a bazillion tiny ‘objects’ and a lot of motion that’s difficult to tune the compression settings to handle. Try to retain too much detail and you get a massive file despite using HEVC. Lower the settings for detail and motion too much and you get a blocky mess that looks like a poorly made MPEG1 Video CD.

A 1970’s or 1980’s movie shot on lower cost film may need a 1 gig HEVC file to look as good as a newer movie shot at 4K digitally that will easily pack into a 1080p 500 megabyte or smaller HEVC file. I did a 1 hour 30 minute one where trying to squeeze it any smaller than a bit over 900 megabytes crossed the line from *I* can’t tell the difference to utter mush. To get it that small I had to resort to two-pass software encoding that took over 14 hours on a six-core CPU, versus the GPU’s hardware NVENC, which can compress digitally sourced video very cleanly into tiny files at nearly 200 frames per second. (The name of this game is saving space and convenience of use, not preserving ultimate quality, that’s what the original media is for.)

You get the same problem scanning old photos, negatives and slides. The film grain makes it so that even if you scan at 2,000 DPI it’s not going to look one bit better than at around 600 DPI. You’re just making larger pictures of the grains.

If you want really good looking digital photos, find a digital camera from the late 90’s to 00’s. One with a big glass lens and a high original price tag – often available at yard sales or thrift stores for a few dollars. They’ll have a CCD sensor that will take better pictures than a newer, cheaper, CMOS camera with 4x the resolution and a little lens. The Fuji ones that used SmartMedia, then later XD Picture Card, are good ones to get. SmartMedia is limited to 128 megabytes, though a few cameras can take 256 megabyte cards, if you can find one of the very limited supply of such that were released before the format was axed and replaced with XDPC. Many XDPC cameras can take larger cards than their specifications say. I have one such camera that officially tops out at a 1 or 2 gig but it accepts a 4 gig. Takes quite a while to boot up with that size card. Another to look for are old Sony Mavicas that take the little CD-RW or Memory Stick. I have a CD-RW Mavica and one that takes up to a 128 meg Memory Stick. Really nice pictures, flashes that blast away the darkness. They aren’t speedy, take a bit between shots, especially the disc one.

Kodachrome was indeed gorgeous. I’d call it super-saturated rather than super-contrasty, but even that is in more of a comparison to other transparency films. Ektachrome and Fujichrome had lower responses in red-yellow and stronger responses in blue-green.

It was all dependent on what you set out to photograph – you want people, vibrant street or portraiture, use Kodachrome. If you want landscapes or art photography, use Ektachrome.

Now, if you want something super-contrasty, go for Cibachrome!

And kodachrome is stable. I’ve got kodachrome slides that my father took in the 1950s, and while there’s a little fading, they’re still beautiful, and well within the envelope of correctable/restorable.

I miss kodachrome.

All that without once mentioning the word “photomultiplier”, or Philo T. Farnsworth, the inventor of the image dissector and who was instrumental in some of the other things that made electronic television practical. Until Farnsworth, everyone else experimenting with video transmission via radio waves was fiddling around with electro-mechanical devices.

Farnsworth made the mistake of giving Vladimir Zworykin a tour of his lab in California, apparently unaware that he had connections to RCA. Zworykin took his observations to RCA, where he planned to produce electronic TV using information Farnsworth had shown him and squeeze out Farnsworth. But Zworykin’s device that eventually sort of worked was nothing like his patent application.

Farnsworth was willing to aid other inventors. (Apparently clueless that anyone would try to screw him over.) He communicated with Baird in the UK but Baird was stubbornly sticking with his electro-mechanical system.

Desperate to continue their practice of never ever licensing a patent, RCA assembled a team to make Vladimir Zworykin’s concept work. They came back with a device that was essentially a copy of Farnsworth’s, saying it was the only way it could work. RCA gave up and for the first time in company history licensed a patent instead of buying it outright or copying something then telling the inventor without money to go pound sand. But they’d nearly run out the clock on Farnsworth’s patent expiration so they didn’t have to pay royalties for very long. RCA also heavily implied for a long time that they’d invented television, which easily buried Farnsworth’s own TV receiver manufacturing company. RCA did come up with the method used by NTSC to add color.

Since 1986 RCA has mostly been just a name and logo owned by General Electric, which owned RCA from its founding in 1919 until 1936, when RCA went independent.

But before all that, what really made it possible, was one man who believed in Farnsworth’s ideas. George Everson wrangled the bankers and other investors to keep the money coming in so Farnsworth could concentrate on making a camera tube suitable for use.

If we didn’t have television today and a fellow like Farnsworth popped up with his ideas, without a George Everson in his corner it would go nowhere because investors wouldn’t want to spend any money on it. They like things that are either production ready or >thisclose< to it, and they want a majority share.

All colour film would produce a monochrome image if you skipped the colour developer. “Standard processing” isn’t a thing.

B&W film – developer, stop, fix. 1. “Develop” the light-struck halide crystals by chemically changing them to metallic silver. 2. “Stop” the reaction by dunking the film in weak acid (developer is alkaline). 3. “Fix” the image by converting any un-developed halide crystals into a soluble form. Finally, wash the dickens out of it to remove all unwanted residue. Result – a B&W image in negative.

Colour negative film: 1. First developer. Convert light-struck halide crystals in each of three colour-sensitised layers into metallic silver. 2. Stop. 3. Colour developer – attach dyes to metallic silver. 4. Stop. 5. Bleach out the metallic silver, leaving only the dyes. 6. Fix. Convert remaining halides to soluble form. Finally, wash the dickens out of it to remove all unwanted residue. If you skipped steps 3 and 4 you would get a B&W negative. Some films were colour-coupled, meaning they already had proto-dyes embedded in the emulsion, and these dyes were activated by the processing chemicals.

Processing colour transparency films like Kodachrome was very complex and used a re-exposure to light as part of the process. Ektachrome et al used another chemical instead to “reverse” the exposure. I used to process ektachrome at home and it was very precise as to temperatures, something like 38 degrees C plus or minus half a degree.

It’s been a long time, so some details in the above might be slightly wonky.

Something in your description of color film must be slightly wrong: The dyes must be in the film in any case (different color couplers mixed into the different layers at the time of manufacturing). Otherwise you could not get different dyes (RGB or CMY) into the different layers of the film. Of course if you do just BW processing, these color couplers are not activated and the remaining silver BW picture would probably dominate any color anyway.